Dynamic programming is a powerful algorithmic technique for solving complex problems by breaking them into simpler subproblems and storing their solutions to avoid redundant computation. It is especially effective for optimization problems whose subproblems overlap. This article compares its two common implementation styles, bottom-up tabulation and top-down memoization, and explains when each is the better fit.

Comparison Table

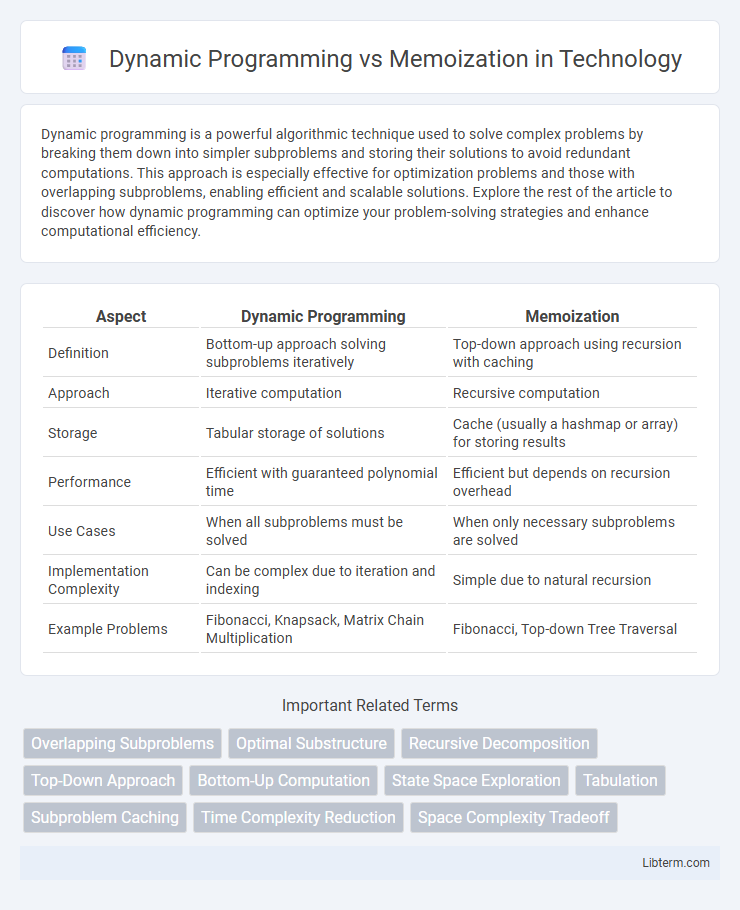

| Aspect | Dynamic Programming (bottom-up tabulation) | Memoization (top-down) |
|---|---|---|
| Definition | Solves subproblems iteratively, from the smallest upward | Recursion that caches each subproblem's result |
| Approach | Iterative computation | Recursive computation |
| Storage | Table (usually an array) indexed by subproblem | Cache (usually a hash map or array) keyed by subproblem |
| Performance | No function-call overhead; running time is roughly the number of states times the work per state | Usually the same asymptotic running time, plus recursion overhead and call-stack depth limits |
| Use Cases | When all (or most) subproblems must be solved | When only the subproblems reachable from the input need to be solved |
| Implementation Complexity | Requires choosing an evaluation order and table indexing | Often simpler, since it follows the natural recursive definition |
| Example Problems | Fibonacci, Knapsack, Matrix Chain Multiplication | Fibonacci, recursive DP on trees |

Introduction to Dynamic Programming and Memoization

Dynamic Programming is a method for solving complex problems by breaking them down into simpler overlapping subproblems and storing their results to avoid redundant computations. Memoization is a specific technique used in Dynamic Programming: it caches the results of expensive function calls and reuses them when the same inputs occur again. Both avoid recomputing subproblems, which often turns an exponential-time recursion into a polynomial-time algorithm. In common usage, "dynamic programming" refers to the bottom-up (tabulation) form, while memoization is the top-down form.
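To see why storing results matters, here is a minimal sketch in Python (the article names no language, so Python 3.9+ is an assumption) that counts how often a plain recursive Fibonacci function with no caching evaluates each subproblem. The names `naive_fib` and `calls` are illustrative only.

```python
from collections import Counter


def naive_fib(n: int, calls: Counter) -> int:
    """Plain recursive Fibonacci with no caching.

    `calls` records how many times each subproblem n is evaluated.
    """
    calls[n] += 1
    if n < 2:
        return n
    # Both branches recompute overlapping subproblems from scratch.
    return naive_fib(n - 1, calls) + naive_fib(n - 2, calls)


calls = Counter()
print(naive_fib(20, calls))   # 6765
print(calls[2])               # 4181: times fib(2) was recomputed
print(sum(calls.values()))    # 21891: total function calls
```

Computing fib(20) this way makes 21,891 calls even though there are only 21 distinct subproblems; a cache (or a table) reduces this to one evaluation per distinct n.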

Understanding the Core Concepts

Both techniques rest on the same idea: express the answer in terms of answers to smaller subproblems, and compute each subproblem only once. They differ in the order of evaluation. Bottom-up dynamic programming fixes the order ahead of time and fills a table from the smallest subproblems upward; top-down memoization keeps the natural recursive structure and caches each result the first time it is computed. Understanding this distinction is crucial for choosing an implementation and predicting its performance.

Key Principles of Dynamic Programming

Dynamic Programming (DP) rests on two key principles. Optimal substructure means an optimal solution to the problem can be constructed from optimal solutions to its subproblems. Overlapping subproblems means the same subproblems recur many times, so solving each one once and storing the result avoids redundant computation. Memoization is the top-down approach to DP, in which subproblem results are cached during recursive calls.
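A small illustration of optimal substructure, sketched in Python with a hypothetical `min_coins` helper (minimum-coin change is chosen here as an example; the article does not use it): the fewest coins that make amount a can be built from the fewest coins that make a - c, for some coin c.

```python
import math


def min_coins(coins: list[int], amount: int) -> int:
    """Fewest coins summing to `amount`, or -1 if impossible (bottom-up DP)."""
    # best[a] holds the optimal answer for the subproblem "make amount a".
    best = [0] + [math.inf] * amount
    for a in range(1, amount + 1):
        for c in coins:
            if c <= a and best[a - c] + 1 < best[a]:
                # Optimal substructure: an optimal way to make a that ends with
                # coin c contains an optimal way to make a - c.
                best[a] = best[a - c] + 1
    return -1 if best[amount] == math.inf else int(best[amount])


print(min_coins([1, 5, 11], 15))  # 3  (5 + 5 + 5)
```

With coins 1, 5 and 11, always taking the largest coin gives 11 + 1 + 1 + 1 + 1 (five coins) for 15, while combining optimal sub-answers finds 5 + 5 + 5.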

The Role of Memoization in Problem Solving

Memoization plays a crucial role in dynamic programming by storing intermediate results so they are never recomputed. Because each distinct subproblem is solved only once, the running time is bounded by the number of distinct subproblems times the work per subproblem, which can turn an exponential-time recursion into a polynomial-time one (naive recursive Fibonacci, for example, drops from exponential to linear time). The technique is widely used in combinatorics, graph algorithms, and numerical optimization.
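As a sketch of memoization turning an exponential recursion into a polynomial one, the Python below computes the length of the longest common subsequence (LCS) top-down, using the standard library's `functools.lru_cache` as the cache. The LCS problem and the names `lcs_length` and `solve` are illustrative choices, not taken from the article.

```python
from functools import lru_cache


def lcs_length(a: str, b: str) -> int:
    """Length of the longest common subsequence of a and b (top-down memoization)."""

    @lru_cache(maxsize=None)  # unbounded cache keyed by (i, j)
    def solve(i: int, j: int) -> int:
        # Subproblem: LCS of the suffixes a[i:] and b[j:].
        if i == len(a) or j == len(b):
            return 0
        if a[i] == b[j]:
            return 1 + solve(i + 1, j + 1)
        return max(solve(i + 1, j), solve(i, j + 1))

    return solve(0, 0)


print(lcs_length("ABCBDAB", "BDCABA"))  # 4  (e.g. "BCBA")
```

Without the decorator the recursion branches twice on every mismatch; with it, each of the at most (len(a) + 1) x (len(b) + 1) states is computed once. The recursion depth can reach len(a) + len(b), which matters for long inputs.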

Top-Down vs Bottom-Up Approaches

Dynamic programming can be implemented top-down or bottom-up; both solve a complex problem by breaking it into simpler subproblems. The top-down approach uses recursion with memoization, storing each result the first time it is computed and reusing it on later calls. The bottom-up approach iteratively fills a table, starting from the smallest subproblems. Bottom-up code avoids the function-call overhead and stack-depth limits of recursion, and because its evaluation order is explicit, it can often discard table entries that are no longer needed, which reduces space.
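A minimal bottom-up sketch in Python (the function name is hypothetical) computes the same longest-common-subsequence length iteratively. Because row i of the table depends only on row i + 1, it keeps just two rows, so extra space is O(len(b)) instead of a full 2-D table, and no recursion is involved.

```python
def lcs_length_bottom_up(a: str, b: str) -> int:
    """LCS length by bottom-up tabulation, keeping only two table rows."""
    # prev[j] = LCS of a[i+1:] and b[j:]; starts as the all-zero row for i = len(a).
    prev = [0] * (len(b) + 1)
    for i in range(len(a) - 1, -1, -1):
        # curr[j] = LCS of a[i:] and b[j:]; curr[len(b)] stays 0 (empty suffix of b).
        curr = [0] * (len(b) + 1)
        for j in range(len(b) - 1, -1, -1):
            if a[i] == b[j]:
                curr[j] = 1 + prev[j + 1]
            else:
                curr[j] = max(prev[j], curr[j + 1])
        prev = curr  # row i becomes the "previous" row for i - 1
    return prev[0]


print(lcs_length_bottom_up("ABCBDAB", "BDCABA"))  # 4
```

The loops visit subproblems in the reverse of the order a top-down recursion would return from them, which is what lets each row be discarded once the next one is built.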

Comparing Efficiency: Dynamic Programming vs Memoization

Bottom-up dynamic programming solves subproblems iteratively and stores the results in a table, which in many cases reduces the running time to polynomial. Memoization, the top-down approach, caches function results to avoid redundant computation and usually has the same asymptotic complexity, but it pays for recursive calls and cache lookups, and deep recursion can exceed the call-stack limit. As a result, bottom-up code is often faster by a constant factor and can use less space, while memoization can win when only a small fraction of the subproblems is actually needed.
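One practical difference is stack depth. The Python sketch below (function names are illustrative) compares a memoized Fibonacci built on `functools.lru_cache` with an iterative version. It assumes CPython, where the default recursion limit is 1000, and picks n well above whatever limit is currently set.

```python
import sys
from functools import lru_cache


@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    """Top-down: a cold call recurses about n levels before any result is cached."""
    return n if n < 2 else fib_memo(n - 1) + fib_memo(n - 2)


def fib_iter(n: int) -> int:
    """Bottom-up: keeps only the last two values, so O(1) extra space and no recursion."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


# Choose n well above the interpreter's current recursion limit.
n = sys.getrecursionlimit() * 5

memo_failed = False
try:
    fib_memo(n)
except RecursionError:
    memo_failed = True

print("memoized version hit the recursion limit:", memo_failed)
print("bottom-up result has", fib_iter(n).bit_length(), "bits")
```

Both versions do O(n) additions, but the memoized one needs call-stack space proportional to n on a cold cache. Warming the cache in increasing order of n avoids the error, but that amounts to evaluating in bottom-up order anyway.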

Use Cases and Real-World Applications

Dynamic Programming excels at optimization problems such as the Knapsack problem, all-pairs shortest paths (Floyd-Warshall), and matrix chain multiplication, which it solves by breaking them into overlapping subproblems and working through them in a systematic order. Memoization is particularly effective for recursive algorithms that repeat function calls, such as computing Fibonacci numbers, parsing ambiguous grammars, and caching already-evaluated positions in game-tree search. Real-world applications of Dynamic Programming and Memoization include resource allocation in operations research, route optimization in transportation networks, and predictive text input in natural language processing.
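For the Knapsack problem mentioned above, here is a minimal bottom-up sketch of the 0/1 variant (each item used at most once) in Python. The function name and the sample weights and values are made up for illustration.

```python
def knapsack_01(weights: list[int], values: list[int], capacity: int) -> int:
    """Maximum total value of items that fit in `capacity`, each item used at most once."""
    # best[c] = best value achievable with capacity c using the items processed so far.
    best = [0] * (capacity + 1)
    for w, v in zip(weights, values):
        # Iterate capacities downward so best[c - w] still reflects the table
        # *before* this item was considered, i.e. the item is counted at most once.
        for c in range(capacity, w - 1, -1):
            best[c] = max(best[c], best[c - w] + v)
    return best[capacity]


print(knapsack_01([1, 3, 4, 5], [1, 4, 5, 7], 7))  # 9  (items of weight 3 and 4)
```

The table has capacity + 1 entries and each item sweeps it once, so the running time is O(number of items x capacity). This is pseudo-polynomial: it grows with the numeric value of the capacity, not just the input length.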

Common Pitfalls and Optimization Tips

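Common pitfalls in dynamic programming and memoization include redundant recalculation when the cache key contains information that does not affect the result (so equivalent states are stored separately), and excessive memory use caused by an oversized state space or a cache that is never cleared. Efficiency improves when the state space is defined as compactly as possible, when the problem is checked for genuinely overlapping subproblems (otherwise caching only adds memory), and when top-down memoization is combined with pruning. Avoiding mutable global state, and switching to an iterative bottom-up formulation for deep recursions, further reduce runtime and the risk of stack overflow.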
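The sketch below applies several of these tips to a word-segmentation problem (an illustrative choice; `can_segment` and `from_index` are hypothetical names). It keys the cache on a single start index rather than on the remaining substring, keeps the cache local to each top-level call instead of in a global, and prunes candidate words longer than the longest dictionary entry.

```python
from functools import lru_cache


def can_segment(text: str, words: set[str]) -> bool:
    """True if `text` can be split into a sequence of words from `words`."""
    max_len = max((len(w) for w in words), default=0)

    # Compact state: just the start index, so at most len(text) + 1 cache entries.
    # The cache lives inside this call, so no global state is mutated and it is
    # freed when the call returns.
    @lru_cache(maxsize=None)
    def from_index(i: int) -> bool:
        if i == len(text):
            return True
        # Pruning: never try a piece longer than the longest dictionary word.
        for end in range(i + 1, min(len(text), i + max_len) + 1):
            if text[i:end] in words and from_index(end):
                return True
        return False

    return from_index(0)


print(can_segment("applepenapple", {"apple", "pen"}))                  # True
print(can_segment("catsandog", {"cats", "dog", "sand", "and", "cat"}))  # False
```

Keying on the index rather than the substring keeps each cache entry small and guarantees that identical subproblems share one entry.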

Code Examples: Dynamic Programming vs Memoization

Dynamic Programming stores the solutions of overlapping subproblems in a table filled in a fixed order; for the Fibonacci sequence, bottom-up tabulation computes F(0), F(1), ..., F(n) in turn. Memoization is a top-down approach that caches the result of each function call and returns the cached result when the same input recurs; for Fibonacci, this is a recursive function backed by a dictionary or hash map. Both avoid redundant calculation: dynamic programming through iteration, memoization through recursion with caching.
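A minimal side-by-side sketch of the two Fibonacci versions just described, written in Python (function names are illustrative):

```python
from typing import Optional


def fib_memo(n: int, memo: Optional[dict] = None) -> int:
    """Top-down: recursion plus a dictionary cache."""
    if memo is None:
        memo = {}  # fresh cache per top-level call (avoids a shared mutable default)
    if n < 2:
        return n
    if n not in memo:
        memo[n] = fib_memo(n - 1, memo) + fib_memo(n - 2, memo)
    return memo[n]


def fib_tab(n: int) -> int:
    """Bottom-up: fill a table from the smallest subproblems upward."""
    if n < 2:
        return n
    table = [0] * (n + 1)
    table[1] = 1
    for i in range(2, n + 1):
        table[i] = table[i - 1] + table[i - 2]
    return table[n]


print(fib_memo(30), fib_tab(30))  # 832040 832040
```

Both run in O(n) time and O(n) space. The tabulated version can drop to O(1) space by keeping only the last two entries, because its evaluation order is fixed.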

Choosing the Right Technique for Your Problem

Bottom-up dynamic programming works well when most subproblems must be solved anyway: it stores solutions in a table and avoids redundant calculation without recursion. Memoization, the top-down approach, caches the results of recursive calls and computes only the subproblems actually reached from the original input. Choosing between them depends on the problem's structure, the memory available, and whether an iterative or a recursive formulation better suits your algorithm design.
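As an illustration of memoization computing only reachable subproblems, consider the recurrence f(n) = max(n, f(n // 2) + f(n // 3) + f(n // 4)) with f(0) = 0. This recurrence is chosen purely as an example, and `max_exchange` is a hypothetical name. A bottom-up table would need an entry for every value from 0 to n, whereas the top-down sketch below touches only the values of the form n // (2^a 3^b).

```python
def max_exchange(n: int) -> tuple[int, int]:
    """Return (f(n), number of subproblems cached) for the illustrative recurrence
    f(n) = max(n, f(n // 2) + f(n // 3) + f(n // 4)), f(0) = 0."""
    memo = {0: 0}  # base case

    def f(k: int) -> int:
        if k not in memo:
            memo[k] = max(k, f(k // 2) + f(k // 3) + f(k // 4))
        return memo[k]

    return f(n), len(memo)


value, states = max_exchange(10**9)
print(states)              # a few hundred, versus 10**9 + 1 table entries bottom-up
print(max_exchange(12)[0])  # 13  (f(6) + f(4) + f(3) = 6 + 4 + 3)
```

Here top-down memoization is the natural choice: the reachable state space is tiny and sparse, while a bottom-up table over every integer up to n would be infeasible.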

Dynamic Programming Infographic

libterm.com
