Concept drift occurs when the statistical properties of the target variable change over time. When that happens, the relationship a machine learning model learned between inputs and outputs no longer holds, and its performance degrades. Detecting and adapting to concept drift is crucial for maintaining accuracy in dynamic environments such as finance, healthcare, and online services. The sections below compare concept drift with model drift and outline how to detect and manage both in data-driven projects.

Table of Comparison

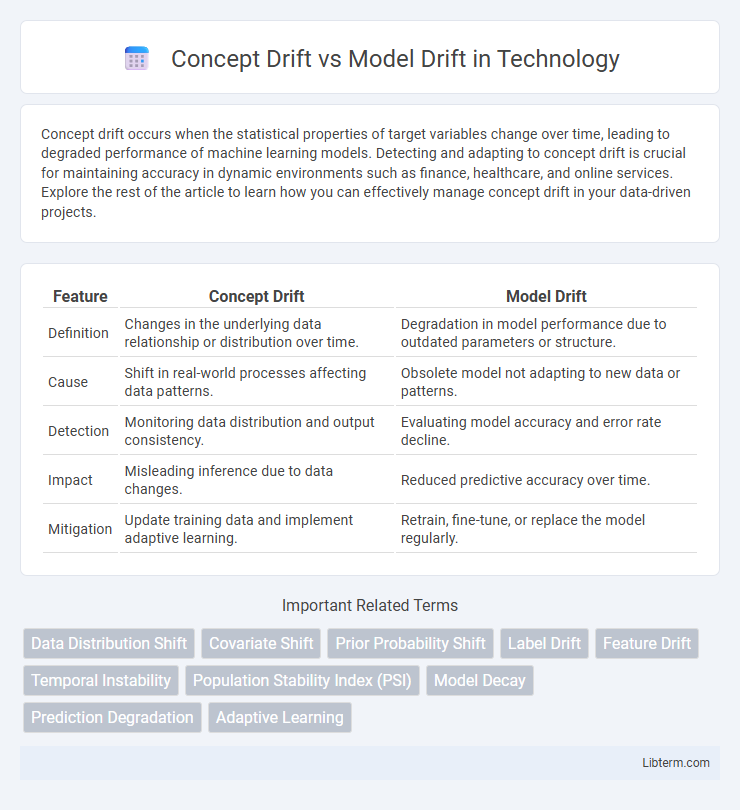

| Aspect | Concept Drift | Model Drift |
|---|---|---|
| Definition | Changes in the underlying data relationship or distribution over time. | Degradation in model performance due to outdated parameters or structure. |
| Cause | Shifts in real-world processes that alter data patterns. | A model that is not updated as data and patterns change. |
| Detection | Monitoring data distributions and the consistency of model outputs. | Tracking declines in accuracy and increases in error rate. |
| Impact | Predictions built on an input-output relationship that no longer holds. | Reduced predictive accuracy over time. |
| Mitigation | Update training data and use adaptive learning. | Retrain, fine-tune, or replace the model regularly. |

Introduction to Concept Drift and Model Drift

Concept drift refers to changes in the statistical properties of the target variable over time, which affect the predictive model's accuracy by altering the underlying relationship between input and output variables. Model drift occurs when the performance of a deployed machine learning model degrades due to evolving data patterns or shifts in data distribution that were not accounted for during training. Understanding both concept drift and model drift is crucial for maintaining robust and adaptive machine learning systems in dynamic environments.

Defining Concept Drift

Concept drift refers to changes in the statistical properties of the target variable. These changes make predictive models less accurate over time because the relationship between input data and output labels shifts. Concept drift occurs when the underlying data distribution evolves, which violates the assumption that training data and future data are identically distributed. Model drift describes the degradation of a model's performance due to aging or external factors. Concept drift, by contrast, specifically highlights shifts in data patterns that require the model to be retrained or adapted.
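
The following minimal synthetic sketch in Python makes the definition concrete. It assumes a one-dimensional input whose true positive/negative boundary moves from 0.5 to 0.7 while the inputs themselves stay the same. The boundaries and the helper names (`make_labels`, `accuracy`, `model`) are illustrative choices and are not part of any library.

```python
# Toy illustration of concept drift: the inputs (and so P(x)) stay the same,
# but the rule mapping inputs to labels, P(y | x), moves.

def make_labels(xs, boundary):
    # Hypothetical "true concept": an input is positive when it exceeds the boundary.
    return [x > boundary for x in xs]


def accuracy(predictions, labels):
    # Fraction of predictions that match the true labels.
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def model(x):
    # A model fitted before the drift has learned the old boundary of 0.5.
    return x > 0.5


xs = [i / 1000 for i in range(1000)]  # identical inputs before and after the drift
predictions = [model(x) for x in xs]

before = accuracy(predictions, make_labels(xs, boundary=0.5))
after = accuracy(predictions, make_labels(xs, boundary=0.7))  # the concept has shifted
print(f"accuracy before drift: {before:.2f}")  # accuracy before drift: 1.00
print(f"accuracy after drift:  {after:.2f}")   # accuracy after drift:  0.80
```

Nothing about the inputs changed, so a monitor that only watched feature distributions would miss this drift. It only shows up once labelled outcomes reveal the accuracy drop.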

Understanding Model Drift

Model drift occurs when a machine learning model's predictive performance degrades over time because the underlying data distribution or external environment has changed, which undermines its reliability. Concept drift refers specifically to shifts in the statistical properties of the target variable. Model drift, in contrast, emphasizes the model's declining accuracy and the resulting need for retraining or recalibration. Monitoring key performance indicators such as accuracy, precision, and recall helps detect model drift and keep AI systems performing reliably.
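
Here is a minimal sketch of this kind of KPI monitoring. It assumes binary labels encoded as 1/0 and flags any metric that falls more than a hypothetical tolerance of 0.05 below a baseline batch. `classification_metrics` and `degraded_metrics` are illustrative helpers, not an existing API.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    precision: float
    recall: float


def classification_metrics(y_true, y_pred):
    """Accuracy, precision and recall for binary labels (1 = positive, 0 = negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0  # guard against no positive predictions
    recall = tp / (tp + fn) if tp + fn else 0.0     # guard against no positive labels
    return Metrics(correct / len(pairs), precision, recall)


def degraded_metrics(baseline, current, tolerance=0.05):
    """Names of metrics that fell more than `tolerance` below the baseline."""
    return [
        name
        for name in ("accuracy", "precision", "recall")
        if getattr(baseline, name) - getattr(current, name) > tolerance
    ]


baseline = classification_metrics([1, 0, 1, 0, 1, 0, 1, 0], [1, 0, 1, 0, 1, 0, 1, 0])
current = classification_metrics([1, 1, 1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 0, 0, 0, 0])
print(current)                              # Metrics(accuracy=0.875, precision=1.0, recall=0.75)
print(degraded_metrics(baseline, current))  # ['accuracy', 'recall']
```

In practice the comparison would run on each new batch of labelled production data, and a non-empty result would feed an alerting system.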

Key Differences Between Concept Drift and Model Drift

Concept drift refers to changes in the statistical properties of the target variable over time, that is, changes in the data the model is asked to predict. Model drift refers to the degradation of the model's own performance when its parameters and assumptions are not updated to match. The key difference is one of focus. Concept drift describes how the data-generating process evolves, while model drift describes how a model becomes obsolete when it is not retrained.

Causes of Concept Drift

Concept drift occurs when the statistical properties of the target variable change over time. Common triggers are shifts in user behavior, changes in the external environment, and evolving market conditions. Key causes include seasonal variations, changes in the data distribution, and the emergence of new patterns that the original model was not trained to recognize. Model drift, in contrast, refers to the degradation of a predictive model's performance caused by outdated parameters or insufficient retraining rather than by changes in the underlying concept.

Causes of Model Drift

Model drift primarily occurs due to changes in the underlying data distribution, such as shifts in feature space or target variable characteristics, often caused by evolving user behavior, market trends, or data acquisition processes. External factors like seasonal effects, sensor degradation, or system updates also contribute to the gradual decline in model accuracy over time. Detecting and addressing model drift requires continuous monitoring of data inputs and output performance metrics to maintain model reliability and predictive power.

Detecting Concept Drift

Detecting concept drift involves monitoring changes in the statistical properties of input data or the relationship between inputs and target variables over time, which can cause predictive model performance to degrade. Techniques such as statistical hypothesis testing, feature distribution monitoring, and performance metric tracking are commonly employed to identify shifts in data patterns indicative of concept drift. Early detection enables timely model retraining or adaptation to maintain accuracy in dynamic environments.
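
One example of performance metric tracking is the Drift Detection Method (DDM) of Gama et al. (2004), a well-known detector that watches a classifier's running error rate. The sketch below implements a simplified version. Its defaults follow the usual description of DDM: 30 warm-up samples, a warning at 2 standard deviations above the best observed point, and a drift signal at 3. Treat it as an illustrative sketch rather than a reference implementation. The error stream in the usage lines is synthetic.

```python
import math


class DDM:
    """Simplified Drift Detection Method (after Gama et al., 2004).

    Call update(1) for a misclassified example and update(0) for a correct one.
    """

    def __init__(self, min_samples=30, warning_level=2.0, drift_level=3.0):
        self.min_samples = min_samples      # warm-up before any signal is raised
        self.warning_level = warning_level  # std devs above the best point for a warning
        self.drift_level = drift_level      # std devs above the best point for drift
        self.reset()

    def reset(self):
        self.n = 0
        self.errors = 0
        self.p_min = math.inf
        self.s_min = math.inf

    def update(self, error):
        """Record one outcome and return 'stable', 'warning' or 'drift'."""
        self.n += 1
        self.errors += error
        p = self.errors / self.n             # running error rate
        s = math.sqrt(p * (1 - p) / self.n)  # binomial standard deviation of p
        if self.n < self.min_samples:
            return "stable"
        if p + s < self.p_min + self.s_min:  # remember the best (lowest) point so far
            self.p_min, self.s_min = p, s
        # Strict comparisons keep an error-free stream (s == 0) from signalling.
        if p + s > self.p_min + self.drift_level * self.s_min:
            self.reset()                     # start tracking the new concept
            return "drift"
        if p + s > self.p_min + self.warning_level * self.s_min:
            return "warning"
        return "stable"


detector = DDM()
# Synthetic error stream: 10% errors for 200 predictions, then 50%.
stream = [int(i % 10 == 0) for i in range(200)] + [int(i % 2 == 0) for i in range(200, 400)]
states = [detector.update(e) for e in stream]
print(states.index("drift"))  # first drift signal, shortly after the change at index 200
```

The warning state gives time to start collecting fresh training data before the drift signal arrives. The drift signal is the natural point to trigger retraining. One limitation of this simple form: after a perfectly error-free warm-up, the first mistake raises a drift signal, because the recorded standard deviation is zero.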

Detecting Model Drift

Model drift occurs when a machine learning model's performance degrades over time due to changes in the underlying data distribution or external factors, while concept drift specifically refers to shifts in the statistical properties of the target variable. Detecting model drift involves monitoring key performance indicators such as accuracy, precision, recall, or AUC over time and employing techniques like population stability index (PSI), Kolmogorov-Smirnov tests, or data distribution comparison to identify deviations from baseline behavior. Automated alerting systems and continuous model evaluation pipelines are essential for timely detection and remediation of drift to maintain model reliability in production environments.
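
The population stability index and the Kolmogorov-Smirnov statistic can be sketched with the Python standard library alone. This minimal illustration assumes a single numeric feature and fixed, hand-picked bin edges for PSI. The small `eps` floor for empty bins is also an assumption. Common practice is to derive bins from baseline quantiles and to pair the KS statistic with a p-value, for example via SciPy's `scipy.stats.ks_2samp`.

```python
import math
from bisect import bisect_right


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index of `actual` against the `expected` baseline.

    `edges` are sorted interior bin boundaries shared by both samples.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Floor empty bins at eps so the log and the division stay defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    for x in a + b:  # the gap can only change at observed values
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


baseline = list(range(100))           # hypothetical reference sample of one feature
shifted = [x + 30 for x in baseline]  # same shape, shifted upward by 30
edges = [25, 50, 75]                  # hand-picked bin edges (an assumption)
print(psi(baseline, baseline, edges))             # 0.0
print(round(psi(baseline, shifted, edges), 2))
print(round(ks_statistic(baseline, shifted), 2))  # 0.3
```

Rule-of-thumb cut-offs are often quoted for PSI, but any alert threshold should be treated as an assumption and calibrated on your own data. Note also that a bin emptied by the shift contributes a large term whose size depends on `eps`.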

Strategies to Handle Drift in Machine Learning

Concept drift and model drift both erode machine learning performance. Concept drift involves changes in the underlying data distribution, and model drift is the resulting degradation of model accuracy over time. Strategies to handle drift include continuous model monitoring, periodic retraining on updated data, and adaptive algorithms that adjust dynamically to new patterns. Drift detection methods, such as statistical tests or thresholds on performance metrics, can trigger these interventions in time to keep models reliable in changing environments.
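
The following minimal sketch combines monitoring and adaptive retraining on a sliding window. It assumes a toy one-dimensional classifier that predicts positive when x exceeds a learned threshold. The class name, the 100-example window, and the 5% error trigger are hypothetical choices for illustration. Real systems would plug in their own model, detector, and retraining schedule.

```python
from collections import deque


class SlidingWindowThresholdModel:
    """Hypothetical 1-D classifier that retrains on a sliding window when its error rises.

    It predicts positive when x exceeds a learned threshold, tracks its mistakes over
    the last `window` labelled examples, and refits on those examples once the
    windowed error rate exceeds `max_error`.
    """

    def __init__(self, window=100, max_error=0.05, threshold=0.5):
        self.recent = deque(maxlen=window)    # most recent (x, y) pairs
        self.mistakes = deque(maxlen=window)  # 1 = wrong prediction, 0 = correct
        self.max_error = max_error
        self.threshold = threshold
        self.retrain_count = 0

    def predict(self, x):
        return x > self.threshold

    def fit(self):
        # Try every observed x as a threshold and keep the one with the fewest errors;
        # candidates are sorted, so ties go to the smallest threshold.
        def errors(t):
            return sum((x > t) != y for x, y in self.recent)
        self.threshold = min(sorted(x for x, _ in self.recent), key=errors)
        self.mistakes.clear()  # judge the refitted model on fresh outcomes only
        self.retrain_count += 1

    def update(self, x, y):
        """Score the current prediction for x against its label y, then adapt if needed."""
        self.mistakes.append(int(self.predict(x) != y))
        self.recent.append((x, y))
        window_full = len(self.mistakes) == self.mistakes.maxlen
        if window_full and sum(self.mistakes) / len(self.mistakes) > self.max_error:
            self.fit()


model = SlidingWindowThresholdModel()
xs = [i / 100 for i in range(100)]
for _ in range(3):  # old concept: positive when x > 0.5
    for x in xs:
        model.update(x, x > 0.5)
for _ in range(5):  # new concept: positive when x > 0.7
    for x in xs:
        model.update(x, x > 0.7)
print(model.threshold, model.retrain_count)  # 0.7 2
```

Keeping only a recent window lets the model forget the old concept. The trade-off is responsiveness versus stability: a small window reacts quickly but fits on less data, while a large one is more stable but slower to adapt. In this run the first refit lands on a mixture of old and new examples, so a second refit is needed before the threshold settles at the new boundary.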

Impact of Drift on Model Performance

Concept drift refers to changes in the statistical properties of the target variable over time, leading to a decrease in model accuracy as the relationship between input features and output shifts. Model drift occurs when the model's learned parameters or structure become outdated or degrade due to evolving data distributions, causing performance deterioration. Both drifts significantly impact predictive accuracy, necessitating continual monitoring and model updates to maintain robustness in dynamic environments.

Concept Drift Infographic

libterm.com
