Classification is a fundamental process in machine learning where data is categorized into predefined labels based on input features. Accurate classification models enhance decision-making across various fields like healthcare, finance, and marketing by predicting outcomes with high precision. Explore the rest of the article to discover techniques and best practices that can improve your classification skills.

Table of Comparison

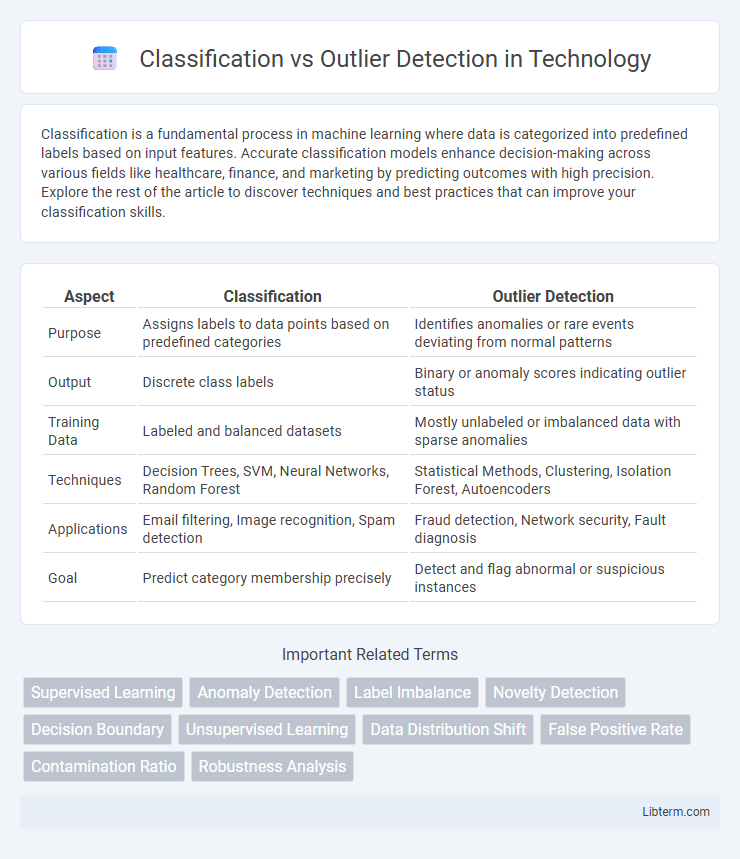

| Aspect | Classification | Outlier Detection |

|---|---|---|

| Purpose | Assigns labels to data points based on predefined categories | Identifies anomalies or rare events deviating from normal patterns |

| Output | Discrete class labels | Binary or anomaly scores indicating outlier status |

| Training Data | Labeled and balanced datasets | Mostly unlabeled or imbalanced data with sparse anomalies |

| Techniques | Decision Trees, SVM, Neural Networks, Random Forest | Statistical Methods, Clustering, Isolation Forest, Autoencoders |

| Applications | Email filtering, Image recognition, Spam detection | Fraud detection, Network security, Fault diagnosis |

| Goal | Predict category membership precisely | Detect and flag abnormal or suspicious instances |

Introduction to Classification and Outlier Detection

Classification involves assigning data points to predefined categories based on labeled training data, enabling predictive modeling in supervised learning. Outlier detection focuses on identifying data points that significantly deviate from the normal patterns within a dataset, often used in anomaly detection and unsupervised learning. Both techniques serve distinct purposes: classification predicts class membership, while outlier detection highlights unusual or rare instances.

Key Differences Between Classification and Outlier Detection

Classification involves assigning predefined labels to data points based on learned patterns, whereas outlier detection identifies data points that significantly deviate from the norm without prior labeling. Classification relies on labeled training data to categorize inputs, while outlier detection often operates in an unsupervised manner to find anomalies. The key difference lies in the objective: classification predicts known categories, and outlier detection discovers unknown or rare instances.

Core Concepts: Definitions and Objectives

Classification is a supervised learning technique aimed at assigning predefined labels to input data based on learned patterns from a labeled dataset. Outlier detection, also known as anomaly detection, focuses on identifying data points that significantly deviate from the norm or expected behavior within a dataset, often without prior labeling. The main objective of classification is to accurately predict the category of new instances, while outlier detection aims to uncover rare or unexpected observations that may indicate errors, fraud, or novel phenomena.

Types of Data Used in Each Approach

Classification primarily utilizes labeled data where each instance is associated with predefined categories, enabling algorithms to learn patterns for identifying classes. Outlier detection relies on unlabeled or partially labeled datasets to identify anomalies based on deviations from the normal data distribution, making use of both univariate and multivariate data types. While classification algorithms perform well with structured, categorical, or continuous data, outlier detection often requires sensitivity to rare events or subtle shifts in numerical or time-series data.

Algorithms Commonly Used for Classification

Classification relies on algorithms like Decision Trees, Support Vector Machines (SVM), and Random Forests to categorize data into predefined classes based on labeled training datasets. Neural Networks, including deep learning models such as Convolutional Neural Networks (CNN), excel in handling complex and high-dimensional data for improved classification accuracy. Ensemble methods like Gradient Boosting combine multiple weak classifiers to enhance performance, making them widely used in various classification tasks.

Algorithms Commonly Used for Outlier Detection

Outlier detection algorithms commonly used include Isolation Forest, Local Outlier Factor (LOF), and One-Class SVM, each designed to identify anomalies in different data distributions. Isolation Forest isolates anomalies by randomly partitioning data, making it efficient for high-dimensional datasets. Local Outlier Factor measures the local density deviation of a data point with respect to its neighbors, effectively detecting outliers in clustered data.

Evaluation Metrics: Measuring Performance

Classification evaluation metrics include accuracy, precision, recall, F1-score, and ROC-AUC, which assess the model's ability to correctly label data instances across all classes. Outlier detection relies on metrics such as precision, recall, F1-score, and area under the Precision-Recall Curve (PR AUC), emphasizing the identification of rare, anomalous instances. While classification metrics balance overall correctness, outlier detection metrics focus on correctly flagging anomalies despite extreme class imbalance.

Real-World Applications and Use Cases

Classification is widely used in email filtering, medical diagnosis, and customer segmentation by categorizing data into predefined labels for efficient decision-making. Outlier detection identifies anomalies in financial fraud detection, network security, and quality control to flag unusual patterns that deviate from normal behavior. Both techniques optimize operational accuracy by addressing distinct needs: classification streamlines categorization, while outlier detection enhances anomaly awareness in real-world scenarios.

Challenges in Classification vs Outlier Detection

Classification algorithms struggle with imbalanced datasets where rare classes are underrepresented, leading to biased or inaccurate predictions. Outlier detection faces challenges in distinguishing genuine anomalies from noisy or borderline data points, often requiring unsupervised approaches with limited labeled data. The dynamic and evolving nature of data distributions complicates both tasks by demanding adaptable models that maintain robustness over time.

Future Trends and Advancements

Future trends in classification emphasize integrating deep learning with explainable AI to enhance model transparency and decision-making accuracy across complex datasets. Outlier detection advancements prioritize unsupervised and semi-supervised techniques leveraging graph neural networks and dynamic anomaly detection in real-time streaming data. Both domains increasingly adopt hybrid models combining classification and outlier detection to improve robustness in cybersecurity, fraud detection, and predictive maintenance applications.

Classification Infographic

libterm.com

libterm.com