Cyclic Redundancy Check (CRC) is an error-detecting code used in digital networks and storage devices to detect accidental changes to raw data. The sender treats the data bits as a binary polynomial, divides it by a fixed generator polynomial, and appends the remainder to the data; the receiver repeats the division to confirm that the data survived transmission or storage intact. The rest of this article explains how CRC works and how it compares with the simpler parity bit.

Table of Comparison

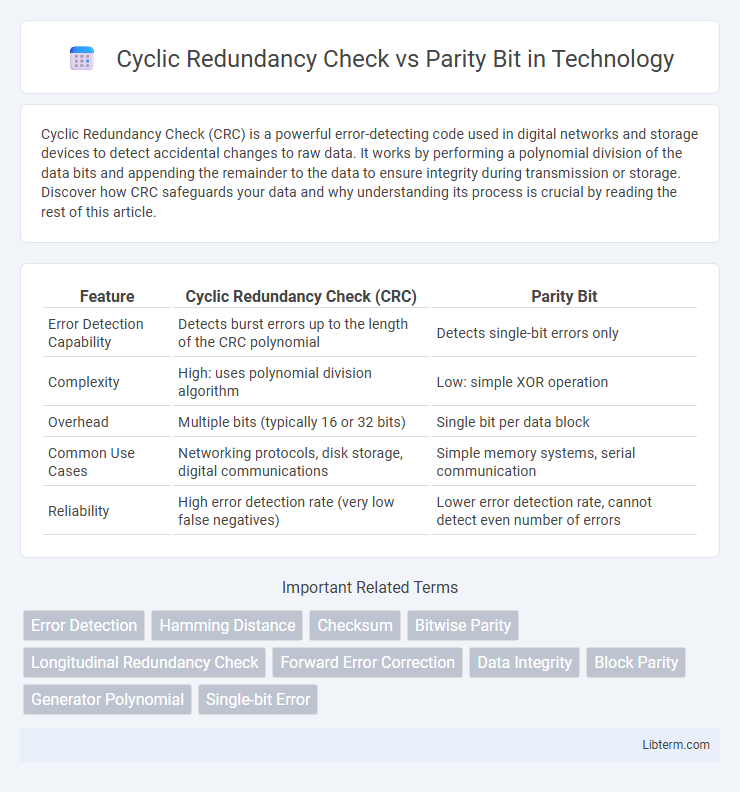

| Feature | Cyclic Redundancy Check (CRC) | Parity Bit |
|---|---|---|
| Error Detection Capability | Detects every burst error no longer than the CRC width (the degree of the generator polynomial) and most longer bursts | Detects any odd number of flipped bits, including every single-bit error |
| Complexity | Higher: polynomial division using shifts and XORs | Low: simple XOR operation |
| Overhead | Multiple bits (typically 16 or 32 bits) per block | Single bit per data block |
| Common Use Cases | Networking protocols, disk storage, digital communications | Simple memory systems, serial communication |
| Reliability | High error detection rate (very low false negatives) | Lower error detection rate; cannot detect an even number of bit errors |

Introduction to Data Integrity: CRC vs Parity Bit

Cyclic Redundancy Check (CRC) and the parity bit are two fundamental error-detection methods for verifying data integrity. CRC uses polynomial division to produce a multi-bit checksum that catches burst errors and most multi-bit corruption, whereas a parity bit is a single binary digit that records whether the number of 1s is even or odd, which is simpler but far less robust. CRC is preferred in network protocols and storage devices because it detects burst errors, while the parity bit is common in memory systems, where quick detection of single-bit errors is enough.

Understanding Parity Bit: Basics and Functionality

A parity bit is a single bit appended to binary data so that the total count of 1s is either even (even parity) or odd (odd parity). The receiver recounts the 1s: a mismatch reveals any single-bit error, and more generally any odd number of flipped bits, while an even number of flips leaves the parity unchanged and passes unnoticed. Parity is cheap to compute, but Cyclic Redundancy Check (CRC) offers far stronger detection by using polynomial division to catch more complex data corruption.

What is Cyclic Redundancy Check (CRC)?

Cyclic Redundancy Check (CRC) is an error-detecting code used to identify accidental changes to raw data in digital networks and storage devices. It treats the data as a polynomial and divides it by a predetermined generator polynomial; the remainder becomes a checksum that is appended to the data for verification. The checksum is not unique to a message, but for random corruption an n-bit CRC lets an error slip through with a probability of only about 1 in 2^n. CRC therefore offers much higher error detection accuracy than simpler methods like parity bits, making it essential in protocols such as Ethernet and USB and in storage systems.

How Parity Bit Error Detection Works

Parity bit error detection works by adding a single bit to a block of data so that the total number of 1s, parity bit included, is either even (even parity) or odd (odd parity). The receiver counts the 1s in what it received and checks that the count still has the agreed parity; any mismatch signals an error. This catches every single-bit error, and in fact any odd number of flipped bits, but two errors (or any even number) cancel out and go undetected, which makes parity much weaker than Cyclic Redundancy Check (CRC) against multiple-bit errors.
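The counting described above can be sketched in a few lines of Python. This is a minimal illustration, not a production routine: the function names `parity_bit` and `parity_ok` and the sample bit pattern are assumptions made for the example.

```python
def parity_bit(bits, even=True):
    """Return the bit that gives `bits` the requested parity."""
    odd_count = sum(bits) % 2        # 1 when the data holds an odd number of 1s
    return odd_count if even else odd_count ^ 1


def parity_ok(frame, even=True):
    """Check a received frame (data bits followed by the parity bit)."""
    expected = 0 if even else 1
    return sum(frame) % 2 == expected


data = [1, 0, 1, 1, 0, 0, 1]         # four 1s, so the even-parity bit is 0
frame = data + [parity_bit(data)]

one_flip = frame.copy()
one_flip[2] ^= 1                     # single-bit error

two_flips = frame.copy()
two_flips[2] ^= 1
two_flips[5] ^= 1                    # a second error cancels the first

print(parity_ok(frame))              # True
print(parity_ok(one_flip))           # False: error detected
print(parity_ok(two_flips))          # True: error goes unnoticed
```

The last line shows the weakness in practice: two flipped bits leave the count of 1s with the same parity, so the check passes even though the data is wrong.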

CRC Mechanism: Error Detection in Depth

Cyclic Redundancy Check (CRC) uses polynomial division to generate a checksum that detects burst errors with high accuracy, while a parity bit catches only an odd number of flipped bits. The sender encodes the data as a binary polynomial, appends as many zero bits as the degree of the generator polynomial, divides the result by the generator using modulo-2 (XOR) arithmetic, and sends the remainder as the CRC code. In the basic scheme the receiver divides the received data plus CRC by the same generator, and a nonzero remainder signals an error. This polynomial-based approach detects multiple and complex error patterns that parity misses, which is why communication protocols like Ethernet and USB rely on it.
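As a rough sketch of that division, the Python function below computes an 8-bit CRC one bit at a time. The choice of generator (x^8 + x^2 + x + 1, written `0x07`), the zero initial value, and the name `crc8` are assumptions for illustration; real protocols each specify their own polynomial, width, and bit ordering.

```python
def crc8(data: bytes, poly: int = 0x07) -> int:
    """Bitwise CRC-8: remainder of (data * x^8) divided by the generator."""
    crc = 0
    for byte in data:
        crc ^= byte                              # bring the next 8 message bits into the register
        for _ in range(8):
            if crc & 0x80:                       # top bit set: shift, then XOR in the generator
                crc = ((crc << 1) ^ poly) & 0xFF
            else:                                # top bit clear: shift only
                crc = (crc << 1) & 0xFF
    return crc


message = b"123456789"
checksum = crc8(message)
codeword = message + bytes([checksum])           # sender appends the remainder

corrupted = bytearray(codeword)
corrupted[3] ^= 0b00011100                       # 3-bit burst error in transit

print(hex(checksum))                             # 0xf4
print(crc8(codeword))                            # 0: remainder is zero, data accepted
print(crc8(bytes(corrupted)) != 0)               # True: burst error detected
```

Because the burst is shorter than the 8-bit CRC width, the corrupted codeword is guaranteed to leave a nonzero remainder. Production code usually replaces the inner loop with a lookup table or a hardware instruction, but the arithmetic is the same.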

Key Differences Between CRC and Parity Bit

Cyclic Redundancy Check (CRC) detects errors by treating the data as a polynomial, dividing it by a generator polynomial, and using the remainder as a checksum, which provides strong detection of multiple-bit errors. The parity bit is a simpler method: it adds a single bit that indicates whether the number of 1s in the data is odd or even, so it reliably catches single-bit errors but misses any even number of flipped bits. CRC achieves far higher reliability and error detection accuracy than the limited scope of parity bits, making it essential for robust communication systems.
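The difference is easy to see on a two-bit error. The sketch below uses `zlib.crc32` from the Python standard library for the CRC and a hypothetical helper, `even_parity`, for a parity bit computed over the whole message; the sample data is arbitrary.

```python
import zlib


def even_parity(data: bytes) -> int:
    """Parity bit over the whole message: 1 if the count of 1 bits is odd."""
    return bin(int.from_bytes(data, "big")).count("1") % 2


original = b"hello"

damaged = bytearray(original)
damaged[0] ^= 0b00000011                 # flip two adjacent bits in the first byte
damaged = bytes(damaged)

parity_detects = even_parity(original) != even_parity(damaged)
crc_detects = zlib.crc32(original) != zlib.crc32(damaged)

print(parity_detects)                    # False: two flips cancel out
print(crc_detects)                       # True: the checksum changes
```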

Advantages and Disadvantages: Parity Bit vs CRC

The parity bit offers a simple, low-overhead method of error detection: it adds a single bit to the data, which makes it well suited to catching single-bit errors in small data units but unreliable against burst errors and other multiple-bit errors, since any even number of flipped bits goes unnoticed. In contrast, Cyclic Redundancy Check (CRC) uses polynomial division to generate a multi-bit checksum, which detects common error patterns, including burst errors, with much higher reliability. CRC requires more computation and adds more check bits than a parity bit, but its superior error-detection accuracy makes it preferable in applications that demand data integrity, such as network communications and storage devices.

Applications of Parity Bit in Real-world Systems

Parity bits are widely used in communication systems and memory devices to flag single-bit errors. Serial interfaces such as UART can attach an optional parity bit to each character to check data integrity during transmission. Parity-protected memory uses the same idea to detect corruption. ECC RAM goes a step further: it stores several check bits per word so that single-bit errors can be corrected rather than merely detected, which a lone parity bit cannot do.

Common Use Cases for CRC in Networking and Storage

Cyclic Redundancy Check (CRC) is used extensively in networking standards such as Ethernet and Wi-Fi, where a CRC attached to each frame lets the receiver detect accidental changes made during transmission. In storage, CRCs protect data moving to and from hard drives and SSDs (for example on the SATA link) and are built into file formats such as ZIP, gzip, and PNG, so corrupted blocks can be identified before the data is used. Parity bits, while simpler and faster, are usually reserved for basic error detection in memory modules or low-overhead communication scenarios where CRC's stronger detection is not critical.
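A common pattern in these systems is to store or send the CRC alongside the block it protects. The sketch below shows one way to do that with `zlib.crc32`; the helper names `add_crc32` and `verify_crc32` and the 4-byte big-endian trailer are assumptions for the example and do not reproduce the exact layout of Ethernet frames or of any particular file format.

```python
import zlib


def add_crc32(payload: bytes) -> bytes:
    """Append the CRC-32 of `payload` as a 4-byte big-endian trailer."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def verify_crc32(block: bytes) -> bool:
    """Recompute the CRC-32 of the payload and compare it with the trailer."""
    if len(block) < 4:
        return False                      # too short to contain a trailer
    payload, trailer = block[:-4], block[-4:]
    return zlib.crc32(payload) == int.from_bytes(trailer, "big")


block = add_crc32(b"sector 42 contents")

damaged = bytearray(block)
damaged[5] ^= 0x01                        # one bit flipped in the payload
damaged = bytes(damaged)

print(verify_crc32(block))                # True
print(verify_crc32(damaged))              # False
```

The overhead is a fixed four bytes per block, regardless of the block's size.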

Which to Choose: CRC or Parity Bit for Data Protection?

Cyclic Redundancy Check (CRC) offers much stronger error detection than a parity bit, making it the better choice for protecting large data blocks in files, networks, and communication systems. A parity bit provides minimal protection: it catches single-bit errors (and other odd numbers of flipped bits) but misses the rest, so it is best suited to simple, low-overhead applications or hardware. Choosing CRC over a parity bit improves data integrity in complex environments where reliable error detection is critical.

libterm.com
