A Graphics Processing Unit (GPU) is a specialized processor designed to accelerate rendering images, videos, and animations, making it essential for gaming, video editing, and machine learning tasks. By handling multiple calculations simultaneously, GPUs significantly enhance performance and deliver smoother visual experiences. Discover how choosing the right GPU can elevate your computing power and boost your projects by reading the rest of this article.

Table of Comparison

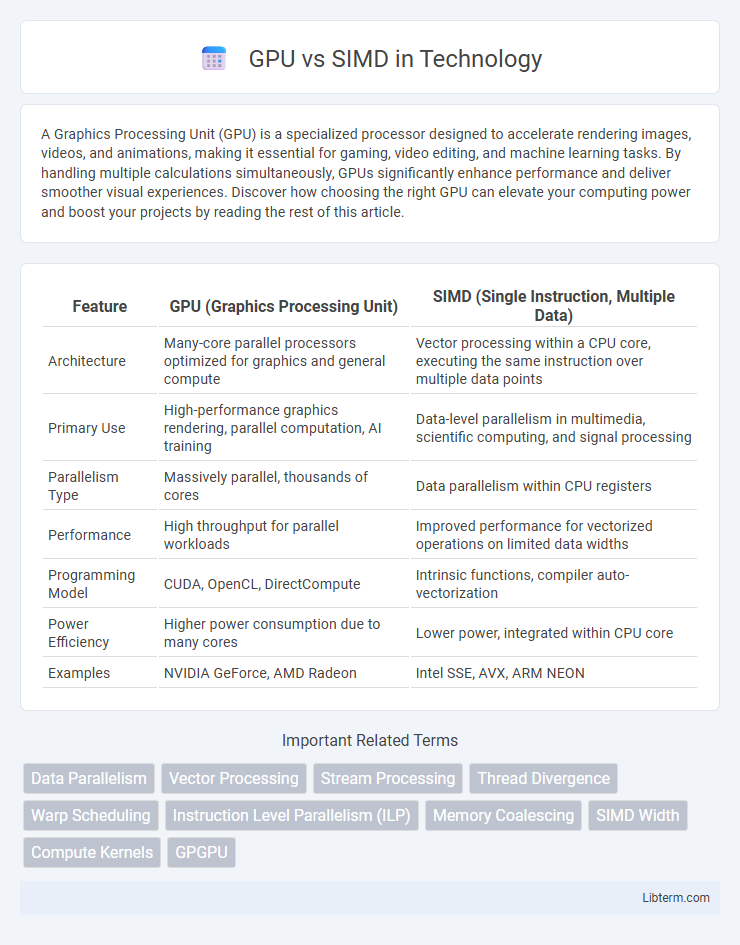

| Feature | GPU (Graphics Processing Unit) | SIMD (Single Instruction, Multiple Data) |

|---|---|---|

| Architecture | Many-core parallel processors optimized for graphics and general compute | Vector processing within a CPU core, executing the same instruction over multiple data points |

| Primary Use | High-performance graphics rendering, parallel computation, AI training | Data-level parallelism in multimedia, scientific computing, and signal processing |

| Parallelism Type | Massively parallel, thousands of cores | Data parallelism within CPU registers |

| Performance | High throughput for parallel workloads | Improved performance for vectorized operations on limited data widths |

| Programming Model | CUDA, OpenCL, DirectCompute | Intrinsic functions, compiler auto-vectorization |

| Power Efficiency | Higher power consumption due to many cores | Lower power, integrated within CPU core |

| Examples | NVIDIA GeForce, AMD Radeon | Intel SSE, AVX, ARM NEON |

Introduction to GPU and SIMD

GPUs (Graphics Processing Units) are specialized hardware designed for parallel processing, capable of handling thousands of threads simultaneously to accelerate complex computations in graphics rendering and scientific applications. SIMD (Single Instruction, Multiple Data) is a parallel computing architecture where a single instruction operates on multiple data points concurrently, commonly used within CPUs and GPUs to enhance data-level parallelism. Understanding the distinction between GPUs and SIMD involves recognizing GPUs as massively parallel processors integrating SIMD units for efficient execution of vectorized operations.

Fundamentals of GPU Architecture

GPU architecture is designed around thousands of small, efficient cores that execute parallel threads simultaneously, optimizing throughput for highly parallel tasks. Unlike SIMD (Single Instruction, Multiple Data) units which apply one instruction to multiple data points in lockstep, GPUs employ a hierarchical structure of streaming multiprocessors managing warps of threads with independent control flow. This fundamental distinction allows GPUs to handle complex, diverse workloads with massive parallelism and high memory bandwidth, essential for graphics rendering and scientific computing.

Overview of SIMD Architecture

SIMD (Single Instruction, Multiple Data) architecture enables parallel processing by executing the same instruction across multiple data points simultaneously, enhancing performance in vectorized tasks. Unlike GPUs, which contain thousands of cores optimized for highly parallel workloads, SIMD units are integrated within CPUs or specialized processors, offering efficient data-level parallelism at a finer granularity. SIMD architectures utilize wide registers and vector pipelines to process multiple elements in parallel, making them ideal for applications such as multimedia processing, scientific simulations, and real-time signal processing.

Core Differences Between GPU and SIMD

GPUs consist of thousands of cores designed for massive parallelism, enabling simultaneous execution of many threads across diverse data sets. SIMD (Single Instruction, Multiple Data) architecture employs a smaller number of lanes that perform the same instruction across a limited set of data points concurrently, offering efficiency in vectorized computations. The core difference lies in GPUs' capacity for high throughput with complex control flow and branching, whereas SIMD units excel in uniform, tightly-coupled data processing with minimal divergence.

Performance Comparison: GPU vs SIMD

GPUs deliver significantly higher parallel processing power compared to traditional SIMD architectures by leveraging thousands of cores designed for simultaneous execution of complex tasks, enabling superior throughput in data-intensive applications like machine learning and scientific simulations. SIMD units excel in executing uniform operations on data streams with low latency and power consumption, providing efficient performance for tightly controlled vectorized workloads. Performance metrics reveal GPUs outperform SIMD by orders of magnitude in scalability and flexibility, especially when handling diverse and large-scale parallelism beyond fixed data patterns.

Use Cases: When to Choose GPU or SIMD

GPUs excel at parallel processing for highly parallel workloads such as deep learning, real-time rendering, and large-scale scientific simulations due to their thousands of cores optimized for simultaneous thread execution. SIMD architectures, found in CPUs and specialized processors, are ideal for data-level parallelism in tasks like multimedia processing, signal processing, and small-scale matrix operations where fine-grained parallelism within a single instruction cycle improves performance. Choose GPUs for massive parallelism and high throughput requirements, and SIMD for efficient, low-latency execution of repetitive, vectorized computations.

Programming Models and Tools

GPU programming models such as CUDA and OpenCL enable fine-grained parallelism through thousands of lightweight threads, leveraging massive data-level parallelism for applications like graphics rendering and machine learning. SIMD programming relies on vector instructions within CPUs or specialized units, utilizing intrinsic functions and compiler auto-vectorization to process multiple data elements simultaneously with lower parallel thread counts. Tools for GPUs include NVIDIA Nsight and AMD ROCm, which provide debugging and profiling support, while SIMD development hinges on compiler support (e.g., Intel ICC, GCC) and libraries like Intel AVX or ARM NEON for efficient vectorized code generation.

Scalability and Efficiency Considerations

GPUs leverage thousands of cores to achieve massive parallelism, offering superior scalability compared to traditional SIMD architectures limited by fixed vector widths. Their ability to dynamically allocate threads enhances efficiency in handling diverse workloads, whereas SIMD units excel in tightly coupled, uniform data processing but face bottlenecks with irregular data patterns. Optimal performance hinges on matching workload characteristics to GPU thread management or SIMD vector execution models for energy-efficient computing.

Power Consumption and Cost Analysis

GPUs typically consume more power than SIMD units due to their complex architecture designed for parallel processing, with power consumption ranging from 150W to 300W compared to SIMD's lower 30W to 100W range. Cost analysis shows GPUs carry higher initial investment costs, often exceeding $500 for consumer models, while SIMD units used in embedded systems or specialized hardware can cost under $200, making SIMD more cost-effective for energy-sensitive applications. Power efficiency per operation also favors SIMD in scenarios requiring simpler parallelism, highlighting its advantage in reducing operational expenses over time.

Future Trends in Parallel Computing

Future trends in parallel computing highlight GPUs evolving with increased core counts and enhanced memory hierarchies to handle complex, data-intensive workloads efficiently. SIMD architectures continue to improve through wider vector units and better integration with CPU cores, optimizing low-latency computations and energy efficiency. Emerging hybrid models combining GPUs and SIMD units aim to maximize parallel throughput by leveraging their complementary strengths in heterogeneous computing environments.

GPU Infographic

libterm.com

libterm.com