Just-in-Time (JIT) compilation translates bytecode or other intermediate code into machine code at runtime, which usually executes faster than interpreting the same code. Because the compiler runs on the target machine, it can tune the generated code for that machine while the distributed program stays platform-neutral. This article compares JIT compilation with static linking across performance, memory usage, portability, and security.

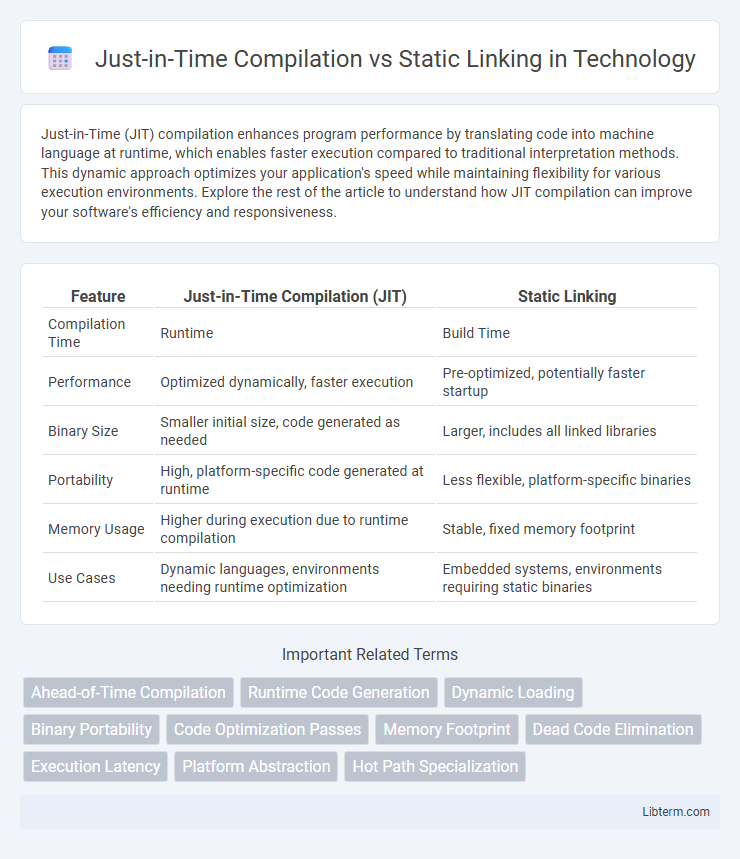

Table of Comparison

| Feature | Just-in-Time Compilation (JIT) | Static Linking |
|---|---|---|
| Compilation Time | Runtime | Build Time |
| Performance | Optimized dynamically, faster execution | Pre-optimized, potentially faster startup |
| Binary Size | Smaller initial size, code generated as needed | Larger, includes all linked libraries |
| Portability | High, platform-specific code generated at runtime | Less flexible, platform-specific binaries |
| Memory Usage | Higher during execution due to runtime compilation | Stable, fixed memory footprint |
| Use Cases | Dynamic languages, environments needing runtime optimization | Embedded systems, environments requiring static binaries |

Understanding Just-in-Time Compilation

Just-in-Time (JIT) compilation translates code into machine language during program execution, improving runtime performance by optimizing code paths based on actual usage patterns. Unlike static linking, which bundles all libraries into a single executable before runtime, JIT compiles code on the fly. This allows adaptive optimizations, at the cost of extra memory for the compiler and the code it generates. The approach is used in runtimes such as the Java Virtual Machine (JVM) and the .NET CLR to improve application responsiveness and efficiency.

Overview of Static Linking

Static linking copies the library code a program depends on directly into the executable at link time, the final step of the build, producing a standalone binary that needs no external libraries at runtime. This increases the executable size but typically gives faster startup, because no dynamic loading or symbol resolution is required, and consistent behavior across environments. Static linking is commonly used in embedded systems and in scenarios where dependency management and runtime performance are critical.

How JIT Compilation Works

Just-in-Time (JIT) compilation translates code into machine language during program execution, compiling only the parts that are actually needed, on demand. Code typically starts out interpreted while the runtime collects profiling data; once a method or loop runs often enough to count as hot, the runtime compiles it and applies optimizations such as inlining, method specialization, and dead code elimination, guided by the observed usage patterns. JIT compilation thus balances interpretation overhead against the upfront cost of compiling everything ahead of time, enabling adaptive performance tuning that a statically linked, ahead-of-time-compiled binary cannot provide.
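The interpret-first, compile-when-hot pattern can be sketched in a few lines. This is a minimal illustration, not how any production runtime is built: `TieredExpr`, `interpret`, and `HOT_THRESHOLD` are hypothetical names, and the "compiled" form is CPython bytecode produced by the built-in `compile()`, not native machine code.

```python
import ast
import operator

HOT_THRESHOLD = 3  # hypothetical: calls before an expression counts as "hot"

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}


def interpret(node, env):
    """Slow path: walk the syntax tree on every call."""
    if isinstance(node, ast.Expression):
        return interpret(node.body, env)
    if isinstance(node, ast.BinOp):
        left = interpret(node.left, env)
        right = interpret(node.right, env)
        return _OPS[type(node.op)](left, right)
    if isinstance(node, ast.Name):
        return env[node.id]
    if isinstance(node, ast.Constant):
        return node.value
    raise ValueError(f"unsupported node: {type(node).__name__}")


class TieredExpr:
    """Interprets an expression until it becomes hot, then compiles it once."""

    def __init__(self, source):
        self.tree = ast.parse(source, mode="eval")
        self.calls = 0        # profiling data: how often the slow path ran
        self.compiled = None  # filled in lazily, only if the code gets hot

    def __call__(self, **env):
        if self.compiled is not None:
            # Fast path: run the code object generated at runtime.
            return eval(self.compiled, {"__builtins__": {}}, env)
        self.calls += 1
        if self.calls >= HOT_THRESHOLD:
            # "JIT" step (assumption: bytecode stands in for machine code).
            self.compiled = compile(self.tree, "<jit>", "eval")
        return interpret(self.tree, env)


expr = TieredExpr("x * 2 + y")
results = [expr(x=3, y=1) for _ in range(5)]
```

The first three calls go through the tree-walking interpreter; the third also triggers compilation, and every later call takes the fast path. A real JIT would additionally use the profile to specialize the generated code and would fall back to the interpreter if its assumptions stopped holding.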

The Static Linking Process Explained

The static linking process combines the program's object files and the library code they reference into a single executable at build time. The linker resolves every symbol reference to a fixed address, so the program runs without loading external shared libraries. This reduces load-time overhead by eliminating dynamic symbol resolution and library loading. The executable is larger because the required components are embedded directly, which suits environments that need predictable behavior and minimal runtime dependencies.
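A toy model of the symbol-resolution step may help make the process concrete. It is a sketch under simplifying assumptions: `ObjectFile` and `static_link` are hypothetical names, "code" is just a string, and real linkers also handle relocation, sections, and archive ordering rules that are ignored here.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectFile:
    """Hypothetical object file: symbols it defines and symbols it needs."""
    name: str
    defines: dict                              # symbol -> code (a string here)
    needs: set = field(default_factory=set)    # symbols referenced but not defined


def static_link(program, archive):
    """Resolve every undefined symbol at build time and return one image.

    Only archive members that satisfy an undefined symbol are pulled in.
    """
    image = dict(program.defines)
    linked = [program.name]
    undefined = set(program.needs) - set(image)
    while undefined:
        symbol = sorted(undefined)[0]  # deterministic order for the example
        member = next((m for m in archive if symbol in m.defines), None)
        if member is None:
            raise LookupError(f"undefined reference to '{symbol}'")
        image.update(member.defines)
        linked.append(member.name)
        undefined = (undefined | member.needs) - set(image)
    return image, linked


main = ObjectFile("main.o", {"main": "call print_sum"}, {"print_sum"})
archive = [
    ObjectFile("sum.o", {"print_sum": "call add; call write"}, {"add", "write"}),
    ObjectFile("add.o", {"add": "add two numbers"}),
    ObjectFile("io.o", {"write": "write to stdout"}),
    ObjectFile("unused.o", {"unused": "never referenced"}),
]
image, linked = static_link(main, archive)
```

In this example `linked` ends up as `main.o`, `sum.o`, `add.o`, `io.o`, and `unused.o` is left out of the image. A missing symbol fails the build with an error, so the problem surfaces before the program ever runs.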

Performance Comparison: JIT vs Static Linking

Just-in-Time (JIT) compilation optimizes code at runtime, adapting to actual execution patterns, which can lead to faster code execution in long-running applications once the warm-up phase is over. Static linking, by embedding all libraries at build time, avoids runtime compilation and dynamic-loading overhead and offers faster startup and consistent execution speed. JIT can surpass a statically linked binary for workloads that benefit from runtime profiling and run long enough to amortize the cost of compilation, while static linking generally performs better where low latency and minimal runtime resource consumption are critical.
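The trade-off between warm-up cost and steady-state speed can be expressed as a simple break-even calculation. The model and the numbers below are assumptions chosen for illustration, not measurements, and `first_call_where_jit_wins` is a hypothetical helper.

```python
def first_call_where_jit_wins(warmup_ns, static_ns_per_call, jit_ns_per_call):
    """Return the first call count at which the JIT total is strictly lower.

    Model (an assumption, not a measurement):
        static total = n * static_ns_per_call
        JIT total    = warmup_ns + n * jit_ns_per_call
    Returns None when JIT-compiled code is not faster per call.
    """
    saving = static_ns_per_call - jit_ns_per_call
    if saving <= 0:
        return None
    # Smallest integer n with n * saving > warmup_ns.
    return warmup_ns // saving + 1


# Hypothetical figures: 50 ms of warm-up, 2000 ns vs 1500 ns per call.
break_even = first_call_where_jit_wins(50_000_000, 2000, 1500)
print(break_even)  # 100001
```

Under these assumed figures, a program that makes fewer than about 100,000 calls finishes sooner as a static binary, which is why short-lived tools rarely benefit from a JIT while long-running services often do.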

Memory Usage Differences

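Just-in-Time (JIT) compilation generally increases memory usage at runtime: the process holds the compiler itself, profiling data, and a code cache for the generated machine code, alongside the original bytecode. Runtimes limit this overhead by compiling only hot code and by evicting or unloading generated code that is no longer needed. Static linking increases the size of the executable, but its footprint is fixed at build time; linkers typically include only the archive members a program references, and the operating system pages code in on demand. The main memory cost of static linking is that library code cannot be shared between processes the way a shared library can. Consequently, static linking suits applications that need a predictable footprint, while JIT compilation trades extra memory for runtime flexibility.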
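One way a runtime can keep generated code from growing without bound is a fixed-size code cache that evicts the least recently used entries. The sketch below is an illustration only: `CodeCache` is a hypothetical class, sizes are supplied by the caller, and real runtimes use more elaborate policies.

```python
from collections import OrderedDict


class CodeCache:
    """Bounded store for generated code with least-recently-used eviction."""

    def __init__(self, capacity_bytes):
        self.capacity = capacity_bytes
        self.used = 0
        self._entries = OrderedDict()  # name -> (code, size), oldest first

    def get(self, name):
        entry = self._entries.get(name)
        if entry is None:
            return None  # caller must fall back to interpreting or recompiling
        self._entries.move_to_end(name)  # mark as recently used
        return entry[0]

    def put(self, name, code, size):
        if size > self.capacity:
            raise ValueError("code is larger than the whole cache")
        if name in self._entries:
            self.used -= self._entries.pop(name)[1]
        while self.used + size > self.capacity:
            # Evict the least recently used entry to make room.
            _, (_, evicted_size) = self._entries.popitem(last=False)
            self.used -= evicted_size
        self._entries[name] = (code, size)
        self.used += size


cache = CodeCache(capacity_bytes=100)
cache.put("a", "<code for a>", 40)
cache.put("b", "<code for b>", 40)
cache.get("a")                      # "a" is now more recent than "b"
cache.put("c", "<code for c>", 40)  # evicts "b" to stay within 100 bytes
```

The cap makes the memory overhead predictable, but an evicted method that becomes hot again has to be compiled a second time, so a cache that is too small costs performance.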

Platform Compatibility and Portability

Just-in-Time (JIT) compilation improves platform compatibility by compiling code at runtime, so the same distributed program can run on different hardware and operating systems without a separate build for each, provided a runtime exists for each target. Static linking embeds all necessary libraries into the executable, so the binary is tied to one architecture and operating system; in return, it behaves consistently on every machine of that type. JIT's dynamic nature offers greater flexibility for cross-platform deployment, while static linking simplifies distribution for a single platform at the cost of reduced portability.

Security Implications

Just-in-Time (JIT) compilation widens the attack surface because it generates code at runtime: the process needs memory that is both written to and executed, and bugs in the compiler itself can be exploited to inject or corrupt code. Static linking avoids runtime code generation and removes dependencies on external libraries that could be replaced or hijacked at load time, which limits this exposure. The trade-off is that a security fix in a statically linked library reaches users only when the program is rebuilt and redistributed. JIT-based runtimes can be patched and optimized dynamically, but they rely on robust sandboxing and strict input validation to prevent malicious code execution.

Use Cases: When to Choose JIT or Static Linking

Just-in-Time (JIT) compilation is well suited to applications that require runtime flexibility, dynamic code optimization, and platform independence, such as web browsers, virtual machines, and scripting languages. Static linking suits performance-critical software and embedded systems where startup time, predictable behavior, and reduced runtime dependencies are essential, including real-time systems and low-level utilities. Choosing JIT favors adaptability and rapid iteration, while static linking favors efficiency and reliability in tightly controlled environments.

Future Trends in Code Execution Techniques

Future trends in code execution techniques emphasize the increasing integration of Just-in-Time (JIT) compilation with static linking to optimize performance and flexibility. Advances in hybrid execution models leverage static linking for initial load-time efficiency while employing JIT compilation for runtime adaptability and platform-specific optimizations. Emerging technologies in machine learning-driven code generation and adaptive optimization promise to further enhance these combined methods, reducing latency and improving execution speed across diverse computing environments.

libterm.com