Load balancing distributes network or application traffic across multiple servers to keep services reliable and responsive. It reduces downtime and improves scalability by preventing any single server from becoming overwhelmed. This article compares load balancing with anti-affinity and explains when each strategy applies.

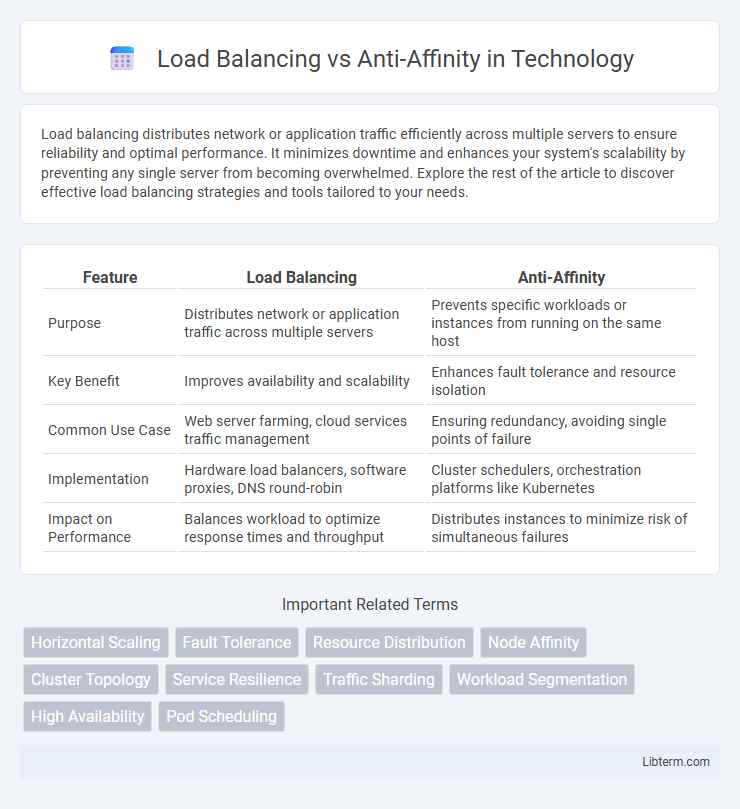

Table of Comparison

| Feature | Load Balancing | Anti-Affinity |
|---|---|---|
| Purpose | Distributes network or application traffic across multiple servers | Prevents specific workloads or instances from running on the same host |
| Key Benefit | Improves availability and scalability | Enhances fault tolerance and resource isolation |
| Common Use Case | Web server farms, cloud services traffic management | Ensuring redundancy, avoiding single points of failure |
| Implementation | Hardware load balancers, software proxies, DNS round-robin | Cluster schedulers, orchestration platforms like Kubernetes |
| Impact on Performance | Balances workload to optimize response times and throughput | Distributes instances to minimize risk of simultaneous failures |

Introduction to Load Balancing and Anti-Affinity

Load balancing distributes network or application traffic evenly across multiple servers to optimize resource use, maximize throughput, and prevent any single server from becoming a bottleneck. Anti-affinity rules control the placement of workloads by ensuring that specific instances run on separate hosts to enhance fault tolerance and reduce the risk of service disruption. Both strategies improve system reliability and availability but address different aspects of workload management: load balancing focuses on traffic distribution, while anti-affinity emphasizes strategic instance separation.

Defining Load Balancing: Core Concepts

Load balancing involves distributing network or application traffic across multiple servers to ensure optimal resource use, maximize throughput, minimize response time, and prevent server overload. A selection algorithm decides which server receives each request: round-robin cycles through the servers in order, least connections picks the server with the fewest active connections, and IP hash maps each client address to a consistent server. Effective load balancing improves system reliability, enhances scalability, and ensures high availability in distributed computing environments.
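
The three algorithms named above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular load balancer implements them: the class names, the `pick` and `release` methods, and the `app-1` style backend names are all hypothetical, and a production balancer would also handle health checks, weights, and concurrency.

```python
import hashlib
import itertools


class RoundRobin:
    """Cycle through backends in a fixed order."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Send each request to the backend with the fewest open connections."""

    def __init__(self, backends):
        self.active = {b: 0 for b in backends}

    def pick(self):
        # min() returns the first minimum, so ties go to the earliest backend.
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend

    def release(self, backend):
        # Call when a connection closes so the count stays accurate.
        self.active[backend] -= 1


class IPHash:
    """Map a client IP to a stable backend so repeat requests land together."""

    def __init__(self, backends):
        self.backends = list(backends)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.backends)
        return self.backends[index]


backends = ["app-1", "app-2", "app-3"]  # hypothetical backend names
rr = RoundRobin(backends)
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']
```

Note that only round-robin spreads requests strictly evenly. Least connections adapts to how long requests take, and IP hash trades evenness for keeping each client on the same server.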

Understanding Anti-Affinity: Key Principles

Anti-affinity ensures that workloads or instances are intentionally placed on separate hosts to enhance fault tolerance and reduce the risk of correlated failures in distributed systems. It relies on rules that prevent co-location of specific virtual machines or containers, which improves resiliency and availability. Understanding anti-affinity is critical when designing architectures in which a single hardware failure must not take down multiple replicas of the same service or application.
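
A placement rule of this kind can be sketched as a small scheduling function. The example below is a simplified illustration, not the algorithm of any real scheduler: `place_replicas`, the `placements` mapping, and the host names are hypothetical, and the "emptiest host first" tie-break is an assumption made to keep the output deterministic.

```python
def place_replicas(service, replica_count, placements):
    """Assign each replica of `service` to a host that does not already run it.

    `placements` maps host name -> set of service names on that host and is
    updated in place. Returns the chosen hosts in order.
    """
    chosen = []
    for _ in range(replica_count):
        # Hosts that satisfy the anti-affinity rule for this service.
        eligible = [h for h, services in placements.items() if service not in services]
        if not eligible:
            raise RuntimeError(
                f"cannot place {service!r}: every host already runs a replica"
            )
        # Prefer the emptiest eligible host; ties go to the first one listed.
        host = min(eligible, key=lambda h: len(placements[h]))
        placements[host].add(service)
        chosen.append(host)
    return chosen


cluster = {"host-a": set(), "host-b": set(), "host-c": set()}
print(place_replicas("db", 3, cluster))  # ['host-a', 'host-b', 'host-c']
```

Asking for a fourth `db` replica on this three-host cluster raises `RuntimeError`, which shows the strict form of the rule: the scheduler refuses to place a replica rather than co-locate it.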

Architectural Differences: Load Balancing vs Anti-Affinity

Load balancing distributes network or application traffic across multiple servers to optimize resource use and prevent overload, ensuring high availability and scalability. Anti-affinity enforces separation by preventing specific workloads or instances from co-locating on the same physical host, enhancing fault tolerance and minimizing risk of simultaneous failure. Architecturally, load balancing operates at the traffic or request layer directing client requests, while anti-affinity functions at the placement or scheduling layer within cloud or container orchestration environments to control workload distribution.

Use Cases for Load Balancing

Load balancing optimizes resource utilization and enhances application availability by distributing incoming network traffic evenly across multiple servers or containers. It is essential for high-traffic web applications, ensuring minimal latency and preventing any single server from becoming a bottleneck. Use cases include handling dynamic workloads in cloud environments, supporting microservices architectures, and enabling fault tolerance in distributed systems.

When to Implement Anti-Affinity Rules

Implement anti-affinity rules when critical application components must run on separate hosts to increase fault tolerance and minimize the risk of simultaneous failures. Load balancing alone does not provide this guarantee: it spreads requests across servers, but it does not control where those servers or instances are placed. Use anti-affinity in multi-node clusters or cloud environments where high availability and disaster recovery are priorities, especially for stateful applications or databases.

Performance Implications and Scalability

Load balancing distributes workloads across multiple servers or instances to optimize resource utilization and improve response times, enhancing overall system performance and scalability. Anti-affinity rules prevent specific workloads from co-locating on the same physical host to reduce failure risks, but they constrain placement: under a strict rule, a service cannot run more replicas than there are eligible hosts, and hosts may be left partly empty that could otherwise be consolidated. Load balancing therefore favors throughput and efficient use of capacity, while anti-affinity prioritizes availability and fault isolation at the potential cost of resource efficiency and scalability.
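
The consolidation cost can be made concrete with a little arithmetic. The sketch below assumes a deliberately simple model that is not from the article: every replica occupies one slot, every host offers the same number of slots, and the anti-affinity rule is strict and applies per service. The function name `min_hosts` and the workload figures are hypothetical.

```python
import math


def min_hosts(replicas_per_service, slots_per_host, anti_affinity):
    """Return the fewest hosts that can run all replicas.

    Without anti-affinity, replicas pack densely, so only total capacity
    matters. With strict per-service anti-affinity, the largest service
    also needs one host per replica.
    """
    total = sum(replicas_per_service.values())
    packed = math.ceil(total / slots_per_host)
    if not anti_affinity:
        return packed
    largest = max(replicas_per_service.values(), default=0)
    return max(packed, largest)


workload = {"db": 5, "cache": 2}  # hypothetical replica counts
print(min_hosts(workload, slots_per_host=8, anti_affinity=False))  # 1
print(min_hosts(workload, slots_per_host=8, anti_affinity=True))   # 5
```

In this model the seven replicas fit on one host by capacity alone, but the five `db` replicas force five hosts once the rule applies. That gap is the resource-efficiency price paid for fault isolation.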

High Availability and Fault Tolerance

Load balancing distributes network traffic across multiple servers and routes around servers that fail, so that no single server becomes a point of failure. Anti-affinity rules ensure that instances of the same service or application are not placed on the same physical host, reducing the risk of simultaneous failures due to hardware issues. Combining load balancing with anti-affinity policies strengthens system resilience by both optimizing resource utilization and minimizing correlated downtime.
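
The benefit of combining the two can be illustrated with a small failure simulation. This is a toy model under stated assumptions: the replica and host names are hypothetical, a failed host takes down every replica on it, and the balancer uses plain round-robin over whatever replicas remain.

```python
import itertools


def healthy_replicas(replica_hosts, failed_hosts):
    """Return replicas whose host is still up, in insertion order."""
    return [r for r, host in replica_hosts.items() if host not in failed_hosts]


def route(requests, replicas):
    """Round-robin `requests` over `replicas`; fail if none are left."""
    if not replicas:
        raise RuntimeError("no healthy replicas: service is down")
    targets = itertools.cycle(replicas)
    return [(request, next(targets)) for request in requests]


# Replicas spread by an anti-affinity rule versus packed onto one host.
spread = {"web-1": "host-a", "web-2": "host-b", "web-3": "host-c"}
colocated = {"web-1": "host-a", "web-2": "host-a", "web-3": "host-a"}
failed = {"host-a"}

print(route(["r1", "r2", "r3"], healthy_replicas(spread, failed)))
# [('r1', 'web-2'), ('r2', 'web-3'), ('r3', 'web-2')]
print(healthy_replicas(colocated, failed))  # []
```

With spread placement the balancer still has two replicas to route to after `host-a` fails. With co-located placement the same failure leaves nothing to balance across, so `route` would raise.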

Best Practices for Modern Cloud Environments

Load balancing optimizes resource utilization and application availability by distributing traffic evenly across multiple servers, ensuring scalability and fault tolerance in modern cloud environments. Anti-affinity rules prevent specific workloads from running on the same physical hosts, reducing the risk of correlated failures and improving resilience. Combining load balancing with anti-affinity best practices enhances high availability and disaster recovery strategies by balancing load while isolating critical components.

Choosing the Right Strategy: Key Considerations

Load balancing distributes workloads evenly across servers to maximize resource utilization and prevent bottlenecks, optimizing performance in high-traffic environments. Anti-affinity enforces separation of critical workloads by ensuring they do not run on the same host, improving fault tolerance and availability. Choosing the right strategy depends on factors such as workload characteristics, redundancy requirements, and the desired balance between performance and resilience.

Load Balancing Infographic

libterm.com
