Effective resource allocation distributes available assets, such as time, money, and personnel, to the projects and tasks that matter most. Organizations that do this well reduce waste, improve efficiency, and reach their objectives faster. The rest of this article explains how resource allocation works in computing systems and how it differs from load balancing.

Table of Comparison

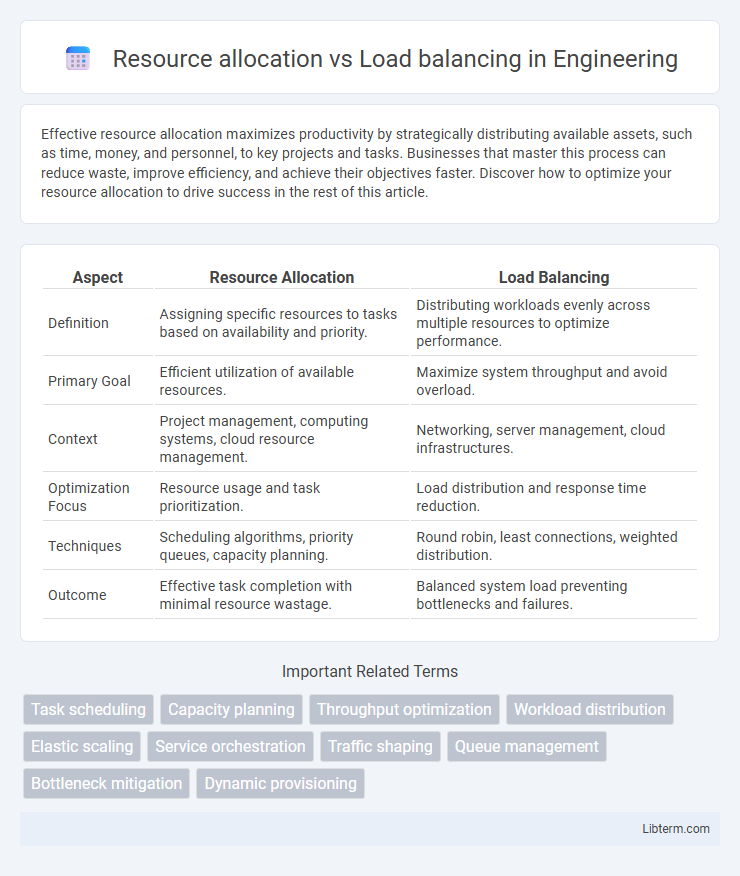

| Aspect | Resource Allocation | Load Balancing |
|---|---|---|
| Definition | Assigning specific resources to tasks based on availability and priority. | Distributing workloads evenly across multiple resources to optimize performance. |
| Primary Goal | Efficient utilization of available resources. | Maximize system throughput and avoid overload. |
| Context | Project management, computing systems, cloud resource management. | Networking, server management, cloud infrastructures. |
| Optimization Focus | Resource usage and task prioritization. | Load distribution and response time reduction. |
| Techniques | Scheduling algorithms, priority queues, capacity planning. | Round robin, least connections, weighted distribution. |
| Outcome | Effective task completion with minimal resource wastage. | Balanced system load preventing bottlenecks and failures. |

Understanding Resource Allocation

Resource allocation involves distributing available system resources such as CPU, memory, and bandwidth to various applications or tasks based on priority and demand to optimize performance and efficiency. It ensures that critical processes receive adequate resources while minimizing waste and preventing bottlenecks. Effective resource allocation directly impacts overall system stability and responsiveness by aligning resource supply with workload requirements.
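As a rough illustration of priority-driven allocation, the sketch below grants CPU to tasks in descending priority until capacity runs out. The `Task` class, the task names, and the convention that a higher number means higher priority are all assumptions made for this example; real schedulers use far more elaborate policies.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int      # assumed convention: higher number = more critical
    cpu_demand: float  # CPU cores requested


def allocate_cpu(tasks: list[Task], total_cpu: float) -> dict[str, float]:
    """Grant CPU in descending priority order until capacity is exhausted."""
    remaining = total_cpu
    grants: dict[str, float] = {}
    for task in sorted(tasks, key=lambda t: t.priority, reverse=True):
        granted = min(task.cpu_demand, remaining)  # never exceed what is left
        grants[task.name] = granted
        remaining -= granted
    return grants


# Hypothetical workload: 9 cores requested, only 6 available.
tasks = [
    Task("batch-report", priority=1, cpu_demand=4.0),
    Task("payment-api", priority=3, cpu_demand=3.0),
    Task("search-index", priority=2, cpu_demand=2.0),
]
grants = allocate_cpu(tasks, total_cpu=6.0)
print(grants)
```

In this run the two higher-priority tasks receive their full request and the lowest-priority task absorbs the shortfall, which is the "critical processes first" behavior described above.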

Defining Load Balancing

Load balancing refers to the process of distributing incoming network traffic or workloads evenly across multiple servers or resources to ensure no single server becomes overwhelmed, improving system reliability and performance. It optimizes resource utilization, reduces response time, and prevents bottlenecks by dynamically allocating tasks based on current server load and capacity. Effective load balancing enhances scalability and ensures high availability in cloud computing, data centers, and web applications.
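One simple way to account for both current load and capacity is to route each request to the server with the lowest utilization ratio. The following is a minimal sketch under that assumption; the `Server` class, its fields, and the capacities are hypothetical and not taken from any particular load balancer.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    capacity: int    # hypothetical limit on concurrent requests
    active: int = 0  # requests currently being handled

    @property
    def utilization(self) -> float:
        return self.active / self.capacity


def dispatch(servers: list[Server]) -> Server:
    """Route one request to the server with the lowest utilization."""
    target = min(servers, key=lambda s: s.utilization)
    target.active += 1
    return target


# Server "a" has twice the capacity of server "b".
servers = [Server("a", capacity=10), Server("b", capacity=5)]
for _ in range(6):
    dispatch(servers)
counts = {s.name: s.active for s in servers}
print(counts)
```

Because the choice is based on the ratio rather than the raw count, the larger server ends up with roughly twice as many requests as the smaller one.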

Key Differences Between Resource Allocation and Load Balancing

Resource allocation involves assigning available system resources such as CPU, memory, and bandwidth to various tasks based on priority and demand, ensuring efficient utilization. Load balancing distributes incoming network or application traffic across multiple servers or resources to prevent any single node from becoming overwhelmed, enhancing performance and reliability. Key differences include resource allocation's focus on optimizing individual resource usage, whereas load balancing emphasizes distributing workloads evenly to maintain system stability and minimize response times.

Importance in Modern Computing Environments

Resource allocation ensures efficient distribution of computational resources such as CPU, memory, and storage to optimize application performance and system utilization in cloud computing and data centers. Load balancing dynamically distributes network or processing traffic across multiple servers to prevent bottlenecks, enhance scalability, and maximize uptime in distributed systems. Both techniques are critical in modern computing environments to maintain high availability, reduce latency, and improve overall system resilience.

Algorithms for Resource Allocation

Resource allocation algorithms distribute limited computing resources such as CPU, memory, and bandwidth to maximize system performance and meet application demands. Popular approaches include heuristic methods, genetic algorithms, and reinforcement learning techniques, all of which assign resources dynamically based on workload characteristics and system constraints. Effective resource allocation algorithms reduce latency, improve utilization rates, and ensure quality of service in cloud computing, data centers, and distributed systems.
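To make the heuristic category concrete, here is a sketch of first-fit decreasing, a classic bin-packing heuristic, applied to placing workloads on equal-sized hosts. It is one illustrative choice among many heuristics, and the workload names, memory figures, and host size are invented for the example.

```python
def first_fit_decreasing(
    demands: dict[str, int], host_capacity: int
) -> list[dict[str, int]]:
    """Place workloads on hosts, largest first, opening a host only when needed."""
    hosts: list[dict[str, int]] = []  # each host maps workload name -> memory (GB)
    free: list[int] = []              # remaining memory per host
    for name, need in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        if need > host_capacity:
            raise ValueError(f"{name} needs {need} GB, more than one host holds")
        for i, spare in enumerate(free):
            if need <= spare:         # first host with enough room wins
                hosts[i][name] = need
                free[i] -= need
                break
        else:                         # no existing host fits: open a new one
            hosts.append({name: need})
            free.append(host_capacity - need)
    return hosts


# Hypothetical memory demands in GB, packed onto 10 GB hosts.
demands = {"web": 4, "db": 8, "cache": 6, "queue": 2, "worker": 5}
placement = first_fit_decreasing(demands, host_capacity=10)
for index, host in enumerate(placement):
    print(index, host)
```

The heuristic runs quickly and usually gets close to the minimum number of hosts, but it does not guarantee an optimal packing, which is one reason search-based and learning-based methods are also used.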

Load Balancing Techniques and Strategies

Load balancing techniques distribute workloads across multiple servers to optimize resource use, maximize throughput, and minimize response time. Common strategies include round-robin, which cycles through servers in a fixed order; least connections, which sends each request to the server with the fewest active connections; and IP hash, which maps a client's IP address to a server so that the same client keeps reaching the same server. Advanced load balancing adds health checks, session persistence, and dynamic load adjustments to ensure high availability and fault tolerance.
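The three strategies named above can each be sketched in a few lines. The server names and connection counts below are hypothetical, and the use of SHA-256 for the IP hash is an assumption for illustration; production load balancers implement these policies with their own hashing and state tracking.

```python
import hashlib
from itertools import cycle

SERVERS = ["app-1", "app-2", "app-3"]  # hypothetical backend names

_rotation = cycle(SERVERS)


def round_robin() -> str:
    """Return servers in a fixed repeating order."""
    return next(_rotation)


def least_connections(active: dict[str, int]) -> str:
    """Return the server that currently has the fewest active connections."""
    return min(active, key=active.get)


def ip_hash(client_ip: str) -> str:
    """Map a client IP to a server so repeat requests land on the same one."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return SERVERS[int.from_bytes(digest[:8], "big") % len(SERVERS)]


rotation = [round_robin() for _ in range(4)]
print(rotation)
print(least_connections({"app-1": 12, "app-2": 3, "app-3": 7}))
print(ip_hash("203.0.113.7") == ip_hash("203.0.113.7"))
```

Round-robin ignores server state, least connections reacts to it, and IP hash trades even distribution for stickiness. Note that with this simple modulo scheme, changing the number of servers remaps most clients to different servers.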

Impact on System Performance

Resource allocation determines how system resources such as CPU, memory, and bandwidth are distributed among various processes, directly influencing overall system efficiency and responsiveness. Load balancing ensures the optimal distribution of workload across servers or nodes, preventing bottlenecks and enhancing throughput and system reliability. Effective coordination of resource allocation and load balancing minimizes latency, maximizes resource utilization, and improves system scalability.

Use Cases: Resource Allocation vs Load Balancing

Resource allocation involves assigning specific resources like CPU, memory, or bandwidth to tasks or applications to maximize efficiency, commonly used in cloud computing and project management to ensure optimal utilization. Load balancing distributes incoming network traffic or workloads across multiple servers or systems to prevent overload and enhance performance, typically applied in web services, data centers, and high-availability systems. While resource allocation focuses on optimal resource distribution for individual tasks, load balancing emphasizes maintaining system stability and responsiveness by managing workload distribution across multiple resources.

Challenges and Limitations

Resource allocation depends on predicting workload demand accurately; when predictions are off, limited resources end up either underutilized or congested. Load balancing is limited by how quickly it can monitor server state and how smoothly it can distribute tasks across heterogeneous systems, which can lead to delays and uneven performance. Both techniques are difficult to scale and to adapt dynamically in cloud and distributed environments, which affects overall system reliability and efficiency.

Future Trends in Resource Management

Resource allocation and load balancing are evolving with the integration of artificial intelligence and machine learning to predict workload demands and optimize resource distribution dynamically. Future trends emphasize edge computing and serverless architectures that require more granular, real-time resource management techniques to handle distributed environments efficiently. Advanced automation tools are being developed to enable adaptive decision-making, improving performance, reducing latency, and minimizing operational costs in complex cloud infrastructures.

libterm.com
