Scalability lets a system handle increased demand without compromising performance or customer experience, and it supports long-term growth and adaptability in dynamic markets. This article compares scalability with load balancing, explains how the two differ, and describes how they work together.

Comparison Table

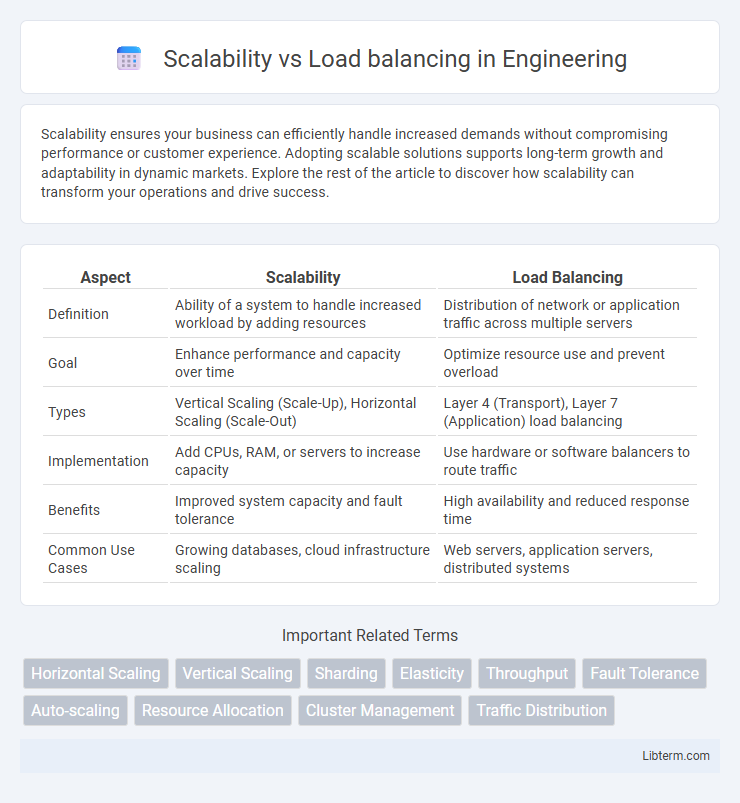

| Aspect | Scalability | Load Balancing |
|---|---|---|
| Definition | Ability of a system to handle increased workload by adding resources | Distribution of network or application traffic across multiple servers |
| Goal | Enhance performance and capacity over time | Optimize resource use and prevent overload |
| Types | Vertical Scaling (Scale-Up), Horizontal Scaling (Scale-Out) | Layer 4 (Transport), Layer 7 (Application) load balancing |
| Implementation | Add CPUs, RAM, or servers to increase capacity | Use hardware or software balancers to route traffic |
| Benefits | Improved system capacity and fault tolerance | High availability and reduced response time |
| Common Use Cases | Growing databases, cloud infrastructure scaling | Web servers, application servers, distributed systems |

Introduction to Scalability and Load Balancing

Scalability refers to the capability of a system to handle increased workload by adding resources, either vertically through upgrading existing hardware or horizontally by adding more machines. Load balancing distributes incoming network traffic across multiple servers to ensure no single server becomes overwhelmed, enhancing reliability and performance. Both concepts are essential for maintaining optimal service availability and response times in growing IT infrastructures.

Defining Scalability in Modern Systems

Scalability in modern systems refers to the capacity to handle increased workloads by expanding resources either vertically (adding power to existing machines) or horizontally (adding more machines). A scalable design keeps performance stable as user demand grows, relying on efficient resource management and an architecture that can absorb added capacity. Load balancing complements scalability by distributing traffic across servers to prevent bottlenecks and optimize resource utilization.
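To make the horizontal-versus-vertical distinction concrete, the following Python sketch estimates capacity for a hypothetical service. It assumes identical servers, a known peak request rate, and a target utilization of 70% that leaves headroom for spikes; the function names and numbers are illustrative, not taken from any particular capacity-planning tool.

```python
import math

def servers_needed(peak_rps: float, per_server_rps: float,
                   target_utilization: float = 0.7) -> int:
    """Horizontal scaling: how many identical servers keep each one at or
    below target_utilization at the given peak request rate."""
    if per_server_rps <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("need per_server_rps > 0 and 0 < target_utilization <= 1")
    usable_per_server = per_server_rps * target_utilization
    return math.ceil(peak_rps / usable_per_server)

def per_server_rps_needed(peak_rps: float, target_utilization: float = 0.7) -> float:
    """Vertical scaling: throughput a single machine would need to absorb
    the same peak at the same target utilization."""
    return peak_rps / target_utilization

# Hypothetical workload: 4,500 requests/second at peak, 1,000 per server.
print(servers_needed(4500, 1000))               # 7
print(round(per_server_rps_needed(4500), 1))    # 6428.6
```

Under these assumptions, scaling out calls for 7 servers, whereas scaling up would need one machine rated for about 6,430 requests/second, which may exceed what a single server can provide.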

Understanding Load Balancing Fundamentals

Load balancing distributes network or application traffic across multiple servers so that no single server becomes overwhelmed, which improves resource utilization and response times. It is closely tied to scalability, the system's capacity to handle increased load by adding resources vertically (upgrading existing hardware) or horizontally (adding more servers): when a system scales horizontally, the load balancer is what directs traffic to the newly added servers. For this reason, understanding load balancing fundamentals is crucial for designing scalable architectures, since effective load balancing keeps performance and reliability steady during traffic spikes and growth.

Key Differences Between Scalability and Load Balancing

Scalability refers to a system's ability to handle increased workload by adding resources, either vertically (upgrading existing hardware) or horizontally (adding more machines). Load balancing distributes incoming network traffic across multiple servers so that no single server becomes overwhelmed, improving resource utilization and system reliability. The key difference is one of focus: scalability expands total capacity, while load balancing allocates existing capacity so that performance holds up under varying demand.
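The difference can be illustrated with simple arithmetic. This Python sketch assumes a hypothetical pool of servers that each handle 1,000 requests/second and an idealized balancer that splits traffic exactly evenly; real balancers only approximate an even split.

```python
def utilization(assigned_rps: list[float], capacity_rps: float) -> list[float]:
    """Fraction of capacity each server is using (above 1.0 means overloaded)."""
    return [rps / capacity_rps for rps in assigned_rps]

def spread_evenly(total_rps: float, n_servers: int) -> list[float]:
    """Idealized load balancer: split traffic equally across n_servers."""
    return [total_rps / n_servers] * n_servers

CAPACITY = 1000.0  # hypothetical per-server capacity, requests/second
TOTAL = 2400.0     # hypothetical incoming traffic, requests/second

# No balancing: all traffic lands on the first of three servers.
unbalanced = utilization([TOTAL, 0.0, 0.0], CAPACITY)
# Load balancing: same three servers, traffic spread evenly.
balanced = utilization(spread_evenly(TOTAL, 3), CAPACITY)
# Scaling out: add a fourth server and keep balancing.
scaled = utilization(spread_evenly(TOTAL, 4), CAPACITY)

print(max(unbalanced), max(balanced), max(scaled))  # 2.4 0.8 0.6
```

Balancing alone brings the busiest server from 240% of capacity down to 80% without adding hardware; scaling out adds a fourth server and lowers every server to 60%.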

Benefits of Implementing Scalability

Implementing scalability lets a system absorb increased workloads, so users see consistent performance during traffic spikes. It also lets businesses match resources to demand, which can reduce operational costs and the risk of downtime. Scalable architectures support long-term growth by accommodating expanding user bases without sacrificing speed or reliability.

Advantages of Load Balancing Solutions

Load balancing solutions improve reliability by distributing incoming network traffic across multiple servers, preventing any one of them from becoming overloaded and minimizing downtime. They improve application performance by routing traffic in real time, which keeps response times consistent for users. They also make horizontal scaling practical: as servers are added or removed to match demand, the load balancer routes traffic to the current set of servers, helping to use hardware efficiently.

Common Scalability Strategies in Cloud Environments

Common scalability strategies in cloud environments include vertical scaling, which increases the resources (such as CPU and memory) of a single server, and horizontal scaling, which adds more servers to share the load. Load balancing complements these strategies by distributing network or application traffic across the available servers so that no single resource is overwhelmed, improving performance and reliability. Auto-scaling mechanisms adjust resource allocation automatically based on real-time demand, allowing cloud infrastructure to handle variable workloads without manual intervention.
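Auto-scaling policies often follow a proportional rule. The sketch below is a simplified Python version of the formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with a 10% tolerance band and min/max bounds. The function name and default bounds are illustrative assumptions; the real controller also applies stabilization windows and handles missing metrics and unready pods, all of which are omitted here.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Proportional scaling rule: grow or shrink the replica count by the
    ratio of the observed metric (e.g. average CPU %) to its target."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Small deviations are ignored so the pool does not flap up and down.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, current_metric=90, target_metric=60))   # 6: scale out
print(desired_replicas(8, current_metric=30, target_metric=60))   # 4: scale in
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4: within tolerance
print(desired_replicas(8, current_metric=120, target_metric=60))  # 10: capped at max_replicas
```

The tolerance band is a deliberate design choice: without it, small metric fluctuations around the target would trigger constant scale-out and scale-in events.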

Popular Load Balancing Techniques and Algorithms

Popular load balancing techniques include the round-robin, least connections, and IP hash algorithms, each of which distributes requests based on server availability and client request patterns. Round-robin cycles through servers in order, giving each server an equal share of requests (though not necessarily an equal share of work, since requests differ in cost). The least connections algorithm directs traffic to the server with the fewest active connections, which keeps responsiveness high when request durations vary. IP hash maps each client IP address to a server, so the same client consistently reaches the same server; this session persistence suits applications that require consistent user-server affinity. All three spread traffic across multiple servers, which is what allows a horizontally scaled pool to be used efficiently.
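The three algorithms can be sketched in a few lines of Python. This is an illustrative in-process model, not the implementation used by any particular load balancer; the class names, server names, and the use of SHA-256 for IP hashing are assumptions.

```python
import hashlib
import itertools

class RoundRobin:
    """Hand out servers in a fixed repeating order."""
    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self, client_ip=None):
        return next(self._cycle)

class LeastConnections:
    """Send each request to the server with the fewest active connections."""
    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self, client_ip=None):
        # min() returns the first minimum, so ties go to the earliest-listed server.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to this server closes.
        self.active[server] -= 1

class IPHash:
    """Map each client IP to a fixed server for session affinity."""
    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        # hashlib gives the same digest in every process; Python's built-in
        # hash() for strings is randomized per process, so it would not.
        digest = hashlib.sha256(client_ip.encode()).digest()
        return self.servers[int.from_bytes(digest[:8], "big") % len(self.servers)]

servers = ["app-1", "app-2", "app-3"]  # hypothetical backend names

rr = RoundRobin(servers)
print([rr.pick() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']

lc = LeastConnections(servers)
first = lc.pick()   # 'app-1'
second = lc.pick()  # 'app-2'
lc.release(first)   # app-1's connection closes
print(lc.pick())    # 'app-1': tied with app-3 at zero and listed first

ih = IPHash(servers)
print(ih.pick("203.0.113.7") == ih.pick("203.0.113.7"))  # True
```

Because this IP hash takes the hash modulo the pool size, adding or removing a server remaps most clients to different servers; consistent hashing is a common alternative when the pool changes often.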

Choosing Between Scalability and Load Balancing

Choosing between scalability and load balancing depends on how a system needs to grow and how its performance must be managed. Scalability increases system capacity by adding resources vertically or horizontally to handle higher loads, while load balancing distributes incoming traffic across multiple servers to optimize resource use and prevent overload. As a rule of thumb, if total capacity is insufficient, the system needs to scale; if capacity exists but some servers are overloaded while others sit idle, it needs better load balancing. Scalability is essential for long-term growth, whereas load balancing is critical for maintaining stability and response times during fluctuating demand.

Best Practices for Combining Scalability and Load Balancing

Combining scalability and load balancing means deploying distributed systems that allocate resources automatically in response to traffic patterns and demand spikes, keeping performance and resource utilization high. Best practices include scaling horizontally so servers can be added dynamically, using load balancers that support health checks and smart routing algorithms, and integrating auto-scaling with real-time monitoring so that load is balanced efficiently without over-provisioning. Cloud-native platforms such as Kubernetes and AWS Elastic Load Balancing provide built-in support for scale-out and failover, which helps deliver high availability and a consistent user experience.
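The health-check and routing practices described here can be sketched as a small pool that only routes to backends passing their checks and that an auto-scaler can grow or shrink. This is a hypothetical, in-memory Python illustration: the class names, the three-failure threshold, and the add/remove hooks are assumptions, and it is not the API of AWS Elastic Load Balancing or Kubernetes, which implement health checks and target registration themselves.

```python
from dataclasses import dataclass

@dataclass
class Backend:
    name: str
    healthy: bool = True
    consecutive_failures: int = 0

class HealthAwarePool:
    """Round-robin over backends that are currently passing health checks."""

    def __init__(self, names, unhealthy_threshold=3):
        self.backends = [Backend(n) for n in names]
        self.unhealthy_threshold = unhealthy_threshold
        self._counter = 0

    def _get(self, name):
        for b in self.backends:
            if b.name == name:
                return b
        raise KeyError(name)

    def record_check(self, name, ok):
        """Feed in the result of one health probe for the named backend."""
        b = self._get(name)
        if ok:
            # Simplification: a single passing check restores the backend.
            b.consecutive_failures = 0
            b.healthy = True
        else:
            b.consecutive_failures += 1
            if b.consecutive_failures >= self.unhealthy_threshold:
                b.healthy = False

    def add_backend(self, name):
        """Hypothetical hook for an auto-scaler after scale-out."""
        self.backends.append(Backend(name))

    def remove_backend(self, name):
        """Hypothetical hook for an auto-scaler before scale-in."""
        self.backends = [b for b in self.backends if b.name != name]

    def pick(self):
        healthy = [b for b in self.backends if b.healthy]
        if not healthy:
            raise RuntimeError("no healthy backends available")
        choice = healthy[self._counter % len(healthy)]
        self._counter += 1
        return choice.name

pool = HealthAwarePool(["web-1", "web-2"])  # hypothetical backend names
for _ in range(3):
    pool.record_check("web-2", ok=False)    # web-2 fails three probes in a row
print({pool.pick() for _ in range(4)})      # {'web-1'}: traffic fails over
pool.add_backend("web-3")                   # auto-scaler adds capacity
print(sorted({pool.pick() for _ in range(4)}))  # ['web-1', 'web-3']
```

Production load balancers typically also require several consecutive passing checks before restoring a backend and drain in-flight connections before removing one; the sketch omits both.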

libterm.com