Contamination delay is the minimum time after an input changes before the output of a digital circuit can begin to change. The output is guaranteed to keep its old value for at least this long, which makes contamination delay the key quantity in hold-time analysis and in assessing glitch susceptibility. Together with propagation delay, it bounds the interval during which an output may be changing, and that interval is the basis of timing analysis. The rest of this article compares the two delays and explains how each is used.

Table of Comparison

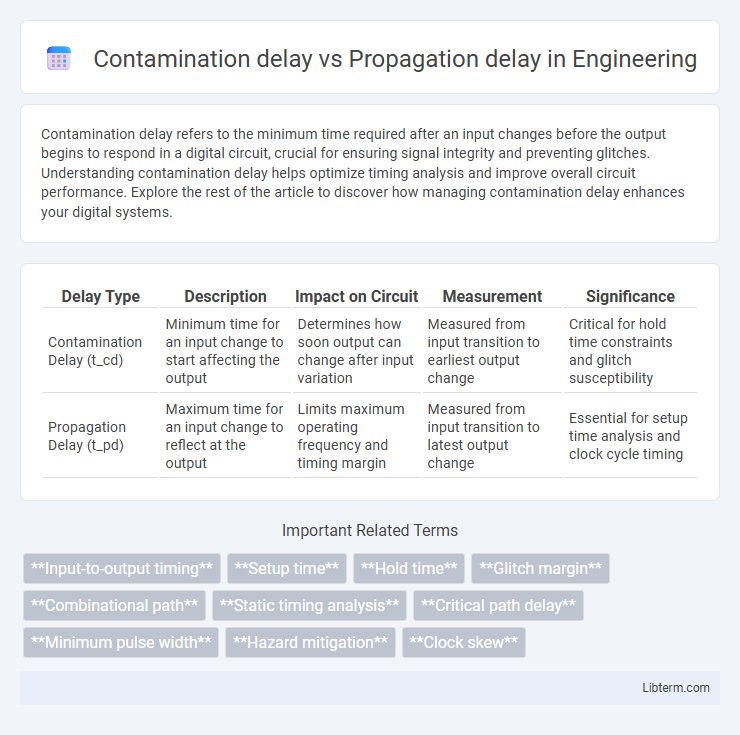

| Delay Type | Description | Impact on Circuit | Measurement | Significance |
|---|---|---|---|---|
| Contamination Delay (t_cd) | Minimum time for an input change to start affecting the output | Determines how soon output can change after input variation | Measured from input transition to earliest output change | Critical for hold time constraints and glitch susceptibility |
| Propagation Delay (t_pd) | Maximum time for an input change to reflect at the output | Limits maximum operating frequency and timing margin | Measured from input transition to latest output change | Essential for setup time analysis and clock cycle timing |

Introduction to Contamination Delay and Propagation Delay

Contamination delay is the minimum time after an input change before the output of a digital circuit can begin to change; it marks the earliest moment at which the output may stop holding its old value and a glitch may appear. Propagation delay is the maximum time for the output to reach a stable state after an input transition, which defines the worst-case response time of the circuit. Both delays are needed to design reliable synchronous systems and to carry out timing analysis.

Definitions: Contamination Delay vs Propagation Delay

Contamination delay refers to the minimum time interval after an input change before the output begins to show any alteration, representing the earliest signal transition through a digital circuit. Propagation delay is the maximum time taken for an input change to cause the final output to reach a stable and correct logical state, indicating the latest signal transition arrival at the output. Both delays are critical in timing analysis for synchronous circuits to ensure reliable operation without timing violations.
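As a minimal sketch of these definitions, a combinational block can be modeled as a directed acyclic graph in which every gate has its own minimum and maximum delay. The contamination delay of the block is then the shortest input-to-output path and the propagation delay is the longest. The gate names and delay values below are hypothetical and chosen only for illustration.

```python
from functools import lru_cache

# Hypothetical netlist: gate -> (t_cd in ps, t_pd in ps, list of fan-in nodes).
# Any node that is not a key here is treated as a primary input.
GATES = {
    "g1": (20, 50, ["inA", "inB"]),
    "g2": (25, 60, ["g1", "inC"]),
    "out": (15, 40, ["g2", "inD"]),
}

@lru_cache(maxsize=None)
def arrival(node):
    """Return (earliest, latest) time in ps at which `node` can change."""
    if node not in GATES:  # primary input: assumed to change at t = 0
        return (0, 0)
    t_cd, t_pd, fanin = GATES[node]
    earliest = t_cd + min(arrival(n)[0] for n in fanin)  # shortest path
    latest = t_pd + max(arrival(n)[1] for n in fanin)    # longest path
    return (earliest, latest)

block_t_cd, block_t_pd = arrival("out")
print(f"t_cd = {block_t_cd} ps, t_pd = {block_t_pd} ps")
```

In this example the shortest path runs from `inD` through the final gate alone, while the longest path passes through all three gates, so the two delays come from different paths in the same block.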

Importance in Digital Circuit Design

Contamination delay defines the minimum time before an output starts to change after an input transition. Because the output is guaranteed to hold its previous value for at least that long, it determines whether a downstream flip-flop's hold time is met and erroneous data latching is avoided. Propagation delay indicates the maximum time for an input change to reach the output, which is critical for setup analysis and for choosing the clock period in sequential logic. Accurate characterization of both delays is essential for timing closure, that is, for avoiding setup and hold time violations while optimizing overall circuit performance.

Key Differences and Similarities

Contamination delay is the minimum time for an output to start changing after the input changes, while propagation delay is the maximum time for the output to settle to its final value. Both are measured from the same input transition; they differ in that one bounds the fastest response and the other the slowest. Engineers use contamination delay in hold-time checks, to confirm that new data does not reach a sequential element too early, and propagation delay in setup-time checks, to confirm that data arrives before the next clock edge.

Role in Timing Analysis

Contamination delay sets the minimum time before an output begins to change after an input transition, so it is the quantity used to identify potential hold time violations. Propagation delay defines the maximum time for an output to settle at a stable value after an input change, so it is used to check the setup time constraint and to determine the minimum clock period. Assessing both delays accurately ensures reliable synchronization and prevents timing hazards in digital circuits.
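The setup and hold checks can be written as a pair of inequalities for a single register-to-register path. The symbols are assumptions introduced here, following common textbook notation: $T_c$ is the clock period, $t_{pcq}$ and $t_{ccq}$ are the clock-to-Q propagation and contamination delays of the launching flip-flop, $t_{pd}$ and $t_{cd}$ are the delays of the combinational logic between the flip-flops, and $t_{setup}$ and $t_{hold}$ belong to the capturing flip-flop. Clock skew is ignored for simplicity.

```latex
\begin{aligned}
T_c &\ge t_{pcq} + t_{pd} + t_{setup} && \text{(setup constraint)} \\
t_{ccq} + t_{cd} &\ge t_{hold} && \text{(hold constraint)}
\end{aligned}
```

Only the setup constraint contains the clock period, so a setup violation can be removed by slowing the clock, whereas a hold violation cannot.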

Factors Affecting Both Delays

Both delays depend on the same physical factors: transistor switching thresholds and drive strength, input signal rise and fall times, gate capacitance, and load conditions. Contamination delay reflects the fastest combination of these factors (the shortest path and the quickest transition), while propagation delay reflects the slowest. Wire resistance and parasitic capacitance add further delay along each path. Process variations, temperature fluctuations, and supply voltage changes shift both delays, which is why they are typically characterized across operating corners.
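The effect of drive resistance and load capacitance can be illustrated with a first-order RC model, in which a node driven by a step reaches 50% of its final value after ln(2)·R·C. This is a rough sketch, not a substitute for characterized library data, and the resistance and capacitance values are hypothetical.

```python
import math

def rc_delay_50(r_ohms, c_farads):
    """Time in seconds for a first-order RC node to reach 50% of a step."""
    return math.log(2) * r_ohms * c_farads

# Hypothetical driver resistance and a sweep of load capacitances.
R_DRIVER = 5e3  # 5 kOhm
for c_load in (5e-15, 10e-15, 20e-15):  # 5, 10, 20 fF
    delay_ps = rc_delay_50(R_DRIVER, c_load) * 1e12
    print(f"C = {c_load * 1e15:.0f} fF -> delay = {delay_ps:.1f} ps")
```

In this model the delay scales linearly with both R and C, so doubling the load doubles the delay; real gates add effects such as input slew and nonlinear drive that the model leaves out.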

Impact on Circuit Performance and Reliability

Contamination delay, the minimum time before an output begins to change after an input change, affects circuit reliability: if it is too short, new data can race through to the next sequential element before that element's hold time has elapsed, and early transitions can appear as glitches. Propagation delay, the maximum time for a signal to travel through a circuit path, limits the maximum operating frequency because the clock period must cover the slowest path. Accurate characterization of both delays is essential for robust circuit timing, for minimizing setup and hold time violations, and for optimizing clock distribution networks.

Methods for Measuring and Minimizing Delays

Contamination delay is measured by applying test vectors and recording the earliest time at which the output begins to change, often with high-speed oscilloscopes or timing analyzers. Propagation delay is measured as the latest time at which the output settles, typically with time interval analyzers or with simulation tools such as SPICE. Propagation delay is reduced by techniques such as transistor sizing, wire length optimization, and inserting buffered stages, which lower the resistance and capacitance each stage must drive. Contamination delay, by contrast, usually needs to be large enough rather than as small as possible: when a path is too fast to satisfy hold time, delay is deliberately added to it.
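On simulated or captured waveforms, both figures can be extracted the same way: measure the input-to-output delay for each test vector, then take the minimum as the contamination delay and the maximum as the propagation delay. The sketch below assumes uniformly sampled rising waveforms and uses the 50% crossing as the reference point, which is one common convention rather than the only one. The helper names and sample data are made up for illustration.

```python
def crossing_time(samples, dt, threshold):
    """Linearly interpolated time at which `samples` first rises through `threshold`."""
    for i in range(1, len(samples)):
        lo, hi = samples[i - 1], samples[i]
        if lo < threshold <= hi:
            return (i - 1 + (threshold - lo) / (hi - lo)) * dt
    raise ValueError("waveform never crosses the threshold")

def measure_delays(runs, dt, vdd=1.0):
    """Return (t_cd, t_pd) over a list of (input_wave, output_wave) pairs."""
    half = vdd / 2
    delays = [
        crossing_time(out, dt, half) - crossing_time(inp, dt, half)
        for inp, out in runs
    ]
    return min(delays), max(delays)

# Made-up waveforms sampled every 10 ps, one pair per test vector.
step_in = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
fast_out = [0.0, 0.0, 0.0, 0.2, 0.8, 1.0, 1.0, 1.0]
slow_out = [0.0, 0.0, 0.0, 0.0, 0.1, 0.4, 0.9, 1.0]

t_cd, t_pd = measure_delays([(step_in, fast_out), (step_in, slow_out)], dt=10.0)
print(f"t_cd = {t_cd:.1f} ps, t_pd = {t_pd:.1f} ps")
```

The quality of the result depends on the test vectors: the true contamination and propagation delays are only found if the vectors actually exercise the fastest and slowest paths.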

Contamination and Propagation Delays in Sequential Circuits

In sequential circuits, contamination delay is the minimum time for an input change to begin affecting the output. It determines the earliest moment at which new data can reach the next flip-flop or latch and disturb the value that element is still holding. Propagation delay is the maximum time for an input change to propagate fully through the circuit and stabilize at the output, which determines whether data arrives in time to be captured. Accurate assessment of both delays ensures that setup and hold times are met, preventing data corruption and maintaining synchronization in flip-flops and latches.
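A minimal sketch of these checks for a single flip-flop-to-flip-flop path, assuming zero clock skew. The field names (`t_ccq` and `t_pcq` for the clock-to-Q contamination and propagation delays of the launching flip-flop) and all numbers are illustrative assumptions, not values from a real cell library.

```python
from dataclasses import dataclass

@dataclass
class Path:
    t_ccq: float    # clock-to-Q contamination delay of the launching flop (ps)
    t_pcq: float    # clock-to-Q propagation delay of the launching flop (ps)
    t_cd: float     # contamination delay of the combinational logic (ps)
    t_pd: float     # propagation delay of the combinational logic (ps)
    t_setup: float  # setup time of the capturing flop (ps)
    t_hold: float   # hold time of the capturing flop (ps)

def setup_slack(p: Path, t_clk: float) -> float:
    """Positive when the latest data arrival meets setup before the next edge."""
    return t_clk - (p.t_pcq + p.t_pd + p.t_setup)

def hold_slack(p: Path) -> float:
    """Positive when the earliest data change arrives after the hold window."""
    return (p.t_ccq + p.t_cd) - p.t_hold

# Hypothetical path with a 300 ps clock period.
path = Path(t_ccq=30, t_pcq=80, t_cd=15, t_pd=150, t_setup=50, t_hold=60)
print("setup slack (ps):", setup_slack(path, t_clk=300))
print("hold slack (ps):", hold_slack(path))
```

With these numbers the path meets setup with 20 ps to spare but fails hold by 15 ps. Lengthening the clock period would not help, since the hold check does not depend on it; the usual remedy is to add delay on the short path.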

Practical Examples and Case Studies

Contamination delay, the minimum time for an output to begin changing after an input change, is critical in high-speed digital circuits such as microprocessors, where a path that is too fast can cause a register to capture the wrong value. Propagation delay, the maximum time for a signal to propagate through a logic gate or circuit, limits system performance in applications such as SRAM, where it contributes to access time and therefore to the length of read/write cycles. In DSP chips, tuning both delays together improves signal integrity and operating speed, balancing fast response with stable output transitions.
