Data preprocessing involves cleaning, transforming, and organizing raw data to enhance its quality and make it suitable for analysis or machine learning models. This crucial step helps eliminate errors, handle missing values, and normalize datasets, improving the accuracy and efficiency of your results. Explore the rest of the article to learn essential techniques and best practices for effective data preprocessing.

Table of Comparison

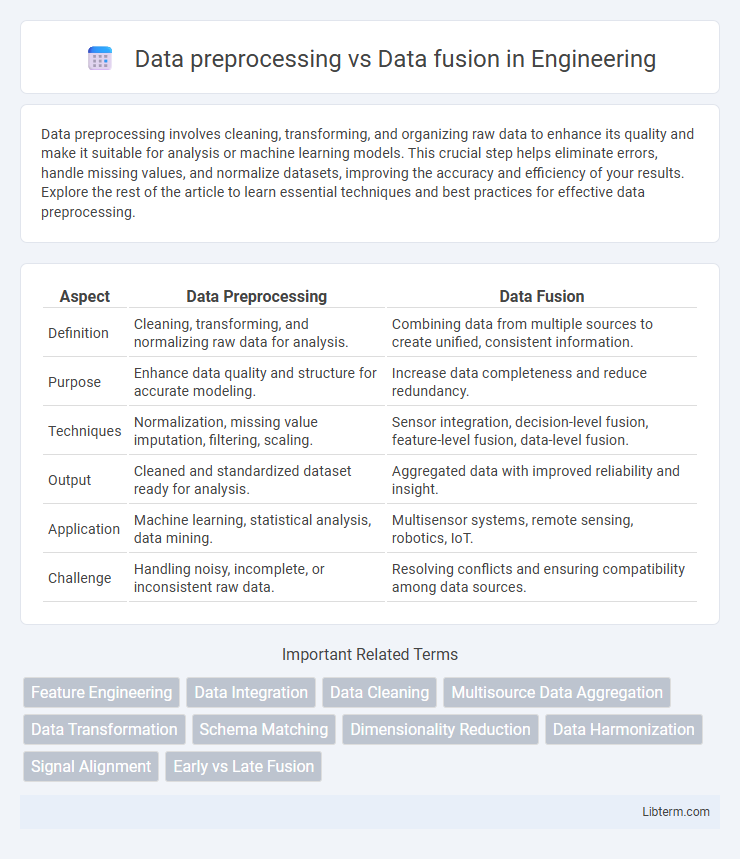

| Aspect | Data Preprocessing | Data Fusion |

|---|---|---|

| Definition | Cleaning, transforming, and normalizing raw data for analysis. | Combining data from multiple sources to create unified, consistent information. |

| Purpose | Enhance data quality and structure for accurate modeling. | Increase data completeness and reduce redundancy. |

| Techniques | Normalization, missing value imputation, filtering, scaling. | Sensor integration, decision-level fusion, feature-level fusion, data-level fusion. |

| Output | Cleaned and standardized dataset ready for analysis. | Aggregated data with improved reliability and insight. |

| Application | Machine learning, statistical analysis, data mining. | Multisensor systems, remote sensing, robotics, IoT. |

| Challenge | Handling noisy, incomplete, or inconsistent raw data. | Resolving conflicts and ensuring compatibility among data sources. |

Introduction to Data Preprocessing and Data Fusion

Data preprocessing involves cleaning, transforming, and organizing raw data to improve its quality and suitability for analysis, including tasks like normalization, handling missing values, and noise reduction. Data fusion combines information from multiple data sources or sensors to produce more consistent, accurate, and comprehensive datasets by integrating heterogeneous data types and resolving conflicts. Both processes enhance data reliability but serve different purposes: preprocessing prepares single datasets, while fusion merges multiple data inputs for enriched insights.

Defining Data Preprocessing

Data preprocessing involves cleaning, transforming, and organizing raw data to improve its quality and usability for analysis or modeling. It includes techniques such as normalization, missing value imputation, noise reduction, and feature extraction to ensure accurate and consistent input for machine learning algorithms. Data fusion, in contrast, integrates multiple data sources to produce more comprehensive and reliable information, often after preprocessing has been completed.

Defining Data Fusion

Data fusion is the process of integrating multiple data sources to produce more consistent, accurate, and useful information than that provided by any individual source alone. It involves techniques such as sensor fusion, feature-level fusion, and decision-level fusion to combine data at various stages of processing. Unlike data preprocessing, which primarily cleanses and transforms raw data, data fusion aims to synthesize complementary information for enhanced analysis and decision-making.

Key Goals: Data Preprocessing vs Data Fusion

Data preprocessing aims to clean, normalize, and transform raw data into a usable format, enhancing data quality and consistency for accurate analysis. Data fusion integrates data from multiple sources to create a unified, comprehensive dataset that improves decision-making and reduces uncertainty. Key goals for preprocessing include error correction and noise reduction, while fusion focuses on combining complementary information to enhance data richness and reliability.

Techniques Used in Data Preprocessing

Data preprocessing techniques include data cleaning, normalization, transformation, and feature extraction, which prepare raw data for analysis by removing noise, handling missing values, and scaling features. In contrast, data fusion integrates data from multiple heterogeneous sources to create a unified and consistent dataset for improved accuracy and reliability. Effective preprocessing enhances data quality, facilitating better fusion outcomes and more robust machine learning models.

Techniques Used in Data Fusion

Data fusion employs techniques such as feature-level fusion, decision-level fusion, and sensor-level fusion to integrate data from multiple sources for enhanced accuracy and reliability. Techniques like Kalman filtering, Bayesian inference, and Dempster-Shafer theory enable effective combination of heterogeneous data, improving overall data quality and insights. These methodologies contrast with data preprocessing, which primarily focuses on cleansing and transforming single-source data to prepare it for analysis.

Comparative Analysis: Benefits and Challenges

Data preprocessing enhances data quality by cleaning, normalizing, and transforming raw data, which improves model accuracy and efficiency but can be time-consuming and computationally intensive. Data fusion integrates data from multiple heterogeneous sources to provide a more comprehensive and reliable dataset, offering improved decision-making insights yet facing challenges like data heterogeneity and alignment complexity. Comparing both, preprocessing is essential for individual dataset refinement, while fusion adds value by combining diverse data streams, with benefits balanced against difficulties in scalability and integration.

Use Cases Across Industries

Data preprocessing, essential for cleaning and transforming raw data, enables accurate analytics and machine learning in industries like finance for fraud detection and healthcare for patient data normalization. Data fusion integrates multi-source data to create comprehensive insights, used in defense for surveillance systems and automotive for autonomous vehicle sensor integration. Both techniques optimize data quality and enhance decision-making but serve distinct roles across sectors like retail, telecommunications, and energy.

Integrating Data Preprocessing with Data Fusion

Integrating data preprocessing with data fusion enhances the quality and reliability of combined datasets by systematically cleaning, normalizing, and transforming raw data before fusion algorithms are applied. This integration ensures that heterogeneous data sources are harmonized, missing values and noise are reduced, and feature extraction is optimized for more accurate and robust fusion outcomes. Effective coupling of preprocessing techniques such as normalization, dimensionality reduction, and outlier detection with data fusion methods significantly improves decision-making in applications like sensor networks, medical diagnosis, and remote sensing.

Future Trends in Data Processing and Fusion

Future trends in data processing and fusion emphasize the integration of advanced AI algorithms and real-time analytics to enhance data quality and insights. Data preprocessing is evolving with automated cleaning, normalization, and feature extraction techniques powered by machine learning to handle complex, large-scale datasets. Data fusion advances focus on multi-source sensor integration, edge computing, and semantic data alignment to support intelligent decision-making in autonomous systems and IoT networks.

Data preprocessing Infographic

libterm.com

libterm.com