Context-sensitive language refers to a type of formal language whose rules depend on the surrounding symbols, allowing for more complex and precise grammar structures compared to context-free languages. These languages are essential in computational linguistics and programming language design, as they can model phenomena like nested dependencies and variable scope. Explore the rest of the article to understand how context-sensitive languages enhance language processing and computational theory.

Table of Comparison

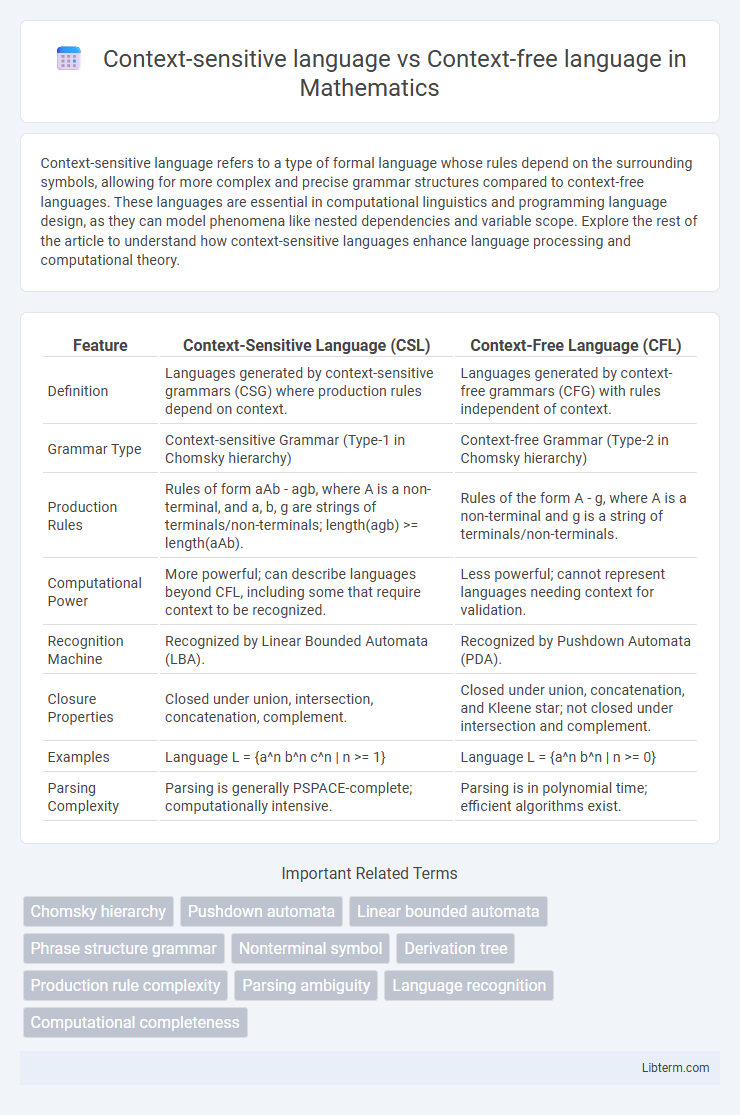

| Feature | Context-Sensitive Language (CSL) | Context-Free Language (CFL) |

|---|---|---|

| Definition | Languages generated by context-sensitive grammars (CSG) where production rules depend on context. | Languages generated by context-free grammars (CFG) with rules independent of context. |

| Grammar Type | Context-sensitive Grammar (Type-1 in Chomsky hierarchy) | Context-free Grammar (Type-2 in Chomsky hierarchy) |

| Production Rules | Rules of form aAb - agb, where A is a non-terminal, and a, b, g are strings of terminals/non-terminals; length(agb) >= length(aAb). | Rules of the form A - g, where A is a non-terminal and g is a string of terminals/non-terminals. |

| Computational Power | More powerful; can describe languages beyond CFL, including some that require context to be recognized. | Less powerful; cannot represent languages needing context for validation. |

| Recognition Machine | Recognized by Linear Bounded Automata (LBA). | Recognized by Pushdown Automata (PDA). |

| Closure Properties | Closed under union, intersection, concatenation, complement. | Closed under union, concatenation, and Kleene star; not closed under intersection and complement. |

| Examples | Language L = {a^n b^n c^n | n >= 1} | Language L = {a^n b^n | n >= 0} |

| Parsing Complexity | Parsing is generally PSPACE-complete; computationally intensive. | Parsing is in polynomial time; efficient algorithms exist. |

Introduction to Context-Sensitive and Context-Free Languages

Context-sensitive languages are generated by context-sensitive grammars, allowing production rules where the length of the output is at least the length of the input, enabling more complex language constructs compared to context-free languages. Context-free languages, produced by context-free grammars, use production rules where a single non-terminal is replaced regardless of the surrounding symbols, simplifying parsing and enabling efficient algorithms like pushdown automata. Understanding the distinction between these language classes is crucial in formal language theory, compiler design, and automata theory for modeling and processing syntactic structures.

Defining Context-Free Languages

Context-free languages are defined by grammars where production rules replace a single non-terminal symbol with a string of terminals and non-terminals, independent of the surrounding symbols, enabling efficient parsing methods like pushdown automata. Context-sensitive languages require production rules that consider the surrounding context of symbols, making them more powerful but computationally complex. The distinction lies in the generative capacity and parsing complexity, with context-free languages widely used in programming language syntax due to their balance of expressiveness and tractability.

Key Properties of Context-Free Languages

Context-free languages are characterized by their ability to be generated by context-free grammars, where production rules replace a single nonterminal with a string of terminals and nonterminals regardless of surrounding symbols. Key properties include closure under union, concatenation, and Kleene star operations, enabling efficient parsing algorithms like those based on pushdown automata. Unlike context-sensitive languages, context-free languages are less expressive but allow polynomial-time membership testing and simpler syntax analysis in programming languages.

Understanding Context-Sensitive Languages

Context-sensitive languages are a class of formal languages where the production rules in their grammars depend on the context of nonterminal symbols, allowing more complex structures than context-free languages. These languages are recognized by linear bounded automata, which operate within memory bounded by the input size, distinguishing them from the pushdown automata used for context-free languages. Understanding context-sensitive languages is crucial for modeling natural language constructs and certain programming language semantics that require context-dependent syntax verification.

Distinguishing Features of Context-Sensitive Languages

Context-sensitive languages are characterized by grammar rules where the production depends on the surrounding symbols, allowing rules of the form aAb - agb with non-empty g, contrasting with context-free languages that use simpler productions A - g. These languages can generate more complex patterns and require linear bounded automata for recognition, reflecting their higher computational power compared to pushdown automata used for context-free languages. Distinguishing features include the ability to enforce length constraints between symbols and handle multiple dependencies, making context-sensitive languages crucial for describing natural language syntax and advanced programming language constructs.

Grammar Rules: Context-Free vs Context-Sensitive

Context-free grammar (CFG) rules generate languages by replacing non-terminal symbols with strings of terminals and non-terminals independently of surrounding symbols, enabling simpler parsing algorithms and efficient syntax analysis. In contrast, context-sensitive grammar (CSG) rules allow productions where substitutions depend on the surrounding context, enabling representation of more complex language structures but increasing parsing complexity and computational resources required. Context-sensitive grammars can describe languages that context-free grammars cannot, such as those requiring agreement or hierarchy constraints dependent on neighboring elements.

Computational Power and Limitations

Context-sensitive languages are more computationally powerful than context-free languages, as they can describe languages requiring a linear bounded automaton, whereas context-free languages are recognized by pushdown automata. Context-free languages are limited in handling nested structures but fail to represent certain complexities like cross-serial dependencies, which context-sensitive languages can capture. The increased computational power of context-sensitive languages comes at the cost of higher complexity, making parsing and recognition more resource-intensive compared to the more efficient algorithms available for context-free languages.

Real-World Applications and Examples

Context-sensitive languages are crucial in natural language processing where meaning depends on context, enabling more accurate syntactic and semantic analysis for applications like machine translation and speech recognition. Context-free languages underpin the design of programming languages and compilers, facilitating efficient parsing of code structures in software development environments such as Java and Python. The complexity of context-sensitive grammars makes them less practical for many automated tools but essential for modeling human languages with their inherent contextual dependencies.

Parsing Techniques for Both Language Types

Parsing context-sensitive languages involves complex algorithms like linear bounded automata and generalized LR parsers, which can handle the dependencies and constraints dependent on context within the language. In contrast, context-free languages are typically parsed using simpler and more efficient techniques such as pushdown automata, LL(k), and LR(k) parsers that operate on hierarchical syntactic structures without requiring contextual information. The choice of parsing technique directly impacts the computational complexity and practical implementation of language processors in compilers and interpreters.

Summary and Implications for Language Theory

Context-sensitive languages require rules that depend on surrounding symbols, enabling them to model more complex syntax than context-free languages, which have rules independent of context and generate simpler structures. This distinction explains why context-sensitive languages can represent natural language features like agreement and variable binding, which context-free languages cannot adequately capture. Understanding these differences impacts language theory by emphasizing the limits of parsing algorithms and guiding the development of computational models suitable for natural language processing and formal language analysis.

Context-sensitive language Infographic

libterm.com

libterm.com