Gradient refers to the rate of change, or slope, of a function, graph, or surface, describing how one variable varies in relation to another. It is widely used in mathematics, physics, and machine learning to optimize functions and to understand trends or directions of change.

Table of Comparison

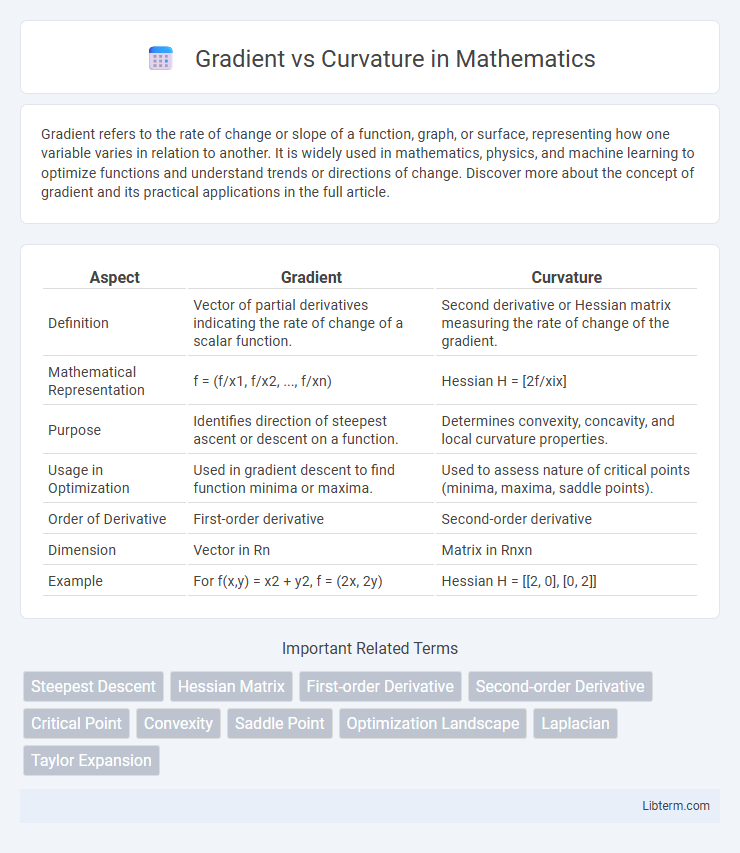

| Aspect | Gradient | Curvature |
|---|---|---|
| Definition | Vector of partial derivatives indicating the rate of change of a scalar function. | Second derivative or Hessian matrix measuring the rate of change of the gradient. |
| Mathematical Representation | $\nabla f = \left(\frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2}, \ldots, \frac{\partial f}{\partial x_n}\right)$ | Hessian $H = \left[\frac{\partial^2 f}{\partial x_i \, \partial x_j}\right]$ |
| Purpose | Identifies the direction of steepest ascent; its negative gives the direction of steepest descent. | Determines convexity, concavity, and local curvature properties. |
| Usage in Optimization | Used in gradient descent (or ascent) to find local minima (or maxima). | Used to assess the nature of critical points (minima, maxima, saddle points). |
| Order of Derivative | First-order derivative | Second-order derivative |
| Dimension | Vector in $\mathbb{R}^n$ | Matrix in $\mathbb{R}^{n \times n}$ |
| Example | For $f(x, y) = x^2 + y^2$, $\nabla f = (2x, 2y)$ | $H = \begin{pmatrix} 2 & 0 \\ 0 & 2 \end{pmatrix}$ |
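The Example row can be checked numerically. The sketch below estimates the gradient and the Hessian with central finite differences, a standard approximation chosen here only for illustration; the helper names `numerical_gradient` and `numerical_hessian` and the default step sizes `h` are hypothetical, not part of any library.

```python
from typing import Callable, List, Sequence

# Hypothetical helpers: finite-difference estimates, not a library API.
ScalarFn = Callable[[Sequence[float]], float]


def numerical_gradient(f: ScalarFn, x: Sequence[float], h: float = 1e-5) -> List[float]:
    """Estimate the gradient (first-order partials) with central differences."""
    grad = []
    for i in range(len(x)):
        forward, backward = list(x), list(x)
        forward[i] += h
        backward[i] -= h
        grad.append((f(forward) - f(backward)) / (2 * h))
    return grad


def _shifted(f: ScalarFn, x: Sequence[float], i: int, di: float, j: int, dj: float) -> float:
    """Evaluate f after shifting coordinate i by di and coordinate j by dj."""
    y = list(x)
    y[i] += di
    y[j] += dj
    return f(y)


def numerical_hessian(f: ScalarFn, x: Sequence[float], h: float = 1e-4) -> List[List[float]]:
    """Estimate the Hessian (second-order partials) with central differences.

    For i == j this reduces to the usual second difference with step 2h.
    """
    n = len(x)
    hess = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            hess[i][j] = (
                _shifted(f, x, i, h, j, h)
                - _shifted(f, x, i, h, j, -h)
                - _shifted(f, x, i, -h, j, h)
                + _shifted(f, x, i, -h, j, -h)
            ) / (4 * h * h)
    return hess


if __name__ == "__main__":
    f = lambda p: p[0] ** 2 + p[1] ** 2  # the table's example, f(x, y) = x^2 + y^2
    print(numerical_gradient(f, [1.0, 2.0]))  # close to (2x, 2y) = (2, 4)
    print(numerical_hessian(f, [1.0, 2.0]))   # close to [[2, 0], [0, 2]]
```

The gradient depends on the point (here $(1, 2)$), while the Hessian of this quadratic is the same constant matrix everywhere.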

Introduction to Gradient and Curvature

Gradient measures the rate and direction of change in a scalar field and is represented as a vector that points in the direction of steepest ascent, with a magnitude equal to the rate of increase in that direction. Curvature quantifies how sharply a curve or surface bends, describing its deviation from a straight line or a flat plane. Both concepts are fundamental in vector calculus and differential geometry and play critical roles in fields such as physics, computer vision, and optimization algorithms.
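To make "how sharply a curve bends" concrete, the sketch below applies the standard curvature formula for a plane curve $(x(t), y(t))$, $\kappa = \frac{|x'y'' - y'x''|}{(x'^2 + y'^2)^{3/2}}$, with the derivatives estimated by central differences (an assumption made for brevity; analytic derivatives work equally well). A circle of radius $r$ should give $1/r$ and a straight line should give 0. The function name `curvature_parametric` is hypothetical.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float]


def curvature_parametric(curve: Callable[[float], Point], t: float, h: float = 1e-4) -> float:
    """Curvature of the plane curve t -> (x(t), y(t)) at parameter t.

    Uses kappa = |x'y'' - y'x''| / (x'^2 + y'^2)^(3/2), with the first and
    second derivatives estimated by central finite differences of step h.
    """
    (x_fwd, y_fwd), (x_mid, y_mid), (x_bwd, y_bwd) = curve(t + h), curve(t), curve(t - h)
    dx, dy = (x_fwd - x_bwd) / (2 * h), (y_fwd - y_bwd) / (2 * h)
    ddx = (x_fwd - 2 * x_mid + x_bwd) / (h * h)
    ddy = (y_fwd - 2 * y_mid + y_bwd) / (h * h)
    speed_sq = dx * dx + dy * dy
    if speed_sq == 0:
        raise ValueError("curve has zero velocity at t; curvature undefined")
    return abs(dx * ddy - dy * ddx) / speed_sq ** 1.5


if __name__ == "__main__":
    radius = 2.0
    circle = lambda t: (radius * math.cos(t), radius * math.sin(t))
    print(curvature_parametric(circle, 0.7))  # close to 1 / radius = 0.5
    line = lambda t: (t, 3 * t + 1)
    print(curvature_parametric(line, 0.5))    # close to 0: a straight line does not bend
```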

Fundamental Definitions

Gradient represents the vector of partial derivatives indicating the direction and rate of fastest increase of a scalar function, fundamental in optimization and machine learning. Curvature describes the rate at which the gradient changes, often characterized by the Hessian matrix, providing critical information about the local shape of a function and its convexity or concavity. Understanding the interplay between gradient and curvature is essential for advanced optimization methods such as Newton's method and for assessing convergence and stability in numerical algorithms.
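Newton's method shows this interplay directly: each step solves $\nabla^2 f(x)\, s = \nabla f(x)$ and moves to $x - s$, so the curvature rescales the gradient. The two-variable sketch below is a minimal, unsafeguarded version (practical implementations usually add a line search or Hessian modification); `newton_minimize` and `solve_2x2` are hypothetical names, and the test functions are illustrative choices.

```python
import math
from typing import Callable, Tuple

Vec2 = Tuple[float, float]
Mat2 = Tuple[Vec2, Vec2]


def solve_2x2(h: Mat2, g: Vec2) -> Vec2:
    """Solve h @ s = g for s, with h a 2x2 matrix, via Cramer's rule."""
    (a, b), (c, d) = h
    det = a * d - b * c
    if det == 0:
        raise ValueError("singular Hessian: Newton step undefined")
    return ((d * g[0] - b * g[1]) / det, (a * g[1] - c * g[0]) / det)


def newton_minimize(
    grad: Callable[[Vec2], Vec2],
    hess: Callable[[Vec2], Mat2],
    x0: Vec2,
    tol: float = 1e-10,
    max_iter: int = 50,
) -> Tuple[Vec2, int]:
    """Pure Newton iteration; returns (final point, number of steps taken)."""
    x = x0
    for k in range(max_iter):
        g = grad(x)
        if math.hypot(*g) < tol:  # gradient ~ 0: stationary point reached
            return x, k
        step = solve_2x2(hess(x), g)  # curvature rescales the raw gradient
        x = (x[0] - step[0], x[1] - step[1])
    return x, max_iter


if __name__ == "__main__":
    # Illustrative quadratic f(x, y) = (x - 1)^2 + 3(y + 2)^2 + x*y
    grad = lambda p: (2 * (p[0] - 1) + p[1], 6 * (p[1] + 2) + p[0])
    hess = lambda p: ((2.0, 1.0), (1.0, 6.0))
    print(newton_minimize(grad, hess, (0.0, 0.0)))  # reaches (24/11, -26/11) after one step
```

On a quadratic the second-order model is exact, so a single Newton step lands on the minimizer; on non-quadratic functions the method converges quickly near a minimum with a positive definite Hessian but can misbehave farther away, which is why safeguards are common.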

Mathematical Representation

Gradient is a vector representing the first-order partial derivatives of a scalar function, indicating the direction of steepest ascent. Curvature quantifies the rate of change of the gradient, often expressed through second-order derivatives or, in multidimensional contexts, the Hessian matrix. Mathematically, the gradient $\nabla f(x)$ is a vector of partial derivatives, while curvature involves the Hessian $\nabla^2 f(x)$, which captures the function's local concavity or convexity.
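Written out in full, the two objects are as follows. The second-order Taylor expansion, a standard result added here for context, shows how the gradient supplies the linear term of a local model of $f$ around $x$ and the Hessian supplies the quadratic, curvature term; for twice continuously differentiable $f$ the Hessian is symmetric.

```latex
\begin{aligned}
\nabla f(x) &= \left( \frac{\partial f}{\partial x_1}, \ldots, \frac{\partial f}{\partial x_n} \right)^{\top},
\qquad
\nabla^2 f(x) = \left[ \frac{\partial^2 f}{\partial x_i \, \partial x_j} \right]_{i,j=1}^{n}, \\
f(x + d) &\approx f(x) + \nabla f(x)^{\top} d + \tfrac{1}{2}\, d^{\top} \nabla^2 f(x)\, d .
\end{aligned}
```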

Gradient: Meaning and Applications

Gradient represents the vector of partial derivatives indicating the direction of steepest ascent in a scalar field, which makes it central to optimizing functions in machine learning and computer vision. Gradient descent algorithms minimize a loss function by repeatedly moving the parameters a small step against the gradient, the procedure behind most neural network training. Applications include image processing, neural network training, and physics simulations where directional rates of change are essential.
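As a minimal sketch of gradient descent on a loss function, the code below fits a line $y \approx w x + b$ by minimizing the mean squared error with hand-written gradients. The data, learning rate, and iteration count are illustrative assumptions, and `fit_line_gd` is a hypothetical name; real training code typically relies on a framework's automatic differentiation instead.

```python
from typing import Sequence, Tuple


def fit_line_gd(
    xs: Sequence[float],
    ys: Sequence[float],
    learning_rate: float = 0.05,
    steps: int = 2000,
) -> Tuple[float, float]:
    """Fit y = w*x + b by gradient descent on the mean squared error."""
    n = len(xs)
    w, b = 0.0, 0.0
    for _ in range(steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of L(w, b) = (1/n) * sum(residual^2)
        grad_w = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        # Move against the gradient, i.e. along the steepest descent direction
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2 * x + 1 for x in xs]  # synthetic data on the line y = 2x + 1
    print(fit_line_gd(xs, ys))  # approaches (2.0, 1.0)
```

For this data the largest eigenvalue of the loss's Hessian is about 13.4, so a learning rate above roughly 0.15 would make the iteration diverge.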

Curvature: Meaning and Applications

Curvature measures the rate at which a curve deviates from being straight by quantifying its bending at each point. It is fundamental in fields such as differential geometry, computer graphics, and robotics for path planning and surface analysis. Applications include optimizing trajectories in autonomous vehicles, enhancing image reconstruction in medical imaging, and designing smoother animations in 3D modeling.
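For path planning, curvature is often estimated directly from sampled waypoints. One common discrete choice, swapped in here for illustration, is the Menger curvature: the reciprocal of the radius of the circle through three consecutive points, computed as $4 \cdot \text{area} / (|AB|\,|BC|\,|CA|)$. The function names and the example path are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def menger_curvature(a: Point, b: Point, c: Point) -> float:
    """Curvature (1 / circumradius) of the circle through three points.

    Returns 0.0 for collinear points, which lie on a 'circle' of infinite radius.
    """
    # Cross product of (b - a) and (c - a) equals twice the signed triangle area.
    cross = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    ab, bc, ca = math.dist(a, b), math.dist(b, c), math.dist(c, a)
    if ab * bc * ca == 0:
        raise ValueError("points must be distinct")
    return 2 * abs(cross) / (ab * bc * ca)  # 4 * area / product of side lengths


def path_curvatures(path: Sequence[Point]) -> List[float]:
    """Curvature estimate at each interior waypoint of a polyline."""
    return [menger_curvature(path[i - 1], path[i], path[i + 1]) for i in range(1, len(path) - 1)]


if __name__ == "__main__":
    # Hypothetical waypoints: a straight run followed by a turn
    path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.5), (3.5, 1.5)]
    print(path_curvatures(path))  # 0.0 on the straight run, positive through the turn
```

A planner could, for example, compare these values against a vehicle's minimum turning radius $r_{\min}$ by flagging waypoints with curvature above $1/r_{\min}$.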

Key Differences Between Gradient and Curvature

Gradient measures the rate and direction of change of a scalar function and is represented as a vector indicating the steepest ascent, while curvature quantifies how sharply a curve deviates from being straight or how sharply a surface bends at a point. In mathematical terms, the gradient is the first derivative of a scalar field, whereas curvature involves second (or higher) derivatives, capturing the local geometric bending. Gradients guide optimization by giving the locally steepest ascent direction, whose negative is the steepest descent direction that minimization algorithms follow, whereas curvature informs about concavity and stability, which is critical in fields like differential geometry and machine learning.
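The distinction is visible even in one dimension, where the curvature of the graph $y = f(x)$ is $\kappa = \frac{|f''(x)|}{(1 + f'(x)^2)^{3/2}}$: the slope $f'$ alone can never make $\kappa$ nonzero, only the second derivative can. The sketch below (hypothetical helper `graph_curvature`, with the derivatives passed in analytically) compares a steep straight line with a parabola.

```python
from typing import Callable

Deriv = Callable[[float], float]


def graph_curvature(df: Deriv, d2f: Deriv, x: float) -> float:
    """Curvature of the graph y = f(x) at x, given f' (df) and f'' (d2f)."""
    slope = df(x)
    return abs(d2f(x)) / (1.0 + slope * slope) ** 1.5


if __name__ == "__main__":
    # Steep line f(x) = 3x: large, constant gradient but no bending.
    print(graph_curvature(lambda x: 3.0, lambda x: 0.0, 1.0))  # 0.0
    # Parabola f(x) = x^2: gradient 2x, second derivative 2.
    print(graph_curvature(lambda x: 2 * x, lambda x: 2.0, 0.0))  # 2.0 at the vertex
    print(graph_curvature(lambda x: 2 * x, lambda x: 2.0, 1.0))  # smaller: 2 / 5**1.5
```

The parabola's curvature shrinks away from the vertex even though its second derivative stays 2, because a larger slope stretches the same bending over a longer arc.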

Real-World Examples

Gradient measures the rate of change in a quantity, such as temperature or elevation, and is crucial for navigation in hilly terrains or optimizing machine learning algorithms. Curvature describes how sharply a surface or curve bends, seen in road design where smooth curves ensure driver safety or in medical imaging to analyze anatomical structures. Real-world navigation systems rely on gradient to calculate steepness, while curvature informs engineering decisions to create stable bridges and ergonomic products.
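For the navigation example, steepness is the magnitude of the elevation gradient, usually quoted as a percent grade. The sketch assumes a hypothetical elevation grid `z` (in metres) with uniform `spacing` between samples, rows running along y and columns along x, and uses central differences at an interior cell; `terrain_slope` is a hypothetical name, not a GIS library function.

```python
import math
from typing import List, Tuple


def terrain_slope(z: List[List[float]], spacing: float, row: int, col: int) -> Tuple[float, float]:
    """Return (percent grade, uphill direction in degrees from +x) at an interior cell.

    Assumed layout: z[row][col] is elevation; columns step along x and rows
    along y, both `spacing` apart. Derivatives use central differences.
    """
    dz_dx = (z[row][col + 1] - z[row][col - 1]) / (2 * spacing)
    dz_dy = (z[row + 1][col] - z[row - 1][col]) / (2 * spacing)
    grade = 100 * math.hypot(dz_dx, dz_dy)                  # |gradient| as a percentage
    uphill_deg = math.degrees(math.atan2(dz_dy, dz_dx))     # direction of steepest ascent
    return grade, uphill_deg


if __name__ == "__main__":
    spacing = 10.0  # metres between grid samples (assumed)
    # Hypothetical tilted plane: elevation rises 1 m per 10 m toward +x
    z = [[0.1 * spacing * col for col in range(3)] for _ in range(3)]
    print(terrain_slope(z, spacing, 1, 1))  # about (10.0, 0.0): a 10% grade, uphill toward +x
```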

Importance in Optimization

Gradient measures the rate of change of a function and directs the optimization process toward local minima or maxima by indicating the steepest descent or ascent. Curvature, represented by the Hessian matrix, provides insight into the function's second-order behavior, revealing the local shape and aiding in adjusting step sizes for more accurate convergence. Understanding both gradient and curvature enhances optimization algorithms by balancing speed and precision, crucial for solving complex, high-dimensional problems effectively.
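A minimal sketch of how curvature limits the step size: on the quadratic $f(x) = \tfrac{1}{2} \sum_i c_i x_i^2$ with all $c_i > 0$, whose Hessian is $\operatorname{diag}(c_1, \ldots, c_n)$, each gradient-descent update multiplies $x_i$ by $(1 - \eta c_i)$, so a fixed step $\eta$ converges only if $\eta < 2 / \max_i c_i$. The curvature values, step sizes, and the name `gd_quadratic` are illustrative assumptions.

```python
from typing import List, Sequence


def gd_quadratic(curvatures: Sequence[float], x0: Sequence[float], step: float, iters: int) -> List[float]:
    """Gradient descent on f(x) = 0.5 * sum(c_i * x_i**2), whose gradient is (c_i * x_i)."""
    x = list(x0)
    for _ in range(iters):
        x = [xi - step * c * xi for xi, c in zip(x, curvatures)]
    return x


if __name__ == "__main__":
    curvatures = [1.0, 100.0]  # one gently and one sharply curved direction
    print(gd_quadratic(curvatures, [1.0, 1.0], step=0.01, iters=100))   # stable; flat direction still far from 0
    print(gd_quadratic(curvatures, [1.0, 1.0], step=0.025, iters=100))  # 0.025 > 2/100: sharp direction blows up
```

The gently curved direction would tolerate a far larger step, and this mismatch is what curvature-aware methods, such as Newton-type methods that rescale the gradient by the Hessian, aim to correct.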

Common Misconceptions

Gradient and curvature are often misunderstood as interchangeable, but they represent distinct aspects of multivariable functions: the gradient indicates the direction and rate of fastest increase, while curvature measures the rate of change of the gradient itself, capturing how the function bends. A common misconception is that a zero gradient implies a globally flat surface, whereas it merely denotes a stationary point that might be a minimum, maximum, or saddle point, which curvature helps to classify. Confusion also arises when curvature is interpreted solely as the bending of physical curves, ignoring its role as second-derivative information in optimization and differential geometry.
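The second-derivative test that curvature provides can be sketched for two variables: at a point where the gradient vanishes, the signs of the Hessian's eigenvalues classify the point, and a zero eigenvalue leaves the test inconclusive. The eigenvalues use the closed form for a symmetric 2×2 matrix; the function name and the tolerance `tol` are hypothetical choices.

```python
import math


def classify_stationary_point(h_xx: float, h_xy: float, h_yy: float, tol: float = 1e-12) -> str:
    """Second-derivative test at a point where the gradient is zero.

    Takes the entries of the symmetric Hessian [[h_xx, h_xy], [h_xy, h_yy]]
    and classifies the point by the signs of its two eigenvalues.
    """
    mean = (h_xx + h_yy) / 2
    radius = math.hypot((h_xx - h_yy) / 2, h_xy)
    low, high = mean - radius, mean + radius  # eigenvalues, low <= high
    if abs(low) <= tol or abs(high) <= tol:
        return "inconclusive"  # a zero eigenvalue: second-order information is not enough
    if low > 0:
        return "local minimum"  # positive definite: curves upward in every direction
    if high < 0:
        return "local maximum"  # negative definite: curves downward in every direction
    return "saddle point"  # mixed signs: up in one direction, down in another


if __name__ == "__main__":
    print(classify_stationary_point(2, 0, 2))   # f = x^2 + y^2 at the origin: local minimum
    print(classify_stationary_point(2, 0, -2))  # f = x^2 - y^2 at the origin: saddle point
    print(classify_stationary_point(0, 0, 0))   # f = x^4 + y^4 at the origin: inconclusive
```

The last case is a genuine minimum that the test cannot detect, so a zero gradient combined with a degenerate Hessian calls for higher-order or global analysis.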

Conclusion and Future Perspectives

Gradient methods provide efficient first-order optimization with low computational cost, making them suitable for high-dimensional problems. Curvature-based techniques leverage second-order information to achieve faster convergence and better accuracy, especially near optima, but at higher computational expense. Future perspectives emphasize hybrid algorithms that combine gradient efficiency with curvature awareness, alongside adaptive schemes for scalable and robust optimization in machine learning and scientific computing.

libterm.com