Mixing, in ergodic theory, is the property that a measure-preserving dynamical system loses statistical memory of its initial state: where the system started and where it is after many steps become asymptotically independent. It is strictly stronger than ergodicity, which only requires that time averages equal space averages. The rest of this article compares the two properties, their formal definitions, and their roles in statistical mechanics and chaos theory.

Table of Comparison

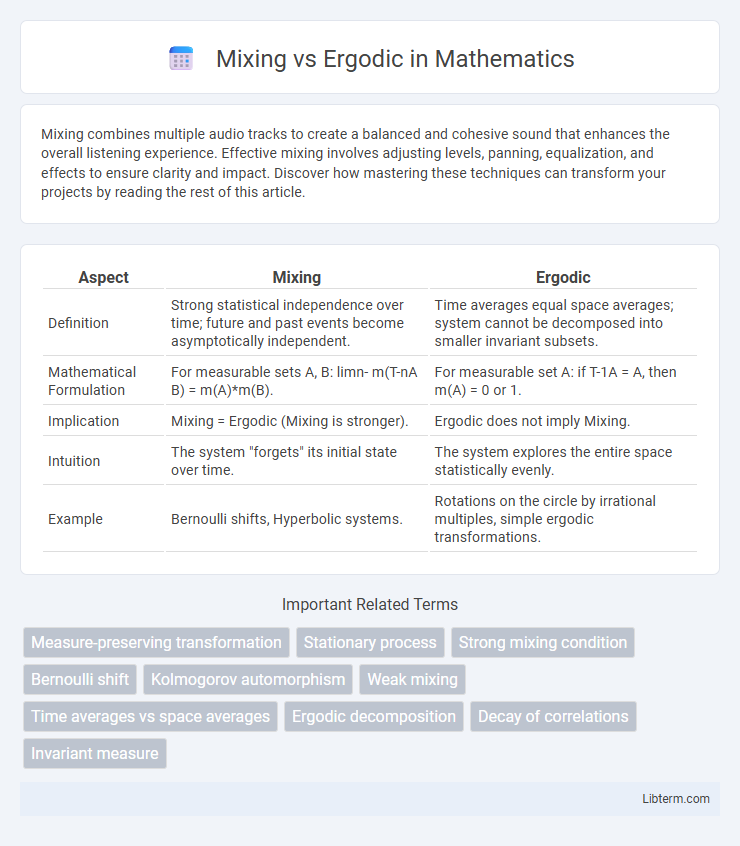

| Aspect | Mixing | Ergodic |
|---|---|---|
| Definition | Strong statistical independence over time; future and past events become asymptotically independent. | Time averages equal space averages; system cannot be decomposed into smaller invariant subsets. |
| Mathematical Formulation | For measurable sets \(A, B\): \(\lim_{n \to \infty} \mu(T^{-n}A \cap B) = \mu(A)\mu(B)\). | For a measurable set \(A\): if \(T^{-1}A = A\), then \(\mu(A) = 0\) or \(1\). |
| Implication | Mixing implies ergodic (mixing is stronger). | Ergodic does not imply mixing. |
| Intuition | The system "forgets" its initial state over time. | The system explores the entire space statistically evenly. |
| Example | Bernoulli shifts, hyperbolic systems. | Rotations of the circle by irrational multiples, simple ergodic transformations. |

Introduction to Mixing and Ergodic Concepts

Mixing and ergodicity describe two strengths of statistical regularity in measure-preserving dynamical systems. In an ergodic system, time averages equal space averages, so long-term behavior can be read off a single typical trajectory. In a mixing system, the state at a late time additionally becomes statistically independent of the initial state. Both concepts are central to statistical mechanics, probability theory, and chaos theory.

Historical Background: Origins in Dynamical Systems

Ergodic ideas trace back to Boltzmann's ergodic hypothesis in nineteenth-century statistical mechanics and became rigorous mathematics in the early 1930s, when George Birkhoff and John von Neumann proved the first ergodic theorems. The mixing property, which is stronger than ergodicity, was formalized to describe systems in which any initial distribution with a density relative to the invariant measure eventually spreads over the phase space according to that measure. These foundational developments established a rigorous mathematical framework for analyzing long-term behavior in deterministic systems, influencing statistical mechanics and chaos theory.

Defining Mixing in Measure Theory

Mixing in measure theory is a strong form of asymptotic independence: the measure of the intersection of one set with the preimage of another under many iterations of a transformation converges to the product of their individual measures. Formally, a measure-preserving transformation \( T \) on a probability space \((X, \mathcal{B}, \mu)\) is mixing if for all measurable sets \(A, B \in \mathcal{B}\), the limit \(\lim_{n \to \infty} \mu(T^{-n}A \cap B) = \mu(A) \mu(B)\) holds. Read probabilistically, the events \(x \in B\) and \(T^n x \in A\) become independent as \(n \to \infty\), so long-term behavior under \(T\) loses all memory of initial conditions. This is stronger than ergodicity, which only requires every invariant set (\(T^{-1}A = A\)) to have measure 0 or 1.
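As a minimal sketch of the mixing limit, the snippet below evaluates \(\mu(T^{-n}A \cap B)\) for the doubling map \(T(x) = 2x \bmod 1\) on \([0, 1)\) with Lebesgue measure. The map, the choice \(A = B = [0, 1/2)\), and the function name `doubling_preimage_overlap` are illustrative assumptions, not part of the definition above. Because these sets are dyadic intervals, counting points on a dyadic grid gives the measure exactly.

```python
from fractions import Fraction


def doubling_preimage_overlap(n: int, bits: int = 12) -> Fraction:
    """Measure of T^{-n}A ∩ B for T(x) = 2x mod 1 and A = B = [0, 1/2).

    Each grid index k stands for the cell [k / 2**bits, (k + 1) / 2**bits).
    For n < bits every such cell lies entirely inside or entirely outside
    both B and T^{-n}A, so counting cells gives the exact Lebesgue measure.
    """
    if not 0 <= n < bits:
        raise ValueError("need 0 <= n < bits")
    size = 1 << bits
    half = size >> 1
    count = 0
    for k in range(size):
        in_b = k < half                       # x lies in B
        in_preimage = (k << n) % size < half  # T^n x lies in A
        if in_b and in_preimage:
            count += 1
    return Fraction(count, size)


for n in range(4):
    print(n, doubling_preimage_overlap(n))
```

For this particular choice the overlap equals \(\mu(A) = 1/2\) at \(n = 0\) and the product \(\mu(A)\mu(B) = 1/4\) for every \(n \ge 1\); for general sets the product is only reached in the limit.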

Understanding Ergodicity: Key Principles

Ergodicity is a fundamental property in dynamical systems theory: for every integrable observable, the time average along almost every trajectory equals the space average over the entire phase space. Unlike mixing, ergodicity does not require any loss of memory of initial conditions; it only ensures that a typical trajectory visits every measurable set with a long-run frequency equal to that set's measure. This is a measure-theoretic statement and is not the same as an orbit being topologically dense. Key ingredients in understanding ergodicity include invariant measures, measure-preserving transformations, and the property that almost every point's orbit is representative of the system's statistical behavior.
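The equality of time and space averages can be checked numerically on a simple ergodic system. The sketch below assumes the circle rotation \(T(x) = x + \alpha \bmod 1\) with the irrational angle \(\alpha = (\sqrt{5} - 1)/2\) and takes the indicator of \([0, 1/2)\) as the observable; the helper names are hypothetical and chosen only for illustration.

```python
import math


def time_average(f, x0: float, alpha: float, steps: int) -> float:
    """Average f along the orbit x0, x0 + alpha, x0 + 2*alpha, ... (mod 1)."""
    total = 0.0
    for k in range(steps):
        total += f((x0 + k * alpha) % 1.0)
    return total / steps


def indicator_first_half(x: float) -> float:
    """Observable f = indicator of [0, 1/2); its space average is 1/2."""
    return 1.0 if x < 0.5 else 0.0


ALPHA = (math.sqrt(5) - 1) / 2  # assumed irrational rotation angle

for x0 in (0.1, 0.7):
    print(x0, time_average(indicator_first_half, x0, ALPHA, 20_000))
```

Both starting points should give a time average close to the space average \(\int f \, d\mu = 1/2\), which is the behavior the ergodic theorem predicts for almost every initial condition.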

Mathematical Formulation: Mixing vs Ergodic

Mixing means that the measure of the intersection of one set with the preimage of another converges to the product of their measures, expressed mathematically as \(\lim_{n \to \infty} \mu(T^{-n}A \cap B) = \mu(A)\mu(B)\) for all measurable sets \(A\) and \(B\). Ergodicity requires that any set invariant under the transformation \(T\) (that is, \(T^{-1}A = A\)) has measure zero or one. By Birkhoff's ergodic theorem, this is equivalent to the time average equaling the space average: \(\lim_{n \to \infty} \frac{1}{n} \sum_{k=0}^{n-1} f(T^k x) = \int f \, d\mu\) for every integrable function \(f\) and \(\mu\)-almost every \(x\). Mixing is the stronger property, guaranteeing decay of correlations and asymptotic independence of states at widely separated times, while ergodicity guarantees only that almost all orbits share the same long-term statistical behavior.
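That mixing implies ergodicity follows in one line from the two formulations. Suppose \(T\) is mixing and \(A\) is invariant, so \(T^{-1}A = A\). Applying the mixing limit with \(B = A\) gives

\[
T^{-n}A = A \ \text{for all } n \ge 0
\quad\Longrightarrow\quad
\mu(A) = \mu(T^{-n}A \cap A) \xrightarrow[n \to \infty]{} \mu(A)\,\mu(A),
\]

so \(\mu(A) = \mu(A)^2\), which forces \(\mu(A) = 0\) or \(\mu(A) = 1\). Every invariant set is therefore trivial, which is exactly the ergodicity condition.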

Physical Interpretations and Real-World Examples

Mixing describes systems in which the state at late times becomes statistically independent of the initial state, reflecting complete loss of memory. A common illustration is turbulent fluid flow, where tracer particles end up spread evenly through the fluid. Ergodic systems ensure that time averages equal ensemble averages but may retain correlations between distant times. The classical physical motivation is the ergodic hypothesis of statistical mechanics, which assumes that the trajectory of a gas explores all accessible microstates. The two concepts capture different degrees of statistical randomness in thermodynamics and statistical mechanics and help explain phenomena such as diffusion and the approach to equilibrium.

Key Differences Between Mixing and Ergodic Behavior

Mixing behavior in dynamical systems means that any two subsets of the phase space become thoroughly interwoven over time: \(\mu(T^{-n}A \cap B)\) itself converges to \(\mu(A)\mu(B)\). Ergodic behavior ensures that time averages of a system's trajectory equal space averages with respect to an invariant measure, which is equivalent to the same convergence holding only on average over \(n\), that is, \(\frac{1}{n}\sum_{k=0}^{n-1} \mu(T^{-k}A \cap B) \to \mu(A)\mu(B)\). Mixing therefore implies ergodicity, whereas ergodicity alone does not imply mixing: an irrational rotation of the circle is ergodic, yet the overlap \(\mu(T^{-n}A \cap B)\) keeps oscillating and never settles.
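The gap between the two notions can be made concrete with a circle rotation, which is ergodic but not mixing. The sketch below assumes \(T(x) = x + \alpha \bmod 1\) with \(\alpha = (\sqrt{5} - 1)/2\) and \(A = B = [0, 1/2)\); for two half-circle arcs the overlap has a closed form, so no sampling is needed. The function name `rotation_overlap` is illustrative.

```python
import math


def rotation_overlap(n: int, alpha: float) -> float:
    """mu(T^{-n}A ∩ A) for the rotation T(x) = x + alpha mod 1, A = [0, 1/2).

    T^{-n}A is the arc A shifted by -n*alpha. Two half-circle arcs whose
    start points are a circular distance d apart overlap in length 1/2 - d.
    """
    shift = (n * alpha) % 1.0
    d = min(shift, 1.0 - shift)
    return 0.5 - d


ALPHA = (math.sqrt(5) - 1) / 2  # assumed irrational rotation angle

overlaps = [rotation_overlap(n, ALPHA) for n in range(5000)]
cesaro_mean = sum(overlaps) / len(overlaps)
late_spread = max(overlaps[1000:]) - min(overlaps[1000:])

print("Cesaro mean of overlaps:", cesaro_mean)
print("spread of overlaps for n >= 1000:", late_spread)
```

The running average of the overlaps approaches \(\mu(A)\mu(B) = 1/4\), as ergodicity requires, while the individual overlaps continue to range over almost the whole interval from 0 to 1/2, so the mixing limit does not exist.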

Applications in Statistical Mechanics

Mixing and ergodic properties are fundamental in statistical mechanics for describing the long-term behavior of dynamical systems. Ergodic systems ensure time averages converge to ensemble averages, enabling equilibrium statistical mechanics to predict macroscopic properties from microscopic dynamics. Mixing, as a stronger property, guarantees that correlations between observables decay over time, though not at any particular rate, and is commonly invoked to justify thermodynamic irreversibility and the approach to equilibrium in many-body systems.
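Decay of correlations can be observed directly on a toy system. The sketch below assumes the doubling map \(T(x) = 2x \bmod 1\) with Lebesgue measure and the observable \(f(x) = x\), and estimates the autocorrelation \(C(n) = \int f(T^n x) f(x) \, dx - \left(\int f \, dx\right)^2\) with a midpoint rule. It is a stand-in for illustration, not a model of a many-body system, and the function name is hypothetical.

```python
def doubling_autocorrelation(n: int, cells: int = 1 << 16) -> float:
    """Midpoint-rule estimate of C(n) for T(x) = 2x mod 1 and f(x) = x.

    The grid size is a power of two, so for n <= 16 the jumps of T^n fall
    on cell boundaries and each cell sees a smooth integrand.
    """
    total = 0.0
    for k in range(cells):
        x = (k + 0.5) / cells
        tx = (x * (1 << n)) % 1.0  # T^n x = 2^n x mod 1
        total += x * tx
    return total / cells - 0.25    # subtract (integral of f)^2 = 1/4


for n in range(6):
    # Second column: the closed form 2^{-n} / 12 for this map and observable.
    print(n, doubling_autocorrelation(n), 2.0 ** (-n) / 12)
```

For this map and observable the correlation halves at every step. That exponential rate is a feature of the example; mixing by itself only guarantees that \(C(n) \to 0\).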

Importance in Chaos Theory and Stochastic Processes

Mixing and ergodic properties are fundamental in chaos theory and stochastic processes, characterizing the unpredictability and long-term statistical behavior of dynamical systems. Mixing ensures that system states become statistically independent over time, crucial for modeling complex chaotic behavior and justifying assumptions of randomness in physical processes. Ergodicity guarantees that time averages converge to ensemble averages, enabling accurate predictions and statistical descriptions from single trajectories in both chaotic systems and random processes.

Conclusion: Implications for Future Research

Mixing implies asymptotic statistical independence and hence decay of correlations, while ergodicity guarantees only the convergence of long-term averages; neither property by itself specifies a rate. Future research should focus on quantifying the transition between ergodic and mixing regimes, for example through rates of correlation decay, using spectral analysis and probabilistic methods. Improved understanding of these distinctions can enhance models in statistical mechanics, dynamical systems, and stochastic processes.


libterm.com
