The tensor product combines two vector spaces V and W into a new vector space V ⊗ W, whose dimension is the product of theirs and in which bilinear interactions between elements of V and W can be handled with ordinary linear algebra. It is fundamental in quantum mechanics, multilinear algebra, and computer science for modeling composite systems and multi-way relationships. Read on to see how tensor products compare with inner products and where each one is used.

Table of Comparison

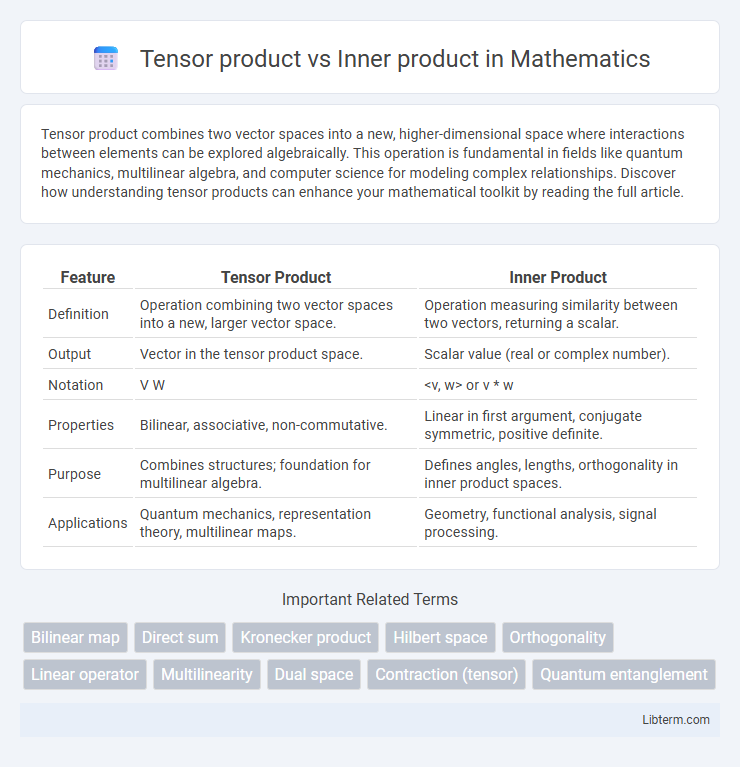

| Feature | Tensor Product | Inner Product |
|---|---|---|
| Definition | Operation that combines two vector spaces V and W into a new, larger vector space V ⊗ W. | Operation that assigns a scalar to a pair of vectors from the same space, measuring how closely they align. |
| Output | An element (tensor) of V ⊗ W, such as u ⊗ w. | A scalar (real or complex number). |
| Notation | V ⊗ W for spaces; u ⊗ w for vectors | ⟨v, w⟩, or v · w for the Euclidean dot product |
| Properties | Bilinear; associative up to natural isomorphism; not commutative (for V = W, u ⊗ w ≠ w ⊗ u in general, although V ⊗ W ≅ W ⊗ V). | Linear in one argument (conjugate-linear in the other over ℂ), conjugate symmetric, positive definite. |
| Purpose | Combines spaces; turns bilinear maps into linear ones; foundation of multilinear algebra. | Defines lengths, angles, and orthogonality in inner product spaces. |
| Applications | Quantum mechanics, representation theory, multilinear maps. | Geometry, functional analysis, signal processing. |

Introduction to Tensor Products and Inner Products

Tensor products combine vector spaces into larger spaces in which multilinear maps and composite interactions become representable, while inner products assign a scalar to each pair of vectors and thereby define geometric notions such as length and angle. The tensor product of vector spaces V and W, denoted V ⊗ W, is a new space whose dimension (for finite-dimensional spaces) equals dim V · dim W, and every bilinear map out of V × W corresponds to a unique linear map out of V ⊗ W. An inner product ⟨·, ·⟩ is a positive-definite symmetric bilinear form on a real vector space (a positive-definite Hermitian form on a complex one); it supplies the orthogonality and norm calculations that are crucial in Hilbert spaces and quantum mechanics.

Fundamental Concepts: Definitions and Notation

The tensor product of vectors \( u \in V \) and \( v \in W \), written \( u \otimes v \), is an element of the space \( V \otimes W \); that space is spanned by all such products, and its general elements are sums of them. The inner product \( \langle u, u' \rangle \) of two vectors \( u, u' \) in the same inner product space is a scalar that encodes their lengths and the angle between them; it is linear in one argument, conjugate symmetric (simply symmetric over the reals), and positive definite. The two concepts serve distinct purposes: tensor products enable multilinear mappings and the construction of composite spaces, while inner products provide metric structure and orthogonality within a single vector space.
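
To make the difference in output concrete, here is a minimal sketch in plain Python, assuming vectors are given as coordinate lists in fixed bases. The helper names `tensor` and `inner` are hypothetical, chosen for illustration only; `inner` follows the physics convention of conjugating the first argument.

```python
# Hypothetical helpers: vectors are plain lists of coordinates in a fixed basis.

def tensor(u, w):
    """Coordinates of u ⊗ w in the basis e_i ⊗ f_j, ordered row-major (i, then j)."""
    return [ui * wj for ui in u for wj in w]

def inner(u, v):
    """Standard inner product, conjugate-linear in the first argument.
    For real entries this is the ordinary dot product."""
    if len(u) != len(v):
        raise ValueError("an inner product needs two vectors from the same space")
    return sum(a.conjugate() * b for a, b in zip(u, v))

u = [1, 2]        # u in V = R^2
w = [3, 4, 5]     # w in W = R^3
print(tensor(u, w))       # [3, 4, 5, 6, 8, 10]: dim V * dim W = 6 coordinates
print(inner(u, [0, 1]))   # 2: a single scalar
```

Note that `tensor` accepts vectors of different lengths (from different spaces), while `inner` rejects them, which mirrors the definitions above.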

Mathematical Framework: Scalars, Vectors, and Spaces

The tensor product extends the idea of multiplication from scalars to vectors: it produces an element of a new tensor space V ⊗ W that is built from, but distinct from, the Cartesian product V × W (the Cartesian product has dimension dim V + dim W, the tensor product dim V · dim W). An inner product is a scalar-valued function that maps two vectors from the same vector space to a single scalar, encoding length and angle through its bilinearity (sesquilinearity over ℂ), symmetry, and positive definiteness. Both operations rest on an underlying scalar field and the algebraic structure of vector spaces; the tensor product turns multilinear maps into linear ones, while the inner product supports geometric interpretation through norms and orthogonality.
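
A worked count, assuming V = ℝ² and W = ℝ³ purely for illustration, shows why the tensor product is not the Cartesian product: dimensions add in one and multiply in the other, and scalars can move freely between the factors of a tensor but not between the entries of a pair.

```latex
\begin{aligned}
\dim(V \times W) &= \dim V + \dim W = 2 + 3 = 5, \\
\dim(V \otimes W) &= \dim V \cdot \dim W = 2 \cdot 3 = 6, \\
(2v) \otimes w &= v \otimes (2w) = 2\,(v \otimes w),
  \quad \text{whereas } (2v, w) \neq (v, 2w) \text{ in } V \times W \text{ for } v, w \neq 0.
\end{aligned}
```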

Geometric Interpretation of Inner Product

Geometrically, the inner product measures the projection of one vector onto another: for real vectors, ⟨u, v⟩ = ‖u‖ ‖v‖ cos θ, where θ is the angle between them, so it quantifies how closely they align. The tensor product, by contrast, combines vectors into an element of a higher-dimensional space and implies no projection. The inner product induces length (‖u‖ = √⟨u, u⟩), angle, and orthogonality (⟨u, v⟩ = 0), and therefore distances ‖u − v‖ in a vector space. This geometric interpretation is central in computer graphics, quantum mechanics, and data science for understanding vector relationships and transformations.
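
The geometric quantities above can be computed directly from the standard real inner product. This is a minimal sketch assuming vectors in ℝⁿ stored as Python lists; the function names `dot`, `norm`, `angle`, and `project` are illustrative, not from any library.

```python
import math

def dot(u, v):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Length induced by the inner product: ||u|| = sqrt(<u, u>)."""
    return math.sqrt(dot(u, u))

def angle(u, v):
    """Angle in radians, solved from <u, v> = ||u|| ||v|| cos(theta)."""
    c = dot(u, v) / (norm(u) * norm(v))
    return math.acos(max(-1.0, min(1.0, c)))  # clamp against rounding error

def project(u, v):
    """Orthogonal projection of u onto the line spanned by v: (<u, v> / <v, v>) v."""
    k = dot(u, v) / dot(v, v)
    return [k * x for x in v]

u, v = [3.0, 4.0], [1.0, 0.0]
print(norm(u))                              # 5.0
print(math.degrees(angle([1.0, 1.0], v)))   # about 45 degrees
print(project(u, v))                        # [3.0, 0.0]
```

The clamp in `angle` is a small defensive choice: rounding can push the cosine slightly outside [-1, 1], where `math.acos` would raise an error.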

Algebraic Structure of Tensor Product

The tensor product creates a new algebraic structure: V ⊗ W is the vector space through which every bilinear map on V × W factors uniquely as a linear map (its universal property). Unlike the inner product, which maps pairs of vectors to scalars and induces geometric notions like length and angle, the tensor product is itself a vector space: if {e_i} is a basis of V and {f_j} is a basis of W, then {e_i ⊗ f_j} is a basis of V ⊗ W, so dimensions multiply. This construction is fundamental in multilinear algebra, representation theory, and quantum computing for modeling composite systems and higher-order relationships.
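
In coordinates, the universal property says a bilinear map B(v, w) = Σ A_ij v_i w_j becomes a linear function of the coefficients of v ⊗ w. The sketch below checks this for one arbitrary coefficient array; the storage layout (an m-by-n nested list indexed by the basis e_i ⊗ f_j) and the function names are assumptions made for illustration.

```python
# Elements of V ⊗ W (dim V = m, dim W = n) are stored as m-by-n nested lists:
# T[i][j] is the coefficient of the basis element e_i ⊗ f_j.

def outer(v, w):
    """Pure tensor v ⊗ w: coefficient v[i] * w[j] on e_i ⊗ f_j."""
    return [[vi * wj for wj in w] for vi in v]

def bilinear(A, v, w):
    """A bilinear map on V x W: B(v, w) = sum over i, j of A[i][j] * v[i] * w[j]."""
    return sum(A[i][j] * v[i] * w[j] for i in range(len(v)) for j in range(len(w)))

def induced_linear(A, T):
    """The linear map on V ⊗ W induced by B: T -> sum over i, j of A[i][j] * T[i][j]."""
    return sum(A[i][j] * T[i][j] for i in range(len(A)) for j in range(len(A[0])))

A = [[1, 0, 2], [0, 3, -1]]   # arbitrary coefficients, m = 2, n = 3
v, w = [2, -1], [1, 4, 0]
print(bilinear(A, v, w), induced_linear(A, outer(v, w)))   # -10 -10
print(len(A) * len(A[0]))     # 6 basis elements e_i ⊗ f_j
```

`bilinear` is not linear in the pair (v, w), but `induced_linear` is linear in T, which is exactly the trade the tensor product makes.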

Key Differences: Linear Algebraic Perspective

The tensor product combines two vector spaces into a higher-dimensional space, capturing multilinear relationships and building new vector spaces from existing ones. The inner product is a bilinear form (sesquilinear over ℂ) that assigns a scalar to a pair of vectors in the same space, defining length, angle, and orthogonality. The core difference is what each operation returns: the inner product maps a pair of vectors to a scalar, whereas the tensor product maps a pair of vectors to a vector in the tensor product space, which for ℝⁿ ⊗ ℝⁿ has n² coordinates rather than one.
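
One way to see the dimensional gap is that, for real vectors, the inner product is a contraction (the trace) of the tensor product: ⟨u, v⟩ = tr(u ⊗ v). The sketch below, with illustrative helper names, shows that the n² numbers of u ⊗ v determine ⟨u, v⟩, but not the other way around.

```python
def outer(u, v):
    """u ⊗ v for u, v in R^n, stored as an n-by-n array (n * n numbers)."""
    return [[a * b for b in v] for a in u]

def trace(T):
    """Contraction of a square array: the sum of its diagonal entries."""
    return sum(T[i][i] for i in range(len(T)))

def dot(u, v):
    """Real inner product on R^n: a single number."""
    return sum(a * b for a, b in zip(u, v))

u, v = [1, 2, 3], [4, 5, 6]
T = outer(u, v)
print(len(T) * len(T[0]))   # 9 numbers in u ⊗ v
print(dot(u, v), trace(T))  # 32 32: contracting u ⊗ v recovers <u, v>
# A different tensor contracts to the same scalar, so <u, v> cannot recover u ⊗ v.
print(trace(outer([32, 0, 0], [1, 0, 0])))   # 32
```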

Applications in Physics and Engineering

Tensor products represent the states of composite quantum systems: the state space of two subsystems is the tensor product of their individual state spaces, and states that cannot be written as a single product u ⊗ w are entangled. Inner products underlie the computation of probabilities and expectation values in Hilbert spaces (by the Born rule, the probability of finding a normalized state ψ in a normalized state φ is |⟨φ, ψ⟩|²), and they are equally central to analyzing wavefunctions and signals. In engineering, tensor products are used to model multidimensional data in control systems and robotics, while inner products are essential in signal correlation and optimization algorithms.
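
A two-qubit example ties both operations together. This sketch assumes the computational-basis ordering |00⟩, |01⟩, |10⟩, |11⟩ and uses hypothetical helper names; it builds a product state with the Kronecker product, applies the Born rule with an inner product, and checks entanglement with the standard 2×2 criterion that a state (c00, c01, c10, c11) factors as a ⊗ b exactly when c00·c11 = c01·c10.

```python
import math

def kron(a, b):
    """Tensor (Kronecker) product of two state vectors in the computational basis."""
    return [x * y for x in a for y in b]

def braket(phi, psi):
    """<phi|psi>, conjugate-linear in the first argument (physics convention)."""
    return sum(x.conjugate() * y for x, y in zip(phi, psi))

def is_product_state(c, tol=1e-12):
    """Two-qubit amplitudes (c00, c01, c10, c11) factor as a ⊗ b iff c00*c11 == c01*c10."""
    return abs(c[0] * c[3] - c[1] * c[2]) < tol

s = 1 / math.sqrt(2)
psi = kron([1, 0], [s, s])            # |0> ⊗ |+>, four amplitudes
ket01 = kron([1, 0], [0, 1])          # basis state |01>
print(abs(braket(ket01, psi)) ** 2)   # Born rule: probability of outcome 01, about 0.5
bell = [s, 0, 0, s]                   # (|00> + |11>) / sqrt(2)
print(is_product_state(psi), is_product_state(bell))   # True False
```

The determinant test only applies to two qubits; larger systems need more general tools, which this sketch does not attempt.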

Computational Uses in Machine Learning

Tensor products construct higher-dimensional feature spaces by combining vector spaces, which supports richer data representations in deep learning and underlies tensor factorization methods. Inner products measure similarity and projection in vector spaces, forming the foundation of support vector machines and kernel methods, where a kernel function evaluates an inner product in a (possibly very high-dimensional) feature space without building that space explicitly. Tensor products help model interactions in multi-modal data fusion, while inner products are cheap to compute, which keeps high-dimensional machine learning workflows fast.
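
The kernel connection can be checked numerically. For the homogeneous degree-2 polynomial kernel, chosen here purely as an illustration, the identity ⟨x, y⟩² = ⟨x ⊗ x, y ⊗ y⟩ holds, so the kernel computes an inner product in the n²-dimensional space ℝⁿ ⊗ ℝⁿ at the cost of an n-dimensional dot product. Function names are hypothetical.

```python
def dot(x, y):
    """Standard inner product on R^n."""
    return sum(a * b for a, b in zip(x, y))

def tensor(x, y):
    """Flattened x ⊗ y: all pairwise products x_i * y_j."""
    return [a * b for a in x for b in y]

def poly2_kernel(x, y):
    """Homogeneous degree-2 polynomial kernel k(x, y) = <x, y>^2."""
    return dot(x, y) ** 2

x, y = [1.0, 2.0, 3.0], [0.5, -1.0, 2.0]
explicit = dot(tensor(x, x), tensor(y, y))   # inner product in the 9-dim space R^3 ⊗ R^3
print(poly2_kernel(x, y), explicit)          # 20.25 20.25
```

The kernel never forms the 9-dimensional vectors; for large n, that saving is the point of the kernel trick.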

Common Misconceptions and Pitfalls

The tensor product and the inner product are fundamentally different operations in linear algebra, with distinct purposes and properties. A common misconception is to treat the tensor product as if it behaved like an inner product: expecting it to be commutative or to return a scalar, when in fact it returns an element of a higher-dimensional space and u ⊗ w ≠ w ⊗ u in general. Confusing the two causes errors in quantum mechanics and multilinear algebra, where the tensor product combines vector spaces while the inner product measures the similarity of vectors in one space and returns a scalar.
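
The commutativity pitfall is easy to demonstrate with basis vectors of ℝ². In this sketch (helper names are illustrative), e1 ⊗ e2 and e2 ⊗ e1 are different elements, while the real inner product is symmetric. The hypothetical `swap` function reorders coordinates to show that u ⊗ v and v ⊗ u are still related by a fixed rearrangement, which is why V ⊗ W ≅ W ⊗ V as spaces.

```python
def tensor(u, v):
    """Flattened u ⊗ v in the basis e_i ⊗ f_j (row-major: i, then j)."""
    return [a * b for a in u for b in v]

def dot(u, v):
    """Real inner product: symmetric, returns a scalar."""
    return sum(a * b for a, b in zip(u, v))

def swap(t, m, n):
    """Reorder an element of V ⊗ W (dim V = m, dim W = n) into coordinates of W ⊗ V."""
    return [t[i * n + j] for j in range(n) for i in range(m)]

e1, e2 = [1, 0], [0, 1]
print(tensor(e1, e2))              # [0, 1, 0, 0]  (e1 ⊗ e2)
print(tensor(e2, e1))              # [0, 0, 1, 0]  (e2 ⊗ e1): order matters
print(dot(e1, e2), dot(e2, e1))    # 0 0: symmetric, scalar output
print(swap(tensor(e1, e2), 2, 2) == tensor(e2, e1))   # True
```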

Conclusion: Choosing Between Tensor and Inner Products

The choice between tensor and inner products depends on the operation and context at hand. The tensor product constructs higher-dimensional vector spaces and is essential for multilinear algebra, quantum computing, and complex data representations; the inner product provides a scalar measure of vector similarity, orthogonality, and projection in Euclidean spaces and, more generally, in any inner product space. Tensor products combine separate vector spaces into a unified framework, supporting transformations and interactions in fields like physics and machine learning, while inner products capture metric properties and the geometry of a vector space, making them indispensable in optimization and signal processing.

libterm.com