Linearly independent vectors in a vector space are vectors none of which can be written as a linear combination of the others; as a result, every vector in their span has a unique representation as a combination of them. Understanding this concept is crucial for solving systems of linear equations, determining the dimension of vector spaces, and simplifying mathematical models. Explore the rest of the article to deepen your grasp of linear independence, how it differs from orthonormality, and its applications in various fields.

Table of Comparison

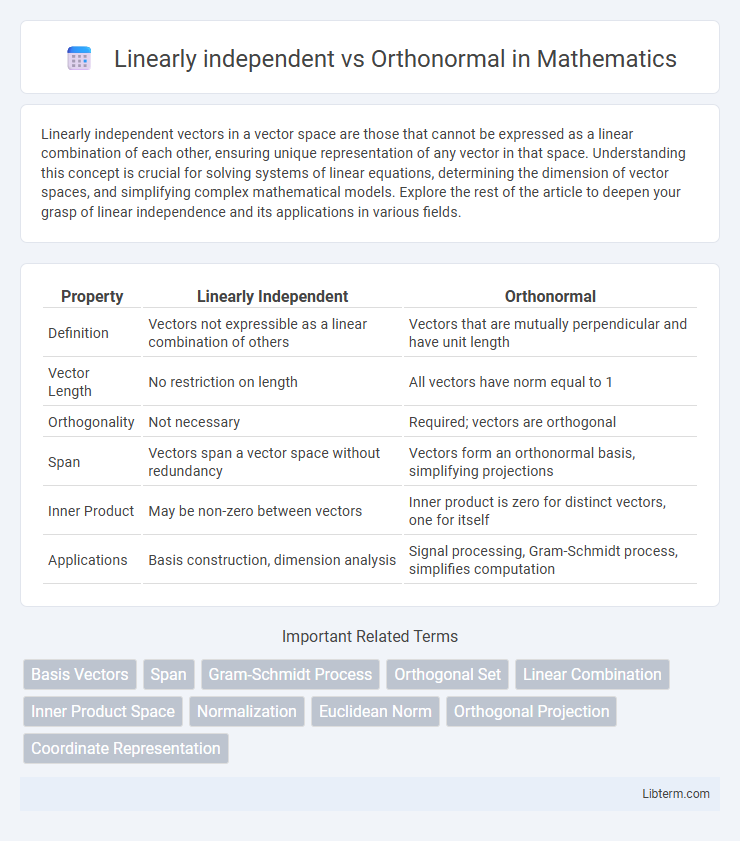

| Property | Linearly Independent | Orthonormal |
|---|---|---|
| Definition | No vector is a linear combination of the others | Vectors are mutually perpendicular and each has unit length |
| Vector Length | No restriction on length (but the zero vector cannot appear) | Every vector has norm 1 |
| Orthogonality | Not required | Required; distinct vectors are orthogonal |
| Span | Vectors span their subspace without redundancy | Vectors form an orthonormal basis of their span, simplifying projections |
| Inner Product | May be nonzero between distinct vectors | Zero for distinct vectors, one for a vector with itself |
| Applications | Basis construction, dimension analysis | Signal processing, output of the Gram-Schmidt process, simplified computation |

Understanding Linear Independence

Linear independence means that no vector in a set can be expressed as a linear combination of the others; equivalently, each vector in the span of the set has exactly one list of coefficients. Orthonormal sets strengthen this condition by adding unit length and mutual perpendicularity, which simplifies computations in inner product spaces. Linear independence is central to determining the dimension of a vector space, which equals the number of vectors in any basis, and to constructing bases for efficient vector decomposition.
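To make the link between independence and dimension concrete, here is a minimal Python sketch, not a library API; `rank` and `is_linearly_independent` are hypothetical helper names. It assumes vectors are given as tuples of integers or fractions and runs Gaussian elimination with exact `Fraction` arithmetic. The rank it returns is the dimension of the span, and the vectors are independent exactly when the rank equals their count.

```python
from fractions import Fraction


def rank(vectors):
    """Return the rank of a list of vectors (treated as rows) via exact Gaussian elimination."""
    # Copy into Fractions so elimination is exact (no floating-point round-off).
    rows = [[Fraction(x) for x in v] for v in vectors]
    if not rows:
        return 0
    n_cols = len(rows[0])
    r = 0  # number of pivot rows found so far
    for col in range(n_cols):
        # Find a row at or below r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate this column from every row below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def is_linearly_independent(vectors):
    # Independent exactly when the rank (dimension of the span) equals the number of vectors.
    return rank(vectors) == len(vectors)


print(rank([(1, 2, 3), (4, 5, 6), (7, 8, 9)]))   # 2
print(is_linearly_independent([(1, 0), (1, 1)]))  # True
```

For floating-point data, a tolerance-based method such as a singular value decomposition is the usual choice instead of exact elimination.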

Defining Orthonormality

A set of vectors is orthonormal when it is both orthogonal and normalized: each vector has unit length and is perpendicular to every other vector in the set. Whereas linear independence only requires that no vector be a linear combination of the others, orthonormality imposes a strict inner product condition: the dot product of any two distinct vectors is zero, and the dot product of each vector with itself is one. This simplifies projections and changes of coordinates, because the coordinates of a vector in an orthonormal basis are just its inner products with the basis vectors, and lengths and angles computed from those coordinates match those of the original vectors.
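As a small illustration of why orthonormal bases make coordinates cheap, the Python sketch below assumes the orthonormal basis \( \frac{1}{\sqrt{2}}(1,1), \frac{1}{\sqrt{2}}(-1,1) \) of \(\mathbb{R}^2\) and an arbitrary test vector `x = (3, 1)`, both chosen for illustration. Each coordinate is a single inner product, summing the scaled basis vectors recovers `x`, and the coordinate vector has the same length as `x` (up to floating-point round-off).

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# Assumed orthonormal basis of R^2: the standard basis rotated by 45 degrees.
s = 1 / math.sqrt(2)
basis = [(s, s), (-s, s)]

x = (3.0, 1.0)  # arbitrary vector chosen for illustration

# With an orthonormal basis, each coordinate is just an inner product: c_i = <x, u_i>.
coords = [dot(x, u) for u in basis]

# Rebuild x from its coordinates: x = sum_i c_i * u_i.
rebuilt = tuple(sum(c * u[k] for c, u in zip(coords, basis)) for k in range(2))

# The length of x equals the length of its coordinate vector.
print(math.isclose(math.hypot(*x), math.hypot(*coords)))  # True
```

With a basis that is merely linearly independent, finding the coordinates would instead require solving a linear system.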

Key Differences Between Linear Independence and Orthonormality

Linearly independent vectors are defined as a set in which no vector can be expressed as a linear combination of the others, so each vector in their span has a unique representation. Orthonormal vectors satisfy linear independence and, in addition, have unit length and are mutually perpendicular. The key difference is that orthonormality imposes stricter conditions, normalization plus orthogonality, whereas linear independence only requires non-redundancy, with no constraints on length or angle. Every orthonormal set is therefore linearly independent, but not every linearly independent set is orthonormal.
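A quick check of a set that is linearly independent but not orthonormal: the pair (1, 0), (1, 1) is an example chosen for illustration, and `dot` and `det2` are hypothetical helper names. For two vectors in \(\mathbb{R}^2\), a nonzero determinant is equivalent to linear independence.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def det2(u, v):
    # For two vectors in R^2, a nonzero determinant means they are linearly independent.
    return u[0] * v[1] - u[1] * v[0]


a, b = (1.0, 0.0), (1.0, 1.0)

independent = det2(a, b) != 0
orthogonal = dot(a, b) == 0
unit_length = all(math.isclose(math.sqrt(dot(v, v)), 1.0) for v in (a, b))

print(independent, orthogonal, unit_length)  # True False False
```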

Mathematical Criteria for Linear Independence

Linear independence requires that no vector in a set can be expressed as a linear combination of the others; equivalently, the only solution to the equation \( c_1 \mathbf{v}_1 + c_2 \mathbf{v}_2 + \cdots + c_n \mathbf{v}_n = \mathbf{0} \) is \( c_1 = c_2 = \cdots = c_n = 0 \). Orthonormal vectors are always linearly independent because they are mutually orthogonal (distinct vectors have inner product zero) and nonzero (each has unit length). This criterion is fundamental in vector space theory and underlies the construction of bases, while orthonormality adds geometric constraints that simplify computations and improve numerical stability.
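The step behind "orthonormal implies independent" can be written out. Suppose \( \mathbf{u}_1, \ldots, \mathbf{u}_n \) are orthonormal in a real inner product space, so \( \langle \mathbf{u}_i, \mathbf{u}_j \rangle = \delta_{ij} \) (1 when \( i = j \), 0 otherwise), and that \( c_1 \mathbf{u}_1 + \cdots + c_n \mathbf{u}_n = \mathbf{0} \). Taking the inner product of both sides with a fixed \( \mathbf{u}_j \) gives:

```latex
0 = \langle \mathbf{0}, \mathbf{u}_j \rangle
  = \Big\langle \sum_{i=1}^{n} c_i \mathbf{u}_i,\ \mathbf{u}_j \Big\rangle
  = \sum_{i=1}^{n} c_i \langle \mathbf{u}_i, \mathbf{u}_j \rangle
  = \sum_{i=1}^{n} c_i \, \delta_{ij}
  = c_j
```

Since \( j \) was arbitrary, every coefficient is zero. The argument only uses orthogonality and \( \langle \mathbf{u}_j, \mathbf{u}_j \rangle \neq 0 \), so any orthogonal set of nonzero vectors is linearly independent as well.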

Mathematical Criteria for Orthonormal Sets

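Orthonormal sets require each vector to have unit length and to be orthogonal to every other vector in the set, meaning the inner product between distinct vectors is zero and the inner product of a vector with itself is one. In contrast, linearly independent sets only require that no vector can be expressed as a linear combination of the others, without any constraints on length or orthogonality. The mathematical criterion for an orthonormal set \( \mathbf{u}_1, \ldots, \mathbf{u}_n \) is typically expressed as \( \langle \mathbf{u}_i, \mathbf{u}_j \rangle = \delta_{ij} \), where \( \delta_{ij} \) is the Kronecker delta (equal to 1 when \( i = j \) and 0 otherwise). Equivalently, for real vectors placed as the columns of a matrix \( U \), the condition is \( U^{\mathsf{T}} U = I \).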
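The condition \( \langle \mathbf{u}_i, \mathbf{u}_j \rangle = \delta_{ij} \) (1 when \( i = j \), 0 otherwise) can be tested pair by pair. This Python sketch uses a hypothetical helper `is_orthonormal` with an assumed tolerance `tol`, because vectors such as \( \frac{1}{\sqrt{2}}(1,1) \) are not exactly representable in floating point.

```python
import math


def is_orthonormal(vectors, tol=1e-9):
    """Check <u_i, u_j> == delta_ij for every pair, within a floating-point tolerance."""
    for i, u in enumerate(vectors):
        for j, v in enumerate(vectors):
            inner = sum(a * b for a, b in zip(u, v))
            expected = 1.0 if i == j else 0.0
            if abs(inner - expected) > tol:
                return False
    return True


s = 1 / math.sqrt(2)
print(is_orthonormal([(1, 0, 0), (0, 1, 0), (0, 0, 1)]))  # True
print(is_orthonormal([(s, s), (-s, s)]))                  # True
print(is_orthonormal([(1, 0), (1, 1)]))                   # False
```

Checking every pair amounts to verifying that the Gram matrix of inner products is the identity.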

Importance in Vector Spaces

Linearly independent vectors form the foundation of vector spaces by ensuring that no vector can be represented as a combination of the others, which is crucial for determining the dimension and constructing bases. Orthonormal sets, a special case of linearly independent sets, simplify calculations through their unit length and mutual perpendicularity, making operations such as projections and coordinate transformations more efficient. An orthonormal basis also improves numerical stability and computational ease in applications such as signal processing, quantum mechanics, and machine learning.

Examples Illustrating Linear Independence

Vectors \(\mathbf{v}_1 = (1, 2, 3)\), \(\mathbf{v}_2 = (4, 5, 6)\), and \(\mathbf{v}_3 = (7, 8, 9)\) are linearly dependent, since \(\mathbf{v}_3 = 2\mathbf{v}_2 - \mathbf{v}_1\) is a linear combination of \(\mathbf{v}_1\) and \(\mathbf{v}_2\). In contrast, vectors \(\mathbf{u}_1 = (1, 0, 0)\), \(\mathbf{u}_2 = (0, 1, 0)\), and \(\mathbf{u}_3 = (0, 0, 1)\) form an orthonormal set, and hence a linearly independent one, because they are mutually perpendicular and each has unit length. Linear independence requires that no vector in the set can be written as a linear combination of the others, while orthonormality also demands unit length and mutual orthogonality.
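The claims in this example can be verified directly. The sketch below uses hypothetical helpers `combo` and `det3` to check that \( 2\mathbf{v}_2 - \mathbf{v}_1 = \mathbf{v}_3 \) and to compute 3x3 determinants; a zero determinant means the three vectors are linearly dependent, and a nonzero one means they are independent.

```python
def combo(c1, v1, c2, v2):
    # Componentwise c1*v1 + c2*v2.
    return tuple(c1 * a + c2 * b for a, b in zip(v1, v2))


def det3(a, b, c):
    # Determinant of the 3x3 matrix with rows a, b, c (cofactor expansion along the first row).
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))


v1, v2, v3 = (1, 2, 3), (4, 5, 6), (7, 8, 9)
u1, u2, u3 = (1, 0, 0), (0, 1, 0), (0, 0, 1)

print(combo(-1, v1, 2, v2) == v3)  # True: v3 = 2*v2 - v1
print(det3(v1, v2, v3))            # 0, so the v's are dependent
print(det3(u1, u2, u3))            # 1, so the u's are independent
```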

Examples Demonstrating Orthonormality

Orthonormal vectors are not only linearly independent but also have unit length and are mutually perpendicular, such as the standard basis vectors \( \mathbf{e}_1 = (1,0,0) \), \( \mathbf{e}_2 = (0,1,0) \), and \( \mathbf{e}_3 = (0,0,1) \) in \(\mathbb{R}^3\). Another example is the set \( \{ \frac{1}{\sqrt{2}}(1,1), \frac{1}{\sqrt{2}}(-1,1) \} \) in \(\mathbb{R}^2\), whose vectors are orthogonal (their dot product is zero) and each has norm one. While linearly independent vectors can have arbitrary lengths and angles, orthonormal sets simplify computations in linear algebra, signal processing, and machine learning because of these geometric properties.
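The orthonormality of \( \frac{1}{\sqrt{2}}(1,1) \) and \( \frac{1}{\sqrt{2}}(-1,1) \) can be checked exactly. Pulling out the common factor \( \frac{1}{\sqrt{2}} \) multiplies every inner product by \( \frac{1}{2} \), so the sketch below works with the integer vectors (1, 1) and (-1, 1) and Python's `Fraction`; the names `dot`, `scale_sq`, and `gram` are illustrative.

```python
from fractions import Fraction


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


# Each vector is (1/sqrt(2)) * w, so <(1/sqrt(2)) w_i, (1/sqrt(2)) w_j> = (1/2) * <w_i, w_j>.
# Working with the unscaled integer vectors w keeps the check exact.
scale_sq = Fraction(1, 2)
w1, w2 = (1, 1), (-1, 1)

# Gram matrix of all pairwise inner products of the scaled vectors.
gram = [[scale_sq * dot(a, b) for b in (w1, w2)] for a in (w1, w2)]
print(gram == [[1, 0], [0, 1]])  # True: the Gram matrix is the identity
```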

Applications in Mathematics and Engineering

Linear independence underpins the dimension of a vector space, the question of whether a system of linear equations has a unique solution, and the rank of a matrix (the maximum number of linearly independent rows or columns). Orthonormal sets, whose vectors are both orthogonal and normalized, simplify computations in signal processing, quantum mechanics, and computer graphics and help keep them numerically stable. In engineering, principal component analysis (PCA) compresses data by expressing it in an orthonormal basis of principal directions, and orthogonal basis functions are used in some finite element formulations to improve conditioning.

Choosing Between Linearly Independent and Orthonormal Sets

Choosing between linearly independent and orthonormal sets depends on the application. Orthonormal sets simplify computations by guaranteeing both orthogonality and unit norm, which is crucial in fields such as signal processing and quantum mechanics. Linearly independent sets provide the minimal requirement of non-redundancy (a linearly independent set that also spans a space is a basis for it) but lack the computational advantages of orthonormality. When numerical stability and easy projections are priorities, orthonormal bases are preferred; linearly independent sets suffice for determining the dimension of a subspace.
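When a problem supplies a linearly independent set but the computation would benefit from an orthonormal one, the Gram-Schmidt process (listed in the comparison table) converts one into the other while preserving the span. The Python sketch below is a minimal version using the modified Gram-Schmidt variant, which projects the running remainder rather than the original vector; `gram_schmidt` and its `tol` threshold for detecting dependence are assumptions for illustration, not a library API.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def gram_schmidt(vectors, tol=1e-12):
    """Modified Gram-Schmidt: turn linearly independent vectors into an orthonormal set."""
    basis = []
    for v in vectors:
        w = list(v)
        # Remove the component of the running remainder w along each orthonormal vector so far.
        for q in basis:
            c = dot(w, q)
            w = [wi - c * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        # A (near-)zero remainder means v was a combination of the earlier vectors.
        if norm < tol:
            raise ValueError("input vectors are linearly dependent")
        basis.append(tuple(wi / norm for wi in w))
    return basis


q1, q2 = gram_schmidt([(1.0, 0.0), (1.0, 1.0)])
print(q1, q2)  # (1.0, 0.0) (0.0, 1.0)
```

In exact arithmetic the classical and modified variants give the same result; the modified one is usually preferred in floating point because it loses less orthogonality.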

libterm.com
