The max norm measures the largest absolute value among the components of a vector, \(\|x\|_\infty = \max_i |x_i|\), and is a standard tool in optimization and numerical analysis. In machine learning it helps keep algorithms stable by bounding the size of parameters or parameter updates during training. The rest of this article compares the max norm with the spectral norm for matrices and looks at where each is used in practice.

Table of Comparison

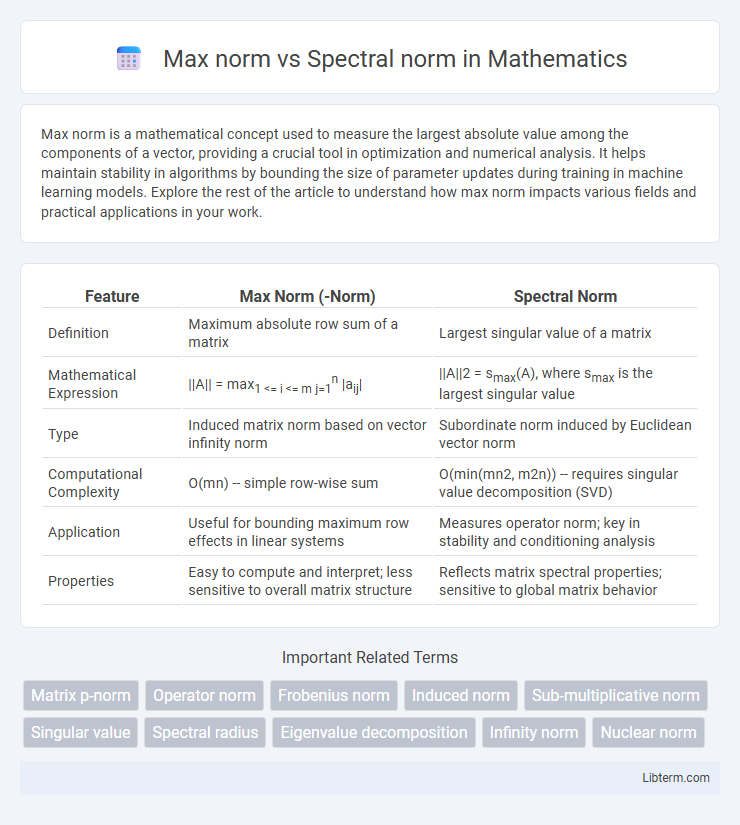

| Feature | Max Norm (∞-Norm) | Spectral Norm |
|---|---|---|
| Definition | Maximum absolute row sum of a matrix | Largest singular value of a matrix |
| Mathematical Expression | \(\lVert A\rVert_\infty = \max_{1 \le i \le m} \sum_{j=1}^{n} \lvert a_{ij}\rvert\) | \(\lVert A\rVert_2 = \sigma_{\max}(A)\), where \(\sigma_{\max}\) is the largest singular value |
| Type | Induced matrix norm based on the vector infinity norm | Subordinate norm induced by the Euclidean vector norm |
| Computational Complexity | \(O(mn)\): simple row-wise sum | \(O(\min(mn^2, m^2n))\): requires singular value decomposition (SVD) |
| Application | Useful for bounding maximum row effects in linear systems | Measures operator norm; key in stability and conditioning analysis |
| Properties | Easy to compute and interpret; less sensitive to overall matrix structure | Reflects matrix spectral properties; sensitive to global matrix behavior |

Introduction to Matrix Norms

Matrix norms measure the size of a matrix, and the max norm and the spectral norm serve distinct purposes in linear algebra. The name "max norm" is used for two different quantities: the entry-wise max norm, which is the largest absolute value among all matrix entries, and the induced infinity norm, which is the maximum absolute row sum and is the quantity listed in the comparison table above. The two agree only in special cases, such as a matrix with a single column. The spectral norm, defined as the largest singular value of a matrix, captures the maximum stretching effect of the matrix on a vector and plays a central role in stability analysis and operator theory.

Defining Max Norm and Spectral Norm

The max norm, in the entry-wise sense used in the rest of this article, is the maximum absolute value among all entries of a matrix, so it captures the largest individual element. The spectral norm is the largest singular value of the matrix, reflecting its maximum stretching factor in Euclidean space. These norms give distinct perspectives on matrix size: the max norm focuses on entry-wise magnitude and the spectral norm on operator behavior.

Mathematical Formulations

The entry-wise max norm is \(\|A\|_{\max} = \max_{i,j} |a_{ij}|\) for matrix elements \(a_{ij}\). It should not be confused with the induced norms \(\|A\|_\infty = \max_i \sum_j |a_{ij}|\) (maximum absolute row sum) and \(\|A\|_1 = \max_j \sum_i |a_{ij}|\) (maximum absolute column sum). The spectral norm, also known as the operator 2-norm, is the largest singular value \(\sigma_{\max}(A)\), calculated as \(\|A\|_2 = \sqrt{\lambda_{\max}(A^*A)}\), where \(\lambda_{\max}\) denotes the largest eigenvalue of \(A^*A\). The max norm and the spectral norm measure different aspects of matrix magnitude: the max norm gives element-wise bounds and the spectral norm gives the maximal amplification factor in vector transformations.
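
As a small worked example (the matrix \(A\) below is chosen purely for illustration and does not come from the original text), the four quantities can be computed by hand and compared:

\[
A = \begin{pmatrix} 3 & 0 \\ 4 & 5 \end{pmatrix}, \qquad
\|A\|_{\max} = 5, \qquad
\|A\|_\infty = \max(3,\ 9) = 9, \qquad
\|A\|_1 = \max(7,\ 5) = 7 .
\]

\[
A^{*}A = \begin{pmatrix} 25 & 20 \\ 20 & 25 \end{pmatrix}, \qquad
\lambda_{\max}(A^{*}A) = 45, \qquad
\|A\|_2 = \sqrt{45} = 3\sqrt{5} \approx 6.71 .
\]

The same matrix therefore has four different "sizes" depending on which norm is used, which is why the definitions need to be kept apart.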

Key Differences Between Max Norm and Spectral Norm

The max norm measures the largest absolute entry of a matrix, giving a bound on the largest individual element, while the spectral norm is the largest singular value, indicating the maximum stretching factor of the matrix. The max norm is computationally simpler and is often used in element-wise regularization, whereas the spectral norm is central to stability analysis and operator norm estimation. The key difference is that the max norm captures entry-wise magnitude, while the spectral norm captures the magnitude of the transformation the matrix induces as a whole.
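
Because the entry-wise max norm is easily mixed up with the induced infinity norm (maximum absolute row sum) and the induced 1-norm (maximum absolute column sum), a minimal sketch in plain Python may help keep them apart. The function names and the example matrix are illustrative assumptions, not part of any library.

```python
def max_norm(A):
    """Entry-wise max norm: the largest absolute entry of A."""
    return max(abs(x) for row in A for x in row)


def inf_norm(A):
    """Induced infinity norm: the maximum absolute row sum of A."""
    return max(sum(abs(x) for x in row) for row in A)


def one_norm(A):
    """Induced 1-norm: the maximum absolute column sum of A."""
    return max(sum(abs(x) for x in col) for col in zip(*A))


# Illustrative matrix (an assumption for this example).
A = [[3.0, 0.0],
     [4.0, 5.0]]

print(max_norm(A))  # 5.0
print(inf_norm(A))  # 9.0
print(one_norm(A))  # 7.0
```

With NumPy, the same three values are available as `numpy.abs(A).max()`, `numpy.linalg.norm(A, numpy.inf)` and `numpy.linalg.norm(A, 1)`.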

Use Cases and Applications

Max norm is used in machine learning models to control the maximum weight magnitude, which can improve generalization in neural networks. Bounding the largest entry does not by itself drive weights to zero, so it is not a sparsity or feature-selection mechanism; that role is usually played by an \(\ell_1\) penalty. Spectral norm, often applied in deep learning and control theory, supports stability by limiting the largest singular value of weight matrices, which helps prevent exploding gradients and improves robustness in adversarial training. Both norms are used in regularization strategies, with max norm giving element-wise constraints and spectral norm constraining global matrix properties, in applications such as natural language processing and system identification.

Computational Complexity Comparison

Computing the max norm means scanning all entries for the maximum absolute value, which takes \(O(mn)\) time for an m-by-n matrix. The spectral norm requires the largest singular value. A full singular value decomposition costs \(O(\min(mn^2, m^2n))\), which is \(O(n^3)\) for a square matrix, while power iteration costs \(O(mn)\) per iteration, with an iteration count that depends on the gap between the two largest singular values. Consequently, the max norm has significantly lower computational overhead than the spectral norm in large-scale matrix applications.
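
The power-iteration approach mentioned above can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not a production routine: the function name, the random starting vector, the fixed seed, and the stopping rule are all choices made for this example.

```python
import math
import random


def spectral_norm(A, max_iters=1000, tol=1e-12, seed=0):
    """Estimate the largest singular value of A by power iteration on A^T A.

    Each iteration performs two matrix-vector products, so it costs O(mn).
    """
    m, n = len(A), len(A[0])
    rng = random.Random(seed)
    # Random start, so it is almost surely not orthogonal to the top singular vector.
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm_v = math.sqrt(sum(x * x for x in v))
    v = [x / norm_v for x in v]

    sigma = 0.0
    for _ in range(max_iters):
        # u = A v; for unit v, ||u||_2 is the current estimate of sigma_max.
        u = [sum(A[i][j] * v[j] for j in range(n)) for i in range(m)]
        new_sigma = math.sqrt(sum(x * x for x in u))
        # w = A^T u = (A^T A) v
        w = [sum(A[i][j] * u[i] for i in range(m)) for j in range(n)]
        norm_w = math.sqrt(sum(x * x for x in w))
        if norm_w == 0.0:
            return new_sigma  # A v = 0, e.g. for the zero matrix
        v = [x / norm_w for x in w]
        converged = abs(new_sigma - sigma) <= tol * max(1.0, new_sigma)
        sigma = new_sigma
        if converged:
            break
    return sigma


print(spectral_norm([[1.0, 2.0], [3.0, 4.0]]))  # about 5.465
```

Convergence is fast when the largest singular value is well separated from the second largest and slow when the two are close, which is where the iteration count in the cost estimate comes from.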

Advantages and Limitations

Max norm provides element-wise control by limiting the absolute maximum value in a matrix, which makes it cheap to enforce and easy to interpret in high-dimensional data. Spectral norm constrains the largest singular value, which bounds the Lipschitz constant of the linear map and supports stability and convergence in neural network training. The max norm's limitation is that it can overlook global matrix structure, while the spectral norm can be computationally expensive. Neither norm is a sparsity-inducing penalty.
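
One way to quantify how much global structure an entry-wise bound can miss is the standard pair of inequalities relating the two norms for an \(m \times n\) matrix \(A\):

\[
\|A\|_{\max} \;\le\; \|A\|_2 \;\le\; \sqrt{mn}\,\|A\|_{\max} .
\]

The left inequality holds because each entry satisfies \(|a_{ij}| = |e_i^{*} A e_j| \le \|A\|_2\), and the right one because \(\|A\|_2\) is at most the Frobenius norm, which is at most \(\sqrt{mn}\,\max_{i,j}|a_{ij}|\). A bound \(c\) on every entry therefore only guarantees \(\|A\|_2 \le \sqrt{mn}\,c\), which grows with the matrix dimensions, whereas a bound on \(\|A\|_2\) automatically bounds every entry.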

Norm Selection Guidelines in Machine Learning

Max norm limits the absolute maximum value of parameters, which keeps weights bounded in deep neural networks, for example as a weight constraint on convolutional layers. Spectral norm controls the largest singular value of weight matrices, bounding the Lipschitz constant of each layer and improving generalization and robustness in models such as GANs and recurrent neural networks. As a guideline, use the max norm to stabilize training and prevent exploding weights, and the spectral norm to control model expressivity and ensure smooth transformations, which matters in adversarial settings and sensitive architectures.
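
The two kinds of constraint can be sketched as simple operations on a weight matrix, for instance applied after each optimizer step. Everything named below (`clip_max_norm`, `spectral_normalize`, `top_singular_value`, the threshold values) is a hypothetical illustration rather than an existing library API. Rescaling by the largest singular value is one simple way to enforce a spectral bound; it is not the only one.

```python
import math
import random


def clip_max_norm(W, c):
    """Enforce max|w_ij| <= c by clipping every entry to the interval [-c, c]."""
    return [[max(-c, min(c, w)) for w in row] for row in W]


def top_singular_value(W, iters=500, seed=0):
    """Estimate the largest singular value of W by power iteration on W^T W."""
    m, n = len(W), len(W[0])
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    sigma = 0.0
    for _ in range(iters):
        norm_v = math.sqrt(sum(x * x for x in v))
        if norm_v == 0.0:
            return 0.0  # W maps the iterate to zero, e.g. W is the zero matrix
        v = [x / norm_v for x in v]
        u = [sum(W[i][j] * v[j] for j in range(n)) for i in range(m)]  # u = W v
        sigma = math.sqrt(sum(x * x for x in u))                       # ||W v||_2
        v = [sum(W[i][j] * u[i] for i in range(m)) for j in range(n)]  # v = W^T u
    return sigma


def spectral_normalize(W, target=1.0):
    """Rescale W so that its spectral norm is at most `target`."""
    sigma = top_singular_value(W)
    if sigma <= target:
        return [row[:] for row in W]  # already within the bound: return a copy
    scale = target / sigma
    return [[scale * w for w in row] for row in W]


# Illustrative weight matrix (an assumption for this example).
W = [[3.0, 0.0],
     [4.0, 5.0]]

print(clip_max_norm(W, 3.5))  # [[3.0, 0.0], [3.5, 3.5]]
print(top_singular_value(spectral_normalize(W, 1.0)))  # close to 1.0
```

Clipping changes only the entries that exceed the threshold, while spectral rescaling shrinks every entry by the same factor. That is the element-wise versus global distinction the guidelines above describe.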

Impact on Model Performance

Max norm regularization constrains the maximum absolute value of weights, preventing the extreme weight values that can lead to overfitting, which often results in more robust generalization in neural networks. Spectral norm regularization controls the largest singular value of weight matrices, which bounds the Lipschitz constant of the network and improves stability during training, helping convergence and reducing the risk of exploding gradients. Models using spectral norm regularization typically exhibit smoother decision boundaries and better adversarial robustness, while max norm is the better fit when strict weight magnitude constraints are required.
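
A short derivation makes the Lipschitz claim concrete. The notation is an assumption introduced for illustration and is not from the original text: a feed-forward network \(f(x) = W_L\,\phi(W_{L-1}\cdots\phi(W_1 x))\) with weight matrices \(W_k\) and an activation \(\phi\) that is 1-Lipschitz, as ReLU and tanh are.

\[
\|W_k x - W_k y\|_2 \le \|W_k\|_2\,\|x - y\|_2
\quad\Longrightarrow\quad
\|f(x) - f(y)\|_2 \le \Bigl(\prod_{k=1}^{L} \|W_k\|_2\Bigr)\,\|x - y\|_2 .
\]

Keeping every \(\|W_k\|_2 \le 1\) therefore makes the whole network 1-Lipschitz in this setting. The product is an upper bound and can be loose in practice.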

Summary and Future Directions

Max norm measures the largest absolute value of matrix entries, offering a simple and cheap constraint for regularizing neural networks, while spectral norm evaluates the largest singular value, providing tighter control over Lipschitz continuity and model stability. Combining max norm constraints with spectral norm regularization is one way to balance entry-wise bounds against global stability and improve generalization in deep learning models. Future research directions include developing adaptive norm combinations tailored to specific architectures and exploring scalable algorithms for large-scale spectral norm computations.


libterm.com
