The operator norm measures the maximum stretching effect a linear operator has on vectors within a given space, serving as a critical tool in functional analysis and matrix theory. It bounds how much an operator can expand any vector, which makes it central to questions of stability and boundedness in various applications.

Table of Comparison

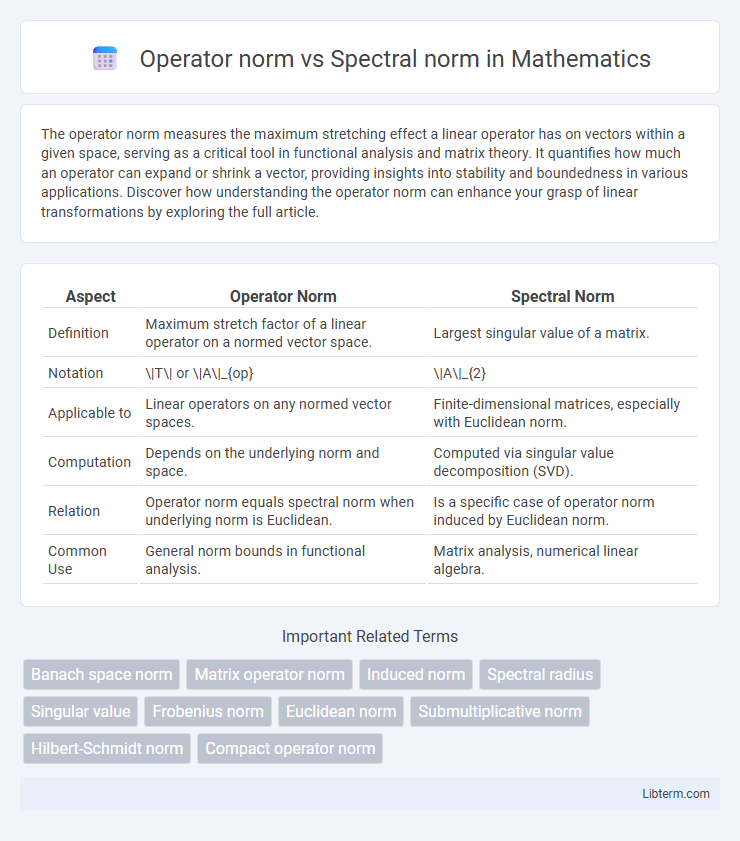

| Aspect | Operator Norm | Spectral Norm |
|---|---|---|
| Definition | Maximum stretch factor of a linear operator on a normed vector space. | Largest singular value of a matrix. |
| Notation | \|T\| or \|A\|_{op} | \|A\|_{2} |
| Applicable to | Linear operators on any normed vector spaces. | Finite-dimensional matrices, especially with Euclidean norm. |
| Computation | Depends on the underlying norm and space. | Computed via singular value decomposition (SVD). |
| Relation | Operator norm equals spectral norm when underlying norm is Euclidean. | Is a specific case of operator norm induced by Euclidean norm. |
| Common Use | General norm bounds in functional analysis. | Matrix analysis, numerical linear algebra. |

Introduction to Matrix Norms

The operator norm of a matrix is the largest amplification factor the matrix can apply to a vector, measured in a chosen vector norm. The spectral norm is the special case induced by the Euclidean norm: it equals the largest singular value of the matrix, and for normal matrices it coincides with the largest absolute value of an eigenvalue. Both norms are fundamental in matrix analysis, providing insights into stability and conditioning in numerical linear algebra.
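The link between the spectral norm and eigenvalues holds for normal matrices but can fail otherwise. The following is a minimal sketch, assuming NumPy is available; the helper names `spectral_norm` and `spectral_radius` and both test matrices are illustrative choices, not part of the original text.

```python
import numpy as np

def spectral_norm(A):
    """Largest singular value of A (matrix 2-norm)."""
    return np.linalg.norm(A, 2)

def spectral_radius(A):
    """Largest absolute value among the eigenvalues of A."""
    return np.max(np.abs(np.linalg.eigvals(A)))

# A real symmetric matrix is normal, so the two quantities agree.
normal = np.array([[2.0, 1.0], [1.0, -3.0]])

# A nilpotent matrix is not normal: its eigenvalues are all zero,
# yet it maps the unit vector (0, 1) to the unit vector (1, 0).
nilpotent = np.array([[0.0, 1.0], [0.0, 0.0]])

print(spectral_norm(normal), spectral_radius(normal))
print(spectral_norm(nilpotent), spectral_radius(nilpotent))
```

For the nilpotent matrix the spectral norm is 1 while every eigenvalue is 0, which is why eigenvalues alone cannot stand in for the spectral norm in general.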

Understanding Operator Norm

The operator norm measures the maximum stretching factor a linear transformation applies to vectors in a normed vector space, defined as the supremum, over all nonzero vectors, of the ratio of the norm of the image to the norm of the original vector. It extends matrix norms to operators on infinite-dimensional spaces, where a linear operator is called bounded exactly when this supremum is finite. In numerical and functional analysis the operator norm therefore quantifies stability and sensitivity: it bounds how far an error in the input can be magnified in the output.
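The supremum in this definition can be probed numerically. The sketch below, assuming NumPy, samples random directions and keeps the largest observed ratio; the function name `sampled_operator_norm`, the example matrix, and the trial count are hypothetical choices for illustration. Because every sampled ratio is at most the true norm, the result is only a lower bound.

```python
import numpy as np

def sampled_operator_norm(A, p=2, trials=2000, seed=0):
    """Lower-bound the operator norm of A induced by the vector p-norm.

    Each ratio ||Ax||_p / ||x||_p is at most the operator norm, so the
    largest sampled ratio is a lower bound that tightens with more trials.
    """
    rng = np.random.default_rng(seed)
    best = 0.0
    for _ in range(trials):
        x = rng.standard_normal(A.shape[1])
        ratio = np.linalg.norm(A @ x, p) / np.linalg.norm(x, p)
        best = max(best, ratio)
    return best

A = np.array([[1.0, -2.0], [3.0, 4.0]])
estimate = sampled_operator_norm(A, p=2)
exact = np.linalg.norm(A, 2)  # largest singular value, for comparison
print(estimate, exact)
```

Sampling is shown only to make the definition concrete; in higher dimensions random directions approach the maximizing direction slowly, so it is not a practical way to compute the norm.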

Defining the Spectral Norm

The spectral norm of a matrix \( A \) is defined as its largest singular value, representing the maximum amount the matrix can stretch a vector in Euclidean space. It is equivalent to the operator norm induced by the L2 norm on vectors, and it can be calculated as the square root of the largest eigenvalue of \( A^* A \), where \( A^* \) is the conjugate transpose of \( A \). This norm provides a precise measure of matrix magnitude in applications such as numerical analysis, stability assessment, and optimization problems.
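The two characterizations in this section can be checked against each other. This is a small sketch assuming NumPy; the example matrix and variable names are arbitrary illustrations.

```python
import numpy as np

A = np.array([[1.0, -2.0], [3.0, 4.0]])

# Largest singular value from the SVD (returned in descending order).
sigma_max = np.linalg.svd(A, compute_uv=False)[0]

# Square root of the largest eigenvalue of the Hermitian matrix A^* A.
gram = A.conj().T @ A
lambda_max = np.linalg.eigvalsh(gram)[-1]  # eigvalsh returns ascending order
sqrt_lambda_max = np.sqrt(lambda_max)

# NumPy's built-in matrix 2-norm for comparison.
two_norm = np.linalg.norm(A, 2)

print(sigma_max, sqrt_lambda_max, two_norm)
```

All three values agree up to floating-point rounding.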

Mathematical Formulations

The operator norm of a matrix \( A \) is defined as \( \|A\| = \sup_{x \neq 0} \frac{\|Ax\|}{\|x\|} \), representing the maximum scaling factor of \( A \) on any nonzero vector \( x \); its value depends on the vector norms chosen for \( x \) and \( Ax \). The spectral norm \( \|A\|_2 \) is the operator norm induced by the Euclidean vector norm and is equal to the largest singular value \( \sigma_{\max}(A) \) of the matrix. Both norms provide key insights into matrix behavior, but the spectral norm's connection to singular values offers a direct computational approach through singular value decomposition (SVD).
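The reason the Euclidean operator norm equals the largest singular value can be sketched in one line. Here \( A^* \) denotes the conjugate transpose of \( A \) and \( \lambda_{\max} \) the largest eigenvalue; the third equality uses the Rayleigh quotient characterization of the largest eigenvalue of a Hermitian matrix.

\[
\|A\|_2^2 = \sup_{x \neq 0} \frac{\|Ax\|_2^2}{\|x\|_2^2} = \sup_{x \neq 0} \frac{x^* A^* A x}{x^* x} = \lambda_{\max}(A^* A) = \sigma_{\max}(A)^2 .
\]

Taking square roots gives \( \|A\|_2 = \sigma_{\max}(A) \).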

Key Differences Between Operator and Spectral Norms

The operator norm measures the maximum stretching factor of a linear operator with respect to given norms on its domain and codomain, defined as the supremum of the ratio of output norm to input norm. The spectral norm, specifically for matrices, is the operator norm induced by the Euclidean vector norm and equals the largest singular value. The key difference is one of scope: the operator norm can be defined on general normed spaces and its value changes with the chosen norms, while the spectral norm is the single special case tied to the Euclidean norm and directly linked to singular values for finite-dimensional matrices.

Significance in Functional Analysis

The operator norm measures the largest stretching factor of a linear operator on a normed vector space, serving as a crucial tool in functional analysis: a linear operator between normed spaces is continuous exactly when its operator norm is finite. The spectral norm, specifically for matrices, equals the largest singular value and provides insights into operator behavior relative to Euclidean norms, making it essential in stability and convergence studies. Understanding these norms facilitates the study of operator spectra, compactness, and perturbation impacts within infinite-dimensional spaces.

Relation to Singular Values

The operator norm of a matrix induced by the Euclidean norm, also called the induced 2-norm, is equal to the matrix's largest singular value, so it coincides with the spectral norm. The largest singular value is read directly from the singular value decomposition (SVD), and it is attained on a unit vector: the corresponding right singular vector is the direction the matrix stretches most. Operator norms induced by other vector norms are generally not determined by the singular values alone.

Applications in Machine Learning

The spectral norm of a weight matrix is its largest singular value, capturing the maximum amplification factor the layer can apply to a vector in the Euclidean norm, which is crucial for analyzing stability in neural networks. It is frequently used in regularization techniques such as spectral norm regularization, which penalize or constrain this value to prevent overfitting and improve generalization in deep learning models. More broadly, operator norms help keep gradient propagation controlled and improve robustness in various machine learning applications.
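Techniques in this family commonly estimate the largest singular value by power iteration rather than a full SVD. The following is a minimal sketch of that idea, assuming NumPy; the function name `power_iteration_spectral_norm`, the iteration count, and the random matrix `W` standing in for a layer's weights are all hypothetical.

```python
import numpy as np

def power_iteration_spectral_norm(W, num_iters=200, seed=0):
    """Estimate the largest singular value of W by alternating power iteration."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(W.shape[1])
    v /= np.linalg.norm(v)
    for _ in range(num_iters):
        u = W @ v              # push v through W
        u /= np.linalg.norm(u)
        v = W.T @ u            # pull u back through W^T
        v /= np.linalg.norm(v)
    # With u and v near the top singular vectors, u^T W v approximates sigma_max.
    return float(u @ (W @ v))

rng = np.random.default_rng(1)
W = rng.standard_normal((64, 32))     # stand-in for a weight matrix
sigma_est = power_iteration_spectral_norm(W)
W_normalized = W / sigma_est          # spectral norm is now approximately 1

print(sigma_est, np.linalg.norm(W, 2))
```

Dividing the weights by the estimate, as in the last step, is one way to cap the layer's amplification at roughly 1. In training loops, far fewer iterations per step are typically used, with the vectors carried over between steps.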

Computational Considerations

The operator norm and spectral norm both measure matrix size but differ in computational cost. The spectral norm is the largest singular value; a full singular value decomposition (SVD) yields it but can be costly for large matrices, so iterative methods such as power iteration are often used when only the top singular value is needed. Operator norms induced by other vector norms can be cheaper: the norm induced by the 1-norm is the largest absolute column sum, and the norm induced by the infinity-norm is the largest absolute row sum, although for other choices of norm no simple formula may be available. Balancing precision and computational efficiency is crucial when selecting between these norms for large-scale numerical applications.
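For the vector 1-norm and infinity-norm, the induced operator norms have entrywise closed forms: the largest absolute column sum and the largest absolute row sum, respectively. A short sketch assuming NumPy follows; the helper names and the example matrix are illustrative.

```python
import numpy as np

def induced_1_norm(A):
    """Operator norm induced by the vector 1-norm: largest absolute column sum."""
    return np.abs(A).sum(axis=0).max()

def induced_inf_norm(A):
    """Operator norm induced by the vector infinity-norm: largest absolute row sum."""
    return np.abs(A).sum(axis=1).max()

A = np.array([[1.0, -2.0], [3.0, 4.0]])
norm_1 = induced_1_norm(A)      # absolute column sums are 4 and 6
norm_inf = induced_inf_norm(A)  # absolute row sums are 3 and 7
norm_2 = np.linalg.norm(A, 2)   # requires singular values, no entrywise formula

print(norm_1, norm_inf, norm_2)
```

These two cheap norms also bound the spectral norm through the standard inequality \( \|A\|_2 \le \sqrt{\|A\|_1 \, \|A\|_\infty} \), which can serve as a quick upper estimate when an SVD is too expensive.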

Summary and Practical Recommendations

The operator norm and spectral norm both measure matrix size but differ in definition and application; the operator norm is induced by vector norms and generalizes to various norms, while the spectral norm specifically equals the largest singular value of a matrix. The spectral norm is preferred for stability analysis and for work built on the singular value decomposition because it is exactly the largest singular value, whereas other operator norms are useful in functional analysis and when bounds in non-Euclidean norms are needed. For practical use, choose the spectral norm for numerical linear algebra problems framed in the Euclidean norm, and select another induced operator norm when the context calls for a non-Euclidean vector norm.
