A series \(\sum a_n\) converges absolutely when the series of absolute values \(\sum |a_n|\) converges. This guarantees that the original series converges and that every rearrangement of its terms converges to the same sum. The property is fundamental in mathematical analysis because it makes infinite sums stable under reordering, which matters for power series of complex functions and for numerical summation. Explore the rest of the article to deepen your understanding of absolute convergence and its applications.

Table of Comparison

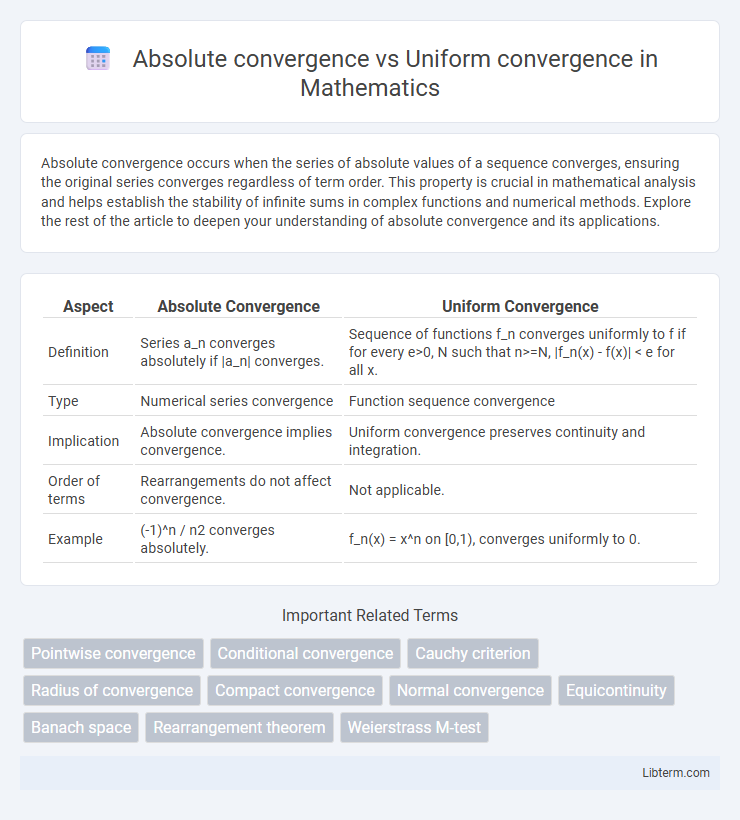

| Aspect | Absolute Convergence | Uniform Convergence |
|---|---|---|
| Definition | A series \(\sum a_n\) converges absolutely if \(\sum \lvert a_n \rvert\) converges. | A sequence of functions \(f_n\) converges uniformly to \(f\) on a set \(D\) if for every \(\varepsilon > 0\) there exists \(N\) such that \(\lvert f_n(x) - f(x) \rvert < \varepsilon\) for all \(n \ge N\) and all \(x \in D\). |
| Type | Convergence of a numerical series | Convergence of a sequence (or series) of functions |
| Implication | Absolute convergence implies convergence. | Uniform convergence preserves continuity and lets limits pass through integrals over bounded intervals. |
| Order of terms | Rearrangements change neither convergence nor the sum. | Not applicable. |
| Example | \(\sum (-1)^n / n^2\) converges absolutely. | \(f_n(x) = x^n\) converges uniformly to 0 on \([0, a]\) for every \(a < 1\), but only pointwise (not uniformly) on \([0, 1)\). |

Introduction to Series Convergence

Absolute convergence occurs when the series of absolute values converges, which guarantees that the original series converges regardless of how its terms are arranged. Uniform convergence means that a sequence of functions approaches its limit function with an error bound that does not depend on the point of the domain, which preserves continuity and integrability. The distinction matters because the two notions answer different questions: absolute convergence concerns the terms of a single series (or, for a series of functions, each point separately), while uniform convergence controls the worst-case error over the whole domain and therefore governs how limit functions behave.

Defining Absolute Convergence

A series \(\sum a_n\) converges absolutely when the series formed by the absolute values of its terms, \(\sum |a_n|\), converges. Absolute convergence implies that \(\sum a_n\) itself converges, and every rearrangement of its terms converges to the same sum. A series that converges but not absolutely is called conditionally convergent; by the Riemann rearrangement theorem, the terms of a conditionally convergent real series can be reordered to converge to any prescribed real value or to diverge. This is why absolute convergence is the condition to check before reordering or regrouping the terms of an infinite sum.
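
To make the rearrangement property concrete, here is a small numerical sketch in Python (the function names are illustrative). It sums \(\sum_{n \ge 1} (-1)^{n+1}/n^p\) in its natural order and in a rearranged order that takes one positive term followed by two negative terms. For \(p = 2\) the series converges absolutely and both orders approach \(\pi^2/12\); for \(p = 1\) (the alternating harmonic series, which converges only conditionally) the natural order approaches \(\ln 2\), while this particular rearrangement is known to approach \(\tfrac{1}{2}\ln 2\). Finite partial sums can only suggest these limits, not prove them.

```python
import math


def natural_sum(p, terms):
    # Partial sum of sum_{n>=1} (-1)^(n+1) / n^p in the usual order
    return sum((-1) ** (n + 1) / n**p for n in range(1, terms + 1))


def rearranged_sum(p, blocks):
    # Same terms, reordered: one positive term 1/odd^p, then two negative terms -1/even^p
    total = 0.0
    odd, even = 1, 2
    for _ in range(blocks):
        total += 1.0 / odd**p
        odd += 2
        total -= 1.0 / even**p
        even += 2
        total -= 1.0 / even**p
        even += 2
    return total


print(natural_sum(2, 200_000), rearranged_sum(2, 100_000), math.pi**2 / 12)
print(natural_sum(1, 200_000), rearranged_sum(1, 100_000), math.log(2))
```

With these sizes the partial sums agree with the stated limits to within \(10^{-4}\): reordering leaves the \(p = 2\) sum unchanged but halves the \(p = 1\) sum.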

Understanding Uniform Convergence

A sequence of functions \(f_n\) converges uniformly to \(f\) on a domain \(D\) when \(\sup_{x \in D} |f_n(x) - f(x)| \to 0\) as \(n \to \infty\); that is, a single index \(N\) makes the error small at every point of \(D\) at once. Pointwise convergence only requires \(f_n(x) \to f(x)\) for each fixed \(x\), with \(N\) allowed to depend on \(x\). Uniform convergence guarantees that a uniform limit of continuous functions is continuous and justifies interchanging limits with integrals over bounded intervals; interchanging limits with derivatives needs more, namely uniform convergence of the derivatives. Absolute convergence, in contrast, is a property of series that requires the sum of absolute values to converge. It is not a stronger or weaker version of uniform convergence: for series of functions, neither implies the other.
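
A grid-based sketch of the supremum in this definition, assuming the example \(f_n(x) = x^n\) with limit \(f = 0\) from the comparison table. `estimate_sup_distance` is a hypothetical helper; sampling finitely many points gives only a lower estimate of the true supremum, so the code illustrates uniform convergence rather than verifying it.

```python
def estimate_sup_distance(f_n, f, a, b, points=100_000):
    """Lower estimate of sup |f_n(x) - f(x)| over [a, b), sampled on an evenly spaced grid."""
    step = (b - a) / points
    return max(abs(f_n(a + k * step) - f(a + k * step)) for k in range(points))


def zero(x):
    # Pointwise limit of x**n on [0, 1)
    return 0.0


# On [0, 0.9) the estimated error shrinks with n (the exact supremum on [0, 0.9] is 0.9**n).
for n in (10, 50, 200):
    print(n, estimate_sup_distance(lambda x: x**n, zero, 0.0, 0.9))

# On [0, 1) it stays close to 1, because points near x = 1 keep x**n large.
print(estimate_sup_distance(lambda x: x**50, zero, 0.0, 1.0))
```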

Key Differences Between Absolute and Uniform Convergence

Absolute convergence occurs when the series of absolute values converges, which guarantees convergence regardless of term order; this is why it is central to working with series in complex analysis and in function spaces. Uniform convergence requires a sequence of functions to converge to its limit with an error bound that holds simultaneously over the entire domain, which preserves continuity and integrability and is essential in real analysis and applied mathematics. The key difference is what is being measured: absolute convergence concerns the size of the terms of a series, while uniform convergence concerns how evenly the approximation error shrinks across the domain. For series of functions the two properties are independent: a series can converge absolutely at every point without converging uniformly, and it can converge uniformly without converging absolutely.
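
The first half of that independence can be seen numerically. The sketch below (helper names are illustrative) uses the series \(\sum_{n \ge 0} (1 - x)x^n\) on \([0, 1]\): its terms are nonnegative, so convergence at a point is absolute convergence, and its partial sums equal \(1 - x^{N+1}\), which tend to 1 for \(0 \le x < 1\) and to 0 at \(x = 1\). The largest gap between partial sum and limit on a grid stays close to 1, consistent with the convergence not being uniform; a finite grid only gives a lower estimate of that gap.

```python
def partial_sum(x, N):
    # S_N(x) = sum_{n=0}^{N} (1 - x) * x**n; every term is >= 0 on [0, 1]
    return sum((1 - x) * x**n for n in range(N + 1))


def pointwise_limit(x):
    # Limit of S_N(x): 1 for 0 <= x < 1, and 0 at x = 1 (a discontinuous function)
    return 1.0 if x < 1 else 0.0


def sup_gap_on_grid(N, points=2_000):
    # Lower estimate of sup_{x in [0, 1]} |S_N(x) - limit(x)| from an evenly spaced grid
    xs = [k / points for k in range(points + 1)]
    return max(abs(partial_sum(x, N) - pointwise_limit(x)) for x in xs)


print(partial_sum(0.5, 60))  # very close to 1: the series converges at x = 0.5
print(sup_gap_on_grid(100))  # stays near 1: no single N works for all x
```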

Criteria and Tests for Absolute Convergence

Absolute convergence occurs when the series of absolute values converges, which guarantees that the original series converges regardless of term order. The standard tests for absolute convergence are the comparison, limit comparison, ratio, and root tests applied to \(|a_n|\). For example, the ratio test states that if \(\lim_{n \to \infty} |a_{n+1}/a_n| < 1\), then \(\sum a_n\) converges absolutely; if the limit exceeds 1 the series diverges, and a limit of exactly 1 is inconclusive. Uniform convergence of a series of functions is usually checked with the Weierstrass M-test instead: if \(|f_n(x)| \le M_n\) for every \(x\) in the domain and \(\sum M_n\) converges, then \(\sum f_n\) converges uniformly (and absolutely). The M-test therefore bounds every term uniformly over the whole domain, whereas the tests for absolute convergence only examine the magnitudes of the terms of a single series.
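
As a hedged numerical illustration of the ratio test, take the hypothetical example \(a_n = (-1)^n n / 2^n\). Then \(|a_{n+1}/a_n| = (n+1)/(2n) \to 1/2 < 1\), so the series converges absolutely; in fact \(\sum_{n \ge 1} n/2^n = 2\) and \(\sum_{n \ge 1} (-1)^n n/2^n = -2/9\). The code only computes finitely many ratios and partial sums, which suggests the limits but does not prove them.

```python
def a(n):
    # Hypothetical example term a_n = (-1)^n * n / 2^n
    return (-1) ** n * n / 2**n


# Ratio test: |a_{n+1} / a_n| = (n + 1) / (2n), which approaches 1/2
ratios = [abs(a(n + 1) / a(n)) for n in range(1, 500)]

# Partial sums of the absolute values and of the signed terms
abs_sum = sum(abs(a(n)) for n in range(1, 200))
signed_sum = sum(a(n) for n in range(1, 200))

print(ratios[:3], ratios[-1])  # ratios drift toward 0.5
print(abs_sum, signed_sum)     # close to 2 and -2/9
```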

Criteria and Tests for Uniform Convergence

Uniform convergence can be established with the uniform Cauchy criterion: a sequence \(f_n\) converges uniformly on \(D\) if and only if \(\sup_{x \in D} |f_n(x) - f_m(x)| \to 0\) as \(m, n \to \infty\). Because this condition does not mention the limit function, it can be checked without knowing \(f\). The Weierstrass M-test is a practical consequence: if \(|f_n(x)| \le M_n\) for all \(x \in D\) and \(\sum M_n\) converges, then the partial sums of \(\sum f_n\) satisfy the uniform Cauchy criterion, so the series converges uniformly on \(D\). Unlike absolute convergence, which concerns the sum of the absolute values of a series' terms, uniform convergence requires the approximation to improve at the same rate across the entire domain, which is what the interchange of limits and integration relies on.
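
The M-test bound can be checked numerically for a standard example, \(f_n(x) = \sin(nx)/n^2\) with \(M_n = 1/n^2\) (the example and helper names are illustrative assumptions, not taken from the text above). For \(M > N\), the uniform Cauchy gap \(\sup_x |S_M(x) - S_N(x)|\) is at most \(\sum_{n=N+1}^{M} 1/n^2 < 1/N\), whatever the value of \(x\). The grid estimate below should never exceed that bound; sampling cannot compute the supremum exactly.

```python
import math


def partial_sum(x, N):
    # S_N(x) = sum_{n=1}^{N} sin(n x) / n^2
    return sum(math.sin(n * x) / n**2 for n in range(1, N + 1))


def m_test_bound(N, M):
    # sum_{n=N+1}^{M} M_n with M_n = 1/n^2; bounds |S_M(x) - S_N(x)| for every x
    return sum(1.0 / n**2 for n in range(N + 1, M + 1))


def cauchy_gap_on_grid(N, M, points=500):
    # Lower estimate of sup_x |S_M(x) - S_N(x)| over one period [0, 2*pi)
    xs = [2 * math.pi * k / points for k in range(points)]
    return max(abs(partial_sum(x, M) - partial_sum(x, N)) for x in xs)


for N in (10, 50):
    print(N, cauchy_gap_on_grid(N, 400), m_test_bound(N, 400), 1 / N)
```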

Importance in Real Analysis and Functional Analysis

Absolute convergence is central in real analysis because it makes the value of an infinite sum independent of the order of summation. Uniform convergence plays a comparable role for sequences of functions: the uniform limit of continuous functions is continuous, and the uniform limit of Riemann-integrable functions on a bounded interval is integrable, with integral equal to the limit of the integrals. Differentiability is not preserved by uniform convergence alone; passing derivatives to the limit also requires the derivatives to converge uniformly. Understanding these distinctions allows rigorous manipulation of infinite series and function sequences, and these tools underpin, for example, series solutions of differential equations and the study of operators on function spaces.

Examples Illustrating Absolute vs Uniform Convergence

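Consider the exponential series \(\sum x^n / n!\). By the ratio test it converges absolutely for every real \(x\), and by the Weierstrass M-test (with \(M_n = R^n / n!\)) it converges uniformly on every bounded interval \([-R, R]\), so its sum \(e^x\) is continuous. It does not converge uniformly on all of \(\mathbb{R}\), however, because for each fixed \(N\) the error \(|e^x - \sum_{n \le N} x^n/n!|\) is unbounded as \(x \to \infty\). The geometric series \(\sum x^n\) behaves similarly: it converges absolutely for \(|x| < 1\) and uniformly on \([-a, a]\) for every \(a < 1\), but not uniformly on \((-1, 1)\), and it diverges at \(x = \pm 1\). For uniform convergence without absolute convergence, take \(\sum_{n \ge 1} (-1)^{n+1} x^n / n\) on \([0, 1]\): the alternating series estimate bounds its remainder by \(1/(N+1)\) at every point, so it converges uniformly to \(\ln(1+x)\), yet at \(x = 1\) the series of absolute values is the divergent harmonic series. Conversely, \(\sum (1-x)x^n\) has nonnegative terms and converges (absolutely) at every point of \([0, 1]\), but its limit is discontinuous at \(x = 1\), so the convergence cannot be uniform. These examples show that neither mode of convergence implies the other.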
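
The uniform-but-not-absolute example can be checked numerically. The sketch (helper names are illustrative) compares partial sums of \(\sum_{n \ge 1} (-1)^{n+1} x^n / n\) with \(\ln(1 + x)\) on a grid over \([0, 1]\) and checks the maximum error against the alternating series bound \(1/(N+1)\), then shows that the series of absolute values at \(x = 1\), the harmonic series, keeps growing. As with any grid, this illustrates the bound rather than proving it.

```python
import math


def partial_sum(x, N):
    # S_N(x) = sum_{n=1}^{N} (-1)^(n+1) x^n / n, which tends to log(1 + x) on [0, 1]
    return sum((-1) ** (n + 1) * x**n / n for n in range(1, N + 1))


def sup_error_on_grid(N, points=1_000):
    # Lower estimate of sup_{x in [0, 1]} |S_N(x) - log(1 + x)|
    xs = [k / points for k in range(points + 1)]
    return max(abs(partial_sum(x, N) - math.log1p(x)) for x in xs)


def harmonic(N):
    # Partial sum of the absolute values at x = 1: 1 + 1/2 + ... + 1/N
    return sum(1.0 / n for n in range(1, N + 1))


for N in (10, 100):
    print(N, sup_error_on_grid(N), 1 / (N + 1))  # error stays below 1/(N+1)

print(harmonic(100), harmonic(10_000))  # grows like log N: no absolute convergence at x = 1
```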

Implications in Integration, Differentiation, and Continuity

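Absolute convergence at each point does not by itself justify term-by-term integration or differentiation, nor does it guarantee that the sum of a series of continuous functions is continuous. Uniform convergence is the relevant hypothesis: if a series of continuous functions converges uniformly on \([a, b]\), its sum is continuous and may be integrated term by term over \([a, b]\). Term-by-term differentiation needs a stronger condition, namely that the series of derivatives converges uniformly and the original series converges at least at one point. Unlike absolute convergence, uniform convergence does not require the absolute values of the terms to form a convergent series; what it provides is a bound on the approximation error that holds across the whole interval, which is exactly what these interchanges of limits need.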
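
A short sketch of term-by-term integration under uniform convergence, using the geometric series \(\sum_{n \ge 0} x^n = 1/(1-x)\) on \([0, b]\) with \(b < 1\) (the example and function names are illustrative choices). The M-test with \(M_n = b^n\) gives uniform convergence there, so \(\int_0^b \sum x^n\,dx = \sum \int_0^b x^n\,dx = \sum b^{n+1}/(n+1)\), which should match \(-\ln(1-b)\).

```python
import math


def term_by_term_integral(b, N):
    # sum_{n=0}^{N} of the integral of x^n over [0, b], i.e. b^(n+1) / (n+1)
    return sum(b ** (n + 1) / (n + 1) for n in range(N + 1))


def integral_of_sum(b):
    # Integral of 1 / (1 - x) over [0, b] equals -log(1 - b), valid for 0 <= b < 1
    return -math.log1p(-b)


print(term_by_term_integral(0.5, 60), integral_of_sum(0.5))   # both approximately log 2
print(term_by_term_integral(0.9, 400), integral_of_sum(0.9))  # both approximately log 10
```

The closer \(b\) is to 1, the more terms are needed, reflecting the fact that the convergence is not uniform on all of \([0, 1)\).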

Common Misconceptions and Pitfalls

Absolute convergence guarantees that a series converges to the same sum under any rearrangement, while uniform convergence guarantees that a single error bound holds across the entire domain. A common misconception is that uniform convergence implies absolute convergence. It does not, because uniform convergence places no condition on the series of absolute values: the constant series \(\sum (-1)^{n+1}/n\) converges uniformly on any domain but not absolutely. The reverse implication fails as well. Another pitfall is mistaking pointwise convergence for uniform convergence, which leads to invalid exchanges of limits with integrals or derivatives.
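
A classic counterexample shows why pointwise convergence is not enough. For the illustrative sequence \(f_n(x) = n^2 x (1 - x)^n\) on \([0, 1]\), each \(f_n(x) \to 0\) pointwise, yet \(\int_0^1 f_n = n^2/((n+1)(n+2)) \to 1\), so \(\lim \int f_n \ne \int \lim f_n = 0\). The convergence is not uniform: \(f_n\) peaks near \(x = 1/(n+1)\) with height roughly \(n/e\). The sketch compares a midpoint-rule integral with the closed form; the function names are hypothetical.

```python
def f(n, x):
    # f_n(x) = n^2 * x * (1 - x)^n; tends to 0 for each fixed x in [0, 1]
    return n * n * x * (1 - x) ** n


def midpoint_integral(n, points=100_000):
    # Midpoint-rule approximation of the integral of f_n over [0, 1]
    h = 1.0 / points
    return h * sum(f(n, (k + 0.5) * h) for k in range(points))


def exact_integral(n):
    # Integral of x(1 - x)^n over [0, 1] is 1 / ((n + 1)(n + 2)), times n^2
    return n * n / ((n + 1) * (n + 2))


for n in (10, 100, 1000):
    # Values at a fixed point shrink, while the integrals approach 1
    print(n, f(n, 0.1), midpoint_integral(n), exact_integral(n))
```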

Absolute convergence Infographic

libterm.com