Conditional convergence occurs when an infinite series converges but does not converge absolutely: the series \(\sum a_n\) converges while \(\sum |a_n|\) diverges. By the Riemann rearrangement theorem, the terms of a conditionally convergent real series can be reordered so that the new series converges to any chosen real number or diverges, so the sum depends on the order of the terms. This phenomenon plays a crucial role in understanding the behavior of series in mathematical analysis and has implications in areas such as Fourier series and rearrangement theorems.

Table of Comparison

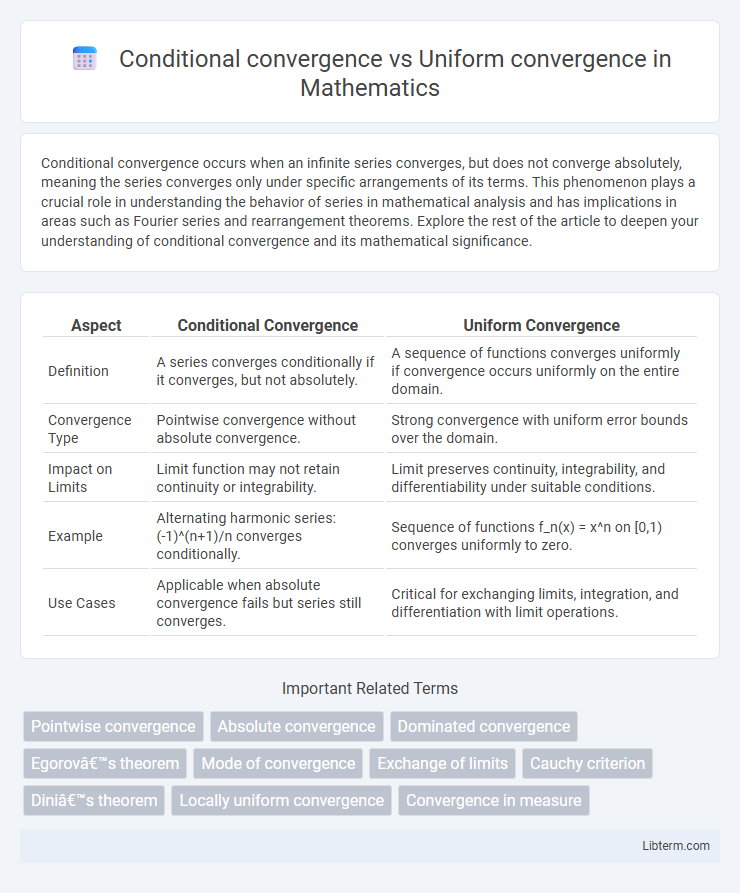

| Aspect | Conditional Convergence | Uniform Convergence |
|---|---|---|
| Definition | A series of numbers converges conditionally if it converges but the series of absolute values diverges. | A sequence of functions converges uniformly if the largest error over the entire domain tends to zero. |
| Convergence Type | Convergence of a numerical series whose sum depends on the order of the terms. | Convergence of functions with a single error bound valid over the whole domain; stronger than pointwise convergence. |
| Impact on Limits | Rearranging the terms can change the sum or destroy convergence. | The limit preserves continuity and, on bounded intervals, integrability; differentiability carries over under additional conditions on the derivatives. |
| Example | Alternating harmonic series: the sum of (-1)^(n+1)/n converges conditionally to ln 2. | Sequence of functions f_n(x) = x^n converges uniformly to zero on [0, 1/2]; on [0,1) it converges only pointwise. |
| Use Cases | Applicable when absolute convergence fails but the series still converges in its given order. | Critical for exchanging limits with integration, differentiation, and other limit operations. |

Introduction to Sequence and Series of Functions

A sequence of functions can converge in several senses. Pointwise convergence means that \(f_n(x) \to f(x)\) at each point of the domain, but the speed of convergence may vary significantly from point to point. Uniform convergence guarantees that the speed of convergence is consistent across the entire domain, which preserves continuity and integrability of the limit. Conditional convergence is a separate notion: it describes a series that converges without converging absolutely, so its sum depends on the order of the terms. A series of functions may converge conditionally at individual points, and in the study of sequences and series of functions it is crucial to keep conditional, pointwise, and uniform convergence apart when analyzing limit behavior and deciding which function properties hold under limits.

Defining Conditional Convergence

A series \(\sum a_n\) converges conditionally when it converges but \(\sum |a_n|\) diverges. The standard example is the alternating harmonic series, which converges to \(\ln 2\) even though the harmonic series diverges. Unlike absolute convergence, conditional convergence means the sum depends on the order of the terms: by the Riemann rearrangement theorem, rearranging a conditionally convergent real series can produce any prescribed limit or divergence. Understanding conditional convergence is crucial in real analysis and series manipulation, and it should be distinguished from uniform convergence, which pertains to the convergence behavior of function sequences.
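The dependence on order can be observed numerically. The following is a minimal sketch, not a proof: it applies a greedy rearrangement to the alternating harmonic series, taking unused positive terms while the running sum is at or below a target and unused negative terms otherwise. The function names and the chosen targets are illustrative assumptions.

```python
from math import log


def original_partial_sum(n_terms: int) -> float:
    """Partial sum of 1 - 1/2 + 1/3 - ... in the usual order."""
    return sum((-1) ** (n + 1) / n for n in range(1, n_terms + 1))


def rearranged_partial_sum(target: float, n_terms: int) -> float:
    """Partial sum of a greedy rearrangement steered toward `target`.

    Positive terms are 1, 1/3, 1/5, ... and negative terms are -1/2, -1/4, ...
    Every term of the original series is used at most once.
    """
    total = 0.0
    next_odd = 1   # denominator of the next unused positive term
    next_even = 2  # denominator of the next unused negative term
    for _ in range(n_terms):
        if total <= target:
            total += 1.0 / next_odd
            next_odd += 2
        else:
            total -= 1.0 / next_even
            next_even += 2
    return total


if __name__ == "__main__":
    print("usual order:", original_partial_sum(100_000), "ln 2 =", log(2))
    for target in (0.0, 1.5):
        print("target", target, "->", rearranged_partial_sum(target, 100_000))
```

The same terms, taken in a different order, settle near whichever target is requested, which is the behavior the rearrangement theorem describes.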

Defining Uniform Convergence

Uniform convergence occurs when a sequence of functions \(f_n\) converges to a limit function \(f\) such that the speed of convergence is uniform across the entire domain \(D\). Unlike pointwise convergence, where the index required may vary from point to point, uniform convergence ensures that for any given \(\varepsilon > 0\) there exists an index \(N\), independent of \(x\), such that \(|f_n(x) - f(x)| < \varepsilon\) for all \(n \ge N\) and every \(x \in D\). Equivalently, \(\sup_{x \in D} |f_n(x) - f(x)| \to 0\). This property is crucial in analysis because it preserves continuity and, on bounded intervals, integrability under the limit operation.
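The supremum condition can be checked on the example \(f_n(x) = x^n\) with limit 0. The sketch below, with illustrative function names, compares the interval \([0, 1/2]\), where the largest error is \((1/2)^n\), with \([0, 1)\), where the point \(x_n = (1/2)^{1/n}\) always gives an error of \(1/2\), so the supremum cannot tend to zero.

```python
def sup_error_closed(n: int, a: float, samples: int = 1000) -> float:
    """Grid approximation of sup |x**n - 0| over [0, a], for 0 < a < 1.

    x**n is increasing on [0, a], so the maximum sits at the right endpoint.
    """
    return max((a * k / samples) ** n for k in range(samples + 1))


def witness_point(n: int) -> float:
    """A point x_n in [0, 1) chosen so that x_n**n equals 1/2."""
    return 0.5 ** (1.0 / n)


if __name__ == "__main__":
    for n in (5, 20, 80):
        x_n = witness_point(n)
        print(n, sup_error_closed(n, 0.5), x_n, x_n ** n)
```

On \([0, 1/2]\) the error shrinks geometrically, while on \([0, 1)\) the witness point moves toward 1 and keeps the error at \(1/2\); the convergence there is pointwise but not uniform.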

Key Differences Between Conditional and Uniform Convergence

The two notions answer different questions. Conditional convergence describes a series that converges in its given order but not absolutely, so reordering the terms can change the sum or cause divergence. Uniform convergence describes a sequence or series of functions that converges across the entire domain simultaneously, with an error bound that does not depend on the choice of point. Uniform convergence ensures that the limit function inherits continuity and integrability from the sequence; merely pointwise convergence, including series that converge only conditionally at each point, may fail to maintain these properties. Uniform convergence is stronger than pointwise convergence and implies it, while conditional convergence is weaker than absolute convergence and is order-sensitive.

Criteria for Conditional Convergence

Establishing conditional convergence takes two steps: showing that the series converges, and showing that the series of absolute values diverges. A key criterion for the first step is the Alternating Series Test: if the terms alternate in sign and their absolute values decrease monotonically to zero, the series converges, and the error of the \(N\)-th partial sum is at most the absolute value of the next term. The second step usually relies on a comparison or integral test applied to \(\sum |a_n|\). This contrasts with uniform convergence, where convergence must be consistent across an entire domain rather than being a property of a single numerical series.
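Both steps can be illustrated on the alternating harmonic series. This is a numerical sketch with illustrative function names: it checks the error bound \(|S_N - \ln 2| \le 1/(N+1)\) from the Alternating Series Test, and the lower bound \(H_N > \ln(N+1)\), which shows that the series of absolute values grows without bound.

```python
from math import log


def alternating_partial_sum(n_terms: int) -> float:
    """S_N = 1 - 1/2 + 1/3 - ... up to N terms."""
    return sum((-1) ** (n + 1) / n for n in range(1, n_terms + 1))


def harmonic_partial_sum(n_terms: int) -> float:
    """H_N = 1 + 1/2 + ... + 1/N, the partial sum of absolute values."""
    return sum(1.0 / n for n in range(1, n_terms + 1))


if __name__ == "__main__":
    for n_terms in (10, 100, 1000):
        error = abs(alternating_partial_sum(n_terms) - log(2))
        bound = 1.0 / (n_terms + 1)  # size of the first omitted term
        print(n_terms, error, bound, harmonic_partial_sum(n_terms), log(n_terms + 1))
```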

Tests for Uniform Convergence

Tests for uniform convergence include the Weierstrass M-test: if \(|f_n(x)| \le M_n\) for every \(x\) in the domain and \(\sum M_n\) converges, then \(\sum f_n\) converges uniformly (and absolutely). The Cauchy criterion for uniform convergence requires that for any \(\varepsilon > 0\) there exists an \(N\) such that for all \(m, n > N\) and for all points in the domain, the difference between the partial sums is less than \(\varepsilon\). Equicontinuity also plays a role: the Arzelà-Ascoli theorem states that a uniformly bounded, equicontinuous sequence of functions on a compact set has a uniformly convergent subsequence, and an equicontinuous sequence that converges pointwise on a compact set converges uniformly there.
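As an illustration of the M-test, consider \(\sum x^n / n^2\) on \([0, 1]\) with \(M_n = 1/n^2\). The tail after \(N\) terms is then bounded at every point by \(\sum_{n > N} 1/n^2 < 1/N\). The sketch below checks this bound on a grid; the truncation at `upper` terms and the grid size are assumptions made to keep the computation finite.

```python
def tail(x: float, start: int, upper: int = 2000) -> float:
    """Finite proxy for the tail: sum of x**n / n**2 for start < n <= upper."""
    return sum(x ** n / n ** 2 for n in range(start + 1, upper + 1))


def tail_sup(start: int, samples: int = 50) -> float:
    """Largest tail over an evenly spaced grid on [0, 1], endpoints included."""
    return max(tail(k / samples, start) for k in range(samples + 1))


if __name__ == "__main__":
    for start in (5, 10, 50):
        # The M-test bound 1/N does not depend on x.
        print(start, tail_sup(start), 1.0 / start)
```

The bound holds at every grid point at once, which is exactly what distinguishes a uniform estimate from a pointwise one.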

Implications for Continuity and Integration

Conditional convergence is a statement about the order of the terms of a series and, by itself, says nothing about continuity or integrability. When a series of functions converges only pointwise, whether conditionally or absolutely at each point, the limit function may be discontinuous, and the integral of the limit may differ from the limit of the integrals. Uniform convergence ensures that the limit function inherits continuity from the sequence of functions and permits term-by-term integration over a bounded interval. This distinction is crucial in analysis, as uniform convergence supports stronger results such as the interchange of limits and integrals, while pointwise convergence alone may fail these properties.
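A short worked example may help here. The sequence \(f_n(x) = n x (1 - x^2)^n\) on \([0, 1]\) is a standard textbook choice, used as an assumption for illustration rather than taken from the discussion above. It converges pointwise to 0, yet its integrals do not converge to 0, so the convergence cannot be uniform.

```latex
% Pointwise limit: f_n(0) = f_n(1) = 0, and for 0 < x < 1 we have
% 0 < 1 - x^2 < 1, so n (1 - x^2)^n \to 0.
\lim_{n \to \infty} n x (1 - x^2)^n = 0 \quad \text{for every } x \in [0, 1].

% Integrals: substitute u = 1 - x^2, du = -2x \, dx.
\int_0^1 n x (1 - x^2)^n \, dx
  = \frac{n}{2} \int_0^1 u^n \, du
  = \frac{n}{2(n + 1)}
  \longrightarrow \frac{1}{2}
  \ne 0 = \int_0^1 \lim_{n \to \infty} f_n(x) \, dx .
```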

Counterexamples Illustrating the Differences

Conditional convergence is illustrated by the alternating harmonic series \(\sum (-1)^{n+1} \frac{1}{n}\), which converges to \(\ln 2\) although the harmonic series \(\sum \frac{1}{n}\) diverges. Uniform convergence is exemplified by \(\sum \frac{x^n}{n^2}\) on \([0,1]\): since \(\left|\frac{x^n}{n^2}\right| \le \frac{1}{n^2}\), the Weierstrass M-test applies, so the sum is continuous and can be integrated term by term. A classic counterexample is the trigonometric series \(\sum \frac{\sin(nx)}{n}\), which converges at every real \(x\), with sum \(\frac{\pi - x}{2}\) for \(0 < x < 2\pi\) and sum 0 at \(x = 0\). Its partial sums are continuous but the limit jumps at 0, so the convergence cannot be uniform on any interval containing 0. The same series also converges only conditionally at points such as \(x = \pi/2\), where it reduces to \(1 - \frac{1}{3} + \frac{1}{5} - \cdots\), showing how conditional convergence at individual points and failure of uniform convergence can occur together while remaining distinct properties.
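The failure of uniform convergence for \(\sum \sin(nx)/n\) can be made concrete. The sketch below relies on the known sum \((\pi - x)/2\) for \(0 < x < 2\pi\) and evaluates the \(N\)-th partial sum at the moving point \(x_N = 1/N^2\), an illustrative choice: there the partial sum is at most \(1/N\) in absolute value, so the error stays close to \(\pi/2\) however large \(N\) is.

```python
from math import pi, sin


def partial_sum(x: float, n_terms: int) -> float:
    """S_N(x) = sum of sin(n x) / n for n = 1..N."""
    return sum(sin(n * x) / n for n in range(1, n_terms + 1))


def limit_function(x: float) -> float:
    """Sum of the full series, valid for 0 < x < 2*pi."""
    return (pi - x) / 2


def error_near_zero(n_terms: int) -> float:
    """Error of S_N at the moving point x_N = 1 / N**2."""
    x = 1.0 / n_terms ** 2
    return abs(limit_function(x) - partial_sum(x, n_terms))


if __name__ == "__main__":
    # Fixed point: the error shrinks as N grows (pointwise convergence).
    print(abs(limit_function(1.0) - partial_sum(1.0, 20_000)))
    # Moving point near 0: the error does not shrink (no uniform convergence).
    for n_terms in (10, 100, 1000):
        print(n_terms, error_near_zero(n_terms))
```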

Applications in Mathematical Analysis

Conditional convergence appears in series whose sum is tied to the given order of the terms, and it is essential in the study of rearrangements of infinite series and in the pointwise convergence of Fourier series. Uniform convergence justifies passing continuity and integration through the limit of a sequence of functions, and differentiation when the derivatives also converge uniformly, making it a critical tool in approximation theory and in solving differential equations. Together these notions support rigorous analysis in function spaces and determine when limit operations are valid in integral transforms and Fourier series expansions.

Summary and Comparative Insights

Conditional convergence occurs when a series converges but not absolutely, so that its sum depends on the order of the terms; it is often established with the Alternating Series Test together with a proof that the series of absolute values diverges. Uniform convergence guarantees convergence of function sequences with one error bound over the whole domain, preserving continuity and integrability. Uniform convergence is stronger than pointwise convergence and ensures that the limit function inherits properties from the sequence, whereas conditional convergence is weaker than absolute convergence and carries no such guarantee. Understanding the distinctions is crucial in real analysis, particularly for justifying interchange of limits, integration, and differentiation under infinite processes.


libterm.com
