A strong hyperplane is a fundamental concept in machine learning that separates data points with maximum margin, enhancing classification accuracy and robustness. It plays a crucial role in algorithms like Support Vector Machines, where the optimal boundary improves generalization on unseen data. Explore the rest of the article to understand how a strong hyperplane can improve your predictive models.

Table of Comparison

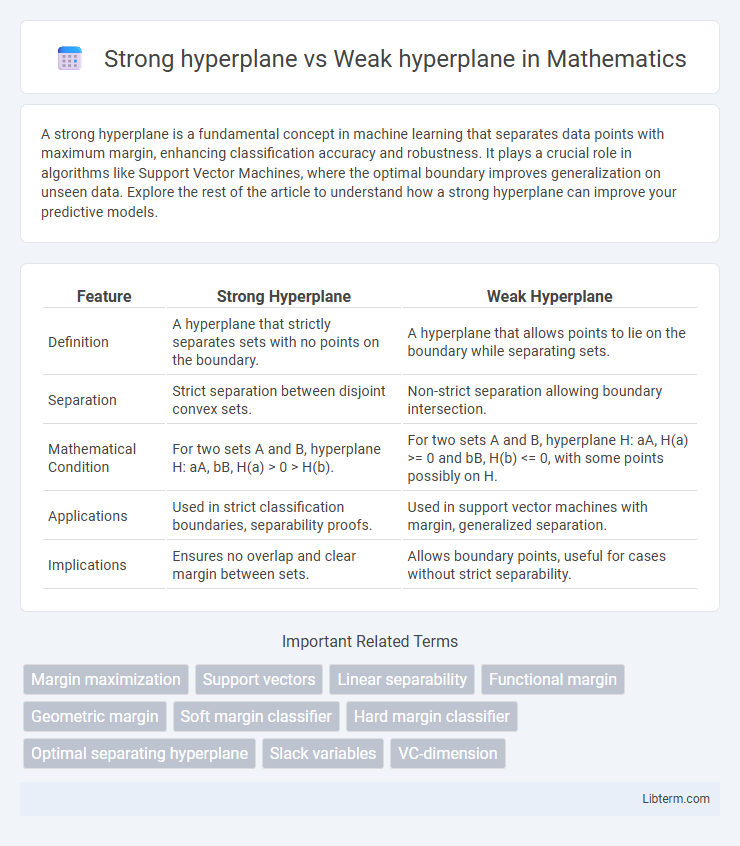

| Feature | Strong Hyperplane | Weak Hyperplane |

|---|---|---|

| Definition | A hyperplane that strictly separates sets with no points on the boundary. | A hyperplane that allows points to lie on the boundary while separating sets. |

| Separation | Strict separation between disjoint convex sets. | Non-strict separation allowing boundary intersection. |

| Mathematical Condition | For two sets A and B, hyperplane H: aA, bB, H(a) > 0 > H(b). | For two sets A and B, hyperplane H: aA, H(a) >= 0 and bB, H(b) <= 0, with some points possibly on H. |

| Applications | Used in strict classification boundaries, separability proofs. | Used in support vector machines with margin, generalized separation. |

| Implications | Ensures no overlap and clear margin between sets. | Allows boundary points, useful for cases without strict separability. |

Understanding Hyperplanes: Definition and Importance

Strong hyperplanes distinctly separate data points in classification tasks by maximizing the margin between different classes, enhancing model accuracy and robustness. Weak hyperplanes provide limited separation with smaller margins, often leading to higher classification errors and reduced generalization. Understanding the difference between strong and weak hyperplanes is crucial for optimizing support vector machines and other linear classifiers in machine learning.

What Makes a Hyperplane Strong?

A strong hyperplane effectively separates data points with a maximal margin, ensuring robust classification and minimizing misclassification risk in support vector machines. It achieves this by maximizing the distance between the hyperplane and the closest training samples, known as support vectors. This maximal margin property enhances generalization performance compared to weak hyperplanes that allow smaller margins or tolerate misclassifications.

Defining Weak Hyperplanes in Machine Learning

Weak hyperplanes in machine learning refer to decision boundaries that only marginally separate classes and perform slightly better than random guessing, often used in boosting algorithms like AdaBoost to improve model accuracy. These hyperplanes typically have higher classification errors individually but contribute to a strong classifier when combined iteratively. The concept contrasts with strong hyperplanes, which achieve significant separation and accuracy independently.

Geometric Interpretation of Strong vs Weak Hyperplanes

Strong hyperplanes in geometric terms strictly separate data points with a clear margin, ensuring all points lie on one side or at a defined distance from the hyperplane, which optimizes classification robustness. Weak hyperplanes, by contrast, allow some points within the margin or even misclassified on the wrong side, reflecting less stringent separation criteria that may lead to reduced generalization. The geometric interpretation highlights the margin width difference: strong hyperplanes maximize this margin for better classification confidence, while weak hyperplanes tolerate narrower margins or overlap.

Role in Support Vector Machines (SVM)

In Support Vector Machines (SVM), strong hyperplanes correspond to the optimal decision boundary maximizing the margin between classes, ensuring robust generalization and minimal classification error. Weak hyperplanes represent suboptimal boundaries that do not maximize margin, leading to higher misclassification rates and reduced model performance. The SVM algorithm specifically identifies the strong hyperplane by focusing on support vectors that define the maximum-margin separator in high-dimensional feature spaces.

Mathematical Criteria: Strong vs Weak Hyperplanes

Strong hyperplanes satisfy strict separation criteria where data points lie entirely on one side with a positive margin, ensuring no overlap occurs, which is essential in hard-margin support vector machines. Weak hyperplanes allow for some data points to be on or within the margin boundary, accommodating misclassifications or soft margins by introducing slack variables in optimization. The mathematical distinction centers on margin maximization under constraints: strong hyperplanes enforce \( y_i(\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1 \) strictly, whereas weak hyperplanes relax this to \( y_i(\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1 - \xi_i \) with slack \(\xi_i \ge 0\), balancing margin width and classification tolerance.

Impact on Classification Accuracy

Strong hyperplanes, characterized by maximizing the margin between classes, significantly enhance classification accuracy by reducing generalization error and improving robustness to noise. Weak hyperplanes, which may inadequately separate classes or have smaller margins, often lead to higher misclassification rates and poorer performance on unseen data. Optimizing for strong hyperplanes in algorithms like SVM ensures more reliable and accurate prediction outcomes in diverse classification tasks.

Visualization: Distinguishing Hyperplane Strength

A strong hyperplane distinctly separates classes with a wide margin, clearly visualized as a gap empty of data points on either side, enhancing model generalization. In contrast, a weak hyperplane has a narrow or non-existent margin, often intersecting or lying very close to data points, leading to potential misclassifications. Visualization of these hyperplanes in datasets typically highlights the robustness of a strong hyperplane versus the susceptibility of a weak one to noise and overlapping classes.

Real-World Applications and Implications

Strong hyperplanes in machine learning optimize margin maximization for better generalization, crucial in support vector machines used for image recognition and bioinformatics. Weak hyperplanes, often resulting from noisy or limited data, may lead to overfitting or underperforming classifiers impacting fraud detection systems and speech recognition accuracy. Choosing a strong hyperplane improves robustness and predictive power, enhancing real-world applications in finance, healthcare diagnostics, and autonomous driving systems.

Choosing the Right Hyperplane for Optimal Performance

Choosing the right hyperplane is crucial for optimal performance in support vector machines, where a strong hyperplane maximizes the margin between classes, enhancing generalization and robustness. Weak hyperplanes may lead to smaller margins, increasing the risk of overfitting and reduced predictive accuracy, particularly in noisy or complex datasets. Selecting a strong hyperplane involves solving convex optimization problems that prioritize margin maximization, directly impacting model stability and classification effectiveness.

Strong hyperplane Infographic

libterm.com

libterm.com