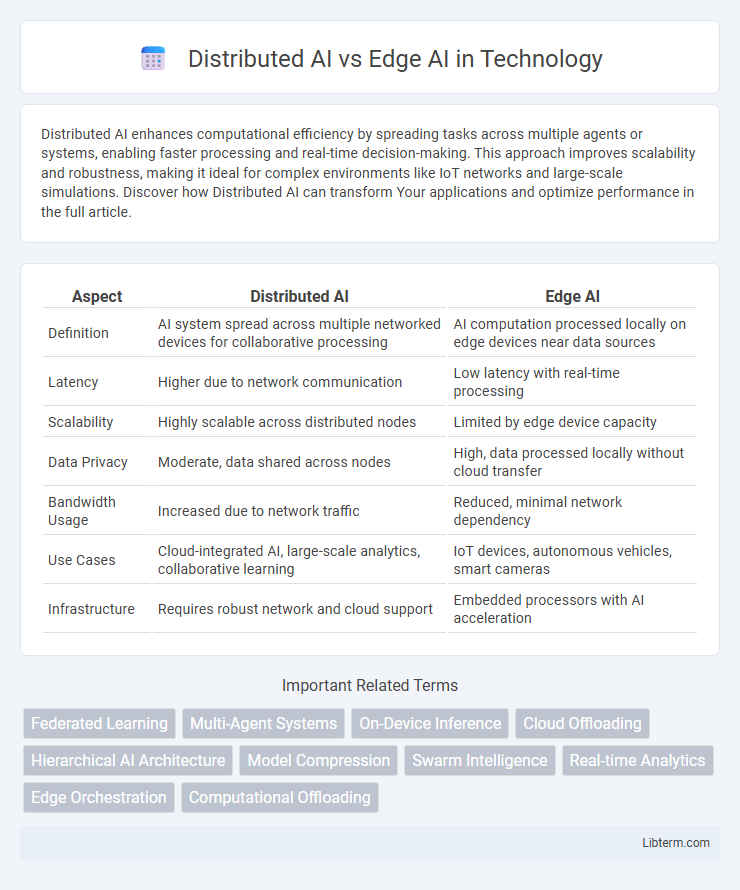

Distributed AI improves computational efficiency by spreading tasks across multiple agents or systems, which lets work proceed in parallel and supports real-time decision-making. This approach improves scalability and robustness, making it well suited to complex environments such as IoT networks and large-scale simulations.

Table of Comparison

| Aspect | Distributed AI | Edge AI |
|---|---|---|
| Definition | AI system spread across multiple networked devices for collaborative processing | AI computation processed locally on edge devices near data sources |
| Latency | Higher due to network communication | Low latency with real-time processing |
| Scalability | Highly scalable across distributed nodes | Limited by edge device capacity |
| Data Privacy | Moderate, data shared across nodes | High, data processed locally without cloud transfer |
| Bandwidth Usage | Increased due to network traffic | Reduced, minimal network dependency |
| Use Cases | Cloud-integrated AI, large-scale analytics, collaborative learning | IoT devices, autonomous vehicles, smart cameras |
| Infrastructure | Requires robust network and cloud support | Embedded processors with AI acceleration |

Introduction to Distributed AI and Edge AI

Distributed AI refers to the deployment of artificial intelligence models across multiple interconnected devices or systems, enabling collaborative processing and decision-making to enhance scalability and fault tolerance. Edge AI processes data locally on devices such as sensors, smartphones, or edge servers, reducing latency and dependence on cloud infrastructure while improving real-time performance and data privacy. Both approaches aim to optimize AI efficiency, with Distributed AI emphasizing network-wide intelligence sharing and Edge AI focusing on localized, immediate inference.

Core Principles of Distributed AI

The core principles of Distributed AI center on decentralizing intelligence across multiple interconnected agents or nodes that solve complex problems collaboratively through communication and coordination. This architecture emphasizes scalability and fault tolerance while enabling collective learning and decision-making; because data is shared across nodes, the level of privacy depends on how that exchange is designed. Distributed AI systems draw on networked resources to improve computational efficiency and robustness, which distinguishes them from centralized systems and from Edge AI running on a single device.
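Coordination through communication can be illustrated with a toy consensus routine. The sketch below is only an illustration, not a method taken from this article: `gossip_average`, the four-node ring, and the starting values are all hypothetical. Each node repeatedly replaces its value with the mean of its own value and its neighbors' values, so the group agrees on a shared estimate without a central coordinator.

```python
def gossip_average(values, neighbors, rounds=50):
    """Every round, each node averages its value with its neighbors' values."""
    current = list(values)
    for _ in range(rounds):
        updated = []
        for i, own in enumerate(current):
            group = [own] + [current[j] for j in neighbors[i]]
            updated.append(sum(group) / len(group))
        current = updated  # all nodes update at the same time
    return current


# Hypothetical ring of four nodes: each node talks only to its two neighbors.
ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
local_estimates = [10.0, 20.0, 30.0, 40.0]
agreed = gossip_average(local_estimates, ring)
```

In a ring every node has the same number of neighbors, so the values settle on the plain mean of the starting estimates. In a network where nodes have different numbers of neighbors, this simple rule settles on a degree-weighted average instead.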

Core Principles of Edge AI

Edge AI operates by processing data locally on devices such as sensors, smartphones, or IoT hardware, reducing latency and bandwidth usage compared to Distributed AI, which exchanges data among networked nodes or with cloud infrastructure. Core principles of Edge AI include real-time data analysis, privacy preservation through on-device computing, and energy efficiency by minimizing data transmission. This on-device approach enables faster decision-making and enhanced security, which is crucial for applications in autonomous vehicles, smart cities, and healthcare devices.
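A rough sketch of the on-device principle follows. It assumes a hypothetical temperature sensor and uses a simple threshold check as a stand-in for a real model; `detect_events`, `payload_bytes`, and the readings are invented for illustration. The device analyzes every reading locally and would transmit only the detected events rather than the raw stream.

```python
import json


def detect_events(readings, threshold):
    """Stand-in for on-device inference: keep (index, value) pairs above the threshold."""
    return [(i, r) for i, r in enumerate(readings) if r > threshold]


def payload_bytes(obj):
    """Size of the JSON payload that would be sent over the network."""
    return len(json.dumps(obj).encode("utf-8"))


# Hypothetical sensor readings; only two of them are anomalous.
readings = [21.0, 21.2, 20.9, 35.4, 21.1, 36.0, 21.0, 20.8]
events = detect_events(readings, threshold=30.0)

raw_size = payload_bytes(readings)   # cost of shipping everything upstream
event_size = payload_bytes(events)   # cost of shipping only what matters
```

Comparing `raw_size` with `event_size` shows the bandwidth effect in miniature: the raw readings never leave the device, and only the smaller event payload does.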

Key Differences Between Distributed AI and Edge AI

Distributed AI spreads processing across multiple networked devices, optimizing large-scale data handling and collaborative learning. Edge AI processes data locally on devices near the data source, reducing latency and enhancing real-time decision-making. The key difference is architectural scope: Distributed AI relies on coordination among nodes over the network, while Edge AI emphasizes autonomous intelligence at the network edge for faster response times.

Advantages of Distributed AI Architecture

Distributed AI architecture enhances scalability by allocating computational tasks across multiple devices or nodes, reducing the burden on any single system. This approach improves fault tolerance, as the failure of one node does not compromise the entire AI process, ensuring continuous operation. Furthermore, distributed AI optimizes resource utilization by leveraging heterogeneous hardware, enabling faster data processing and real-time decision-making across diverse environments.
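The fault-tolerance claim can be sketched with a small dispatcher. This is a simplified illustration under stated assumptions: `Node`, `NodeDown`, and `dispatch` are hypothetical names, nodes are plain in-process objects rather than networked machines, and a failure is simulated with a flag. Tasks are assigned round-robin, and when the chosen node is down the task falls through to the next node.

```python
class NodeDown(Exception):
    """Raised when a task is sent to a node that is not available."""


class Node:
    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy

    def run(self, task):
        if not self.healthy:
            raise NodeDown(self.name)
        return f"{task} done on {self.name}"


def dispatch(tasks, nodes):
    """Round-robin assignment with failover to the next node in the list."""
    results = {}
    for i, task in enumerate(tasks):
        for offset in range(len(nodes)):
            node = nodes[(i + offset) % len(nodes)]
            try:
                results[task] = node.run(task)
                break
            except NodeDown:
                continue  # try the next node
        else:
            raise RuntimeError(f"no healthy node for {task}")
    return results


# Hypothetical cluster in which node "b" has failed.
cluster = [Node("a"), Node("b", healthy=False), Node("c")]
results = dispatch(["t0", "t1", "t2"], cluster)
```

Here the task that would have gone to the failed node is picked up by the next one, so all three tasks complete. A real system would also need failure detection, retries with timeouts, and handling for tasks that were only partly executed.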

Benefits of Edge AI for Real-Time Processing

Edge AI enhances real-time processing by minimizing latency through on-device data analysis, ensuring faster decision-making than Distributed AI systems that depend on network communication. It reduces bandwidth usage and improves data privacy by keeping sensitive information local instead of transmitting it across networks. These benefits make Edge AI ideal for applications requiring immediate responsiveness, such as autonomous vehicles and industrial automation.
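The latency argument comes down to simple arithmetic: a remote result costs the remote inference time plus the network round trip, while an edge result costs only the on-device inference time. The sketch below makes that comparison explicit. The function name `choose_placement` and every number in the example are hypothetical, chosen only to illustrate the trade-off.

```python
def choose_placement(deadline_ms, edge_infer_ms, remote_infer_ms, rtt_ms):
    """Return 'edge', 'remote', or None, picking the fastest option that meets the deadline."""
    candidates = {
        "edge": edge_infer_ms,               # no network hop
        "remote": remote_infer_ms + rtt_ms,  # faster hardware, but pays the round trip
    }
    feasible = {name: ms for name, ms in candidates.items() if ms <= deadline_ms}
    if not feasible:
        return None
    return min(feasible, key=feasible.get)


# Hypothetical numbers: a slow edge chip, a fast remote server, an 80 ms round trip.
tight = choose_placement(deadline_ms=50, edge_infer_ms=30, remote_infer_ms=5, rtt_ms=80)
relaxed = choose_placement(deadline_ms=200, edge_infer_ms=150, remote_infer_ms=5, rtt_ms=80)
```

With the tight deadline only the edge option qualifies, even though the remote hardware is faster, because the round trip alone exceeds the budget. With the relaxed deadline and a slower edge model, the remote option wins.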

Use Cases: Distributed AI vs Edge AI

Distributed AI excels in large-scale applications such as smart cities, where nodes at different locations jointly analyze data on traffic patterns and energy consumption in real time. Edge AI is ideal for use cases requiring low-latency responses and privacy, including autonomous vehicles and industrial automation, because it processes data directly on devices. Both paradigms enhance decision-making efficiency but differ primarily in deployment scale and proximity to data sources.

Security and Privacy Implications

Distributed AI enhances security by decentralizing data processing across multiple nodes, reducing single points of failure and minimizing risks associated with centralized data breaches. Edge AI processes data locally on devices, significantly improving privacy by limiting data transmission to external servers and enabling real-time threat detection. Both approaches require robust encryption and access control mechanisms to protect sensitive information in dynamic and heterogeneous environments.
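One small piece of such protection is message authentication between nodes. The sketch below is an illustration only and assumes a pre-shared secret key; the helper names `sign` and `verify` and the message fields are hypothetical. It uses HMAC-SHA256 from Python's standard library so a receiver can detect tampered messages. It covers integrity and authenticity, not confidentiality: encrypting the payload (for example with TLS on the transport) would be a separate step.

```python
import hashlib
import hmac
import json


def sign(message, key):
    """Wrap a message with an HMAC-SHA256 tag computed over its canonical JSON form."""
    body = json.dumps(message, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"body": message, "tag": tag}


def verify(envelope, key):
    """Recompute the tag and compare it in constant time."""
    body = json.dumps(envelope["body"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["tag"])


# Hypothetical shared key and node message.
shared_key = b"example-key-distributed-out-of-band"
envelope = sign({"node": "n1", "value": 3}, shared_key)

# An attacker who changes the body cannot produce a matching tag without the key.
tampered = {"body": {"node": "n1", "value": 999}, "tag": envelope["tag"]}
```

Calling `verify(envelope, shared_key)` accepts the original message and rejects `tampered`. Key distribution and rotation, which this sketch ignores, are usually the harder part in heterogeneous deployments.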

Scalability and Deployment Challenges

Distributed AI offers significant scalability by leveraging multiple interconnected nodes to process data collaboratively, enabling large-scale applications across diverse environments. Edge AI faces deployment challenges due to limited computational resources and the need for real-time data processing close to the source, which can restrict scalability compared to distributed systems. Managing network latency, data privacy, and synchronization across distributed AI infrastructures remains complex, while edge AI demands optimized hardware and tailored software solutions for efficient deployment at the device level.

Future Trends in Distributed and Edge AI

Future trends in Distributed AI emphasize enhanced collaboration between decentralized nodes, leveraging federated learning to improve data privacy and computational efficiency across networks. Edge AI developments prioritize real-time analytics and reduced latency by deploying advanced neural networks directly on IoT devices and edge servers. Integration of 5G technology and AI-powered edge computing is expected to accelerate autonomous systems and smart city applications, driving innovation in both Distributed and Edge AI domains.
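Federated learning can be sketched in a few lines. The example below is a minimal, assumption-laden version of federated averaging: the model is a single weight `w` fitted to `y = w * x`, the three clients and their data are invented, and the helper names are hypothetical. Each client trains on its own data and shares only its updated weight; the server combines the weights, weighted by how many samples each client holds.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Gradient descent on mean squared error, using only this client's data."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(client_weights, client_sizes):
    """Server step: average client weights, weighted by local sample count."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


def train(clients, rounds=50, w=0.0):
    for _ in range(rounds):
        updates = [local_update(w, data) for data in clients]  # raw data stays local
        w = federated_average(updates, [len(data) for data in clients])
    return w


# Hypothetical clients whose private data all follow y = 2 * x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(1.5, 3.0), (2.5, 5.0), (0.5, 1.0)],
]
global_w = train(clients)
```

The global weight approaches 2 even though no client ever sends its `(x, y)` pairs to the server. Production systems typically add secure aggregation, client sampling, and handling for clients that drop out, none of which is shown here.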

libterm.com