Distributed computing enables multiple interconnected computers to work together, sharing resources and processing power to solve complex problems more efficiently than a single machine. This approach enhances scalability, fault tolerance, and speed, making it essential for modern applications like cloud services, big data analytics, and decentralized networks. Explore the rest of the article to understand how this technology can transform your computing capabilities and streamline operations.

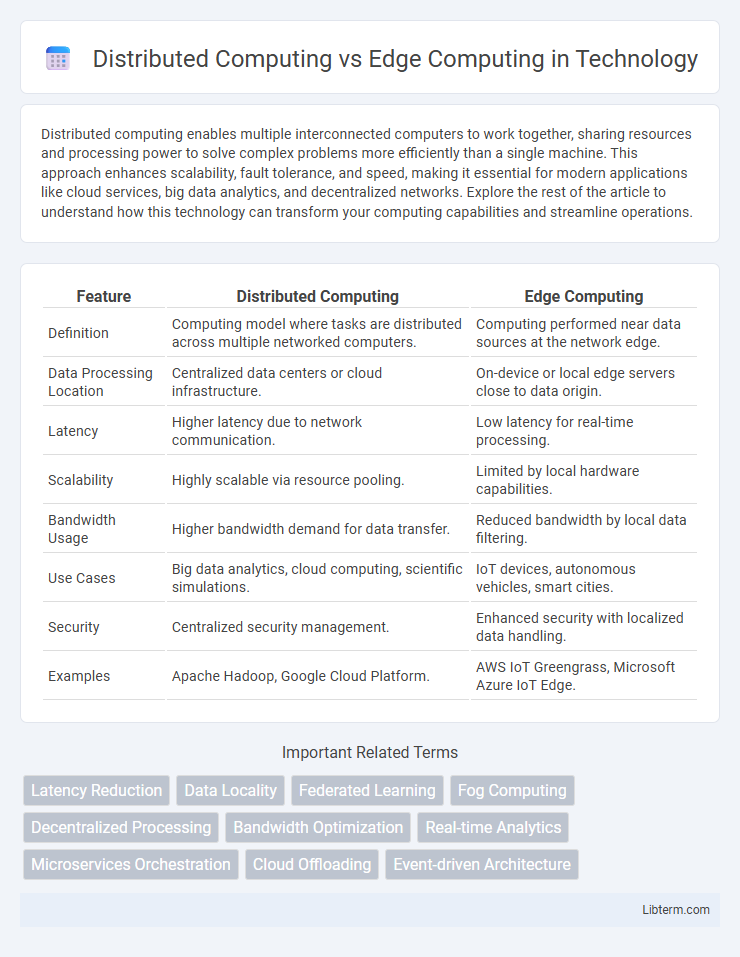

Table of Comparison

| Feature | Distributed Computing | Edge Computing |

|---|---|---|

| Definition | Computing model where tasks are distributed across multiple networked computers. | Computing performed near data sources at the network edge. |

| Data Processing Location | Centralized data centers or cloud infrastructure. | On-device or local edge servers close to data origin. |

| Latency | Higher latency due to network communication. | Low latency for real-time processing. |

| Scalability | Highly scalable via resource pooling. | Limited by local hardware capabilities. |

| Bandwidth Usage | Higher bandwidth demand for data transfer. | Reduced bandwidth by local data filtering. |

| Use Cases | Big data analytics, cloud computing, scientific simulations. | IoT devices, autonomous vehicles, smart cities. |

| Security | Centralized security management. | Enhanced security with localized data handling. |

| Examples | Apache Hadoop, Google Cloud Platform. | AWS IoT Greengrass, Microsoft Azure IoT Edge. |

Introduction to Distributed and Edge Computing

Distributed computing involves a network of interconnected computers working together to perform complex tasks, enabling resource sharing and parallel processing across multiple locations. Edge computing processes data closer to the source or end-user devices, reducing latency and bandwidth usage by minimizing reliance on centralized data centers. Both paradigms enhance system scalability and efficiency but differ in data processing locations and application scenarios.

Key Concepts: What is Distributed Computing?

Distributed computing is a model where multiple computers or nodes work together to perform complex tasks by sharing resources and coordinating processes over a network. It enables parallel processing, scalability, and fault tolerance by dividing workloads among interconnected systems. Key components include distributed databases, distributed algorithms, and communication protocols that ensure data consistency and system reliability.

Understanding Edge Computing: An Overview

Edge computing processes data closer to the source, reducing latency and bandwidth usage compared to centralized distributed computing systems. It enables real-time analytics and decision-making by leveraging local devices such as IoT sensors, gateways, and edge servers. This architecture supports scalable, efficient operations across diverse applications like autonomous vehicles, smart cities, and industrial automation.

Core Differences Between Distributed and Edge Computing

Distributed computing involves a network of interconnected computers working together to perform a task, often centralized in data centers, enhancing scalability and resource sharing across locations. Edge computing processes data closer to the data source or end-user, reducing latency and bandwidth usage by performing computations locally on devices or nearby edge servers. The core difference lies in distributed computing prioritizing broad resource pooling and parallel processing, while edge computing emphasizes localized processing to improve real-time responsiveness and reduce data transmission.

Architecture Comparison: Distributed vs Edge Computing

Distributed computing architecture involves a network of interconnected nodes that collaboratively process data across multiple locations, enhancing scalability and fault tolerance. Edge computing architecture, by contrast, decentralizes data processing by placing computational resources closer to data sources at the network's edge, reducing latency and bandwidth usage. The key difference lies in distributed computing's broad-scale resource sharing versus edge computing's localized, real-time data handling.

Use Cases for Distributed Computing

Distributed computing excels in large-scale data processing, powering applications such as cloud services, scientific simulations, and financial modeling that demand high computational capacity and fault tolerance. It enables resource sharing across geographically dispersed servers, optimizing workload distribution for enterprise applications and big data analytics. Use cases include online transaction processing, large-scale machine learning training, and global content delivery networks for efficient data handling and scalability.

Real-World Applications of Edge Computing

Edge computing processes data near its source, reducing latency and bandwidth usage compared to distributed computing's reliance on centralized data centers. Real-world applications of edge computing include autonomous vehicles, where immediate data analysis is crucial for safety, and smart cities, enabling real-time traffic management and environmental monitoring. Industrial IoT leverages edge computing to enhance machine maintenance through on-site data processing, improving efficiency and reducing downtime.

Advantages and Challenges of Distributed Computing

Distributed computing offers enhanced scalability and resource efficiency by enabling multiple machines to work on complex tasks simultaneously, improving fault tolerance through redundancy and parallel processing. Key challenges include managing data consistency across nodes, handling network latency, and ensuring robust security against cyber threats in a distributed environment. Optimal deployment requires balancing workload distribution while addressing synchronization issues and maintaining system reliability.

Benefits and Limitations of Edge Computing

Edge computing reduces latency by processing data near the source, enhancing real-time analytics and improving bandwidth efficiency through localized data handling. It offers better security by minimizing data transmission to centralized servers, yet faces limitations including limited processing power and storage capacity compared to centralized distributed computing systems. Scalability challenges arise as edge devices require consistent management, and the complexity of deploying updates across numerous edge nodes can hinder operational efficiency.

Selecting the Right Approach: When to Use Distributed or Edge Computing

Distributed computing is ideal for handling large-scale data processing across multiple centralized servers, offering scalability and resource optimization for complex applications. Edge computing excels in scenarios requiring low latency, real-time data processing, and reduced bandwidth usage by processing data close to the source, such as IoT devices and autonomous systems. Selecting the right approach depends on factors like proximity to data sources, latency sensitivity, network reliability, and the computational demands of the application.

Distributed Computing Infographic

libterm.com

libterm.com