Normalized data improves database efficiency by organizing information to minimize redundancy and maintain data integrity. This structured approach facilitates faster queries and simplifies data maintenance. Discover how normalized data can optimize your database in the rest of this article.

Table of Comparison

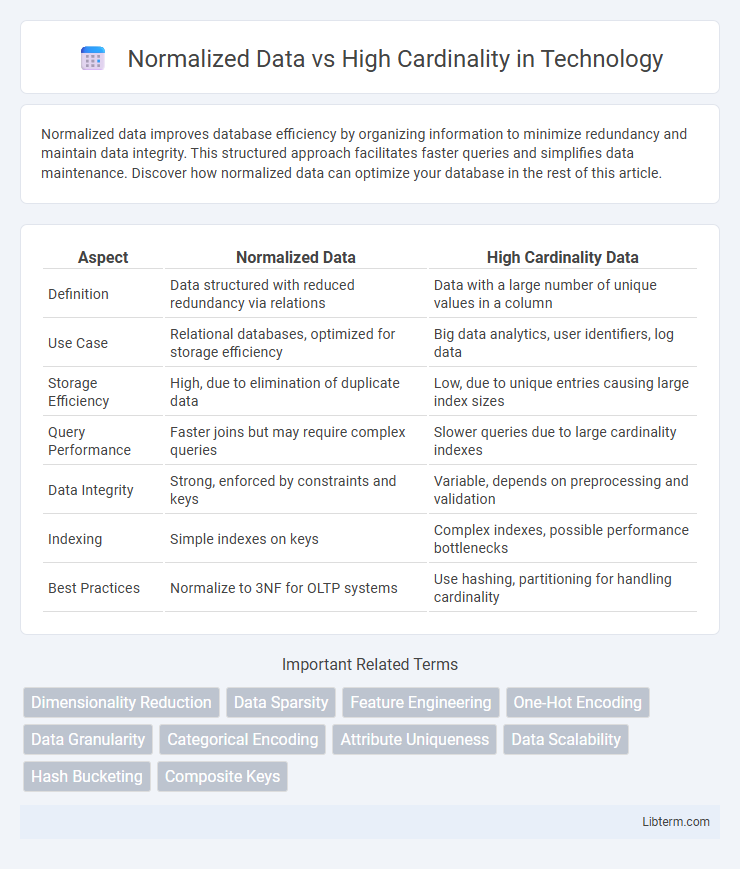

| Aspect | Normalized Data | High Cardinality Data |

|---|---|---|

| Definition | Data structured with reduced redundancy via relations | Data with a large number of unique values in a column |

| Use Case | Relational databases, optimized for storage efficiency | Big data analytics, user identifiers, log data |

| Storage Efficiency | High, due to elimination of duplicate data | Low, due to unique entries causing large index sizes |

| Query Performance | Faster joins but may require complex queries | Slower queries due to large cardinality indexes |

| Data Integrity | Strong, enforced by constraints and keys | Variable, depends on preprocessing and validation |

| Indexing | Simple indexes on keys | Complex indexes, possible performance bottlenecks |

| Best Practices | Normalize to 3NF for OLTP systems | Use hashing, partitioning for handling cardinality |

Understanding Normalized Data

Normalized data organizes information by reducing redundancy and ensuring data integrity through structured tables linked by keys. This process simplifies updates and maintains consistency, enhancing database performance and scalability. Understanding normalized data is essential for managing complex datasets where avoiding duplication and ensuring accurate relationships are critical.

Defining High Cardinality

High cardinality refers to a data attribute containing a large number of unique values, such as user IDs or email addresses, which can complicate data processing and storage. Unlike normalized data structures that minimize redundancy by dividing data into related tables, high cardinality attributes often lead to increased complexity and slower query performance. Efficient handling of high cardinality is crucial for optimizing database design and improving scalability in big data environments.

Key Differences Between Normalized Data and High Cardinality

Normalized data organizes information into related tables to reduce redundancy and improve data integrity, whereas high cardinality refers to columns with a large number of unique values, often challenging database indexing and query performance. Normalization emphasizes structure and relationships in the database schema, while high cardinality impacts data distribution and storage efficiency within those structures. Understanding these differences helps optimize database design and query optimization strategies based on data characteristics.

Advantages of Normalized Data

Normalized data reduces redundancy and enhances data integrity by organizing information into related tables with defined relationships. This structure improves query efficiency and reduces storage costs by minimizing duplicate data entries. Normalization also simplifies data maintenance and updates, ensuring consistency across large datasets compared to handling high cardinality attributes in denormalized formats.

Challenges Posed by High Cardinality

High cardinality presents significant challenges in database management by increasing index size and slowing query performance due to the vast number of unique values. It complicates data analysis and machine learning by inflating feature dimensionality, leading to overfitting and reduced model efficiency. Unlike normalized data, which reduces redundancy, high cardinality demands advanced techniques for compression and feature selection to maintain system scalability and responsiveness.

Impact on Database Performance

Normalized data reduces redundancy by organizing information into related tables, which enhances update efficiency and maintains data integrity but can increase the complexity of query execution due to multiple table joins. High cardinality columns, containing many unique values, can degrade index performance and increase search times, leading to slower query responses in large datasets. Balancing normalization with the management of high cardinality fields is critical for optimizing database performance and ensuring scalable, efficient data retrieval.

Use Cases for Normalized Data

Normalized data is essential in relational database designs where data integrity and efficient storage are priorities, such as transactional systems and financial applications. It reduces data redundancy, ensures consistency, and simplifies updates by organizing data into related tables with primary and foreign keys. Use cases include ERP systems, customer relationship management (CRM) platforms, and inventory management where maintaining accurate and up-to-date records across multiple entities is critical.

Use Cases for High Cardinality Fields

High cardinality fields, characterized by a large number of unique values, are essential in use cases such as user identification in e-commerce platforms, telemetry data in IoT devices, and detailed transaction logs in finance, where precise tracking and differentiation are critical. Normalized data structures improve query performance and data integrity but often require advanced indexing and partitioning strategies to efficiently handle the complexity and volume of high cardinality fields. Using high cardinality fields effectively supports personalized recommendations, fraud detection algorithms, and granular data analytics by enabling detailed segmentation and accurate pattern recognition.

Best Practices for Managing High Cardinality

Managing high cardinality in databases requires leveraging normalization to reduce data redundancy and improve query performance. Utilizing techniques such as surrogate keys, indexing strategies, and data partitioning effectively handles vast unique values. Employing compression algorithms and monitoring cardinality metrics ensures scalable and efficient storage solutions.

Choosing the Right Approach for Your Data Model

Choosing between normalized data and high cardinality depends on the specific requirements of your data model, such as query performance and data integrity. Normalized data reduces redundancy by organizing tables with clear relationships, which is ideal for transactional systems requiring consistency. High cardinality is suitable when handling large datasets with many unique values, but may require denormalization or indexing strategies to optimize query speed and manage complexity effectively.

Normalized Data Infographic

libterm.com

libterm.com