Low dimensionality simplifies data analysis and enhances computational efficiency by reducing the number of variables to consider. It helps uncover meaningful patterns and relationships while minimizing noise and redundancy in datasets. Explore the rest of the article to discover how low dimensionality can optimize your data processing strategies.

Table of Comparison

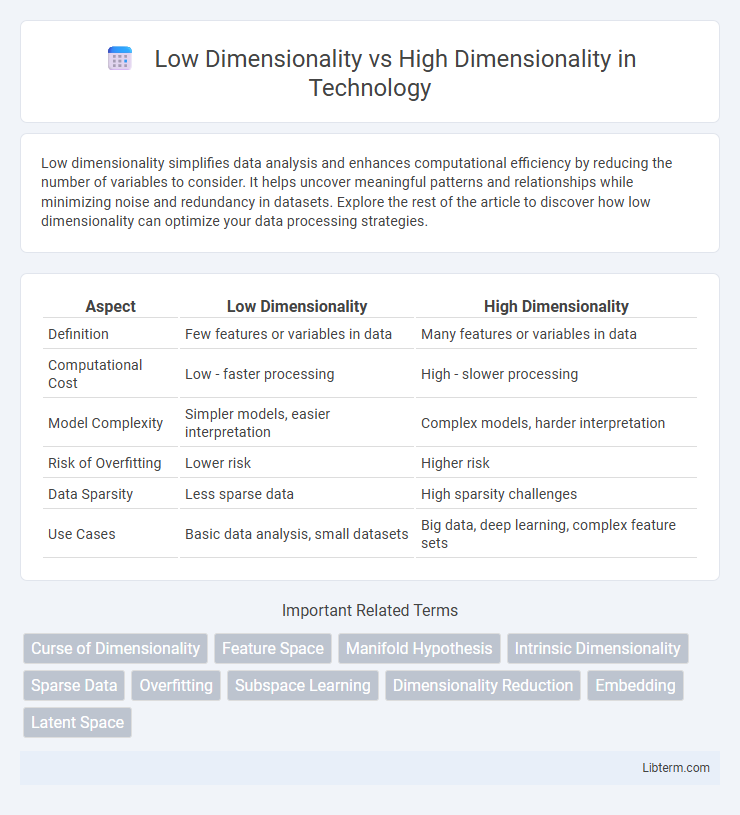

| Aspect | Low Dimensionality | High Dimensionality |

|---|---|---|

| Definition | Few features or variables in data | Many features or variables in data |

| Computational Cost | Low - faster processing | High - slower processing |

| Model Complexity | Simpler models, easier interpretation | Complex models, harder interpretation |

| Risk of Overfitting | Lower risk | Higher risk |

| Data Sparsity | Less sparse data | High sparsity challenges |

| Use Cases | Basic data analysis, small datasets | Big data, deep learning, complex feature sets |

Understanding Dimensionality: An Overview

Low dimensionality typically refers to datasets with fewer features, making visualization, analysis, and interpretation more straightforward, while high dimensionality involves numerous variables that can complicate modeling due to the "curse of dimensionality." Understanding dimensionality is crucial for selecting appropriate algorithms, as high-dimensional data might require dimensionality reduction techniques like PCA or t-SNE to enhance performance and reveal intrinsic structures. Effective handling of dimensionality impacts data quality, model accuracy, and computational efficiency across machine learning and data science applications.

Defining Low Dimensionality

Low dimensionality refers to datasets or feature spaces with a small number of variables or attributes, often making them easier to visualize and analyze. In machine learning, low-dimensional data typically have fewer than 10 features, reducing complexity and minimizing the risk of overfitting. This contrasts with high dimensionality, where datasets contain hundreds or thousands of features, leading to challenges such as the curse of dimensionality.

Defining High Dimensionality

High dimensionality refers to datasets with a large number of features or variables, often exceeding hundreds or thousands, which increases computational complexity and the risk of overfitting in machine learning models. In such spaces, data points become sparse, making it difficult to identify meaningful patterns due to the "curse of dimensionality." Effective dimensionality reduction techniques like PCA or t-SNE are essential to transform high-dimensional data into lower-dimensional representations while preserving significant structures.

Key Differences Between Low and High Dimensionality

Low dimensionality refers to datasets with fewer features, making visualization and interpretation easier, while high dimensionality involves a large number of features that can lead to the "curse of dimensionality," causing sparsity and increased computational complexity. Key differences include the impact on model performance, where low-dimensional data often requires simpler models, whereas high-dimensional data benefits from dimensionality reduction techniques like PCA to improve accuracy and avoid overfitting. In machine learning, managing high dimensionality is crucial for maintaining efficiency and generalization, whereas low dimensionality allows for faster processing and clearer insights.

Impact on Data Analysis and Machine Learning

Low dimensionality simplifies data visualization and reduces computational complexity, enabling faster model training and easier interpretation, but it may omit critical features leading to underfitting. High dimensionality captures more complex patterns and improves model expressiveness, though it often causes the curse of dimensionality, increasing overfitting risk and requiring advanced dimensionality reduction techniques such as PCA or t-SNE. Balancing dimensionality is crucial for optimizing model performance, accuracy, and generalization in machine learning tasks.

The Curse of Dimensionality Explained

The curse of dimensionality refers to the exponential increase in data volume and sparsity as the number of dimensions grows, complicating machine learning tasks and reducing model performance. In low-dimensional spaces, data points are densely packed, enabling more effective clustering, classification, and regression. High-dimensional spaces lead to overfitting, increased computational complexity, and challenges in distance measurement, necessitating dimensionality reduction techniques like PCA and t-SNE.

Visualization in Low vs High Dimensional Spaces

Visualization in low-dimensional spaces, such as 2D or 3D, allows for intuitive representation and clear understanding of data patterns, clusters, and relationships through plots or charts. High-dimensional spaces challenge direct visualization because human perception is limited to three dimensions, requiring dimensionality reduction techniques like PCA or t-SNE to project data into interpretable lower dimensions while preserving essential structure. These methods enable insightful exploration of complex datasets by maintaining variance or neighborhood fidelity, bridging the gap between high-dimensional data complexity and human cognitive capabilities.

Advantages and Challenges of Low Dimensionality

Low dimensionality reduces computational complexity, enabling faster processing and easier visualization of data patterns, which is advantageous in machine learning and data analysis. It mitigates the curse of dimensionality, improving model generalization and reducing overfitting by focusing on the most relevant features. However, dimensionality reduction may lead to loss of critical information, potentially impacting the accuracy and interpretability of predictive models.

Advantages and Challenges of High Dimensionality

High dimensionality offers advantages such as capturing complex patterns and improving model expressiveness, particularly in fields like genomics and image recognition where numerous features are essential. However, challenges include the curse of dimensionality, which leads to increased computational costs, sparse data distribution, and risk of overfitting, making dimensionality reduction techniques crucial. Methods like Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE) are often employed to mitigate these challenges while preserving meaningful data structure.

Practical Applications and Real-World Examples

Low dimensionality simplifies data visualization and enables faster processing, ideal for applications like image compression and principal component analysis in finance for risk management. High dimensionality captures complex patterns and relationships, critical in genomics for gene expression analysis, and machine learning models dealing with large feature spaces such as text classification and recommendation systems. Balancing dimensionality is key in real-world scenarios to optimize computational efficiency and model accuracy, evidenced by techniques like feature selection and dimensionality reduction in healthcare diagnostics and e-commerce personalization.

Low Dimensionality Infographic

libterm.com

libterm.com