Accuracy describes how often a model, tool, or process produces correct results, which matters for decision-making and everyday tasks alike. High accuracy means fewer errors and more trust in the data and tools you rely on, but it is not the only way to judge a predictive model. The rest of this article compares accuracy with hit rate and explains when each metric is the better guide.

Comparison Table

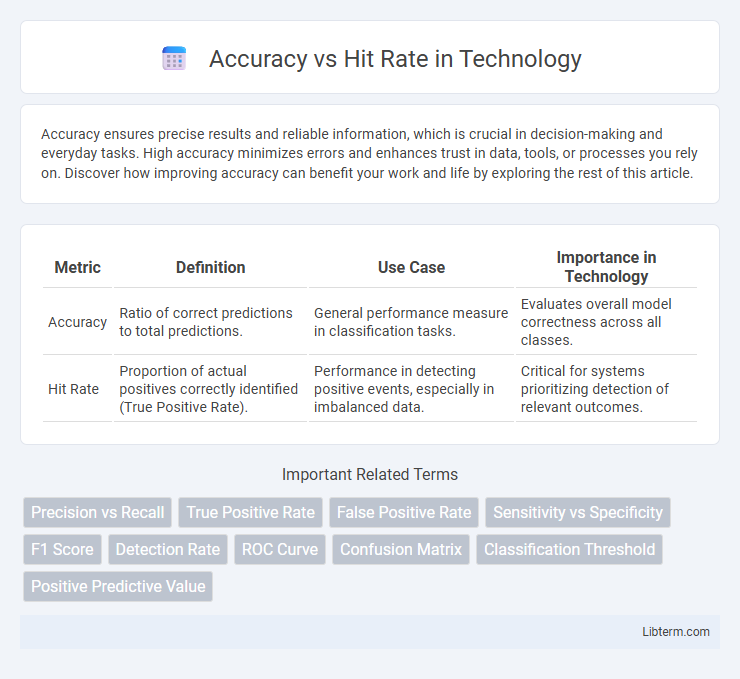

| Metric | Definition | Typical Use Case | Why It Matters |
|---|---|---|---|
| Accuracy | Share of all predictions that are correct: (TP + TN) / (TP + TN + FP + FN). | General performance measure in classification tasks, most reliable when classes are balanced. | Summarizes overall model correctness across all classes. |
| Hit Rate | Share of actual positives the model correctly identifies: TP / (TP + FN), also called recall or true positive rate. | Detecting positive events, especially in imbalanced data. | Critical for systems that must not miss relevant outcomes. |

Understanding Accuracy and Hit Rate

Accuracy measures the proportion of correct predictions out of all predictions made, providing a broad view of a model's overall performance. Hit rate focuses on how well relevant results are captured: in classification it is another name for recall, the fraction of actual positives the model detects, and in recommendation systems it commonly means the fraction of users (or queries) for which a relevant item appears among the top K recommendations, often written HR@K. Understanding the distinction helps in selecting the appropriate metric for the specific goals of a predictive or retrieval task.
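The recommendation-system sense of hit rate can be sketched in a few lines of Python. This is a minimal illustration assuming a leave-one-out style evaluation, where each user has a small set of held-out relevant items; the function `hit_rate_at_k` and the toy data are hypothetical, not part of any library.

```python
from typing import Dict, List, Set


def hit_rate_at_k(recommendations: Dict[str, List[str]],
                  relevant: Dict[str, Set[str]],
                  k: int) -> float:
    """Fraction of users with at least one relevant item in their top-k list."""
    if not recommendations:
        raise ValueError("no users to evaluate")
    hits = 0
    for user, ranked_items in recommendations.items():
        top_k = ranked_items[:k]
        # A hit is counted once per user, however many relevant items appear.
        if any(item in relevant.get(user, set()) for item in top_k):
            hits += 1
    return hits / len(recommendations)


# Hypothetical ranked recommendations and held-out relevant items.
recs = {
    "alice": ["a", "b", "c"],
    "bob": ["d", "e", "f"],
    "carol": ["g", "h", "i"],
}
held_out = {"alice": {"b"}, "bob": {"z"}, "carol": {"i"}}

print(hit_rate_at_k(recs, held_out, k=2))  # only alice hits in her top 2
print(hit_rate_at_k(recs, held_out, k=3))  # alice and carol hit in their top 3
```

Increasing K can only keep or raise the hit rate, since a longer list has more chances to include a relevant item.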

Defining Accuracy in Data Analysis

Accuracy in data analysis measures the proportion of true results, both true positives and true negatives, among the total number of cases examined. It quantifies how often a model correctly predicts or classifies data points, making it a key metric for overall performance evaluation. High accuracy indicates strong predictive reliability but may be misleading in imbalanced datasets where one class dominates.
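As a quick illustration, accuracy can be computed directly from paired true and predicted labels. The helper below is a hypothetical sketch in plain Python, not a library API; scikit-learn's `accuracy_score` does the same job.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of predictions that match the true label (TP + TN over all cases)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy of an empty set")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


# Hypothetical labels: 1 = positive, 0 = negative.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
print(accuracy(y_true, y_pred))  # 6 of 8 correct -> 0.75
```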

Explaining Hit Rate: What Does It Measure?

Hit rate measures the proportion of actual positive instances that a system correctly detects. It reflects how well the system recognizes relevant events, such as true positives in diagnostic tests or correct recommendations in prediction models. A high hit rate shows that few positives are missed, but the metric ignores false positives entirely, so it should be read alongside a measure such as precision.
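A matching sketch for hit rate counts only the actual positives. The function name and the labels are hypothetical; the example deliberately includes one false positive to show that it has no effect on the result.

```python
from typing import Sequence


def hit_rate(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """True positive rate: TP / (TP + FN), the share of actual positives caught."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    if tp + fn == 0:
        raise ValueError("no actual positives; hit rate is undefined")
    return tp / (tp + fn)


# Hypothetical labels: four positives followed by four negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]  # the false positive at index 4 is ignored
print(hit_rate(y_true, y_pred))  # 2 of 4 positives caught -> 0.5
```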

Key Differences Between Accuracy and Hit Rate

Accuracy measures the proportion of correct predictions out of all predictions made, reflecting overall model performance across both positive and negative classes. Hit rate, also known as recall or true positive rate, measures how many of the actual positive instances the model identifies, so it looks only at the positive class. The key difference is how the two respond to class imbalance: accuracy can look high even when most positives are missed, because correct predictions on the abundant negative class dominate it, whereas hit rate reports directly how well the positive class is detected.
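In terms of confusion-matrix counts (true positives TP, true negatives TN, false positives FP, false negatives FN), the two metrics are written below, followed by a worked example with invented counts: 1,000 cases, 50 of them positive, where the model catches 10 positives (TP = 10, FN = 40) and raises 10 false alarms (FP = 10, TN = 940).

```latex
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Hit Rate} &= \frac{TP}{TP + FN} \\
\text{Accuracy} &= \frac{10 + 940}{10 + 940 + 10 + 40} = 0.95, &
\text{Hit Rate} &= \frac{10}{10 + 40} = 0.20
\end{aligned}
```

Accuracy looks excellent because the 940 true negatives dominate the numerator, while hit rate shows that four out of five positives are missed.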

When to Use Accuracy vs Hit Rate

Accuracy is best used when class distributions are balanced and the cost of false positives and false negatives is similar, providing a straightforward measure of overall correctness. Hit rate, or recall, is preferable in imbalanced datasets or when identifying all relevant instances is critical, such as fraud detection or medical diagnosis. Choosing between accuracy and hit rate depends on the specific context and the relative importance of false negatives versus false positives.
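One way to make the relative importance of false negatives versus false positives concrete is to attach a cost to each error type. In the hypothetical sketch below, the confusion counts for two models and the per-error costs are invented; the point is only that the preferred model can flip once missed positives become expensive.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int
    fp: int
    tn: int
    fn: int

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    @property
    def hit_rate(self) -> float:
        return self.tp / (self.tp + self.fn)


def total_cost(c: Confusion, cost_fn: float, cost_fp: float) -> float:
    """Total error cost, assuming a fixed cost per false negative and per false positive."""
    return c.fn * cost_fn + c.fp * cost_fp


# Hypothetical results of two models on the same 1,000 cases (100 positives).
model_a = Confusion(tp=60, fp=10, tn=890, fn=40)  # accuracy 0.95, hit rate 0.60
model_b = Confusion(tp=90, fp=80, tn=820, fn=10)  # accuracy 0.91, hit rate 0.90

for cost_fn, cost_fp in [(1.0, 1.0), (20.0, 1.0)]:
    name, _ = min(("A", model_a), ("B", model_b),
                  key=lambda pair: total_cost(pair[1], cost_fn, cost_fp))
    print(f"cost_fn={cost_fn}, cost_fp={cost_fp}: prefer model {name}")
```

With equal costs the higher-accuracy model A wins (50 versus 90), but when a missed positive costs 20 times a false alarm, model B's higher hit rate makes it far cheaper (280 versus 810).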

Common Misconceptions About Accuracy and Hit Rate

Accuracy and hit rate are often confused when evaluating model performance. Accuracy is the proportion of correct results (true positives and true negatives) among all cases, while hit rate is the fraction of actual positives that the model correctly identifies. A common misconception is that high accuracy always indicates good performance. In an imbalanced dataset, a model can achieve high accuracy simply by predicting the majority class every time, and its hit rate then reveals that it detects no positive instances at all. Judging a model by accuracy alone, without checking hit rate, can therefore overstate its effectiveness, especially in fraud detection or medical diagnosis, where identifying the positive class is the main goal.
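The majority-class trap is easy to reproduce. The sketch below assumes a hypothetical dataset of 1,000 transactions with 10 fraud cases and a "model" that always predicts the negative class.

```python
# Hypothetical imbalanced dataset: 10 fraud cases (1) among 1,000 transactions.
y_true = [1] * 10 + [0] * 990

# A trivial "model" that always predicts the majority (negative) class.
y_pred = [0] * len(y_true)

correct = sum(t == p for t, p in zip(y_true, y_pred))
tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
positives = sum(y_true)

print(f"accuracy = {correct / len(y_true):.2f}")  # 0.99
print(f"hit rate = {tp / positives:.2f}")         # 0.00
```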

Practical Applications in Various Fields

Accuracy, the proportion of correctly classified instances among all cases, is a useful headline figure for overall model performance in fields such as healthcare diagnostics and fraud detection, although in these typically imbalanced settings it must be interpreted with care. Hit rate, or recall, focuses on detecting relevant positive cases, which is crucial in applications such as cybersecurity threat detection and marketing campaign targeting, where missing a critical instance can have significant consequences. Weighing both metrics together helps decision-making systems in areas like finance and autonomous driving stay reliable overall while remaining sensitive to the positive cases that matter.

Limitations of Accuracy and Hit Rate Metrics

Accuracy often fails to reflect true model performance on imbalanced datasets, because it can be misleadingly high when the majority class dominates. Hit rate, or recall, measures the proportion of actual positives correctly identified but ignores false positives, so a model that flags every case as positive achieves a perfect hit rate. Accuracy depends heavily on the class distribution, and hit rate covers only the positive class; neither, used alone, gives a complete view of model robustness or precision.
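The false-positive blind spot of hit rate shows up just as clearly. This hypothetical sketch uses an imbalanced label set (10 positives in 1,000 cases) and a classifier that flags every case as positive, then reports precision alongside hit rate; the helper name is illustrative only.

```python
from typing import Sequence, Tuple


def hit_rate_and_precision(y_true: Sequence[int],
                           y_pred: Sequence[int]) -> Tuple[float, float]:
    """Return (hit rate, precision) for binary labels where 1 is positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    hit_rate = tp / (tp + fn) if tp + fn else float("nan")
    precision = tp / (tp + fp) if tp + fp else float("nan")
    return hit_rate, precision


y_true = [1] * 10 + [0] * 990
always_positive = [1] * len(y_true)  # flags every case as positive
hr, prec = hit_rate_and_precision(y_true, always_positive)
print(f"hit rate = {hr:.2f}, precision = {prec:.2f}")  # hit rate = 1.00, precision = 0.01
```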

Improving Model Performance: Accuracy vs Hit Rate

Improving model performance starts with understanding the difference between the two metrics: accuracy measures the proportion of correct predictions over all predictions, capturing overall correctness, while hit rate measures the share of actual positive instances that the model identifies. Raising hit rate (that is, recall) matters most in applications like fraud detection or medical diagnosis, whereas accuracy provides a broad view that can be misleading on imbalanced datasets. Techniques such as threshold tuning, precision-recall trade-off analysis, and customized loss functions can help a model capture more of the critical positive outcomes, usually at some cost in precision, which supports more effective decision-making.
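Threshold tuning, the first technique mentioned above, can be sketched by sweeping the decision threshold over a model's scores and recording hit rate and precision at each setting. The scores, labels, and the `sweep_thresholds` helper are hypothetical, and the sketch does not cover customized loss functions.

```python
from typing import List, Sequence, Tuple


def sweep_thresholds(y_true: Sequence[int], scores: Sequence[float],
                     thresholds: Sequence[float]) -> List[Tuple[float, float, float]]:
    """For each threshold, return (threshold, hit_rate, precision)."""
    positives = sum(y_true)
    if positives == 0:
        raise ValueError("no actual positives; hit rate is undefined")
    results = []
    for thr in thresholds:
        # Predict positive whenever the score reaches the threshold.
        preds = [1 if s >= thr else 0 for s in scores]
        tp = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 1)
        predicted_pos = sum(preds)
        hit_rate = tp / positives
        precision = tp / predicted_pos if predicted_pos else float("nan")
        results.append((thr, hit_rate, precision))
    return results


# Hypothetical model scores for 8 cases (1 = positive).
y_true = [1, 1, 1, 0, 1, 0, 0, 0]
scores = [0.95, 0.80, 0.55, 0.50, 0.35, 0.30, 0.20, 0.10]

for thr, hr, prec in sweep_thresholds(y_true, scores, [0.7, 0.5, 0.3]):
    print(f"threshold={thr:.1f}  hit rate={hr:.2f}  precision={prec:.2f}")
```

Lowering the threshold raises hit rate from 0.50 to 1.00 while precision falls from 1.00 to about 0.67, which is the precision-recall trade-off in miniature.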

Selecting the Right Metric for Your Project

Accuracy measures the share of all predictions that are correct, which makes it suitable for balanced datasets but potentially misleading in imbalanced ones. Hit rate focuses on the proportion of relevant items successfully identified, which suits recommendation systems and ranking tasks where capturing true positives is critical. The right metric depends on your project's goal: use accuracy for general classification tasks with balanced classes, and prefer hit rate when evaluating retrieval effectiveness or when missing positive outcomes is costly.

libterm.com