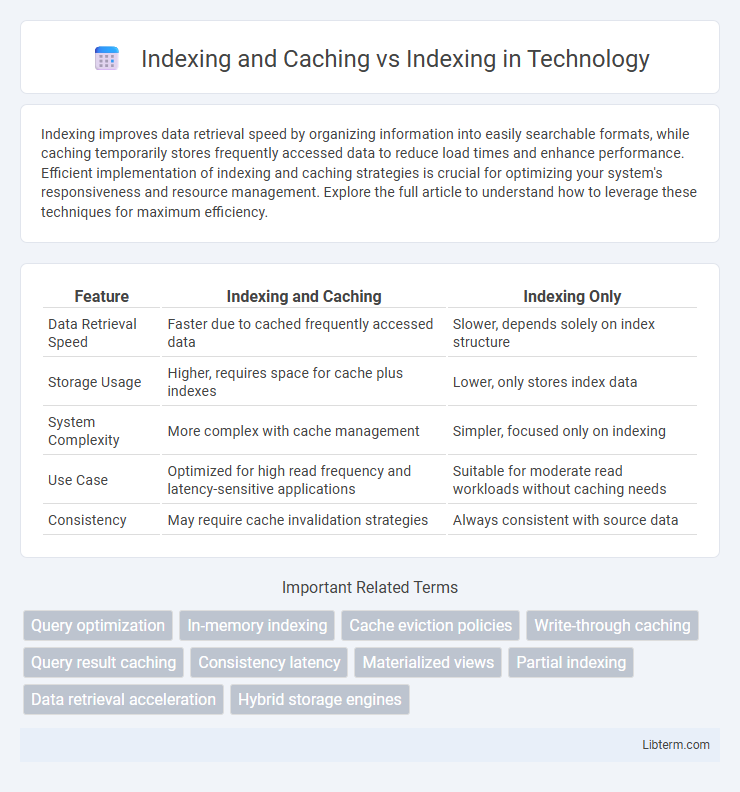

Indexing improves data retrieval speed by organizing information into easily searchable structures, while caching temporarily stores frequently accessed data to reduce load times. Implementing both well is crucial for your system's responsiveness and resource management.

Table of Comparison

| Feature | Indexing and Caching | Indexing Only |
|---|---|---|
| Data Retrieval Speed | Faster due to cached frequently accessed data | Slower, depends solely on index structure |
| Storage Usage | Higher, requires space for cache plus indexes | Lower, only stores index data |
| System Complexity | More complex with cache management | Simpler, focused only on indexing |
| Use Case | Optimized for high read frequency and latency-sensitive applications | Suitable for moderate read workloads without caching needs |
| Consistency | May require cache invalidation strategies | Always consistent with source data |

Understanding Indexing: Core Concepts

Indexing involves creating data structures that speed up database queries by allowing rapid data retrieval without scanning the entire dataset. Caching complements indexing by temporarily storing frequently accessed data in memory, reducing access time during repeated queries. Understanding core indexing concepts such as B-trees, hash indexes, and indexing strategies is essential for optimizing database performance and query efficiency.
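As a rough illustration of the two index types named above, the sketch below builds a hash index and an ordered index over a small in-memory table. The rows, field layout, and function names are hypothetical, and the sorted list searched with `bisect` only stands in for a B-tree; it is not how a database engine stores one.

```python
import bisect

# Hypothetical sample rows: (id, name, age)
rows = [
    (1, "ada", 36),
    (2, "linus", 54),
    (3, "grace", 45),
    (4, "alan", 41),
]

def full_scan(name):
    """Baseline without an index: inspect every row."""
    return [row for row in rows if row[1] == name]

# Hash index: maps a key to the positions of matching rows.
# Well suited to equality lookups, not to range queries.
hash_index = {}
for pos, row in enumerate(rows):
    hash_index.setdefault(row[1], []).append(pos)

def lookup_by_name(name):
    return [rows[pos] for pos in hash_index.get(name, [])]

# Ordered index: sorted (age, position) pairs, a stand-in for a B-tree.
# Keeping keys sorted makes range queries a binary search plus a slice.
age_index = sorted((row[2], pos) for pos, row in enumerate(rows))
age_keys = [age for age, _ in age_index]

def lookup_age_range(low, high):
    """Return rows with low <= age <= high, in age order."""
    start = bisect.bisect_left(age_keys, low)
    end = bisect.bisect_right(age_keys, high)
    return [rows[pos] for _, pos in age_index[start:end]]

by_name = lookup_by_name("grace")
by_age = lookup_age_range(40, 50)
```

Both lookups return the same rows a full scan would, but they touch only the index entries they need instead of every row.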

The Role of Caching in Data Retrieval

Caching enhances indexing by temporarily storing frequently accessed data, significantly reducing retrieval times and server load. While indexing organizes data for quick searches, caching directly serves repeated queries from fast memory locations, improving performance and user experience. Effective caching strategies complement indexing to optimize overall data retrieval efficiency in database systems.
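A minimal sketch of serving repeated queries from memory, using Python's standard `functools.lru_cache`. The `fetch_from_database` function is a hypothetical stand-in for an indexed database lookup, and the counter exists only to show how often the slow path actually runs.

```python
from functools import lru_cache

backend_calls = 0  # counts how often the slow path is actually taken

def fetch_from_database(user_id):
    """Hypothetical stand-in for an indexed database lookup."""
    global backend_calls
    backend_calls += 1
    return {"id": user_id, "name": f"user-{user_id}"}

@lru_cache(maxsize=128)
def get_user(user_id):
    # The first call per user_id hits the backend; repeats come from memory.
    return fetch_from_database(user_id)

get_user(1)
get_user(1)  # served from the cache
get_user(2)

info = get_user.cache_info()  # hits, misses, maxsize, currsize
```

Note that `lru_cache` hands back the same object on every hit, so callers that mutate the returned dictionary would also change the cached copy.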

Indexing Alone: Strengths and Limitations

Indexing alone speeds up data retrieval by organizing information into structures such as B-trees or hash tables, so lookups do not have to scan the entire dataset. Its strengths are reduced search time and better query performance, particularly in read-heavy environments where updates are infrequent. Its limitations show up with large, frequently changing data: every insert, update, or delete must also update the affected indexes, so index maintenance can become resource-intensive. Indexing also does nothing to avoid fetching the same data again and again, which is the problem caching addresses.
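To make "lookups without scanning the entire dataset" concrete, the sketch below uses Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN` to compare the plan for the same query before and after adding an index. The `orders` table, its columns, and the index name are made up for illustration, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 50, float(i)) for i in range(1000)],
)

query = "SELECT id, total FROM orders WHERE customer_id = ?"

def plan(sql, params):
    """Return SQLite's query plan description as a single string."""
    plan_rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " ".join(row[-1] for row in plan_rows)  # last column holds the detail text

before = plan(query, (7,))  # no index yet: the planner scans the table

conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")

after = plan(query, (7,))   # the planner can now search via the index

matches = conn.execute(query, (7,)).fetchall()
```

Printing `before` and `after` should show a table scan turning into an index search. The index does not come for free: each later insert into `orders` also has to update `idx_orders_customer`.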

How Caching Enhances Indexing Performance

Caching significantly enhances indexing performance by temporarily storing frequently accessed index data in faster storage, reducing direct access to slower disk-based indexes. This optimization minimizes latency and speeds up query processing, especially in large-scale databases where index traversal is resource-intensive. The synergy between indexing and caching results in accelerated data retrieval and improved overall system responsiveness.
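The idea of keeping hot index data in faster storage can be sketched as a small least-recently-used page cache placed in front of a slow read function. `PageCache` and `read_page_from_disk` are hypothetical names for this example; real database engines use more elaborate buffer management.

```python
from collections import OrderedDict

class PageCache:
    """Keeps the most recently used index pages in memory (LRU eviction)."""

    def __init__(self, capacity, read_page):
        self.capacity = capacity
        self.read_page = read_page  # slow path, e.g. a disk read
        self.pages = OrderedDict()  # page_id -> page, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, page_id):
        if page_id in self.pages:
            self.hits += 1
            self.pages.move_to_end(page_id)  # mark as most recently used
            return self.pages[page_id]
        self.misses += 1
        page = self.read_page(page_id)
        self.pages[page_id] = page
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)   # evict the least recently used page
        return page

disk_reads = []

def read_page_from_disk(page_id):
    """Hypothetical slow read; records which pages were fetched."""
    disk_reads.append(page_id)
    return f"index-page-{page_id}"

cache = PageCache(capacity=2, read_page=read_page_from_disk)
for page_id in [0, 1, 0, 2, 0, 1]:
    cache.get(page_id)
```

With room for only two pages, the frequently used page 0 stays in memory while pages 1 and 2 take turns being evicted, so six lookups cause four slow reads instead of six.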

Indexing and Caching: Key Differences

Indexing and caching are critical techniques in data management, where indexing organizes data for efficient retrieval using structured keys, while caching stores frequently accessed data in faster storage to reduce access latency. Indexing improves query performance by enabling rapid data location through B-trees or hash maps, whereas caching enhances performance by temporarily holding data closer to the processor or application layer. The key difference lies in their scope: indexing optimizes data search paths within databases, and caching optimizes access speed by minimizing repeated data retrieval operations.

When to Use Only Indexing

Indexing alone is a good fit for static or infrequently updated datasets where query performance matters but the overhead of a cache is not justified. An index speeds up retrieval without adding a separate copy of the data that must be kept in sync. Rely on indexing alone in environments with limited memory, or when real-time data accuracy outweighs the benefits of cached results.

Scenarios Favoring Combined Indexing and Caching

Combined indexing and caching significantly enhance read-heavy applications by reducing latency and improving query performance through faster data retrieval. Scenarios involving frequent access to large datasets, such as e-commerce platforms or content delivery networks, benefit from cached indexes that minimize database load and accelerate response times. High-concurrency environments also favor this approach, as caching reduces repeated disk I/O operations while indexing ensures efficient search and sorting of data.

Performance Comparison: Indexing vs Indexing with Caching

Indexing with caching improves query response times by keeping frequently accessed data in fast memory, which reduces disk I/O compared to standalone indexing. A cache hit also avoids traversing the index structure again, lowering latency and CPU utilization during data retrieval. Systems that combine indexing and caching are reported to achieve up to 70% faster query execution than those relying solely on indexing, although the actual gain depends on the cache hit rate and the workload.
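How much caching helps depends largely on how often a request is served from the cache. The sketch below uses a simple model in which a hit costs one cache lookup and a miss costs a cache lookup plus an index lookup. The latency figures are illustrative assumptions, not measurements.

```python
def expected_latency_ms(hit_rate, cache_ms, index_ms):
    """Average latency when a miss pays for the cache check and the index lookup."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    hit_cost = cache_ms
    miss_cost = cache_ms + index_ms
    return hit_rate * hit_cost + (1.0 - hit_rate) * miss_cost

# Assumed figures: 0.1 ms for a cache lookup, 5 ms for an indexed disk lookup.
index_only = 5.0
with_cache = expected_latency_ms(hit_rate=0.8, cache_ms=0.1, index_ms=5.0)
no_hits = expected_latency_ms(hit_rate=0.0, cache_ms=0.1, index_ms=5.0)
```

Under these assumptions an 80% hit rate brings the average down from 5 ms to roughly 1.1 ms, while a cache that never hits makes every request slightly slower than indexing alone.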

Best Practices for Implementing Indexing and Caching

Implementing indexing and caching enhances database performance by reducing query response times and minimizing server load. Best practices include creating indexes on frequently queried columns, using composite indexes for complex queries, and avoiding over-indexing to prevent increased storage and maintenance overhead. Efficient caching strategies involve setting appropriate cache expiration policies, leveraging in-memory caches for hot data, and regularly monitoring cache hit rates to optimize system performance.
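The caching practices above (expiration policies, in-memory storage for hot data, hit-rate monitoring) can be combined in a small sketch. `TTLCache`, the `load_price` loader, and the 30-second lifetime are assumptions made for this example, and the injectable clock is there only so expiry can be shown without waiting.

```python
import time

class TTLCache:
    """In-memory cache with per-entry expiry and hit-rate tracking."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get(self, key, loader):
        now = self.clock()
        entry = self.entries.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        # Missing or expired: reload from the source and store a fresh copy.
        self.misses += 1
        value = loader(key)
        self.entries[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key):
        """Drop an entry after the underlying data changes."""
        self.entries.pop(key, None)

    def hit_rate(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

fake_now = [0.0]               # controllable clock for the demonstration
prices = {"sku-1": 10}         # hypothetical source of truth

def load_price(key):
    return prices[key]

cache = TTLCache(ttl_seconds=30, clock=lambda: fake_now[0])
first = cache.get("sku-1", load_price)    # miss: loaded from the source
second = cache.get("sku-1", load_price)   # hit: served from memory
prices["sku-1"] = 12
cache.invalidate("sku-1")                 # explicit invalidation on write
third = cache.get("sku-1", load_price)    # miss: sees the new value
fake_now[0] = 31.0
fourth = cache.get("sku-1", load_price)   # miss: entry has expired
```

Watching `hit_rate()` over time is one way to judge whether the chosen lifetime and cache size suit the workload. A persistently low rate suggests the cache is adding complexity without much benefit.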

Choosing the Right Strategy for Your Application

Choosing between indexing with caching and indexing alone depends on your application's data retrieval patterns and performance needs. Indexing improves query speed by organizing data for quick search, while caching temporarily stores frequently accessed data to reduce database load and latency. Applications with high read volumes and repetitive queries benefit most from combining both strategies for optimal response time and system efficiency.

Indexing and Caching Infographic

libterm.com