Throughput measures the rate at which a system processes or transmits data, and it is a key indicator of overall efficiency and performance. High throughput lets networks and computing systems move more data in the same amount of time, which supports faster transfers and a smoother user experience in digital communications. The rest of this article compares throughput with miss rate, a cache metric that strongly affects how much throughput a system can achieve.

Comparison Table

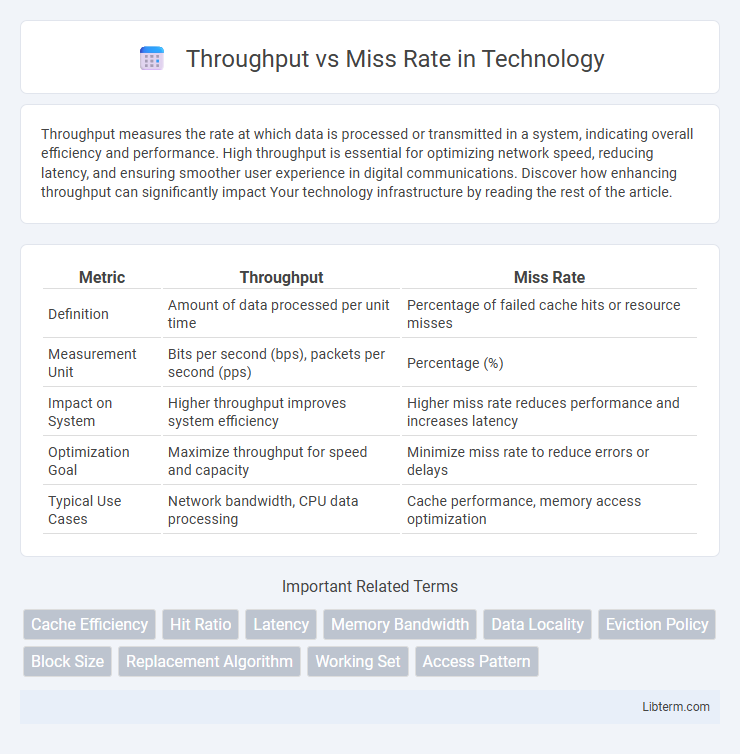

| Metric | Throughput | Miss Rate |
|---|---|---|
| Definition | Amount of data or work processed per unit time | Fraction of cache (or other lookup) accesses that do not find the requested data |
| Measurement Unit | Bits per second (bps), packets per second (pps), instructions per cycle (IPC), transactions per second | Percentage (%) or ratio of misses to total accesses |
| Impact on System | Higher throughput means more work completed in the same time | Higher miss rate increases average access latency and reduces performance |
| Optimization Goal | Maximize throughput for speed and capacity | Minimize miss rate to reduce delays from slower memory |
| Typical Use Cases | Network bandwidth, CPU data processing | Cache performance, memory access optimization |

Introduction to Throughput and Miss Rate

Throughput refers to the rate at which a system processes data or completes tasks within a specific time frame, commonly measured in instructions per cycle (IPC) or data packets per second. Miss rate, in the context of cache memory, quantifies how often data requests fail to find the required data in the cache, typically expressed as a percentage or as the ratio of missed accesses to total accesses. Understanding the interplay between the two is crucial for optimizing system performance, because a higher miss rate sends more requests to slower memory and can significantly reduce effective throughput.
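
Written as formulas, the two definitions in this paragraph look like this. The symbols N_hit and N_miss (hit and miss counts) are introduced here for illustration:

```latex
\begin{aligned}
\text{miss rate} &= \frac{N_{\text{miss}}}{N_{\text{hit}} + N_{\text{miss}}}, \qquad \text{hit rate} = 1 - \text{miss rate} \\
\text{throughput} &= \frac{\text{work completed (instructions, packets, bytes)}}{\text{elapsed time}}, \qquad \text{IPC} = \frac{\text{instructions retired}}{\text{clock cycles}}
\end{aligned}
```

For example, 20 misses out of 1,000 accesses gives a miss rate of 20 / 1000 = 0.02, or 2%.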

Defining Throughput in System Performance

Throughput measures the amount of data or work a system processes in a given time frame, reflecting its efficiency and speed. It quantifies how many tasks or transactions a system can handle per second, which shapes overall system performance. High throughput indicates effective resource utilization, but it is distinct from latency, the time a single task takes: a system can sustain high throughput while individual requests still wait, so both metrics matter when optimizing computing workloads and network operations.
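
As a rough illustration of "tasks handled per second", the sketch below times a batch of work with Python's `time.perf_counter` and divides the number of completed tasks by the elapsed wall-clock time. The helper name `measure_throughput` and the sample workload are hypothetical. A single sequential loop like this also measures interpreter overhead, so the result is best treated as a relative comparison rather than a hardware figure.

```python
import time
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def measure_throughput(process: Callable[[T], object], items: Iterable[T]) -> float:
    """Return tasks completed per second when `process` is applied to each item in turn."""
    start = time.perf_counter()
    count = 0
    for item in items:
        process(item)
        count += 1
    elapsed = time.perf_counter() - start
    # Guard against a zero-length interval on extremely fast runs.
    return count / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Hypothetical workload: 500 tasks, each summing 10,000 integers.
    rate = measure_throughput(lambda n: sum(range(n)), [10_000] * 500)
    print(f"{rate:.0f} tasks/s")
```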

Understanding Miss Rate in Computing

Miss rate in computing refers to the percentage of cache accesses that fail to find the requested data, triggering slower fetches from the next level of the memory hierarchy. A lower miss rate typically improves system throughput by reducing average memory access latency and making memory operations more efficient. Optimizing cache architecture and algorithms is therefore essential for minimizing miss rates and maximizing overall computing performance.
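
To make the definition concrete, here is a minimal sketch of a direct-mapped cache simulator that counts misses over a list of byte addresses. The function name, its parameters (`num_lines`, `block_size`), and the direct-mapped organization are illustrative assumptions, not a description of any particular hardware; each counted miss stands in for a slower fetch from main memory.

```python
from typing import Sequence, Tuple


def direct_mapped_miss_rate(addresses: Sequence[int], num_lines: int,
                            block_size: int) -> Tuple[int, float]:
    """Simulate a direct-mapped cache and return (misses, miss_rate)."""
    tags = [None] * num_lines  # the tag currently stored in each cache line
    misses = 0
    for addr in addresses:
        block = addr // block_size   # memory block that contains this address
        index = block % num_lines    # the only line this block may occupy
        tag = block // num_lines     # distinguishes blocks sharing that line
        if tags[index] != tag:
            misses += 1              # miss: the block must come from slower memory
            tags[index] = tag        # install it, replacing whatever was there
    total = len(addresses)
    return misses, (misses / total if total else 0.0)


if __name__ == "__main__":
    print(direct_mapped_miss_rate(list(range(64)), num_lines=4, block_size=16))
    print(direct_mapped_miss_rate([0, 64, 0, 64], num_lines=4, block_size=16))
```

For a sequential scan of 64 bytes with 16-byte blocks and 4 lines, only the first access to each block misses (4 of 64 accesses, a 6.25% miss rate). Alternating between addresses 0 and 64 instead misses every time, because both blocks map to line 0 and keep evicting each other.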

Importance of Throughput and Miss Rate Metrics

Throughput measures the volume of data processed by a system per unit time, directly affecting performance and user experience. Miss rate indicates the fraction of memory accesses that miss in the cache, which determines how often the system pays the cost of slower memory and therefore affects access speed and latency. Optimizing both metrics is essential to balance speed and resource utilization in high-performance computing environments.

Factors Affecting Throughput

Throughput in computer systems is influenced by factors such as CPU clock speed, memory latency, and cache hit rate, all of which directly affect processing efficiency. A higher cache miss rate leads to more memory access delays, reducing overall throughput. Optimizing cache size, associativity, and replacement policy helps minimize the miss rate and thereby improve system throughput.
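
The factors listed above can be combined in the standard simplified CPU performance model, in which cache misses add stall cycles on top of a base cycles-per-instruction (CPI) figure. This sketch assumes every miss costs the same fixed penalty and that misses never overlap, which real out-of-order processors do not satisfy; the function and parameter names are hypothetical.

```python
def instructions_per_second(clock_hz: float, base_cpi: float, mem_refs_per_instr: float,
                            miss_rate: float, miss_penalty_cycles: float) -> float:
    """Estimate instruction throughput with the simplified stall model:
    CPI = base CPI + memory references per instruction * miss rate * miss penalty."""
    stall_cpi = mem_refs_per_instr * miss_rate * miss_penalty_cycles
    effective_cpi = base_cpi + stall_cpi
    if effective_cpi <= 0:
        raise ValueError("effective CPI must be positive")
    return clock_hz / effective_cpi


if __name__ == "__main__":
    # Illustrative values only: 3 GHz clock, base CPI 1.0,
    # 1.5 memory references per instruction, 100-cycle miss penalty.
    for miss_rate in (0.02, 0.01):
        ips = instructions_per_second(3e9, 1.0, 1.5, miss_rate, 100)
        print(f"miss rate {miss_rate:.0%}: {ips / 1e9:.2f} billion instructions/s")
```

With these assumed values, a 2% miss rate gives an effective CPI of 4.0 (0.75 billion instructions per second), while a 1% miss rate gives a CPI of 2.5 (1.2 billion instructions per second).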

Factors Influencing Miss Rate

Miss rate in cache memory is influenced by factors such as cache size, block size, and the replacement policy used. Larger caches typically reduce the miss rate by holding more data, while a well-chosen block size exploits spatial locality without the drawbacks of overly large blocks, such as fewer blocks fitting in the cache and a higher miss penalty. Replacement policies like LRU (Least Recently Used) affect the miss rate by determining which cache lines are evicted, which in turn affects throughput and overall system performance.
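
The effect of cache size, block size, and LRU replacement can be explored with a small cache model. This is a sketch under stated assumptions: the cache is fully associative (any block can go in any slot), capacity is counted in blocks, and the function name `lru_misses` is hypothetical. `collections.OrderedDict` keeps blocks in recency order, so the least recently used block is always at the front.

```python
from collections import OrderedDict
from typing import Iterable


def lru_misses(addresses: Iterable[int], num_blocks: int, block_size: int) -> int:
    """Count misses in a fully associative LRU cache of `num_blocks` blocks,
    each `block_size` bytes, for a sequence of byte addresses."""
    if num_blocks < 1 or block_size < 1:
        raise ValueError("num_blocks and block_size must be positive")
    cache: "OrderedDict[int, None]" = OrderedDict()  # block numbers, LRU first
    misses = 0
    for addr in addresses:
        block = addr // block_size  # larger blocks cover more neighboring bytes
        if block in cache:
            cache.move_to_end(block)       # hit: mark as most recently used
        else:
            misses += 1
            if len(cache) >= num_blocks:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block] = None
    return misses


if __name__ == "__main__":
    loop = [0, 16, 32, 48] * 3  # cycles over four 16-byte blocks
    print(lru_misses(loop, num_blocks=3, block_size=16))
    print(lru_misses(loop, num_blocks=4, block_size=16))
    print(lru_misses(range(256), num_blocks=8, block_size=16))
    print(lru_misses(range(256), num_blocks=8, block_size=64))
```

A loop over four blocks misses on all 12 accesses when the cache holds three blocks, because LRU always evicts the block needed next, but misses only 4 times when the cache holds four. A sequential scan of 256 bytes takes 16 misses with 16-byte blocks and 4 with 64-byte blocks, which is spatial locality at work.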

Relationship Between Throughput and Miss Rate

Throughput and miss rate exhibit an inverse relationship in cache memory systems: a higher miss rate typically reduces throughput because more accesses must wait for slower main memory. Lowering the miss rate therefore enhances throughput by minimizing these delays and allowing more instructions to be processed per unit time. System designers balance cache size and replacement policy to keep the miss rate low and throughput high.
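
The inverse relationship can be quantified with the standard average memory access time (AMAT) formula, where m is the miss rate. The hit time of 1 cycle and miss penalty of 100 cycles below are assumed values chosen for illustration, not figures from this article:

```latex
\begin{aligned}
\text{AMAT} &= t_{\text{hit}} + m \cdot t_{\text{penalty}} \\
m = 0.02:\quad \text{AMAT} &= 1 + 0.02 \times 100 = 3 \text{ cycles} \\
m = 0.05:\quad \text{AMAT} &= 1 + 0.05 \times 100 = 6 \text{ cycles}
\end{aligned}
```

Under the simplifying assumption that memory accesses are serviced one at a time, the rate of completed accesses is proportional to 1/AMAT, so raising the miss rate from 2% to 5% in this example halves it.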

Optimizing Systems for Better Throughput

Optimizing systems for better throughput involves minimizing the miss rate in cache or memory operations, which directly impacts processing speed and efficiency. Lower miss rates reduce the frequency of costly data retrievals from slower memory tiers, enabling faster data flow and higher transaction rates. Employing strategies such as cache size adjustment, prefetching algorithms, and workload-specific tuning enhances throughput by effectively balancing hit rates and resource utilization.
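
Workload-specific tuning can be as simple as replaying a recorded block-access trace against several candidate cache sizes and picking the smallest one that meets a target miss rate. The sketch below assumes a fully associative LRU cache and a synthetic trace; `lru_miss_rate` and `smallest_capacity_for` are hypothetical helpers, and a real study would also weigh the hit-time, area, and power cost of each size.

```python
from collections import OrderedDict
from typing import Iterable, Optional, Sequence


def lru_miss_rate(blocks: Sequence[int], capacity: int) -> float:
    """Miss rate of a fully associative LRU cache holding `capacity` blocks."""
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    if not blocks:
        return 0.0
    cache: "OrderedDict[int, None]" = OrderedDict()
    misses = 0
    for block in blocks:
        if block in cache:
            cache.move_to_end(block)       # hit: now most recently used
        else:
            misses += 1                    # miss: fetch from the next level
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block] = None
    return misses / len(blocks)


def smallest_capacity_for(blocks: Sequence[int], target_miss_rate: float,
                          candidates: Iterable[int]) -> Optional[int]:
    """Return the smallest candidate capacity that meets the target, or None."""
    for capacity in sorted(candidates):
        if lru_miss_rate(blocks, capacity) <= target_miss_rate:
            return capacity
    return None


if __name__ == "__main__":
    trace = list(range(6)) * 50  # hypothetical workload: 6 hot blocks reused 50 times
    for cap in (2, 4, 8, 16):
        print(cap, lru_miss_rate(trace, cap))
    print("chosen:", smallest_capacity_for(trace, 0.05, [2, 4, 8, 16]))
```

For this trace, capacities of 2 and 4 blocks miss on every access, while a capacity of 8 misses only on the six first-time accesses (a 2% miss rate), so with a 5% target the helper returns 8.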

Strategies to Minimize Miss Rate

Optimizing cache performance requires balancing throughput and miss rate. Common strategies to minimize the miss rate include increasing cache size, using a well-suited replacement policy such as Least Recently Used (LRU), and prefetching data before it is requested. Improving spatial and temporal locality through data clustering and cache-friendly access patterns further reduces cache misses. Together, these methods improve overall system throughput by decreasing costly memory access delays.
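
The locality point can be illustrated by traversing the same two-dimensional array in two different orders. The sketch assumes a row-major layout of 8-byte elements, 64-byte cache lines, and a fully associative LRU cache of 16 lines; these values and the helper names are illustrative assumptions.

```python
from collections import OrderedDict
from typing import Iterable, Iterator

ELEM_BYTES = 8   # assumed element size (for example, a 64-bit float)
LINE_BYTES = 64  # assumed cache-line size


def count_misses(addresses: Iterable[int], capacity_lines: int) -> int:
    """Count misses in a fully associative LRU cache of `capacity_lines` lines."""
    cache: "OrderedDict[int, None]" = OrderedDict()
    misses = 0
    for addr in addresses:
        line = addr // LINE_BYTES
        if line in cache:
            cache.move_to_end(line)        # hit: mark as most recently used
        else:
            misses += 1
            if len(cache) >= capacity_lines:
                cache.popitem(last=False)  # evict the least recently used line
            cache[line] = None
    return misses


def traversal(rows: int, cols: int, row_major: bool) -> Iterator[int]:
    """Yield byte addresses for visiting every element of a row-major array."""
    if row_major:
        order = ((r, c) for r in range(rows) for c in range(cols))
    else:
        order = ((r, c) for c in range(cols) for r in range(rows))
    for r, c in order:
        yield (r * cols + c) * ELEM_BYTES


if __name__ == "__main__":
    row = count_misses(traversal(64, 64, row_major=True), capacity_lines=16)
    col = count_misses(traversal(64, 64, row_major=False), capacity_lines=16)
    print(row, col)
```

Visiting elements in memory order misses once per line (512 misses for 4,096 accesses). Walking down columns misses on every access (4,096 misses), because each column touches 64 different lines, more than the cache can hold, so every line is evicted before the next column reuses it.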

Balancing Throughput vs Miss Rate for Optimal Performance

Balancing throughput and miss rate is crucial for optimizing cache performance and overall system efficiency. Pursuing higher throughput often means aggressive prefetching or larger caches, which can lower the miss rate but may increase hit latency, memory traffic, and resource consumption; overly aggressive prefetching can even evict useful data. Fine-tuning cache replacement policies and prefetch algorithms lets designers reach a balance where throughput is maximized while the miss rate stays acceptably low, improving both speed and resource utilization.
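
The prefetching trade-off can be sketched with simple next-N-line prefetching: on each demand miss to line L, the model also brings in lines L+1 through L+degree. It counts demand misses and "wasted" prefetches (prefetched lines never used before eviction or the end of the trace), a rough proxy for the extra memory traffic and cache space that aggressive prefetching consumes. The prefetcher design, the LRU cache, and the function name are assumptions for illustration; real hardware prefetchers are considerably more elaborate.

```python
from collections import OrderedDict
from typing import Iterable, Tuple


def simulate_prefetch(lines: Iterable[int], capacity: int, degree: int) -> Tuple[int, int]:
    """Return (demand_misses, wasted_prefetches) for an LRU cache that
    prefetches the next `degree` lines on every demand miss."""
    if capacity <= degree:
        # The demand line plus its prefetches must fit without evicting each other.
        raise ValueError("capacity must exceed the prefetch degree")
    cache: "OrderedDict[int, bool]" = OrderedDict()  # True = prefetched, not yet used
    misses = 0
    wasted = 0

    def insert(line: int, prefetched: bool) -> None:
        nonlocal wasted
        if line in cache:
            return  # already resident, nothing to fetch
        if len(cache) >= capacity:
            _, unused = cache.popitem(last=False)  # evict the least recently used line
            if unused:
                wasted += 1  # a prefetched line was evicted without ever being used
        cache[line] = prefetched

    for line in lines:
        if line in cache:
            cache[line] = False      # a demand hit consumes the prefetch
            cache.move_to_end(line)  # mark as most recently used
        else:
            misses += 1
            insert(line, prefetched=False)
            for k in range(1, degree + 1):
                insert(line + k, prefetched=True)

    # Prefetched lines still unused when the trace ends also count as wasted.
    wasted += sum(1 for unused in cache.values() if unused)
    return misses, wasted


if __name__ == "__main__":
    sequential = list(range(64))
    strided = [10 * i for i in range(64)]  # touches every tenth line
    for degree in (0, 1, 3):
        print("sequential", degree, simulate_prefetch(sequential, 8, degree))
    print("strided", 3, simulate_prefetch(strided, 8, 3))
```

On a sequential scan of 64 lines with an 8-line cache, a degree of 1 halves the demand misses (64 to 32) and a degree of 3 cuts them to 16, with no wasted prefetches. On the strided trace, a degree of 3 leaves all 64 demand misses in place and wastes all 192 prefetches.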

Throughput Infographic

libterm.com
