Model staleness occurs when machine learning models become outdated due to changes in data patterns or environments, leading to decreased accuracy and performance. Regular updates and retraining are essential to maintain model relevance and effectiveness over time. Explore the rest of the article to discover strategies for detecting and addressing model staleness to keep your AI systems performing optimally.

Table of Comparison

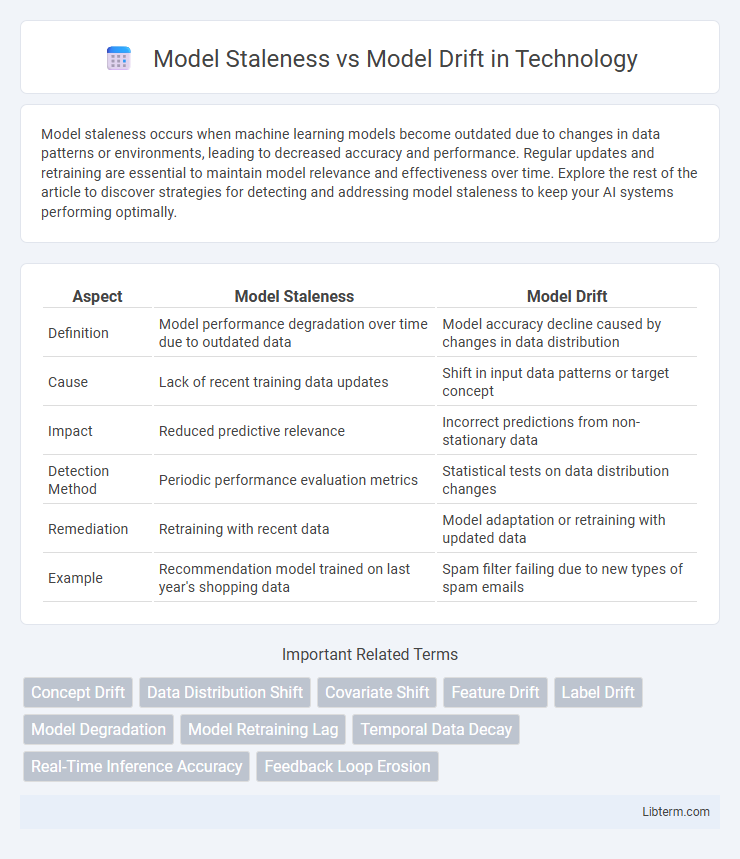

| Aspect | Model Staleness | Model Drift |

|---|---|---|

| Definition | Model performance degradation over time due to outdated data | Model accuracy decline caused by changes in data distribution |

| Cause | Lack of recent training data updates | Shift in input data patterns or target concept |

| Impact | Reduced predictive relevance | Incorrect predictions from non-stationary data |

| Detection Method | Periodic performance evaluation metrics | Statistical tests on data distribution changes |

| Remediation | Retraining with recent data | Model adaptation or retraining with updated data |

| Example | Recommendation model trained on last year's shopping data | Spam filter failing due to new types of spam emails |

Understanding Model Staleness

Model staleness occurs when a machine learning model's performance degrades over time due to outdated training data that no longer reflects current real-world patterns. It results from the static nature of the model failing to incorporate recent information, leading to inaccuracies and reduced predictive power. Monitoring model staleness involves tracking performance metrics and implementing retraining schedules to ensure the model remains relevant and effective.

Defining Model Drift

Model drift refers to the degradation in a machine learning model's performance over time due to changes in the underlying data distribution, often caused by evolving user behavior, external conditions, or data sources. Unlike model staleness, which simply describes an outdated model lacking recent updates, model drift specifically highlights discrepancies between the training data and new incoming data that lead to inaccurate predictions. Detecting and addressing model drift requires continuous monitoring and adaptive retraining techniques to maintain model accuracy and reliability in dynamic environments.

Key Differences Between Model Staleness and Model Drift

Model staleness occurs when a machine learning model becomes outdated due to changes in the underlying data distribution over time, leading to decreased performance without any intrinsic change in the model itself. Model drift specifically refers to the shift in data patterns that affect the model's predictive accuracy, often categorized as concept drift or data drift, requiring model retraining or adaptation. Key differences include staleness being a temporal degradation issue, while drift involves active changes in data characteristics impacting model effectiveness.

Causes of Model Staleness

Model staleness occurs when a machine learning model's performance degrades due to the accumulation of outdated or irrelevant data, often caused by shifting data distributions or changes in the underlying system environment. It is primarily driven by a lack of regular updates, infrequent retraining schedules, and failure to incorporate new data reflecting current trends or behaviors. Unlike model drift, which involves gradual changes in data patterns, staleness results from models becoming obsolete as they no longer capture the evolving data landscape.

Causes of Model Drift

Model drift occurs when the statistical properties of the target variable change over time, leading to decreased model accuracy. Common causes include changes in data distribution, evolving user behavior, or shifting environmental conditions that render previous training data less representative. Unlike model staleness, which stems from outdated models lacking recent updates, model drift specifically highlights dynamic variations in the underlying data patterns.

Impact on Model Performance

Model staleness occurs when a machine learning model becomes outdated due to changes in the underlying data distribution, leading to a gradual decline in predictive accuracy over time. Model drift specifically refers to shifts in data patterns, such as covariate drift or concept drift, causing the model's performance to deteriorate as it no longer reflects the current environment. Both phenomena result in increased error rates and reduced reliability, necessitating regular model retraining or adaptation techniques to maintain optimal performance.

Detection Methods for Model Staleness

Detection methods for model staleness primarily involve statistical monitoring techniques such as performance degradation analysis, where metrics like accuracy, precision, and recall are tracked over time to identify declines. Data distribution monitoring techniques, including Population Stability Index (PSI) and Kullback-Leibler divergence, detect shifts in input features indicating staleness. Employing automated alerts based on threshold breaches in these statistical measures ensures timely identification of stale models requiring retraining or updating.

Detecting and Measuring Model Drift

Detecting model drift involves continuous monitoring of model performance metrics such as accuracy, precision, recall, or specific loss values against real-world data, identifying deviations from baseline metrics established during model training. Techniques like data distribution comparison using statistical tests (e.g., Kolmogorov-Smirnov or Population Stability Index) and drift detection algorithms like ADWIN or DDM help quantify changes in input features or output predictions over time. Precise measurement of model drift enables timely retraining or adjustment of models to maintain predictive accuracy and robustness in dynamic environments.

Strategies to Mitigate Model Staleness and Drift

To mitigate model staleness and drift, implement continuous monitoring systems that track predictive performance and data distribution shifts in real time. Employ incremental learning techniques and periodic retraining with fresh, labeled data to adapt models to evolving environments. Leveraging automated feedback loops and active learning frameworks enhances model robustness by promptly incorporating new information and correcting deviations.

Best Practices for Maintaining Model Relevance

Regularly monitoring model performance through validation metrics and real-world feedback helps detect model drift, while scheduled retraining using up-to-date datasets prevents model staleness. Implementing automated alert systems for performance degradation and incorporating continuous learning pipelines ensures timely adaptation to evolving data patterns. Employing feature importance analysis and data drift detection tools enhances proactive maintenance, preserving model accuracy and relevance in dynamic environments.

Model Staleness Infographic

libterm.com

libterm.com