Containers streamline application deployment by packaging software and its dependencies into isolated units, so an application behaves consistently across environments. This makes complex systems easier to scale and manage and helps teams ship faster.

Table of Comparison

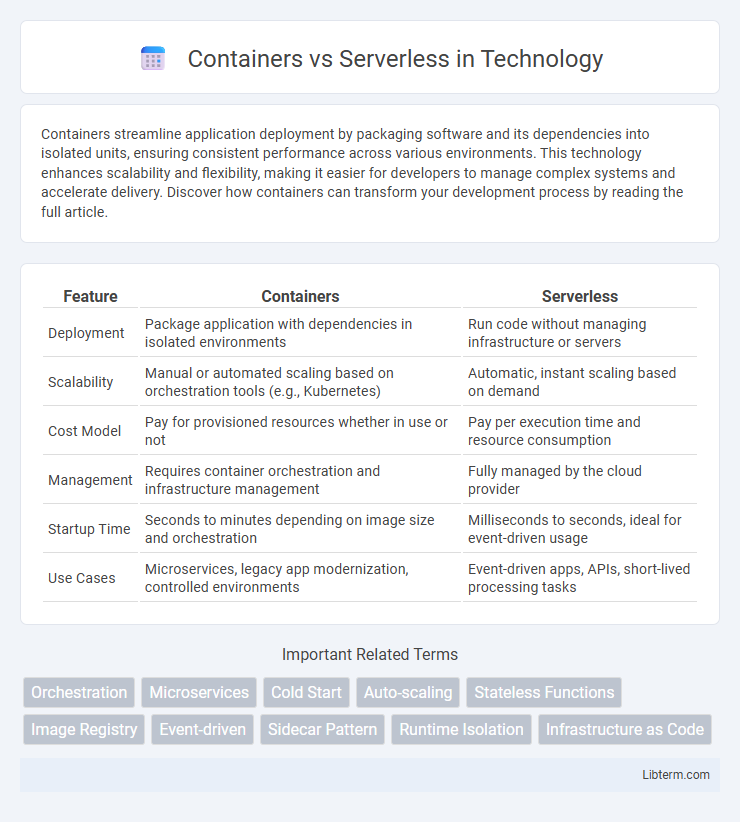

| Feature | Containers | Serverless |
|---|---|---|
| Deployment | Package application with dependencies in isolated environments | Run code without managing infrastructure or servers |
| Scalability | Manual or automated scaling based on orchestration tools (e.g., Kubernetes) | Automatic, instant scaling based on demand |
| Cost Model | Pay for provisioned resources whether in use or not | Pay per execution time and resource consumption |
| Management | Requires container orchestration and infrastructure management | Fully managed by the cloud provider |
| Startup Time | Seconds to minutes depending on image size and orchestration | Milliseconds to seconds, ideal for event-driven usage |
| Use Cases | Microservices, legacy app modernization, controlled environments | Event-driven apps, APIs, short-lived processing tasks |

Introduction to Containers and Serverless

Containers are lightweight, portable units that package an application and its dependencies, enabling consistent execution across various environments by isolating the software from the underlying infrastructure. Serverless computing abstracts server management by automatically provisioning resources and running code in response to events, charging only for actual usage without requiring infrastructure maintenance. Both technologies aim to enhance scalability and efficiency but differ in operational control and deployment models.

Core Concepts: How Containers Work

Containers encapsulate applications and their dependencies into isolated environments that run consistently across different computing platforms, using OS-level virtualization. Container engines such as Docker create, deploy, and manage these lightweight, portable units, which share the host system's kernel while keeping their processes isolated from one another. This approach enables rapid scaling, efficient resource utilization, and integration with orchestration tools like Kubernetes for automated deployment and management.

Core Concepts: Understanding Serverless

Serverless computing abstracts away server management, allowing developers to deploy functions that automatically scale based on demand without provisioning infrastructure. Its core concepts are event-driven execution, where functions run in response to triggers such as HTTP requests or database changes, and pay-as-you-go pricing, which bills only for the compute time actually consumed. Unlike containers, which encapsulate entire application environments, serverless focuses on granular, stateless code execution managed entirely by the cloud provider through services such as AWS Lambda, Azure Functions, and Google Cloud Functions.
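
To make the event-driven, stateless model concrete, here is a minimal sketch of a function in the `handler(event, context)` style used by AWS Lambda's Python runtime. The event shape (a JSON string under `"body"`) and the greeting logic are assumptions chosen for illustration, not a prescribed payload format.

```python
import json


def handler(event, context):
    """Stateless function: everything it needs arrives in the event."""
    # Assumed event shape: an HTTP-style trigger with a JSON string body.
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")

    # Return an HTTP-style response; no state is kept between invocations.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local simulation of a trigger; on a real platform the runtime
    # calls handler() once per incoming event.
    fake_event = {"body": json.dumps({"name": "Ada"})}
    print(handler(fake_event, None))
```

Because the function holds no state of its own, the platform is free to run as many copies in parallel as incoming events require.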

Deployment and Scalability Differences

Containers provide consistent deployment environments by packaging applications and their dependencies into isolated units, enabling rapid scaling through orchestrators like Kubernetes. Serverless platforms automatically manage infrastructure, allowing developers to deploy functions without worrying about server provisioning, and scale dynamically based on request volume with built-in event-driven triggers. Unlike containers that require explicit scaling configurations, serverless offers seamless scalability with minimal operational overhead, ideal for variable or unpredictable workloads.
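
As an illustration of what an "explicit scaling configuration" computes, the sketch below applies the replica formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The tolerance band and the min/max clamp are simplified here, and the function name and default values are assumptions made for this example.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified Horizontal Pod Autoscaler style replica calculation."""
    ratio = current_metric / target_metric

    # Skip scaling when the metric is close enough to the target,
    # which avoids constant small adjustments.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    desired = math.ceil(current_replicas * ratio)

    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

With containers, the operator picks the metric, the target, and the bounds; a serverless platform makes the equivalent decisions internally for every function.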

Cost Comparison: Containers vs Serverless

Containers generally offer predictable costs with resource allocation based on reserved CPU and memory, making them cost-efficient for steady workloads. Serverless pricing follows a pay-as-you-go model, charging only for actual execution time and resources consumed, which is more economical for intermittent or unpredictable workloads. Evaluating workload patterns and scaling needs is crucial to determining cost-effectiveness between containers and serverless architectures.
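
The trade-off can be estimated with simple arithmetic. The sketch below compares a fixed monthly cost for provisioned containers with a per-invocation serverless bill and computes the break-even request volume. All prices and workload figures are hypothetical placeholders, not any provider's actual rates.

```python
def container_monthly_cost(instances, hourly_rate, hours=730):
    """Provisioned capacity is billed whether or not it is used."""
    return instances * hourly_rate * hours


def cost_per_invocation(avg_duration_s, memory_gb,
                        price_per_gb_second, price_per_million_requests):
    """Serverless bill for one call: compute time plus a request fee."""
    compute = avg_duration_s * memory_gb * price_per_gb_second
    request_fee = price_per_million_requests / 1_000_000
    return compute + request_fee


def serverless_monthly_cost(invocations, **pricing):
    return invocations * cost_per_invocation(**pricing)


def break_even_invocations(container_cost, **pricing):
    """Monthly invocations at which both models cost the same."""
    return container_cost / cost_per_invocation(**pricing)


if __name__ == "__main__":
    # Hypothetical prices and workload, for illustration only.
    pricing = dict(avg_duration_s=0.2, memory_gb=0.5,
                   price_per_gb_second=0.00002,
                   price_per_million_requests=0.20)
    fixed = container_monthly_cost(instances=2, hourly_rate=0.05)
    print(f"containers: {fixed:.2f} per month")
    print(f"break-even: {break_even_invocations(fixed, **pricing):,.0f} invocations")
```

Below the break-even volume the pay-per-use model is cheaper; above it, steady provisioned capacity wins, which matches the steady-versus-intermittent distinction described above.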

Performance and Latency Considerations

Containers provide consistent performance with predictable resource allocation, enabling low-latency responses ideal for high-demand, stateful applications. Serverless architectures may introduce cold start latency, impacting performance during initial function invocations, but automatically scale to handle intermittent traffic efficiently. Choosing between containers and serverless involves evaluating workload patterns, response time sensitivity, and the need for granular control over infrastructure resources.
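
A rough way to reason about cold starts is to model a single function instance that is reclaimed after a fixed idle period. The sketch below counts how many requests in a trace would hit a cold instance and what that does to average latency. The keep-warm window, the latency figures, and the single-instance assumption are all hypothetical simplifications; real platforms do not publish a fixed idle timeout.

```python
def count_cold_starts(arrival_times, keep_warm_seconds):
    """Count requests that arrive after the instance has gone idle."""
    cold = 0
    last = None
    for t in sorted(arrival_times):
        # The first request, or one arriving after the idle window, is cold.
        if last is None or t - last > keep_warm_seconds:
            cold += 1
        last = t
    return cold


def mean_latency_ms(arrival_times, keep_warm_seconds, warm_ms, cold_penalty_ms):
    """Average latency when cold requests pay an extra start-up penalty."""
    n = len(arrival_times)
    if n == 0:
        return 0.0
    cold = count_cold_starts(arrival_times, keep_warm_seconds)
    return warm_ms + cold_penalty_ms * cold / n


if __name__ == "__main__":
    # Two bursts of traffic separated by a long idle gap (times in seconds).
    trace = [0, 10, 20, 1000, 1010]
    print(count_cold_starts(trace, keep_warm_seconds=300))
    print(mean_latency_ms(trace, 300, warm_ms=50, cold_penalty_ms=400))
```

Sparse, bursty traffic produces a high share of cold requests, which is why latency-sensitive workloads with long idle gaps tend to favor an always-running container.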

Application Use Cases for Containers

Containers excel in microservices architectures, enabling consistent application deployment across diverse environments with rapid scaling and resource efficiency. They are ideal for applications requiring complex orchestration, persistent storage, and stateful workloads, such as e-commerce platforms, data processing pipelines, and legacy application modernization. Containers also support CI/CD workflows, facilitating continuous integration and delivery for development teams working on multi-component applications.

Application Use Cases for Serverless

Serverless architecture excels in applications with event-driven workloads, including real-time data processing, IoT backends, and chatbots, where elastic scaling is crucial and occasional cold-start latency is acceptable. It suits microservices and APIs that experience unpredictable traffic, enabling automatic scaling without infrastructure management. This model is ideal for applications requiring rapid development and deployment, such as mobile backends and automated workflows, where developers focus on code rather than server provisioning.

Security Implications: Containers vs Serverless

Containers isolate processes through kernel namespaces and control groups, but because they share the host kernel, they require diligent patching and configuration to prevent vulnerabilities in the underlying host or in container images from being exploited. Serverless architectures abstract server management, which shifts host patching to the provider and reduces the attack surface the team must maintain, yet they introduce risks related to third-party dependencies and event-driven execution contexts. Both models demand robust identity and access management, encryption, and continuous monitoring to mitigate security threats effectively.

Choosing the Right Approach for Your Project

Containers offer consistent environments, scalability, and control, making them ideal for complex applications requiring customization and stateful services. Serverless computing provides automatic scaling, reduced operational overhead, and cost efficiency, best suited for event-driven, stateless functions or microservices with unpredictable workloads. Evaluating project requirements, such as control needs, scalability demands, and cost constraints, helps determine whether container orchestration platforms like Kubernetes or serverless platforms like AWS Lambda are the optimal choice.
