The event loop is a concurrency mechanism that manages asynchronous operations by repeatedly taking ready tasks from an event queue and executing them. It enables non-blocking behavior, so an application can handle many events without freezing while it waits on I/O. The rest of this article compares the event loop with thread pooling and explains when each one improves performance and responsiveness.

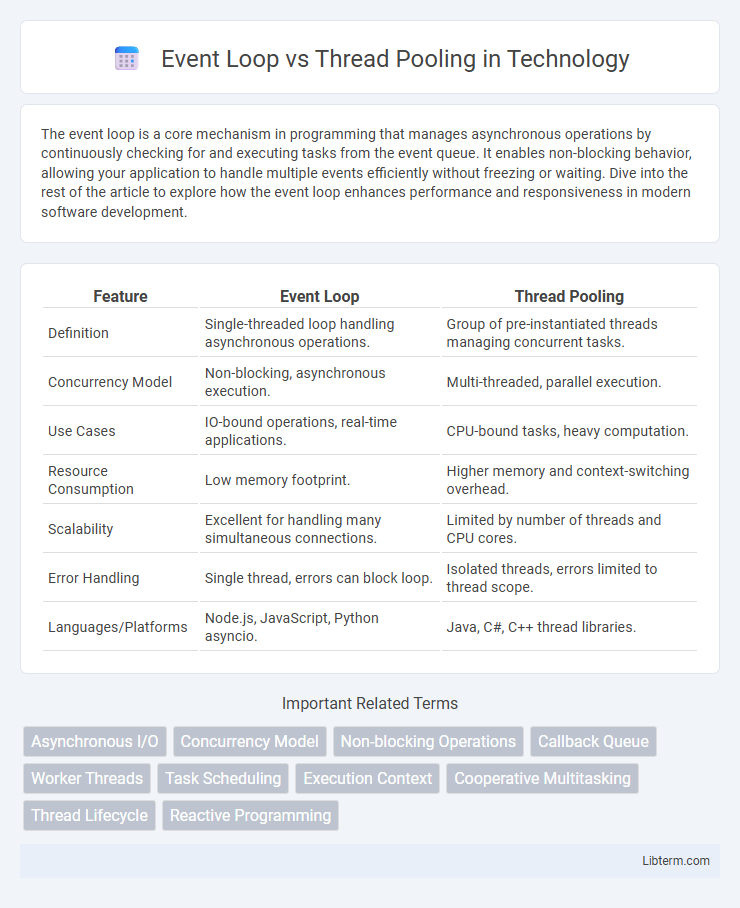

Table of Comparison

| Feature | Event Loop | Thread Pooling |
|---|---|---|
| Definition | Single-threaded loop that dispatches asynchronous operations. | Group of pre-created threads that execute concurrent tasks. |
| Concurrency Model | Non-blocking, cooperative execution on one thread. | Multi-threaded, preemptive, parallel execution. |
| Use Cases | I/O-bound operations, real-time applications. | CPU-bound tasks, heavy computation, blocking calls. |
| Resource Consumption | Low memory footprint. | Higher memory and context-switching overhead. |
| Scalability | Excellent for handling many simultaneous connections. | Limited by the number of threads and CPU cores. |
| Error Handling | Everything shares one thread: a long-running callback stalls the loop, and an unhandled error can crash the process. | Failures are usually confined to the task that raised them, but threads share memory, so corrupted shared state can still affect other tasks. |
| Languages/Platforms | Node.js, browser JavaScript, Python asyncio. | Java, C#, C++ thread libraries. |

Introduction to Event Loop and Thread Pooling

The event loop is a programming construct that handles asynchronous operations by continuously checking and executing tasks from a queue, enabling non-blocking I/O operations in environments like Node.js. Thread pooling involves managing a collection of pre-created threads to execute concurrent tasks, improving performance by reusing threads rather than creating new ones for each task. Both mechanisms optimize resource management and concurrency, with the event loop focusing on single-threaded asynchronous execution and thread pooling enabling multi-threaded parallelism.

Core Concepts: Event Loop Explained

The Event Loop is a single-threaded mechanism that handles asynchronous operations by repeatedly checking whether the call stack is empty and, if it is, taking the next callback from the task queue, which keeps execution non-blocking in environments like Node.js. Because a queued callback runs only when the call stack is empty, each callback runs to completion before the next one starts, and I/O is managed without dedicating a thread to each operation. This core concept supports concurrency through event-driven programming rather than through traditional multi-threading models like thread pooling.
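To make the "run only when the stack is empty" rule concrete, here is a deliberately simplified sketch of a single-threaded loop. The class `ToyEventLoop` and its methods are hypothetical names for illustration; it only drains a FIFO queue of callbacks and does not poll for I/O the way Node.js or asyncio do.

```python
from collections import deque
from typing import Callable, Deque


class ToyEventLoop:
    """Hypothetical, simplified single-threaded loop: a FIFO queue of callbacks."""

    def __init__(self) -> None:
        self._queue: Deque[Callable[[], None]] = deque()

    def call_soon(self, callback: Callable[[], None]) -> None:
        # Enqueue a callback; it runs only after the currently running one returns
        self._queue.append(callback)

    def run(self) -> None:
        # Take the oldest callback and run it to completion, until the queue is empty
        while self._queue:
            callback = self._queue.popleft()
            callback()


log: list[str] = []
loop = ToyEventLoop()


def first() -> None:
    log.append("first: start")
    # Scheduling work does not run it immediately; it waits its turn in the queue
    loop.call_soon(lambda: log.append("scheduled by first"))
    log.append("first: end")


loop.call_soon(first)
loop.call_soon(lambda: log.append("second"))
loop.run()
print(log)
```

The callback scheduled inside `first` runs last: `first` finishes completely, then `second` (queued earlier) runs, and only then the newly queued callback.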

Understanding Thread Pooling

Thread pooling optimizes application performance by maintaining a fixed (or bounded) number of worker threads that execute concurrent tasks, reducing the overhead of creating and destroying threads. Unlike the Event Loop, which handles asynchronous operations on a single thread using non-blocking I/O, thread pooling allows true parallelism on multi-core processors by distributing tasks across multiple threads. This approach is critical for CPU-intensive operations, enabling efficient resource management and improved responsiveness in multi-threaded environments.
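Thread reuse is easy to observe with Python's standard `concurrent.futures.ThreadPoolExecutor`. In this sketch, assuming a pool capped at three workers, ten tasks each report the name of the thread that ran them; the task function is a hypothetical placeholder.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def task(_n: int) -> str:
    # Hypothetical task: just report which pool thread executed it
    return threading.current_thread().name


# At most three worker threads are created and then reused for all ten tasks
with ThreadPoolExecutor(max_workers=3, thread_name_prefix="worker") as pool:
    names = list(pool.map(task, range(10)))

print(len(names), "tasks ran on", len(set(names)), "distinct threads")
```

The exact split of tasks across threads varies between runs, but the number of distinct thread names never exceeds `max_workers`.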

How Event Loop Works in Practice

The event loop operates by continuously monitoring a queue of ready tasks and events and executing them one at a time on a single thread; slow operations are never performed inline, so the loop itself does not block while waiting on I/O. It processes callbacks, promises, and other asynchronous operations by handing slow work to the operating system (for example, network sockets) or to a background thread pool (Node.js's libuv uses one for file system operations), tracking timers itself, and running the corresponding callback once each operation completes. This mechanism allows a single-threaded event loop to handle numerous concurrent operations efficiently, with less overhead than thread pooling, which manages multiple threads for parallel execution.
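A short asyncio sketch of this flow, assuming Python 3.9+ for `asyncio.to_thread`: a plain callback is queued with `call_soon`, a blocking function (a hypothetical stand-in for a file read, simulated with `time.sleep`) is handed to the loop's default thread pool, and a timer-driven coroutine keeps running on the loop in the meantime.

```python
import asyncio
import time


def blocking_read() -> str:
    # Hypothetical stand-in for a blocking call such as a file read
    time.sleep(0.2)
    return "data"


async def ticker(events: list[str]) -> None:
    # Keeps running on the loop while blocking_read occupies a worker thread
    for i in range(3):
        events.append(f"tick {i}")
        await asyncio.sleep(0.05)  # timer managed by the event loop


async def main() -> list[str]:
    events: list[str] = []
    loop = asyncio.get_running_loop()
    loop.call_soon(events.append, "callback via call_soon")
    # to_thread runs blocking_read in the loop's default thread pool executor
    result, _ = await asyncio.gather(asyncio.to_thread(blocking_read), ticker(events))
    events.append(f"read finished: {result}")
    return events


events = asyncio.run(main())
print(events)
```

The ticks are recorded while the simulated read is still in progress, because the loop only waits on the worker thread's result instead of running the blocking call itself.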

How Thread Pooling Manages Tasks

Thread pooling manages tasks by maintaining a pool of pre-instantiated, reusable threads that execute tasks concurrently, reducing the overhead of thread creation and destruction. Tasks are submitted to the pool's queue, and idle worker threads take them from the queue and run them, which lets the pool handle multiple CPU-bound or blocking operations efficiently. Capping the number of active threads limits context switching and improves overall system throughput compared with spawning a new thread for each task.
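The queue-plus-workers pattern described above can be sketched in a few dozen lines of Python. `SimpleThreadPool` and its methods are hypothetical names for illustration; production code would normally use `concurrent.futures.ThreadPoolExecutor` instead. Shutdown here uses one `None` sentinel per worker, a common but not the only approach.

```python
import queue
import threading
from typing import Any, Callable, List, Optional, Tuple

Job = Optional[Tuple[Callable[..., Any], Tuple[Any, ...]]]


class SimpleThreadPool:
    """Hypothetical minimal pool: a fixed set of workers pulls jobs from one shared queue."""

    def __init__(self, num_workers: int) -> None:
        self._jobs: "queue.Queue[Job]" = queue.Queue()
        self._results: List[Any] = []
        self._lock = threading.Lock()
        # Threads are created once, up front, and reused for every job
        self._workers = [threading.Thread(target=self._worker) for _ in range(num_workers)]
        for worker in self._workers:
            worker.start()

    def _worker(self) -> None:
        while True:
            job = self._jobs.get()  # blocks until a job (or the shutdown sentinel) arrives
            if job is None:
                return
            func, args = job
            try:
                outcome = func(*args)
            except Exception as exc:  # record the failure instead of killing the worker
                outcome = exc
            with self._lock:
                self._results.append(outcome)

    def submit(self, func: Callable[..., Any], *args: Any) -> None:
        self._jobs.put((func, args))

    def shutdown(self) -> List[Any]:
        # FIFO order means every queued job is taken before any sentinel
        for _ in self._workers:
            self._jobs.put(None)
        for worker in self._workers:
            worker.join()
        return self._results


pool = SimpleThreadPool(num_workers=4)
for i in range(10):
    pool.submit(pow, i, 2)
results = pool.shutdown()
print(sorted(results))
```

Results arrive in whatever order the workers finish, which is why the usage example sorts them before printing.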

Key Differences: Event Loop vs Thread Pooling

Event Loop operates on a single-threaded, non-blocking architecture, efficiently handling multiple concurrent I/O operations by queuing callbacks in an event queue. Thread Pooling uses multiple threads to execute tasks concurrently, enabling parallel processing but introducing context-switching overhead and resource contention. The key difference lies in Event Loop's asynchronous, cooperative multitasking versus Thread Pooling's preemptive, parallel task execution.
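Cooperative multitasking means a task gives up control only at explicit suspension points. In this asyncio sketch (the `worker` and `run` names are hypothetical), two tasks share one thread: without an `await`, the first task runs to completion before the second starts; with `await asyncio.sleep(0)` after each step, they interleave. OS threads in a pool, by contrast, can be preempted at almost any instruction, so no such explicit yield is needed.

```python
import asyncio


async def worker(name: str, log: list[str], yield_control: bool) -> None:
    for i in range(3):
        log.append(f"{name}{i}")
        if yield_control:
            # An await is the only point where the loop may switch to another task
            await asyncio.sleep(0)


async def run(yield_control: bool) -> list[str]:
    log: list[str] = []
    await asyncio.gather(worker("a", log, yield_control), worker("b", log, yield_control))
    return log


no_yield = asyncio.run(run(yield_control=False))  # "a" finishes before "b" starts
with_yield = asyncio.run(run(yield_control=True))  # tasks alternate at each await
print(no_yield)
print(with_yield)
```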

Performance Comparison in Real-World Scenarios

Event Loop excels in handling high concurrency with minimal overhead by processing asynchronous I/O operations in a single thread, making it ideal for lightweight, non-blocking tasks such as web servers and real-time applications. Thread Pooling offers better performance for CPU-intensive tasks by distributing workloads across multiple threads, leveraging multi-core processors but incurring higher context-switching costs. In real-world scenarios, Event Loop typically outperforms Thread Pooling for I/O-bound workloads, while Thread Pooling is more efficient for compute-bound operations that require parallel execution.
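A rough way to see the I/O-bound difference is to time simulated I/O waits. This sketch assumes a 50 ms latency per call (`IO_DELAY`, a made-up value) and a pool of four threads; it measures only waiting, not computation. Note that in standard CPython builds the GIL keeps threads from running pure-Python bytecode in parallel, so CPU-bound parallelism in Python usually relies on `ProcessPoolExecutor` rather than threads.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor

IO_DELAY = 0.05  # assumed latency of one simulated I/O call, in seconds
N_REQUESTS = 20


async def fake_io_async() -> None:
    await asyncio.sleep(IO_DELAY)  # non-blocking wait; the loop serves other tasks


def fake_io_blocking() -> None:
    time.sleep(IO_DELAY)  # blocks whichever pool thread runs it


async def with_event_loop() -> float:
    start = time.perf_counter()
    await asyncio.gather(*(fake_io_async() for _ in range(N_REQUESTS)))
    return time.perf_counter() - start


def with_thread_pool(workers: int) -> float:
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(lambda _: fake_io_blocking(), range(N_REQUESTS)))
    return time.perf_counter() - start


loop_time = asyncio.run(with_event_loop())
pool_time = with_thread_pool(workers=4)
print(f"event loop, 1 thread:   {loop_time:.2f}s")
print(f"thread pool, 4 threads: {pool_time:.2f}s")
```

All 20 waits overlap on the single-threaded loop, while the pool can have only four in flight at once, so it needs about five rounds. A larger pool narrows the gap at the cost of more threads.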

Use Cases for Event Loop

Event Loop is ideal for I/O-bound applications like web servers, chat applications, and real-time data streaming, where non-blocking operations and high concurrency are required to handle many connections efficiently. Unlike Thread Pooling, which is better suited for CPU-intensive tasks, the Event Loop excels in scenarios demanding lightweight, asynchronous processing without the overhead of multiple threads. Its single-threaded, event-driven architecture significantly reduces context switching and memory consumption, making it well suited for scalable network applications.
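As a small illustration of the network use case, this asyncio sketch runs a hypothetical line-based "uppercase echo" server on localhost and connects 50 clients at once, all served by one thread. Port 0 asks the OS for any free port; the handler and client names are assumptions, not part of any real protocol.

```python
import asyncio


async def handle_client(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    # One coroutine per connection; all of them share the loop's single thread
    data = await reader.readline()
    writer.write(data.upper())
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def client(port: int, message: str) -> str:
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(message.encode() + b"\n")
    await writer.drain()
    reply = await reader.readline()
    writer.close()
    await writer.wait_closed()
    return reply.decode().strip()


async def main() -> list[str]:
    server = await asyncio.start_server(handle_client, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        replies = await asyncio.gather(*(client(port, f"hello {i}") for i in range(50)))
    return list(replies)


replies = asyncio.run(main())
print(replies[:3])
```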

Use Cases for Thread Pooling

Thread pooling is ideal for managing multiple blocking or CPU-intensive tasks concurrently, such as database queries, file I/O operations, or complex computations, where threads can run in parallel without blocking the main event loop. Use cases include web servers handling numerous simultaneous requests, background processing jobs, and multithreaded applications requiring efficient resource utilization. Thread pooling improves scalability and responsiveness by distributing workloads across a fixed number of threads, preventing thread exhaustion and minimizing latency in resource-heavy environments.
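A background-job sketch with `ThreadPoolExecutor` and `as_completed`. `background_job` is a hypothetical stand-in for a blocking task such as a database query, and job 3 is made to fail on purpose to show that the exception surfaces through that job's future without stopping the others.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def background_job(job_id: int) -> str:
    # Hypothetical blocking job (e.g. a database query), simulated with sleep
    time.sleep(0.01)
    if job_id == 3:
        raise ValueError(f"job {job_id} failed")
    return f"job {job_id} done"


results: dict[int, str] = {}
with ThreadPoolExecutor(max_workers=4) as pool:
    futures = {pool.submit(background_job, i): i for i in range(8)}
    for future in as_completed(futures):
        job_id = futures[future]
        try:
            results[job_id] = future.result()
        except ValueError as exc:
            # The failure is confined to this job's future; other jobs still finish
            results[job_id] = f"error: {exc}"

for job_id in sorted(results):
    print(results[job_id])
```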

Choosing the Right Approach for Your Application

Event Loop excels in handling high-concurrency I/O-bound tasks with minimal overhead, making it ideal for real-time applications like chat servers and live data feeds. Thread Pooling offers better performance for CPU-intensive operations by distributing workloads across multiple threads, preventing blocking in single-threaded environments. Choosing between Event Loop and Thread Pooling hinges on task nature, concurrency needs, and resource management goals to optimize application responsiveness and scalability.
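Many applications combine both approaches: I/O is awaited on the event loop, and blocking or CPU-heavy steps are offloaded to a pool with `loop.run_in_executor`. In this sketch, `fetch` is a hypothetical I/O call simulated with `asyncio.sleep`, and hashing stands in for CPU work. For heavy pure-Python computation in CPython, passing a `ProcessPoolExecutor` instead of a thread pool is usually needed to get real parallelism.

```python
import asyncio
import hashlib
from concurrent.futures import ThreadPoolExecutor


def checksum(payload: bytes) -> str:
    # Blocking/CPU-heavy step: run it off the event loop
    return hashlib.sha256(payload).hexdigest()


async def fetch(item_id: int) -> bytes:
    # Hypothetical I/O call; awaiting it keeps the loop free for other work
    await asyncio.sleep(0.01)
    return f"payload-{item_id}".encode()


async def process(item_id: int, pool: ThreadPoolExecutor) -> str:
    payload = await fetch(item_id)  # I/O-bound: stays on the event loop
    loop = asyncio.get_running_loop()
    # Offloaded: runs in a pool thread while the loop keeps serving other tasks
    return await loop.run_in_executor(pool, checksum, payload)


async def main() -> list[str]:
    with ThreadPoolExecutor(max_workers=4) as pool:
        return await asyncio.gather(*(process(i, pool) for i in range(5)))


digests = asyncio.run(main())
print(digests[0])
```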

Event Loop Infographic

libterm.com