Hashing transforms data into fixed-size values, enabling efficient data retrieval, verification, and storage. It plays a critical role in cybersecurity, where it underpins data integrity checks and secure password storage. This article explains how hashing works and how it compares with tokenization, a related data-protection technique.

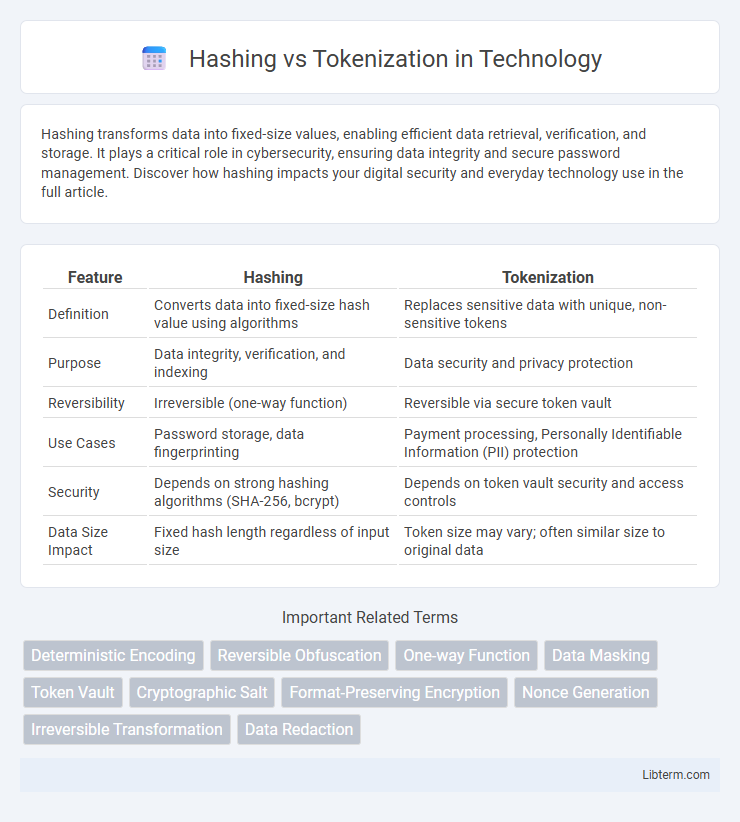

Table of Comparison

| Feature | Hashing | Tokenization |
|---|---|---|
| Definition | Converts data into a fixed-size hash value using an algorithm | Replaces sensitive data with unique, non-sensitive tokens |
| Purpose | Data integrity, verification, and indexing | Data security and privacy protection |
| Reversibility | Irreversible (one-way function) | Reversible via a secure token vault |
| Use Cases | Password storage, data fingerprinting | Payment processing, personally identifiable information (PII) protection |
| Security | Depends on an algorithm suited to the task (e.g., SHA-256 for integrity checks, bcrypt for passwords) | Depends on token vault security and access controls |
| Data Size Impact | Fixed hash length regardless of input size | Token size may vary; often similar in size to the original data |

Introduction to Hashing and Tokenization

Hashing transforms data into fixed-length strings using one-way cryptographic algorithms, which supports integrity checks and lets systems verify sensitive values such as passwords without storing them. Tokenization replaces sensitive information with unique, non-sensitive tokens that map back to the original data, which is kept securely in a separate database (the token vault). Both techniques strengthen data security: hashing is suited to verification, and tokenization to protecting payment and personal information.

Defining Hashing: Purpose and Process

Hashing is a process that transforms input data of any length into a fixed-size value, called a hash or digest, used to index data, verify integrity, and protect sensitive information. Cryptographic hash functions, the kind used for security, create a digital fingerprint of the data: distinct inputs can in principle share a hash, but finding such a collision is computationally infeasible, so comparing hashes is a quick way to compare data without revealing the original content. Hash functions are deterministic (the same input always yields the same output) and, in the cryptographic case, one-way, which makes them essential for data authentication, password storage, and integrity verification.
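
The properties above can be observed with SHA-256 from Python's standard `hashlib` module. This is a minimal sketch; the helper name `sha256_hex` and the sample inputs are illustrative choices, not part of any particular system.

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a 64-character hex string."""
    return hashlib.sha256(data).hexdigest()

short = sha256_hex(b"hello")
long_digest = sha256_hex(b"hello" * 10_000)

# Fixed size: both digests are 256 bits (64 hex characters) regardless of input length.
print(len(short), len(long_digest))  # 64 64

# Deterministic: hashing the same input again yields the same digest.
print(sha256_hex(b"hello") == short)  # True

# Avalanche effect: a one-character change produces an unrelated-looking digest.
print(sha256_hex(b"hellp"))
```

Nothing in the digest reveals the input; verifying data means hashing it again and comparing the results.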

Understanding Tokenization: How It Works

Tokenization replaces sensitive data with unique, non-sensitive tokens that have no mathematical relationship to the original information, protecting confidentiality during storage and transmission. Each token acts as a reference: the mapping between the token and the original value is kept in a secure token vault, so authorized systems can retrieve the original data only when necessary. This helps organizations protect personally identifiable information (PII) and payment card data covered by the Payment Card Industry (PCI) standards while keeping the data usable for analytics and processing.
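
The token-and-vault flow can be sketched in a few lines of Python. This is a toy, assuming an in-memory dictionary as the vault and a boolean `authorized` flag in place of real access control; the `TokenVault` class and its methods are hypothetical names, not a product API.

```python
import secrets

class TokenVault:
    """Toy in-memory token vault (hypothetical; for illustration only).

    A production vault would be a hardened, access-controlled datastore,
    not a Python dict.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The token is random, so it has no mathematical relationship to `value`.
        token = "tok_" + secrets.token_hex(16)
        while token in self._token_to_value:  # regenerate on the (very unlikely) collision
            token = "tok_" + secrets.token_hex(16)
        self._token_to_value[token] = value
        return token

    def detokenize(self, token: str, authorized: bool) -> str:
        # Hypothetical access check: only authorized callers may recover the value.
        if not authorized:
            raise PermissionError("caller is not allowed to detokenize")
        return self._token_to_value[token]

vault = TokenVault()
token = vault.tokenize("jane.doe@example.com")
print(token)  # random each run: "tok_" followed by 32 hex characters
print(vault.detokenize(token, authorized=True))  # jane.doe@example.com
```

Business systems store and pass around only `token`; stealing it reveals nothing unless the attacker can also query the vault.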

Key Differences Between Hashing and Tokenization

Hashing transforms original data into a fixed-length string using a one-way cryptographic algorithm, so it cannot be reversed and is used primarily for data integrity and verification. Tokenization replaces sensitive data with non-sensitive placeholders called tokens, which can be mapped back to the original data through a secure token vault; this makes it well suited to payment processing and compliance. The key differences lie in reversibility, purpose, and storage requirements: hashing is irreversible, needs no lookup storage, and is used for verification, while tokenization is reversible, depends on a vault, and is designed for secure data storage and transmission.
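
A side-by-side sketch makes the reversibility and storage differences concrete. It assumes a plain dictionary as a stand-in vault and uses the well-known test card number 4111 1111 1111 1111; the function names are illustrative only.

```python
import hashlib
import secrets

vault: dict[str, str] = {}  # hypothetical token vault: token -> original value

def hash_value(value: str) -> str:
    # Stateless: nothing is stored, and the digest cannot be turned back into `value`.
    return hashlib.sha256(value.encode("utf-8")).hexdigest()

def tokenize(value: str) -> str:
    # Stateful: the token is only meaningful together with its vault entry.
    token = secrets.token_urlsafe(16)
    vault[token] = value
    return token

def detokenize(token: str) -> str:
    return vault[token]

secret = "4111 1111 1111 1111"
digest = hash_value(secret)
token = tokenize(secret)

# Hashing: verify by re-hashing a candidate and comparing digests.
print(hash_value("4111 1111 1111 1111") == digest)  # True
# Tokenization: recover the original by looking the token up in the vault.
print(detokenize(token))  # 4111 1111 1111 1111
# Only tokenization required storage.
print(len(vault))  # 1
```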

Security Implications: Hashing vs Tokenization

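Hashing transforms data into a fixed-length string using algorithms like SHA-256, and because the transformation is one-way, the original data cannot be computed back from the hash. For password storage, however, a fast hash such as plain SHA-256 is not enough: attackers can hash common password guesses at very high speed and compare the results, so passwords should be hashed with a unique salt and a deliberately slow algorithm such as bcrypt, scrypt, Argon2, or PBKDF2. Tokenization replaces sensitive data with unique, non-sensitive tokens and keeps the real data in a separate, secured vault, which reduces exposure in a breach because stolen tokens are useless without vault access. Hashing therefore provides strong integrity checks and verification, while tokenization excels where data must be substituted reversibly without revealing the original during transactions.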
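
Salted, deliberately slow password hashing can be sketched with PBKDF2-HMAC-SHA256 from Python's standard library. PBKDF2 is used here only because bcrypt and Argon2 need third-party packages; the iteration count of 600,000 is an assumption based on current OWASP guidance for PBKDF2-HMAC-SHA256 and should be checked and tuned for your hardware. The function names are hypothetical.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # assumption: follow current guidance and tune for your hardware

def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) for storage; the password itself is never stored."""
    salt = os.urandom(16)  # a unique random salt per password defeats precomputed tables
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key

def verify_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    # Re-derive the key with the stored salt and compare in constant time.
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored_key)

salt, key = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, key))  # True
print(verify_password("wrong guess", salt, key))  # False
```

The high iteration count makes each guess expensive for an attacker while costing a legitimate login only a fraction of a second.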

Use Cases for Hashing in Data Protection

Hashing is widely used in data protection to store passwords securely: the system keeps only the hashes, so the original values cannot be directly recovered from the stored data. It also supports integrity verification, since comparing a file's current hash with a known-good value reveals unauthorized changes or corruption. Additionally, hashing underpins digital signatures, which are computed over a hash of the message, and hash-based message authentication codes (HMACs), which strengthen the security of data in transit.
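
The integrity and message-authentication uses can be sketched with `hashlib` and `hmac` from Python's standard library. The temporary file, its contents, and the message text are made-up examples, and the helper names are hypothetical.

```python
import hashlib
import hmac
import os
import tempfile

def file_sha256(path: str, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large files need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def sign_message(key: bytes, message: bytes) -> str:
    # HMAC-SHA256: only holders of `key` can produce a matching tag.
    return hmac.new(key, message, hashlib.sha256).hexdigest()

# Integrity check: record a known-good digest, then detect a later change.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"quarterly report v1")
    path = tmp.name
known_good = file_sha256(path)
with open(path, "ab") as f:
    f.write(b" (tampered)")
after = file_sha256(path)
os.remove(path)
print(after == known_good)  # False: the file changed

# Message authentication: the receiver recomputes the tag and compares it.
key = os.urandom(32)
tag = sign_message(key, b"transfer 100 to account 42")
print(hmac.compare_digest(tag, sign_message(key, b"transfer 100 to account 42")))  # True
```

Unlike a bare hash, an HMAC tag cannot be recomputed by someone who alters the message but lacks the key.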

Tokenization Applications in Compliance and Privacy

Tokenization supports compliance with regulations and standards such as PCI DSS by replacing sensitive data with non-sensitive placeholders, which reduces both the number of systems in compliance scope and the damage a breach can cause. It is widely applied in payment processing, healthcare, and cloud storage to safeguard personally identifiable information (PII) while preserving the data's usability for authorized operations. Unlike hashing, tokenization allows the original data to be retrieved, enabling secure data management while maintaining privacy and regulatory adherence.
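
Payment systems often want tokens that look like card numbers so existing software keeps working. The sketch below, assuming an in-memory dictionary vault and a hypothetical `tokenize_pan` helper, keeps the length and last four digits and randomizes the rest. It is illustrative only: it does not implement format-preserving encryption, and some tokenization products additionally ensure tokens fail the Luhn check so they cannot be mistaken for real card numbers, which this sketch does not do.

```python
import secrets

vault: dict[str, str] = {}  # hypothetical vault: token -> original card number

def tokenize_pan(pan: str) -> str:
    """Replace all but the last four digits with random digits (illustrative only).

    Keeping the length and last four digits lets downstream systems such as
    receipts or support screens work without ever seeing the real number.
    """
    digits = pan.replace(" ", "")
    while True:
        random_part = "".join(secrets.choice("0123456789") for _ in range(len(digits) - 4))
        token = random_part + digits[-4:]
        if token != digits and token not in vault:  # never echo the PAN or reuse a token
            vault[token] = digits
            return token

token = tokenize_pan("4111 1111 1111 1111")
print(token)  # 16 digits, random except for the trailing "1111"
print(vault[token])  # 4111111111111111
```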

Performance and Scalability Comparison

General-purpose hash functions are fast and produce fixed-length output, making them efficient for quick lookups and large-scale indexing in high-performance environments; password hashing algorithms are the deliberate exception, since they are designed to be slow. Tokenization is generally slower because every operation requires a write to or a read from the token vault, so its scalability depends on the infrastructure supporting token generation and retrieval. The cost of hashing a value depends only on that value's length, not on how many values have been hashed before, so hashing performance stays consistent as the dataset grows, whereas a token vault grows as more values are tokenized.

Choosing the Right Method for Your Needs

Selecting between hashing and tokenization depends on your data security requirements and compliance standards. Hashing provides irreversible data transformation, ideal for scenarios like password storage where original data recovery is unnecessary, while tokenization replaces sensitive data with reversible tokens, suitable for secure payment processing and data retrieval needs. Evaluate factors such as data sensitivity, operational complexity, and regulatory obligations to determine the method that best balances security, usability, and compliance.

Conclusion: Hashing or Tokenization for Your Data Security

Hashing provides a one-way transformation ideal for verifying data integrity without exposing original content, making it suitable for passwords and data fingerprinting. Tokenization replaces sensitive data with non-sensitive tokens, offering reversible protection crucial for securing payment information and personal identifiers. The choice depends on whether the original data must ever be recovered: hashing provides irreversible protection, while tokenization lets authorized systems retrieve the original value when a business process requires it.

Hashing Infographic

libterm.com