Classification is a core supervised machine learning task: assigning each input to one of a set of predefined classes based on its features. Well-chosen classification methods power applications ranging from email spam detection to medical diagnosis. This article compares classification with regression, its counterpart for continuous targets, and covers common algorithms, use cases, and evaluation metrics for each.

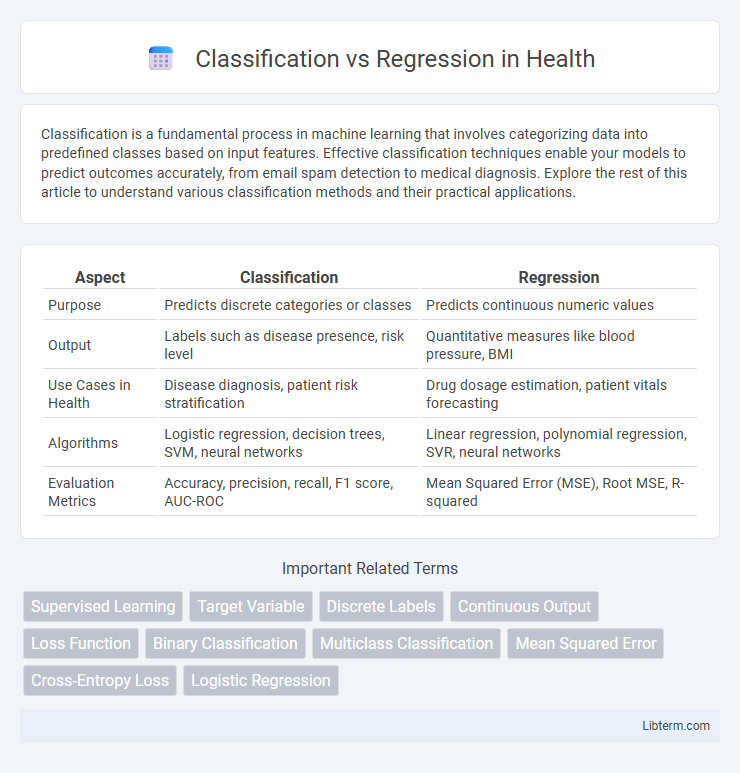

Table of Comparison

| Aspect | Classification | Regression |
|---|---|---|
| Purpose | Predicts discrete categories or classes | Predicts continuous numeric values |
| Output | Labels such as disease presence, risk level | Quantitative measures like blood pressure, BMI |
| Use Cases in Health | Disease diagnosis, patient risk stratification | Drug dosage estimation, patient vitals forecasting |
| Algorithms | Logistic regression, decision trees, SVM, neural networks | Linear regression, polynomial regression, SVR, neural networks |
| Evaluation Metrics | Accuracy, precision, recall, F1 score, AUC-ROC | Mean Squared Error (MSE), Root MSE, R-squared |

Introduction to Classification and Regression

Classification assigns data points to predefined classes or labels, such as deciding whether an email is spam. Regression predicts continuous numerical values by modeling the relationship between input features and a target variable, such as forecasting housing prices from a home's attributes. Both are supervised learning tasks: a model learns patterns from labeled examples and then predicts labels or values for new data.

Key Differences Between Classification and Regression

Classification predicts discrete labels or categories, such as whether an email is spam, whereas regression predicts continuous numerical values, such as house prices. This difference in output type drives the others. Classification models are evaluated with metrics such as accuracy, precision, recall, and F1-score, while regression models are evaluated with mean squared error (MSE), mean absolute error (MAE), and R-squared. Typical algorithms differ as well: logistic regression (a classification method, despite its name) and decision trees are common for classification, while linear regression and support vector regression are common for regression. Some algorithm families, such as decision trees and neural networks, have both classification and regression variants.
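To make the difference in output type concrete, here is a minimal sketch using k-nearest neighbors, one algorithm family with both a classification and a regression variant. The toy data, the hypothetical helpers `knn_classify` and `knn_regress`, and the choice k=3 are illustrative assumptions rather than any library's API. Both variants find the same neighbors. Classification then takes a majority vote over their labels, while regression averages their numeric targets.

```python
from collections import Counter

# Hypothetical toy data: house sizes (sq ft) paired with two kinds of target.
sizes = [800, 950, 1100, 1600, 1800, 2100]
prices = [150.0, 175.0, 210.0, 320.0, 350.0, 410.0]  # continuous target ($1000s)
labels = ["small", "small", "small", "large", "large", "large"]  # discrete target


def nearest(x, k):
    """Indices of the k training points closest to x (ties broken by index)."""
    return sorted(range(len(sizes)), key=lambda i: (abs(sizes[i] - x), i))[:k]


def knn_classify(x, k=3):
    """Classification: majority vote over the neighbors' class labels."""
    votes = Counter(labels[i] for i in nearest(x, k))
    return votes.most_common(1)[0][0]


def knn_regress(x, k=3):
    """Regression: mean of the neighbors' numeric targets."""
    return sum(prices[i] for i in nearest(x, k)) / k


print(knn_classify(1000))  # a label drawn from a fixed set of classes
print(knn_regress(1000))   # a real number on a continuous scale
```

The same neighbors drive both predictions. Only the aggregation step, and therefore the type of output, changes.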

Understanding Classification Algorithms

Classification algorithms learn from labeled examples to assign new inputs to predefined classes. Each technique learns a decision boundary that separates the classes. Decision trees split the feature space greedily using impurity measures such as Gini impurity or entropy. Logistic regression, support vector machines, and neural networks fit their boundaries by minimizing a loss function, such as cross-entropy for logistic regression and neural networks or hinge loss for SVMs, which serves as a tractable proxy for the number of misclassifications. These algorithms underpin applications such as spam detection, medical diagnosis, and image recognition, where each input must be assigned to one of several distinct categories.
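Below is a minimal sketch of one classifier from this family: logistic regression trained by batch gradient descent on the mean log (cross-entropy) loss, in plain Python. The toy points, learning rate, and epoch count are made-up assumptions, and `train_logistic`/`predict` are hypothetical names. In practice you would normally use a library implementation such as scikit-learn's `LogisticRegression`.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.1, epochs=1000):
    """Fit weights w and bias b by batch gradient descent on the mean log loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log loss with respect to the logit
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x, threshold=0.5):
    """Class 1 if the predicted probability reaches the threshold, else class 0."""
    return int(sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= threshold)


# Hypothetical, linearly separable toy data: two features, binary labels.
X = [[-2.0, -1.0], [-1.0, -2.0], [-2.0, -2.0], [1.0, 2.0], [2.0, 1.0], [2.0, 2.0]]
y = [0, 0, 0, 1, 1, 1]

w, b = train_logistic(X, y)
print(w, b)  # decision boundary: w[0]*x1 + w[1]*x2 + b = 0
print([predict(w, b, x) for x in X])
```

The learned boundary is the line where the logit equals zero. Moving the `threshold` away from 0.5 trades precision for recall without retraining.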

Exploring Regression Algorithms

Regression algorithms predict continuous numerical outcomes by modeling the relationship between a dependent (target) variable and one or more independent variables (features). Popular techniques differ mainly in the loss they minimize and the regularization they apply. Ordinary linear regression minimizes squared error. Ridge regression adds an L2 penalty on the coefficients, and lasso regression adds an L1 penalty that can shrink some coefficients to exactly zero. Support vector regression uses an epsilon-insensitive loss that ignores errors smaller than a chosen margin. These algorithms are widely used in finance, healthcare, and environmental science to forecast trends, estimate quantities, and identify which variables most influence an outcome.
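With a single feature, both ordinary least squares and ridge regression have a closed-form solution, which makes the effect of the L2 penalty easy to see. The slope is Σ(x − x̄)(y − ȳ) / (Σ(x − x̄)² + λ), and the intercept is ȳ − slope · x̄. This is a minimal sketch that assumes one input feature and an unpenalized intercept. `fit_ridge_1d` and `lam` are hypothetical names. Lasso and support vector regression generally require iterative solvers and are not shown.

```python
def fit_ridge_1d(xs, ys, lam=0.0):
    """Fit y ~ intercept + slope * x by minimizing
    sum((y - intercept - slope * x) ** 2) + lam * slope ** 2.

    lam = 0 gives ordinary least squares; larger lam shrinks the slope toward 0.
    The intercept is not penalized, so it is recovered from the means.
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / (sxx + lam)
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Hypothetical data lying exactly on y = 2x.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 6, 8, 10]

print(fit_ridge_1d(xs, ys))            # OLS recovers intercept 0, slope 2
print(fit_ridge_1d(xs, ys, lam=10.0))  # penalty shrinks the slope from 2.0 to 1.0
```

Because the penalty acts only on the slope, the fitted line always passes through the point of means (x̄, ȳ). Increasing λ tilts the line toward horizontal around that point.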

Common Use Cases for Classification

Classification models are widely used in email spam detection, sentiment analysis, and image recognition, where each input receives a discrete label. In healthcare, classification supports disease diagnosis by assigning patient data to classes such as 'healthy' or 'diseased.' Fraud detection systems use classification to distinguish legitimate transactions from fraudulent ones.

Typical Applications of Regression

Typical applications of regression include predicting continuous outcomes such as housing prices, stock market trends, and customer lifetime value. Regression models estimate how a dependent variable changes with one or more independent variables and use that relationship to forecast numerical values. They are used widely in finance, real estate, and healthcare, for example in demand forecasting and risk assessment.

Evaluation Metrics for Classification

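Common evaluation metrics for classification are accuracy, precision, recall, F1-score, and the area under the ROC curve (AUC-ROC). Accuracy is the fraction of all predictions that are correct. Precision is the fraction of predicted positives that are truly positive, and recall is the fraction of actual positives that the model finds. The F1-score is the harmonic mean of precision and recall. It is especially useful on imbalanced datasets, where accuracy can mislead: a model that always predicts the majority class can score high accuracy while never detecting the minority class. AUC-ROC measures how well the model ranks positives above negatives across all decision thresholds. A value of 1.0 means perfect separation, and 0.5 is no better than chance.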
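These definitions translate directly into code. Below is a minimal plain-Python sketch for binary labels (1 = positive, 0 = negative). The function names are hypothetical, and this sketch reports 0.0 whenever a ratio's denominator is zero. AUC-ROC is computed here from its pairwise-ranking interpretation: the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counting half. This is simple but O(n²).

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    # This sketch returns 0.0 when a ratio's denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def auc_roc(y_true, scores):
    """Fraction of (positive, negative) pairs in which the positive gets the
    higher score, counting ties as half; equals the area under the ROC curve."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Imbalanced example: 2 positives out of 10, and a model that always predicts 0.
y_true = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
always_negative = [0] * 10
print(classification_metrics(y_true, always_negative))
# accuracy is 0.8, yet precision, recall, and F1 are all 0.0

print(auc_roc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

The imbalanced example shows the accuracy trap described above. AUC-ROC, by contrast, uses the model's scores rather than hard labels, so it does not depend on the choice of threshold.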

Performance Metrics for Regression

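Common performance metrics for regression are Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and the coefficient of determination, R-squared (R²). MAE is the average absolute difference between predicted and actual values. MSE averages the squared differences, so it penalizes large errors more heavily. RMSE is the square root of MSE, which restores the original units of the target. R-squared is the proportion of the target's variance explained by the model. A value of 1 indicates a perfect fit, and on held-out data R-squared can be negative when the model does worse than always predicting the mean.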
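Below is a minimal sketch of these four regression metrics in plain Python. `regression_metrics` is a hypothetical helper, and the sample values are illustrative.

```python
import math


def regression_metrics(y_true, y_pred):
    """MAE, MSE, RMSE and R-squared for paired lists of actual and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    ss_res = sum(e * e for e in errors)  # residual sum of squares
    mse = ss_res / n
    rmse = math.sqrt(mse)  # same units as the target
    y_mean = sum(y_true) / n
    ss_tot = sum((t - y_mean) ** 2 for t in y_true)  # total sum of squares
    # R-squared is undefined when every actual value is identical.
    r2 = 1.0 - ss_res / ss_tot if ss_tot else float("nan")
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


actual = [3.0, -0.5, 2.0, 7.0]
predicted = [2.5, 0.0, 2.0, 8.0]
print(regression_metrics(actual, predicted))
```

In this sample, the one prediction that is off by 1.0 accounts for half of the total absolute error (1.0 of 2.0) but two-thirds of the total squared error (1.0 of 1.5). This is the sense in which MSE and RMSE emphasize large errors.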

Choosing Between Classification and Regression

Choosing between classification and regression depends primarily on the target variable. Use classification when the output is categorical, such as labels or classes, and regression when it is a continuous number. For discrete outcomes such as spam detection or disease diagnosis, classification models like logistic regression or decision trees are natural choices. For quantities such as housing prices or temperature, regression techniques like linear regression or support vector regression fit the task.

Summary and Best Practices

Classification predicts discrete labels by assigning data to predefined classes, while regression estimates continuous numerical values from input features. For classification, best practices include handling class imbalance (for example, with resampling, class weights, or stratified data splits) and choosing metrics suited to the class distribution, such as F1-score rather than accuracy alone when classes are imbalanced. They also include tuning hyperparameters with cross-validation. For regression, scale features when using regularized or distance-based models, evaluate performance with RMSE or MAE, and validate models through residual analysis and careful feature selection.
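One common way to combine cross-validation with class imbalance is stratified k-fold splitting, in which each fold keeps roughly the class proportions of the full dataset. This is a minimal sketch that assumes the labels fit in memory as a list. `stratified_k_fold` is a hypothetical helper, and scikit-learn's `StratifiedKFold` covers the same need in production code.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, k=5, seed=0):
    """Yield (train_indices, test_indices) for k folds, spreading each class
    evenly across folds so every fold keeps roughly the overall class mix."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)

    folds = [[] for _ in range(k)]
    slot = 0
    for indices in by_class.values():
        rng.shuffle(indices)  # randomize order within each class
        for idx in indices:
            folds[slot % k].append(idx)  # deal indices out round-robin
            slot += 1

    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, test


# Imbalanced toy labels: 8 negatives, 2 positives.
labels = [0] * 8 + [1] * 2
for train, test in stratified_k_fold(labels, k=2):
    print(test, [labels[i] for i in test])
```

Dealing each class out round-robin guarantees that per-class counts differ by at most one between folds. With plain random splitting, a fold could easily end up with no positives at all when positives are rare.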

libterm.com