Eigenvalues describe how a linear transformation or matrix behaves, exposing properties such as stability and resonance. They matter in physics, engineering, and computer science because they let a complex system be broken into simpler, independent components. The rest of this article compares eigenvalues with eigenvectors and surveys their applications.

Table of Comparison

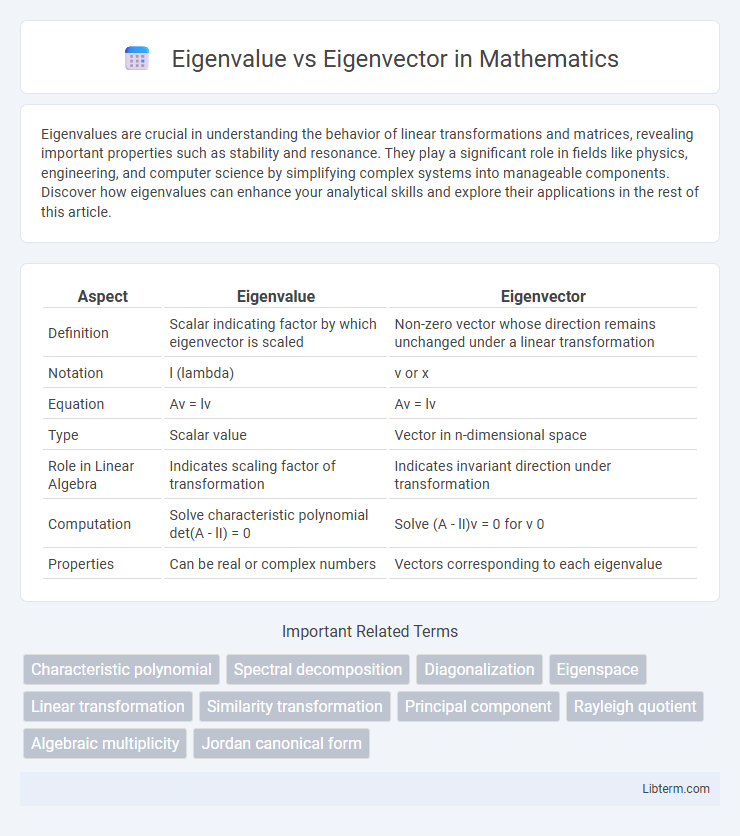

| Aspect | Eigenvalue | Eigenvector |
|---|---|---|
| Definition | Scalar indicating the factor by which an eigenvector is scaled | Non-zero vector whose direction remains unchanged under a linear transformation |
| Notation | λ (lambda) | v or x |
| Equation | Av = λv | Av = λv |
| Type | Scalar value | Vector in n-dimensional space |
| Role in Linear Algebra | Indicates scaling factor of transformation | Indicates invariant direction under transformation |
| Computation | Solve characteristic equation det(A - λI) = 0 | Solve (A - λI)v = 0 for v ≠ 0 |
| Properties | Can be real or complex numbers | Vectors corresponding to each eigenvalue |

Introduction to Eigenvalue and Eigenvector

Eigenvalues are scalars that give the factor by which a corresponding eigenvector is stretched or compressed during a linear transformation. Eigenvectors are non-zero vectors that stay on the same line through the origin under that transformation: they are only scaled by the eigenvalue, and reversed if the eigenvalue is negative. In linear algebra, eigenvalues and eigenvectors are fundamental for analyzing matrix behavior, stability in systems, and dimensionality reduction techniques such as Principal Component Analysis (PCA).

The Mathematical Definition of Eigenvalues

Eigenvalues are the scalars λ in the equation Av = λv, where A is a square matrix and v is a nonzero vector known as the eigenvector. Rearranging gives (A - λI)v = 0, which has a nonzero solution only when A - λI is singular, so the eigenvalues are the roots of the characteristic equation det(A - λI) = 0, where I is the identity matrix of the same dimension as A. Each root is a scalar factor by which the corresponding eigenvectors are stretched or compressed under the linear transformation defined by A.
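As a small illustration of this definition, the sketch below checks two hand-computed eigenpairs of an example 2x2 matrix. The matrix, the helper name `is_eigenpair`, and the tolerance are assumptions chosen for the example, not part of the original text.

```python
import numpy as np

# Example matrix (an assumption for illustration).
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# Eigenpairs worked out by hand from det(A - λI) = (2 - λ)^2 - 1 = 0,
# which gives λ = 3 and λ = 1.
pairs = [(3.0, np.array([1.0, 1.0])),
         (1.0, np.array([1.0, -1.0]))]

def is_eigenpair(A, lam, v, tol=1e-12):
    """Return True if v is nonzero and A @ v equals lam * v within tol."""
    return bool(np.linalg.norm(v) > 0 and np.allclose(A @ v, lam * v, atol=tol))

# Check the defining equation Av = λv for each pair.
checks = [is_eigenpair(A, lam, v) for lam, v in pairs]

# The determinant det(A - λI) should vanish at each eigenvalue.
dets = [np.linalg.det(A - lam * np.eye(2)) for lam, _ in pairs]
```

Here both entries of `checks` come out true and both determinants are zero up to rounding, while a pair such as λ = 2 with v = (1, 0) fails the same check.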

Understanding Eigenvectors: A Simple Explanation

Eigenvectors are non-zero vectors that only scale when a linear transformation is applied to them, meaning their direction remains unchanged. They are crucial in simplifying matrix operations and revealing intrinsic properties of a linear system. Eigenvalues correspond to the scale factors by which these eigenvectors are stretched or compressed during the transformation.

Key Differences Between Eigenvalues and Eigenvectors

Eigenvalues are scalars giving the factor by which an eigenvector is scaled during a linear transformation, whereas eigenvectors are nonzero vectors whose direction remains unchanged under that transformation. Eigenvalues are computed by solving the characteristic equation det(A - λI) = 0 for λ, while eigenvectors are found by substituting each eigenvalue back into (A - λI)v = 0 and solving for the vector v. Eigenvalues provide insight into matrix properties such as stability and scaling factors, while eigenvectors reveal invariant directions critical in applications like principal component analysis and quantum mechanics.

How to Calculate Eigenvalues and Eigenvectors

Calculate eigenvalues by solving the characteristic equation det(A - λI) = 0, where A is the square matrix, λ represents an eigenvalue, and I is the identity matrix. Substitute each eigenvalue λ back into the equation (A - λI)v = 0 and solve the resulting system of linear equations for a nonzero eigenvector v. For large matrices, expanding the determinant is impractical, so numerical methods such as the QR algorithm or power iteration are used instead.
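The two-step procedure and power iteration can be sketched as follows for a small example. The matrix, the helper names `null_vector` and `power_iteration`, the iteration count, and the seeded random start are all assumptions made for illustration; production code would normally call a library routine instead.

```python
import numpy as np

# Example matrix (an assumption for illustration); its eigenvalues are 2 and 5.
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])

# Step 1: for a 2x2 matrix, det(A - λI) = λ^2 - trace(A) λ + det(A).
coeffs = [1.0, -np.trace(A), np.linalg.det(A)]
# The roots are real for this matrix because the discriminant is positive.
eigenvalues = np.sort(np.roots(coeffs).real)

# Step 2: for each λ, find a nonzero v with (A - λI) v = 0.
def null_vector(M):
    """Unit vector spanning the null space of M (assumed one-dimensional), via SVD."""
    _, _, vt = np.linalg.svd(M)
    return vt[-1]  # right singular vector for the smallest singular value

eigenvectors = [null_vector(A - lam * np.eye(2)) for lam in eigenvalues]

def power_iteration(A, num_iters=200, seed=0):
    """Approximate the dominant eigenpair.

    Assumes one eigenvalue is strictly largest in magnitude and that the
    random start vector has a component along its eigenvector.
    """
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(A.shape[0])
    v /= np.linalg.norm(v)
    for _ in range(num_iters):
        w = A @ v
        v = w / np.linalg.norm(w)
    # v has unit length, so v @ A @ v is the Rayleigh quotient.
    return float(v @ A @ v), v

dominant_value, dominant_vector = power_iteration(A)
```

Power iteration only recovers the eigenvalue of largest magnitude; the QR algorithm mentioned above is what general-purpose library routines typically build on to obtain the full spectrum.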

Geometric Interpretation of Eigenvalues vs Eigenvectors

Eigenvectors represent specific directions in a vector space where linear transformations act by merely stretching or compressing, without changing direction. Eigenvalues quantify the factor by which these eigenvectors are scaled during the transformation. Geometrically, eigenvectors define invariant lines through the origin, while eigenvalues indicate the magnitude and sense of dilation along those lines.
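To make the geometric picture concrete, the sketch below compares the direction of a vector before and after a transformation using the cosine of the angle between them. The matrices and the helper name `cosine` are assumptions chosen for illustration.

```python
import numpy as np

def cosine(u, w):
    """Cosine of the angle between two nonzero vectors."""
    return float(u @ w / (np.linalg.norm(u) * np.linalg.norm(w)))

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
v = np.array([1.0, 1.0])   # eigenvector of A with eigenvalue 3
u = np.array([1.0, 0.0])   # not an eigenvector of A

cos_eig = cosine(v, A @ v)     # 1: A v stays on the same line, same sense
cos_other = cosine(u, A @ u)   # below 1: A u points in a new direction

# A matrix that swaps the two coordinates has eigenvalue -1 for (1, -1).
S = np.array([[0.0, 1.0],
              [1.0, 0.0]])
w = np.array([1.0, -1.0])
cos_flip = cosine(w, S @ w)    # -1: same line, sense reversed
```

A cosine of 1 or -1 means the image lies on the same line through the origin, which is exactly the invariant-line property; the sign matches the sign of the eigenvalue.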

Real-World Applications in Data Science and Engineering

Eigenvalues and eigenvectors play a crucial role in data science through dimensionality reduction techniques such as Principal Component Analysis (PCA), enabling efficient data compression and noise reduction. In engineering, they are fundamental in stability analysis and vibration mode characterization of mechanical structures, ensuring safe and optimized designs. Their ability to decompose complex systems into simpler components enhances predictive modeling, fault detection, and system optimization across various scientific and industrial domains.
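As a rough sketch of how PCA uses an eigendecomposition, the example below builds synthetic 2-D data stretched along one direction, then projects it onto the leading eigenvector of its covariance matrix. The data, the seed, and all variable names are assumptions for illustration; real pipelines usually rely on a dedicated PCA implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 500 points spread along the direction (1, 1) plus small noise.
t = rng.standard_normal(500)
noise = 0.1 * rng.standard_normal((500, 2))
X = np.outer(t, [1.0, 1.0]) + noise

# Center the data and form the sample covariance matrix.
Xc = X - X.mean(axis=0)
cov = Xc.T @ Xc / (len(Xc) - 1)

# The covariance matrix is symmetric, so eigh applies; it returns
# eigenvalues in ascending order, so reorder to put the largest first.
eigenvalues, eigenvectors = np.linalg.eigh(cov)
order = np.argsort(eigenvalues)[::-1]
eigenvalues, eigenvectors = eigenvalues[order], eigenvectors[:, order]

# Eigenvalues give the variance captured by each principal direction.
explained_ratio = eigenvalues / eigenvalues.sum()

# Eigenvectors give the directions; keep only the first to go from 2-D to 1-D.
first_component = eigenvectors[:, 0]
projected = Xc @ eigenvectors[:, :1]
```

In this setup nearly all of the variance falls on the first component, which lines up with (1, 1) up to sign, so dropping the second coordinate discards mostly noise.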

Common Misconceptions and Mistakes

Eigenvalues and eigenvectors often cause confusion. Common misconceptions include the belief that an eigenvector is unique (any nonzero scalar multiple of an eigenvector is also an eigenvector) or that every vector is an eigenvector of a given matrix. Mistakes also arise when eigenvalues are treated as vectors or when the zero vector is counted as an eigenvector. Keeping in mind that eigenvalues are scalars λ paired with non-zero vectors v satisfying Av = λv helps prevent these errors.
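These points can be checked numerically. The sketch below uses an example matrix and variable names that are assumptions for illustration.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
v = np.array([1.0, 1.0])  # eigenvector of A with eigenvalue 3

# Eigenvectors are not unique: every nonzero multiple of v also satisfies Av = 3v.
scaled_ok = all(np.allclose(A @ (c * v), 3.0 * (c * v)) for c in (-2.0, 0.5, 10.0))

# The zero vector satisfies A0 = λ0 for every λ, so it identifies no eigenvalue
# and is excluded by definition.
zero = np.zeros(2)
zero_satisfies_any = all(np.allclose(A @ zero, lam * zero) for lam in (-1.0, 0.0, 7.0))

# Most vectors are not eigenvectors: A u is not parallel to u.
u = np.array([1.0, 0.0])
Au = A @ u
# In 2-D, two vectors are parallel exactly when this cross-product term is zero.
is_parallel = bool(np.isclose(u[0] * Au[1] - u[1] * Au[0], 0.0))
```

The zero-vector check shows why the non-zero requirement is part of the definition rather than a technicality: without it, every scalar would count as an eigenvalue.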

Eigenvalue and Eigenvector Computation in Python

In Python, eigenvalues and eigenvectors are usually computed with NumPy or SciPy, which provide `numpy.linalg.eig` and `scipy.linalg.eig` for general square matrices. These computations appear in applications including Principal Component Analysis (PCA), stability analysis in differential equations, and quantum mechanics simulations. Choosing a routine that matches the matrix can improve performance considerably in large-scale data processing and machine learning: `numpy.linalg.eigh` exploits symmetry, and for large sparse matrices `scipy.sparse.linalg.eigs` and `scipy.sparse.linalg.eigsh` compute only a few eigenpairs instead of the full decomposition.
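A minimal usage sketch with NumPy follows; the example matrices are assumptions chosen so the results are easy to verify by hand.

```python
import numpy as np

# General square matrix: eig returns the eigenvalues and a matrix whose
# columns are the eigenvectors, so w[i] pairs with V[:, i].
A = np.array([[4.0, 1.0],
              [2.0, 3.0]])
w, V = np.linalg.eig(A)

# Check A V = V diag(w), i.e. Av = λv for every column at once.
residual = np.linalg.norm(A @ V - V @ np.diag(w))

# Symmetric matrix: eigh returns real eigenvalues in ascending order.
S = np.array([[2.0, 1.0],
              [1.0, 2.0]])
ws, Vs = np.linalg.eigh(S)

# Rotation by 90 degrees: no real direction is preserved, and the
# eigenvalues come out as the complex pair +i and -i.
R = np.array([[0.0, -1.0],
              [1.0, 0.0]])
wr = np.linalg.eigvals(R)
```

The order in which `numpy.linalg.eig` returns eigenvalues is not guaranteed to be sorted, so code that needs the largest or smallest should sort explicitly. The returned eigenvectors are normalized to unit length.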

Conclusion: Choosing the Right Concept for Your Problem

Choosing between eigenvalues and eigenvectors depends on the problem's focus: eigenvalues quantify matrix transformations by indicating scaling factors, while eigenvectors identify invariant directions under these transformations. Problems involving system stability, vibration modes, or principal component analysis leverage eigenvalues to understand magnitude effects, whereas eigenvectors are essential for identifying directionality and feature extraction. Effective application requires aligning the concept with the goal--use eigenvalues to assess system dynamics and eigenvectors to reveal underlying structural properties.

libterm.com