Uncertainty is a natural part of life that influences decision-making and risk assessment, from personal choices to business strategies. Understanding how to measure and manage it can improve resilience and lead to better-informed decisions.

Table of Comparison

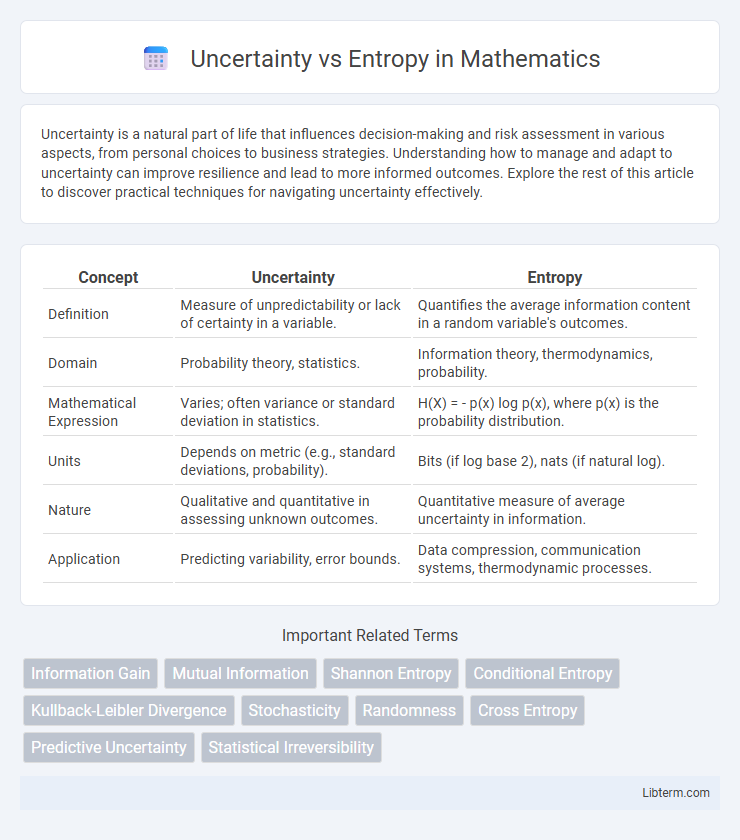

| Concept | Uncertainty | Entropy |
|---|---|---|
| Definition | Measure of unpredictability or lack of certainty in a variable. | Quantifies the average information content in a random variable's outcomes. |
| Domain | Probability theory, statistics. | Information theory, thermodynamics, probability. |
| Mathematical Expression | Varies; often variance or standard deviation in statistics. | \( H(X) = -\sum_{i} p(x_i) \log p(x_i) \), where \( p(x_i) \) is the probability of outcome \( x_i \). |
| Units | Depends on metric (e.g., standard deviations, probability). | Bits (if log base 2), nats (if natural log). |
| Nature | Qualitative and quantitative in assessing unknown outcomes. | Quantitative measure of average uncertainty in information. |
| Application | Predicting variability, error bounds. | Data compression, communication systems, thermodynamic processes. |

Defining Uncertainty in Information Theory

Uncertainty in information theory quantifies the unpredictability of a random variable's possible outcomes, representing the lack of knowledge about which event will occur. It is mathematically formalized through entropy, which measures the average amount of information produced by a stochastic source of data. High uncertainty corresponds to high entropy, indicating more information is needed to describe the system's state accurately.
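
As a small worked example of "high uncertainty corresponds to high entropy", consider two illustrative coins (assumed here for the arithmetic, not taken from any dataset) and base-2 logarithms, so the result is in bits. A fair coin is as unpredictable as a two-outcome variable can be:

\[
H_{\text{fair}} = -\left(\tfrac{1}{2}\log_2\tfrac{1}{2} + \tfrac{1}{2}\log_2\tfrac{1}{2}\right) = 1 \text{ bit}
\]

A coin that lands heads 90% of the time is easier to predict, and its entropy is correspondingly lower:

\[
H_{\text{biased}} = -\left(0.9\log_2 0.9 + 0.1\log_2 0.1\right) \approx 0.469 \text{ bits}
\]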

What is Entropy?

Entropy quantifies the degree of disorder or unpredictability within a system, commonly measured in information theory as the average amount of information produced by a stochastic source. It reflects the uncertainty inherent in a set of possible outcomes, with higher entropy indicating greater unpredictability and less information redundancy. In thermodynamics, entropy represents the measure of molecular randomness or energy dispersal in physical systems, linking microscopic states to macroscopic properties.

Mathematical Formulations: Uncertainty vs Entropy

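In probability and statistics, uncertainty is often quantified by the variance or standard deviation, which measure the spread of possible outcomes around the mean. Entropy, specifically Shannon entropy in information theory, is mathematically defined as \( H(X) = -\sum_{i} p(x_i) \log p(x_i) \), where the base of the logarithm sets the unit (base 2 gives bits, the natural logarithm gives nats). It represents the average information content or unpredictability of a random variable \(X\). Variance depends on the numerical values of the outcomes, whereas entropy depends only on their probabilities, so relabeling or rescaling the outcomes changes the variance but leaves the entropy unchanged. While variance captures dispersion in values, entropy quantifies the expected surprise or information gain from probabilistic events, and the two formulations reflect that conceptual difference.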
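
To make the contrast concrete, here is a minimal Python sketch that computes both quantities for two hypothetical two-outcome variables. The helper names `variance` and `shannon_entropy` and the example values are assumptions chosen for illustration.

```python
import math

def variance(values, probs):
    """Variance of a discrete random variable with the given outcome values."""
    mean = sum(v * p for v, p in zip(values, probs))
    return sum(p * (v - mean) ** 2 for v, p in zip(values, probs))

def shannon_entropy(probs):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Two hypothetical variables with identical probabilities but different outcome values.
probs = [0.5, 0.5]
narrow = [0, 1]    # outcomes close together
wide = [0, 100]    # outcomes far apart

print(variance(narrow, probs), shannon_entropy(probs))  # 0.25 1.0
print(variance(wide, probs), shannon_entropy(probs))    # 2500.0 1.0
```

The variance grows by a factor of 10,000 when the outcomes are spread apart, while the entropy stays at 1 bit because the probabilities did not change.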

Measuring Information: The Role of Probability

Uncertainty and entropy are fundamental concepts in measuring information, where entropy quantifies the average uncertainty inherent in a probability distribution. Probability plays a crucial role by assigning likelihoods to different outcomes, which makes it possible to calculate Shannon entropy as the negative sum, over all outcomes, of each probability multiplied by its logarithm. This mathematical framework allows information theory to model and analyze the efficiency of data encoding and transmission by evaluating the expected unpredictability of events.
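
One way to read the formula is as an average: each outcome carries an information content (often called surprisal) of \( -\log p(x_i) \), and entropy is the probability-weighted mean of those values. The sketch below illustrates this for a hypothetical four-symbol source; the names `surprisal`, `entropy`, and `source` are assumptions made for the example.

```python
import math

def surprisal(p):
    """Information content, in bits, of one outcome that has probability p."""
    return -math.log2(p)

def entropy(probs):
    """Shannon entropy in bits: the probability-weighted average surprisal."""
    return sum(p * surprisal(p) for p in probs if p > 0)

# Hypothetical source whose probabilities are powers of 1/2.
source = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}

for symbol, p in source.items():
    print(symbol, surprisal(p))   # a 1.0, b 2.0, c 3.0, d 3.0

print(entropy(source.values()))   # 1.75
```

The result, 1.75 bits per symbol, is the lower bound on the average length of any lossless code for this source. Here a prefix code with codeword lengths 1, 2, 3, and 3 bits reaches that bound exactly.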

Key Differences Between Uncertainty and Entropy

Uncertainty refers to the lack of knowledge about an event's outcome, while entropy quantifies the average amount of information produced by a stochastic source. Uncertainty is the broader concept: it can be described qualitatively or measured in several ways, such as variance or confidence intervals. Entropy is one specific quantitative metric, calculated directly from a probability distribution. In information theory, entropy provides a precise numerical value representing uncertainty in a system, enabling comparison and analysis of information content.

Real-World Applications of Entropy and Uncertainty

Entropy quantifies the unpredictability or disorder within a system and plays a crucial role in data compression, where it sets the lower bound on the average length of a lossless code; in cryptography, where it measures how unpredictable a key or random source is; and in thermodynamics, where it describes energy dispersal. Uncertainty measures the lack of confidence in predictions or outcomes, impacting decision-making processes in fields like finance, weather forecasting, and artificial intelligence. Real-world applications use entropy-based quantities in machine learning algorithms and error detection, while uncertainty quantification aids risk assessment and resource allocation in complex environments.

Uncertainty vs Entropy in Data Science

In data science, uncertainty quantifies the unpredictability of outcomes in a dataset, while entropy measures the average level of disorder or impurity within the data distribution. Entropy serves as a fundamental metric in decision trees, guiding feature selection by quantifying the reduction in uncertainty after splitting the data, a quantity known as information gain. Understanding the relationship between uncertainty and entropy enables more efficient data modeling, which can improve predictive accuracy.
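
The reduction in uncertainty after a split can be sketched in a few lines of Python. This is a minimal illustration on hypothetical toy labels, not the implementation used by any particular library; the function names and the example split are assumptions.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (bits) of the empirical class distribution in labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())

def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child subsets."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted

# Hypothetical toy labels: 4 "yes" and 4 "no" before the split.
parent = ["yes"] * 4 + ["no"] * 4
# Hypothetical split on some feature: the left branch is pure, the right is mixed.
left = ["yes"] * 3
right = ["yes"] * 1 + ["no"] * 4

print(entropy(parent))                          # 1.0
print(information_gain(parent, [left, right]))  # roughly 0.549
```

A split that separates the classes perfectly would recover the full 1 bit of parent entropy, and a split that leaves the class proportions unchanged in every branch would gain nothing.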

Visualizing Entropy and Uncertainty

Visualizing entropy involves representing the degree of disorder or randomness in a system, often using measures like Shannon entropy to quantify information content. Uncertainty captures the lack of confidence in predicting outcomes, which can be graphically depicted through probability distributions or confidence intervals. Heatmaps and entropy maps effectively illustrate spatial variations in entropy, while uncertainty can be visualized using error bars and probabilistic models to highlight prediction variability.
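
As a dependency-free stand-in for a heatmap or plotted curve, the sketch below draws the entropy of a two-outcome variable as a text bar chart. A plotting library would normally be used instead; the text rendering, the function names, and the `width` and `steps` parameters are all assumptions made to keep the example self-contained.

```python
import math

def binary_entropy(p):
    """Entropy in bits of a two-outcome variable with probabilities p and 1 - p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def text_plot(width=40, steps=10):
    """Return one line per probability value, with bar length proportional to entropy."""
    lines = []
    for k in range(steps + 1):
        p = k / steps
        h = binary_entropy(p)
        lines.append(f"p={p:.1f} {'#' * round(h * width)} {h:.3f}")
    return lines

print("\n".join(text_plot()))
```

The bars are longest at p = 0.5, where the outcome is hardest to predict, and shrink to nothing at p = 0 and p = 1, where the outcome is certain.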

Limitations and Challenges

Uncertainty in information theory reflects the unpredictability of outcomes, but its measurement can be context-dependent and lacks a universal standard across diverse applications. Entropy quantifies average uncertainty in a probability distribution, yet estimating it from data usually assumes independent, identically distributed samples, an assumption that breaks down in non-stationary or strongly correlated systems. These challenges hinder accurate modeling in real-world scenarios, necessitating methods that account for dynamic changes and correlations rather than relying on a single per-symbol Shannon entropy.
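
The effect of ignoring correlations can be illustrated with a hypothetical two-state source that tends to repeat its previous symbol. The sketch below compares the entropy of the symbol frequencies alone with the entropy rate of the chain, computed as the average entropy of the next symbol given the current one. The `stay` probability and variable names are assumptions chosen for the example.

```python
import math

def entropy(probs):
    """Shannon entropy in bits of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical two-state source that repeats its previous symbol 90% of the time.
stay = 0.9
transition = [[stay, 1 - stay],
              [1 - stay, stay]]

# The chain is symmetric, so its stationary distribution is uniform:
# with order ignored, the symbols look like fair coin flips.
stationary = [0.5, 0.5]

marginal = entropy(stationary)
# Entropy rate of a stationary Markov chain:
# the stationary-weighted entropy of each row of the transition matrix.
rate = sum(pi * entropy(row) for pi, row in zip(stationary, transition))

print(marginal)  # 1.0
print(rate)      # roughly 0.469
```

Treating the symbols as independent would suggest 1 bit of uncertainty per symbol, while the dependence on the previous symbol brings the true figure to less than half of that.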

Summary: Choosing Between Uncertainty and Entropy

Measures of uncertainty such as variance, or the surprisal \( -\log p(x) \) of a single outcome, describe spread or individual event risk, while entropy quantifies the average unpredictability across all possible outcomes. For decision-making in information theory, entropy provides a holistic assessment of the information content in a probability distribution. Selecting between the two depends on whether the focus is on individual event risk or overall system randomness.
