Markov processes describe systems whose future state depends only on the current state, not on the sequence of events that preceded it, which makes them fundamental in stochastic modeling. They are applied in finance, economics, machine learning, and other fields where outcomes must be predicted from current conditions. The rest of this article compares Markov processes with martingales, a related but distinct class of stochastic processes, and discusses when each is the appropriate model.

Table of Comparison

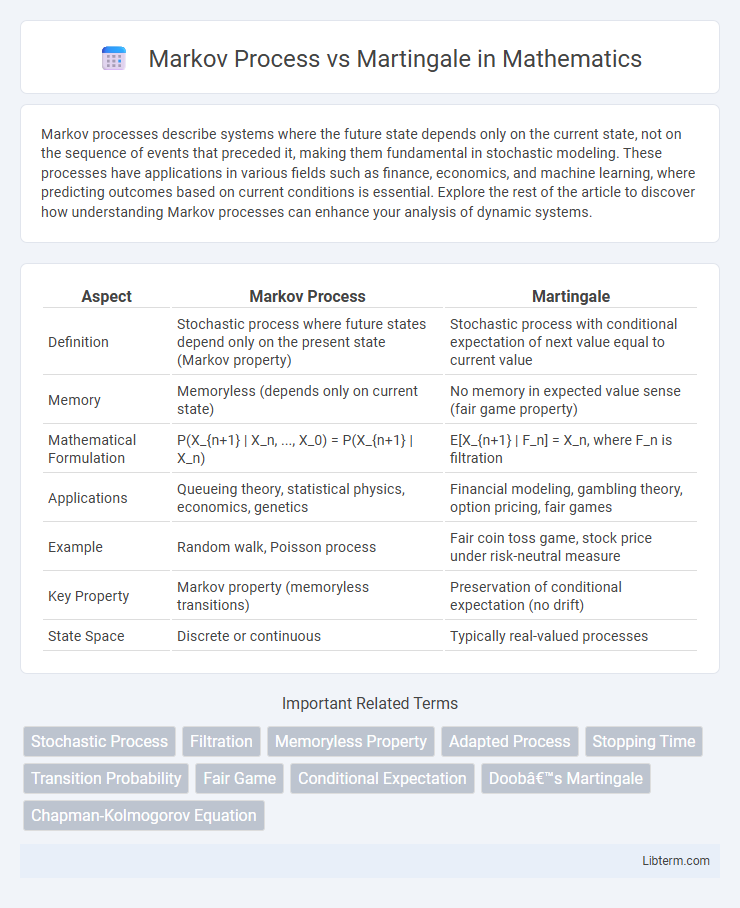

| Aspect | Markov Process | Martingale |
|---|---|---|
| Definition | Stochastic process whose future evolution depends only on the present state (Markov property) | Integrable stochastic process whose conditional expectation of the next value, given the history, equals the current value |
| Memory | Memoryless: the conditional distribution of the next state depends only on the current state | Not necessarily memoryless: only the conditional mean of the next value is constrained (fair game property) |
| Mathematical Formulation | $P(X_{n+1} \in A \mid X_n, \ldots, X_0) = P(X_{n+1} \in A \mid X_n)$ | $E[X_{n+1} \mid \mathcal{F}_n] = X_n$ and $E\lvert X_n \rvert < \infty$, where $\mathcal{F}_n$ is the filtration (information available at time $n$) |
| Applications | Queueing theory, statistical physics, economics, genetics | Financial modeling, gambling theory, option pricing, fair games |
| Example | Random walk, Poisson process | Fortune in a fair coin-toss game, discounted stock price under the risk-neutral measure |
| Key Property | Markov property (memoryless transitions) | Preservation of conditional expectation (no drift) |
| State Space | Discrete or continuous | Real-valued (or vector-valued) |

Introduction to Markov Processes and Martingales

Markov processes are stochastic models with the memoryless property: the future state depends solely on the current state, as captured by the transition probabilities of a Markov chain. Martingales are stochastic processes whose conditional expected next value, given everything observed so far, equals the present value, which formalizes the notion of a "fair game" in probability theory. Both concepts are foundational in stochastic analysis: Markov processes model random systems evolving over time, while martingales describe sequences whose current value is an unbiased forecast of the next one.

Core Definitions and Mathematical Foundations

A Markov process is a stochastic process with the memoryless property: the future state depends solely on the present state and not on the sequence of events that preceded it. In discrete time this is written $P(X_{n+1} \in A \mid X_n, \ldots, X_0) = P(X_{n+1} \in A \mid X_n)$ for every set of states $A$. A martingale is a stochastic process with $E\lvert X_n \rvert < \infty$ whose expected next value, conditioned on the past and present values, equals the current value: $E[X_{n+1} \mid X_n, \ldots, X_0] = X_n$. The Markov property constrains the entire conditional distribution of the next state, whereas the martingale property constrains only its conditional mean (the fair game condition); both are central to probability theory and stochastic analysis.
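To make the two definitions concrete, the sketch below enumerates every three-step path of a symmetric simple random walk started at 0 (an illustrative choice of process) and computes conditional distributions exactly with `fractions.Fraction`. The helpers `path_probabilities` and `conditional_law_of_last` are hypothetical names. For one particular observed history, it checks that conditioning on the whole history gives the same law for $X_3$ as conditioning on $X_2$ alone, and that the conditional mean of $X_3$ equals $X_2$.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

def path_probabilities(n_steps):
    """Map each path (X_0, ..., X_n) of a symmetric simple random walk from 0 to its probability."""
    paths = {}
    for steps in product((+1, -1), repeat=n_steps):
        path = [0]
        for s in steps:
            path.append(path[-1] + s)
        paths[tuple(path)] = Fraction(1, 2 ** n_steps)
    return paths

def conditional_law_of_last(paths, condition):
    """Law of the final value X_n, given that the earlier values satisfy `condition`."""
    weights = defaultdict(Fraction)  # Fraction() == 0
    for path, p in paths.items():
        if condition(path[:-1]):
            weights[path[-1]] += p
    total = sum(weights.values())
    return {x: w / total for x, w in weights.items()}

paths = path_probabilities(3)
history = (0, 1, 0)  # one observed history X_0, X_1, X_2

given_history = conditional_law_of_last(paths, lambda h: h == history)
given_present = conditional_law_of_last(paths, lambda h: h[-1] == history[-1])

# Markov property: the full history adds nothing beyond the present value X_2.
assert given_history == given_present
# Martingale property: the conditional mean of X_3 equals the present value X_2.
assert sum(x * p for x, p in given_history.items()) == history[-1]
print(given_history)
```

This checks a single history rather than proving the properties; for the random walk they hold for every history because each step is independent of the past and has mean zero.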

Key Differences Between Markov Processes and Martingales

Markov processes are stochastic models in which future states depend solely on the present state, whereas martingales model fair games: the conditional expected value of the next observation equals the current value, so there is no drift over time. Markov processes are specified by transition probabilities between states, while martingales are defined by a constant conditional expectation, whatever the underlying transitions. A Markov process may have a trend or drift; a martingale, by definition, cannot. Neither property implies the other: a random walk with drift is Markov but not a martingale, and a martingale whose step sizes depend on the entire past need not be Markov.
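The drift point can be made concrete with a hypothetical walk that steps +1 with probability p = 3/5 and -1 otherwise. Its transition law depends only on the current state, so it is Markov, but the sketch below shows that the expected one-step change is 2p - 1 = 1/5 at every state, so it is not a martingale. The function name `next_step_mean` is illustrative.

```python
from fractions import Fraction

def next_step_mean(x, p):
    """E[X_{n+1} | X_n = x] for a walk stepping +1 w.p. p and -1 w.p. 1 - p."""
    return (x + 1) * p + (x - 1) * (1 - p)

p = Fraction(3, 5)  # hypothetical up-probability, chosen for illustration
for x in range(-2, 3):
    drift = next_step_mean(x, p) - x
    # The drift is 2p - 1 = 1/5 at every state: Markov, but not a martingale.
    print(f"state {x:+d}: expected change {drift}")

# With p = 1/2 the drift vanishes and the same walk becomes a martingale.
assert all(next_step_mean(x, Fraction(1, 2)) == x for x in range(-2, 3))
```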

Properties Unique to Markov Processes

Markov processes exhibit the memoryless property: the future state depends solely on the present state and not on the sequence of events that preceded it. This distinguishes them from martingales, which are defined by the fair game property, where the conditional expectation of the next value, given all past values, equals the present value. A time-homogeneous Markov chain is fully characterized by its initial distribution and its transition probabilities $p_{ij} = P(X_{n+1} = j \mid X_n = i)$, and this structure underlies applications in stochastic modeling and dynamic programming.
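The transition-probability description can be sketched in plain Python. The three-state matrix `P` below is a hypothetical example; `step` applies one transition to a probability vector, and repeating it gives the multi-step distribution described by the Chapman-Kolmogorov equations (equivalently, multiplying by powers of the transition matrix).

```python
from fractions import Fraction

# Hypothetical 3-state chain: row i lists P(X_{n+1} = j | X_n = i) for j = 0, 1, 2.
P = [
    [Fraction(1, 2), Fraction(1, 2), Fraction(0)],
    [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)],
    [Fraction(0), Fraction(1, 2), Fraction(1, 2)],
]

def step(dist, P):
    """One transition: new_dist[j] = sum over i of dist[i] * P[i][j]."""
    n = len(P)
    return [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]

def distribution_after(dist, P, steps):
    """Law of X_steps from the law of X_0, by repeated one-step transitions."""
    for _ in range(steps):
        dist = step(dist, P)
    return dist

# Each row of a transition matrix must sum to 1.
assert all(sum(row) == 1 for row in P)

start = [Fraction(1), Fraction(0), Fraction(0)]  # X_0 = 0 with certainty
print(distribution_after(start, P, 2))
```

Exact fractions are used so that the probabilities can be compared without floating-point tolerance; for large chains a numerical matrix library would be the more practical choice.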

Distinct Characteristics of Martingales

Martingales are stochastic processes characterized by the fair game property: the conditional expected value of the next observation, given all past observations, equals the present value, so there is no predictable trend or drift. Unlike Markov processes, martingales do not restrict how the full distribution of the next value depends on the past; they constrain only its conditional mean. This makes martingales essential for modeling fair betting systems, pricing financial derivatives, and proving convergence theorems in probability theory.
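For a martingale, the tower property of conditional expectation gives $E[X_n] = E[X_0]$ for every $n$. The sketch below checks this exactly, using the symmetric simple random walk started at 3 as the martingale (an illustrative choice; `walk_distribution`, `mean`, and `variance` are hypothetical helper names). The distribution spreads out with each step, but its mean stays at the starting value.

```python
from collections import defaultdict
from fractions import Fraction

def walk_distribution(x0, n):
    """Exact distribution of a symmetric simple random walk after n steps from x0."""
    dist = {x0: Fraction(1)}
    for _ in range(n):
        nxt = defaultdict(Fraction)  # Fraction() == 0
        for x, p in dist.items():
            nxt[x + 1] += p / 2
            nxt[x - 1] += p / 2
        dist = dict(nxt)
    return dist

def mean(dist):
    return sum(x * p for x, p in dist.items())

def variance(dist):
    m = mean(dist)
    return sum(p * (x - m) ** 2 for x, p in dist.items())

for n in range(6):
    d = walk_distribution(3, n)
    # The mean stays at X_0 = 3 (martingale), while the variance grows like n.
    print(n, mean(d), variance(d))
```

A constant mean is necessary but not sufficient for the martingale property; the conditional mean must also equal the current value at every step.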

Real-World Applications: Markov Process vs Martingale

Markov processes are widely used to model real-world systems with memoryless dynamics, such as queueing networks, stock prices in option-pricing models, and weather. Martingales appear mainly in financial mathematics, where they underpin arbitrage pricing theory and the risk-neutral valuation of derivatives: under the risk-neutral measure, discounted asset prices are martingales. In practice, Markov processes capture transitions between states over time, while martingales express fair game conditions in which the expected future value equals the current value given the available information.
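Risk-neutral valuation can be illustrated with a one-period binomial model, sketched below under hypothetical parameters (price 100, up factor 6/5, down factor 9/10, one-period rate 1/20, strike 100). The up-probability `q` is chosen so that the discounted stock price is a martingale, and a European call is then priced as its discounted expected payoff under `q`. The function names are illustrative and not taken from any pricing library.

```python
from fractions import Fraction

def risk_neutral_q(u, d, r):
    """Up-probability under which the discounted stock price is a martingale."""
    if not d < 1 + r < u:
        raise ValueError("no-arbitrage requires d < 1 + r < u")
    return (1 + r - d) / (u - d)

def price_call(S0, u, d, r, K):
    """One-period European call price: discounted expected payoff under q."""
    q = risk_neutral_q(u, d, r)
    payoff_up = max(u * S0 - K, 0)
    payoff_down = max(d * S0 - K, 0)
    return (q * payoff_up + (1 - q) * payoff_down) / (1 + r)

# Hypothetical parameters, chosen only for illustration.
S0, u, d, r, K = Fraction(100), Fraction(6, 5), Fraction(9, 10), Fraction(1, 20), Fraction(100)

q = risk_neutral_q(u, d, r)
# Martingale check: the discounted expected next price equals today's price.
assert (q * u * S0 + (1 - q) * d * S0) / (1 + r) == S0
print(q, price_call(S0, u, d, r, K))
```

Note that `q` is not the real-world probability of an up move; it is the probability measure that makes discounted prices fair games.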

Relationship and Intersections Between Markov Processes and Martingales

Markov processes and martingales overlap in many stochastic models, even though the Markov property concerns how the future depends on the present state while the martingale property concerns only the conditional mean of the future. Some processes have both properties: standard Brownian motion and the symmetric simple random walk are Markov processes and martingales at the same time. Martingales can also be built from Markov processes, which is one reason the two concepts are used together in financial modeling, stochastic calculus, and filtering theory.
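One standard way to build a martingale from a Markov chain: if a function f of the state satisfies $E[f(X_{n+1}) \mid X_n = x] = f(x)$ for every state x (f is harmonic for the chain), then $f(X_n)$ is a martingale, provided it is integrable. The sketch below checks this exactly for a biased random walk with hypothetical up-probability p = 3/5, which is Markov but drifts; the function $f(x) = ((1-p)/p)^x$, often called de Moivre's martingale, is harmonic for it. The helper names are illustrative.

```python
from fractions import Fraction

def de_moivre(x, p):
    """f(x) = ((1 - p) / p) ** x for a walk stepping +1 w.p. p and -1 w.p. 1 - p."""
    return ((1 - p) / p) ** x

def identity(x, p):
    """f(x) = x, i.e. the walk's own position."""
    return x

def one_step_mean(f, x, p):
    """E[f(X_{n+1}) | X_n = x] for the biased simple random walk."""
    return p * f(x + 1, p) + (1 - p) * f(x - 1, p)

p = Fraction(3, 5)  # hypothetical up-probability
for x in range(-3, 4):
    # X_n itself drifts: E[X_{n+1} | X_n = x] = x + 2p - 1, so it is not a martingale ...
    assert one_step_mean(identity, x, p) == x + 2 * p - 1
    # ... but f is harmonic, so f(X_n) is a martingale.
    assert one_step_mean(de_moivre, x, p) == de_moivre(x, p)
print("f(x) = (2/3)**x is harmonic for p = 3/5")
```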

Examples Illustrating Markov Processes

The random walk is a standard example of a Markov process: the next position depends only on the current one. A simple weather model is another: tomorrow's weather depends only on today's conditions, not on the sequence of earlier weather. By contrast, martingales represent fair game sequences in which the expected future value equals the current value regardless of past history, as in fair betting or in stock-price models under the efficient market hypothesis.
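The weather example can be sketched as a two-state Markov chain. The transition probabilities below (a sunny day is followed by a sunny day with probability 9/10, a rainy day by a sunny day with probability 1/2) are hypothetical. `tomorrow` propagates today's distribution one day forward using only today's state, and the closed-form stationary distribution of a two-state chain, $\pi(\text{sunny}) = b/(a+b)$, is checked to be unchanged by one step.

```python
from fractions import Fraction

# Hypothetical two-state weather chain; the probabilities are illustrative only.
P = {
    "sunny": {"sunny": Fraction(9, 10), "rainy": Fraction(1, 10)},
    "rainy": {"sunny": Fraction(1, 2), "rainy": Fraction(1, 2)},
}

def tomorrow(dist):
    """Propagate today's weather distribution one day forward (depends on today only)."""
    return {s: sum(dist[t] * P[t][s] for t in P) for s in P}

def stationary_two_state():
    """Closed form for a two-state chain: pi(sunny) = b / (a + b)."""
    a = P["sunny"]["rainy"]  # probability of switching sunny -> rainy
    b = P["rainy"]["sunny"]  # probability of switching rainy -> sunny
    return {"sunny": b / (a + b), "rainy": a / (a + b)}

pi = stationary_two_state()
assert tomorrow(pi) == pi  # a stationary distribution is unchanged by one step
print(pi)
print(tomorrow({"sunny": Fraction(1), "rainy": Fraction(0)}))  # forecast after a sunny day
```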

Examples Demonstrating Martingales

Martingales are stochastic processes in which the conditional expectation of the next value, given all past values, equals the present value; fair games such as betting on an unbiased coin and the symmetric random walk are standard examples. The classic case is a gambler's fortune under fair bets, where the expected cumulative gain stays zero over time. By contrast, Markov processes, such as many stock-price models and queueing systems, are defined by future states depending only on the present state, with no requirement that the expected change be zero. This zero-drift requirement is what distinguishes martingales from general Markov processes.
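The zero-expected-gain property of a gambler's fortune can be checked exactly by enumeration. The sketch assumes a fair +1/-1 coin, a finite number of rounds, and unlimited credit (the fortune may go negative); the hypothetical strategy `doubling_bet` doubles its stake after each loss. Because every stake depends only on outcomes already observed, the final fortune averaged over all equally likely sequences equals the starting fortune.

```python
from fractions import Fraction
from itertools import product

def doubling_bet(history):
    """Hypothetical strategy: stake 1 at the start or after a win, double after a loss."""
    bet = 1
    for outcome in history:
        bet = 1 if outcome == +1 else 2 * bet
    return bet

def expected_final_fortune(start, n_rounds, strategy):
    """Average the final fortune over all 2**n_rounds equally likely fair-coin sequences."""
    total = Fraction(0)
    for outcomes in product((+1, -1), repeat=n_rounds):
        fortune = start
        for k, outcome in enumerate(outcomes):
            # The stake may depend only on outcomes already observed (outcomes[:k]).
            fortune += strategy(outcomes[:k]) * outcome
        total += fortune
    return total / 2 ** n_rounds

print(expected_final_fortune(10, 6, doubling_bet))  # prints 10
```

No strategy of this kind changes the expected fortune; it can only reshape the distribution of outcomes, for example trading frequent small wins for rare large losses.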

Summary: Choosing the Right Model for Stochastic Analysis

Markov processes model systems whose future states depend solely on the current state, which makes them the natural tool for analyzing memoryless stochastic systems and transition probabilities. Martingales model fair game scenarios in which the conditional expectation of the next value equals the present value, which makes them useful for unbiased stochastic processes in finance and gambling. The two are not mutually exclusive: the choice depends on whether the analysis centers on state-dependent dynamics or on conditional-expectation properties, and some processes, such as Brownian motion, are naturally treated as both.

Markov Process Infographic

libterm.com
