Ergodicity is a fundamental concept in statistics and physics: in an ergodic system, time averages along a typical trajectory converge to ensemble averages. This property makes it possible to analyze a complex system from a single long trajectory instead of many independent instances, which simplifies the prediction and understanding of dynamic behavior. Mixing is a stronger property of the same kind, and this article compares the two.

Table of Comparison

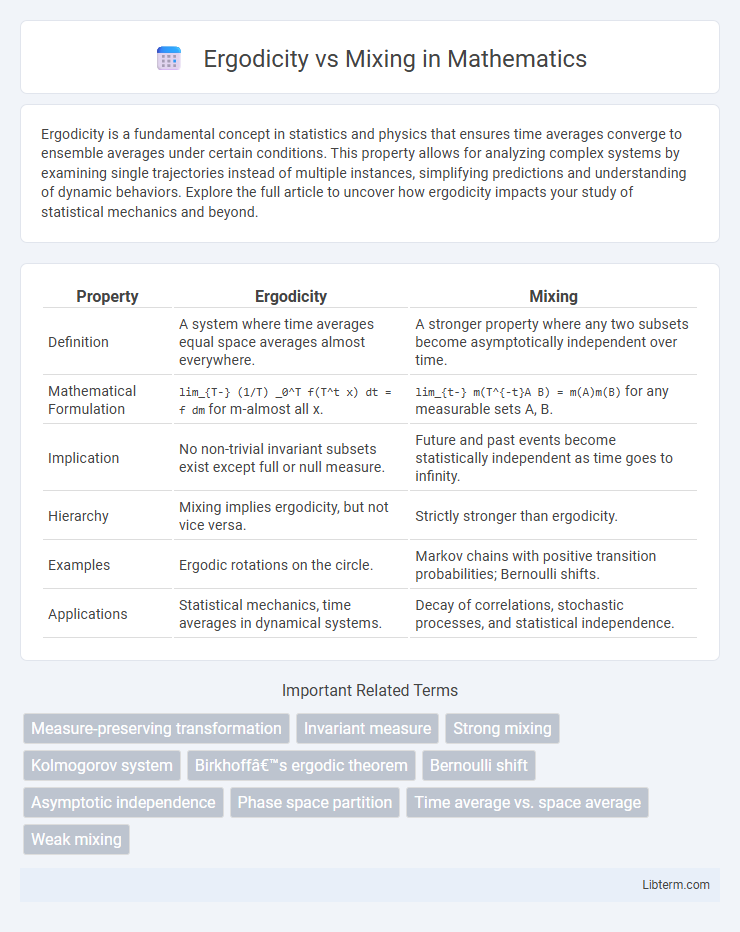

| Property | Ergodicity | Mixing |
|---|---|---|
| Definition | A system in which time averages equal space averages for almost every initial point. | A stronger property in which any two measurable sets become asymptotically independent over time. |
| Mathematical Formulation | $\lim_{S\to\infty} \frac{1}{S}\int_0^S f(T^t x)\,dt = \int f\,dm$ for every integrable $f$ and $m$-almost all $x$, where $T^t$ is the flow and $S$ the averaging time. | $\lim_{t\to\infty} m(T^{-t}A \cap B) = m(A)\,m(B)$ for any measurable sets $A$, $B$. |
| Implication | Every invariant set has measure zero or full measure. | Events far apart in time become statistically independent as the time gap goes to infinity. |
| Hierarchy | Implied by mixing, but does not imply it. | Strictly stronger than ergodicity. |
| Examples | Irrational rotations of the circle. | Markov chains with positive transition probabilities; Bernoulli shifts. |
| Applications | Statistical mechanics, time averages in dynamical systems. | Decay of correlations, stochastic processes, and statistical independence. |

Introduction to Ergodicity and Mixing

Ergodicity is a property of measure-preserving dynamical systems in which time averages of observables equal their space averages, so that long-term statistical behavior is the same for almost every initial condition. Mixing strengthens this by requiring that any two measurable sets become statistically independent as time progresses, which expresses a loss of memory of the initial state. Both concepts are fundamental in statistical mechanics and chaos theory for understanding predictability and equilibrium states.

Defining Ergodicity in Dynamical Systems

Ergodicity in dynamical systems is the property that, for almost every initial condition, time averages of a system's observables converge to the space averages taken over the entire phase space with respect to the invariant measure. Equivalently, a typical trajectory spends in each region a fraction of time proportional to that region's measure. This concept is crucial in statistical mechanics and chaos theory for connecting microscopic dynamics with macroscopic statistical behavior. In an ergodic system, long-term averages do not depend on the initial condition (outside a set of measure zero), which is what allows equilibrium properties to be predicted from a single trajectory.
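As a rough numerical illustration of "time average equals space average", the sketch below uses rotations of the circle. The rotation numbers, the test arc, the starting points, and all function names are assumptions chosen for this example. With an irrational rotation number the time average of the arc's indicator approaches the arc length from every start; with a rational one it depends on the start.

```python
import math


def birkhoff_average(f, x0, alpha, n_steps):
    """Average f along the orbit of x0 under the rotation x -> (x + alpha) mod 1."""
    total = 0.0
    x = x0
    for _ in range(n_steps):
        total += f(x)
        x = (x + alpha) % 1.0
    return total / n_steps


def in_first_quarter(x):
    """Indicator of the arc [0, 0.25); its space average (Lebesgue measure) is 0.25."""
    return 1.0 if x < 0.25 else 0.0


starts = (0.0, 0.3, 0.77)
n_steps = 100_000

# Irrational rotation number (golden-ratio conjugate): ergodic for Lebesgue measure.
alpha_irrational = (math.sqrt(5) - 1) / 2
irrational_averages = [
    birkhoff_average(in_first_quarter, x0, alpha_irrational, n_steps) for x0 in starts
]

# Rational rotation number: every orbit is periodic, so the rotation is not ergodic.
rational_averages = [
    birkhoff_average(in_first_quarter, x0, 0.5, n_steps) for x0 in starts
]

print("irrational:", irrational_averages)
print("rational:  ", rational_averages)
```

The rational case is included only as a contrast: its orbits have period two, so the average records which two points the orbit visits rather than the measure of the arc.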

Understanding Mixing in Mathematics

Mixing in mathematics is a strong form of asymptotic statistical independence: as time passes, the state of a dynamical system becomes uncorrelated with its initial state, so that for any two measurable sets A and B the measure of the points that start in B and land in A after time t approaches m(A)m(B). Mixing implies ergodicity and, in addition, guarantees that correlations between observables decay to zero, although the definition alone says nothing about how fast they decay. Understanding mixing helps in analyzing chaotic systems and the convergence of probability measures under measure-preserving transformations.
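The mixing condition can be checked numerically on a simple model. The sketch below assumes the doubling map x -> 2x mod 1 with Lebesgue measure, which is not discussed in the text but is a standard mixing example. The sets A and B, the sample size, and the function names are likewise choices made here. It estimates m(T^{-n}A ∩ B) by Monte Carlo and compares it with m(A)m(B).

```python
import random


def doubling_map(x):
    """The doubling map x -> 2x mod 1, which preserves Lebesgue measure on [0, 1)."""
    return (2.0 * x) % 1.0


def joint_measure(n, in_a, in_b, samples):
    """Monte Carlo estimate of m(T^{-n}A intersect B): x lies in B and T^n x lies in A."""
    hits = 0
    for x in samples:
        y = x
        for _ in range(n):
            y = doubling_map(y)
        if in_b(x) and in_a(y):
            hits += 1
    return hits / len(samples)


def in_a(x):
    return x < 0.5  # A = [0, 0.5), so m(A) = 0.5


def in_b(x):
    return x < 0.3  # B = [0, 0.3), so m(B) = 0.3


rng = random.Random(0)  # fixed seed so the run is reproducible
samples = [rng.random() for _ in range(200_000)]

# Keep n well below 53: each doubling discards one bit of a double-precision float.
estimates = {n: joint_measure(n, in_a, in_b, samples) for n in (0, 1, 2, 5, 12)}
product = 0.5 * 0.3

for n, value in estimates.items():
    print(f"n={n:2d}  estimate={value:.4f}  m(A)m(B)={product:.2f}")
```

At n = 0 the estimate is close to m(A ∩ B) = 0.3, and by n = 12 it is close to the product 0.15, up to sampling error.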

Key Differences: Ergodicity vs Mixing

Ergodicity is the property that time averages equal space averages: a typical trajectory visits every region of positive measure and spends time there in proportion to its measure (it does not literally pass through every point of the phase space). Mixing, a stronger property, requires that any two subsets of the phase space become statistically independent as time progresses, so that memory of the initial state is lost. The key difference is that ergodicity is a statement about averages over time, while mixing is a statement about the limit at each large time. An irrational rotation of the circle, for example, is ergodic, yet it moves sets rigidly and never decorrelates them.
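That mixing is the stronger property follows from a short argument. The derivation below is a sketch using the notation of the comparison table (invariant measure m, time evolution T^t) and the characterization of ergodicity by invariant sets. It assumes A is an invariant set and applies the mixing condition with B = A.

```latex
% Assumption: A is an invariant set, i.e. T^{-t}A = A for all t.
\begin{align*}
m(A) &= m(A \cap A) = m(T^{-t}A \cap A) && \text{(invariance of } A\text{)} \\
     &\xrightarrow[t\to\infty]{} m(A)\,m(A) && \text{(mixing with } B = A\text{)}
\end{align*}
% The left-hand side does not depend on t, so m(A) = m(A)^2, hence m(A) \in \{0, 1\}:
% every invariant set is trivial, which is ergodicity.
```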

Physical and Mathematical Significance

Ergodicity in dynamical systems ensures that time averages of observables converge to ensemble averages, which provides a foundation for equilibrium statistical mechanics. Mixing is a stronger property in which correlations between observables decay to zero, so that an initial distribution with a density spreads out toward the invariant measure; this is the mathematical counterpart of thermalization. Mathematically, ergodicity means that the invariant measure is indecomposable (it cannot be written as a nontrivial combination of other invariant measures), while mixing gives asymptotic independence, which, usually together with a rate of decay, is a common ingredient in proofs of limit theorems for stochastic processes and chaotic dynamics.
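Decay of correlations can be computed by hand in simple cases. The worked example below assumes the doubling map T(x) = 2x mod 1 on [0, 1) with Lebesgue measure m and the observable f(x) = x. Both are choices made for illustration and do not come from the text. In this particular case the correlation happens to decay exponentially; mixing by itself would only guarantee that it tends to zero.

```latex
% Assumed example: T(x) = 2x \bmod 1, f(x) = x, N = 2^n.
% Substitute x = (k + u)/N with k = 0, \dots, N-1 and u \in [0, 1), so that T^n x = u.
\begin{align*}
\int_0^1 f(x)\, f(T^n x)\, dx
  &= \sum_{k=0}^{N-1} \int_0^1 \frac{k+u}{N}\, u\, \frac{du}{N}
   = \frac{1}{N^2} \sum_{k=0}^{N-1} \left( \frac{k}{2} + \frac{1}{3} \right)
   = \frac{1}{4} + \frac{1}{12N}, \\
C_n &= \int_0^1 f \,(f \circ T^n)\, dm - \left( \int_0^1 f\, dm \right)^2
   = \frac{1}{12 \cdot 2^n} \xrightarrow[n\to\infty]{} 0 .
\end{align*}
```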

Historical Context and Development

The concepts of ergodicity and mixing grew out of Ludwig Boltzmann's late-19th-century attempts to justify statistical mechanics from the underlying dynamics. The formal mathematical development gained momentum in the early 1930s, when George Birkhoff and John von Neumann proved the ergodic theorems linking time averages and space averages. Mixing theory evolved later to describe stronger forms of statistical independence, with notable advances by Sinai and Kolmogorov in the mid-20th century that secured the place of both concepts in chaos theory and statistical physics.

Examples of Ergodic Systems

The simplest ergodic system is an irrational rotation of the circle, whose orbits equidistribute even though the map is not mixing. The Bernoulli shift is another basic ergodic transformation, showing statistical uniformity despite deterministic rules. In physics, the classic candidate is a gas of hard spheres in a rigid container; an ideal gas of non-interacting particles is not ergodic, because each particle conserves its own energy and the trajectory cannot explore the full energy surface. Strongly chaotic Hamiltonian systems, such as dispersing billiards, are ergodic on their energy surface, so that time averages equal ensemble averages.
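An ergodic system need not be mixing, and the circle rotation shows why. The sketch below assumes a rotation by the golden-ratio conjugate and an arc A of length 0.1. The rotation number, the arc, and the function names are choices made for this illustration. For a rotation, the measure of T^{-n}A ∩ A can be computed exactly, and it keeps returning close to m(A) = 0.1 instead of settling at m(A)^2 = 0.01.

```python
import math


def circle_distance(x):
    """Distance from x to the nearest integer, i.e. the distance to 0 on the circle R/Z."""
    frac = x % 1.0
    return min(frac, 1.0 - frac)


def overlap_after(n, alpha, arc_length):
    """Exact Lebesgue measure of T^{-n}A intersect A for the arc A = [0, arc_length).

    T is the rotation by alpha; the formula assumes arc_length < 0.5, so the
    rotated arc can overlap the original arc in at most one piece.
    """
    shift = circle_distance(n * alpha)
    return max(0.0, arc_length - shift)


alpha = (math.sqrt(5) - 1) / 2  # irrational rotation number (golden-ratio conjugate)
arc_length = 0.1

overlaps = [overlap_after(n, alpha, arc_length) for n in range(1, 1001)]
late = overlaps[500:]  # n = 501 .. 1000

print("mixing would require the overlap to approach", arc_length ** 2)
print("largest overlap for n in 501..1000: ", max(late))
print("smallest overlap for n in 501..1000:", min(late))
```

The overlap oscillates between zero and nearly the full arc length for arbitrarily large n, so it has no limit and the mixing condition fails, even though the same rotation is ergodic.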

Examples of Mixing Systems

In a mixing system, any two subsets of the phase space become statistically independent as time evolves; the Bernoulli shift and the baker's map are the classical examples. The Bernoulli shift acts on sequences of independent random variables by shifting them one place, so events determined by far-apart coordinates are independent and correlations are lost over time. The baker's map makes mixing visible: it stretches the unit square horizontally, cuts it, and stacks the two halves, so any blob of points is rapidly spread over the whole square.
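The homogenization produced by the baker's map can be sketched numerically. The code below assumes the usual cut-and-stack form of the map on the unit square. The initial blob, the 4 x 4 grid, the number of iterations, and the function names are choices made for this illustration. It starts with all points in a small corner square and measures how evenly they fill the grid cells after a few iterations.

```python
import random


def bakers_map(x, y):
    """One step of the baker's map: stretch in x, squeeze in y, cut and stack."""
    if x < 0.5:
        return 2.0 * x, 0.5 * y
    return 2.0 * x - 1.0, 0.5 * (y + 1.0)


def cell_fractions(points, grid=4):
    """Fraction of points falling in each cell of a grid x grid partition of the square."""
    counts = [[0] * grid for _ in range(grid)]
    for x, y in points:
        i = min(int(x * grid), grid - 1)
        j = min(int(y * grid), grid - 1)
        counts[i][j] += 1
    total = len(points)
    return [[c / total for c in row] for row in counts]


rng = random.Random(0)  # fixed seed so the run is reproducible
blob = [(0.1 * rng.random(), 0.1 * rng.random()) for _ in range(100_000)]

points = blob
for _ in range(12):  # keep the step count small: each step discards one bit of x
    points = [bakers_map(x, y) for x, y in points]

initial = cell_fractions(blob)
final = cell_fractions(points)

print("initial fraction in corner cell:", initial[0][0])
for row in final:
    print(" ".join(f"{value:.3f}" for value in row))
```

Initially every point sits in one of the sixteen cells. After twelve steps each cell holds roughly 1/16 of the points, which is the uniform spreading that mixing predicts for an initial distribution with a density.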

Applications in Physics and Mathematics

In physics, ergodicity justifies replacing time averages by ensemble averages, which statistical mechanics and thermodynamics rely on to predict macroscopic properties from microscopic states. Mixing describes stronger chaotic behavior in which any initial distribution with a density converges (weakly) to the invariant measure, which is relevant to turbulence, diffusion processes, and relaxation to equilibrium. In mathematics, ergodic theory studies the long-term behavior of measure-preserving transformations, while rates of mixing quantify how fast a system converges to equilibrium in probabilistic and dynamical frameworks.

Conclusion: Ergodicity and Mixing Compared

Ergodicity ensures that time averages converge to ensemble averages, while mixing is a stronger form of asymptotic statistical independence in which the system's states become uncorrelated over time. Every mixing system is ergodic, but not every ergodic system is mixing, so the two properties form a hierarchy of dynamical behavior. This distinction matters in fields like statistical mechanics and chaos theory, where the degree of randomness a model assumes affects both its accuracy and its interpretation.


libterm.com
