The null space of a matrix consists of all vectors that, when multiplied by the matrix, give the zero vector. Understanding the null space is essential for solving homogeneous linear systems, determining a matrix's rank, and deciding whether its columns are linearly independent. Read on to see how the null space compares with the orthogonal complement and how each one shapes linear algebra applications and problem-solving strategies.

Table of Comparison

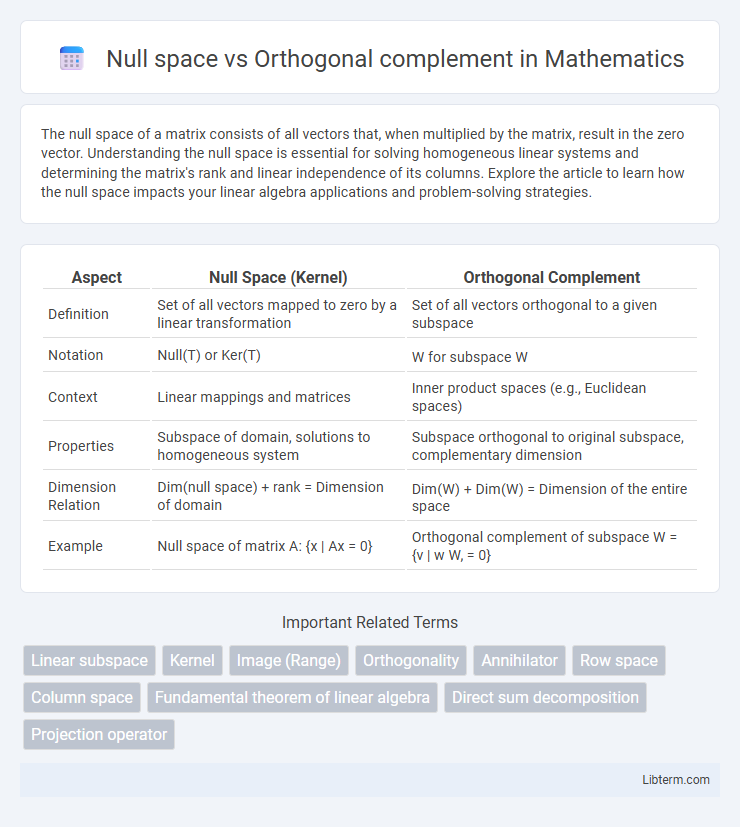

| Aspect | Null Space (Kernel) | Orthogonal Complement |
|---|---|---|
| Definition | Set of all vectors mapped to zero by a linear transformation | Set of all vectors orthogonal to every vector in a given subspace |
| Notation | Null(T) or Ker(T) | W⊥ for subspace W |
| Context | Linear mappings and matrices | Inner product spaces (e.g., Euclidean spaces) |
| Properties | Subspace of the domain; solution set of the homogeneous system | Subspace orthogonal to the original subspace, with complementary dimension |
| Dimension Relation | dim(null space) + rank = dimension of the domain | dim(W) + dim(W⊥) = dimension of the entire space (finite-dimensional case) |
| Example | Null space of matrix A: {x : Ax = 0} | Orthogonal complement of subspace W: W⊥ = {v : v · w = 0 for all w ∈ W} |

Introduction to Null Space and Orthogonal Complement

The null space of a matrix A consists of all vectors x satisfying Ax = 0, representing the solution set to homogeneous linear equations and revealing dependencies among columns of A. The orthogonal complement of a subspace W in an inner product space includes all vectors orthogonal to every vector in W, serving as a geometric counterpart that isolates directions perpendicular to W. Understanding the null space and orthogonal complement is fundamental in linear algebra for analyzing linear transformations, solving systems of equations, and decomposing vector spaces into meaningful components.

Definitions: Null Space Explained

The null space of a matrix A consists of all vectors x such that Ax = 0, that is, the solution set of the homogeneous equation. It is a subspace of the domain and is exactly the kernel of the linear transformation x ↦ Ax. Because Ax is the combination of the columns of A with coefficients x1, ..., xn, every nonzero vector in the null space records a linear dependence among those columns. The orthogonal complement, in contrast, is the set of all vectors orthogonal to a given subspace. For a matrix A, the relevant cases are the orthogonal complement of the row space (which is the null space of A) and of the column space (which is the null space of Aᵀ); both appear in projections and least squares problems.
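To make the definition concrete, here is a minimal sketch in plain Python that computes a basis of the null space by row-reducing A and reading off one basis vector per free column. It uses `fractions.Fraction` for exact arithmetic, so no floating-point tolerance is needed. The helper names `rref`, `null_space_basis`, and `matvec` are illustrative only, not from any library; numerical libraries such as SciPy compute null spaces differently (via the SVD).

```python
from fractions import Fraction


def rref(A):
    """Return (R, pivots): the reduced row echelon form of A and its pivot columns."""
    R = [[Fraction(x) for x in row] for row in A]
    m, n = len(R), len(R[0])
    pivots = []
    r = 0  # row where the next pivot will be placed
    for c in range(n):
        # Look for a nonzero entry in column c at or below row r.
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue  # no pivot here: column c is a free column
        R[r], R[p] = R[p], R[r]
        pivot = R[r][c]
        R[r] = [x / pivot for x in R[r]]  # scale the pivot to 1
        for i in range(m):  # clear column c in every other row
            if i != r and R[i][c] != 0:
                f = R[i][c]
                R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == m:
            break
    return R, pivots


def null_space_basis(A):
    """One basis vector of N(A) per free column: set that free variable to 1,
    the other free variables to 0, and solve for the pivot variables."""
    R, pivots = rref(A)
    n = len(A[0])
    basis = []
    for f in (c for c in range(n) if c not in pivots):
        x = [Fraction(0)] * n
        x[f] = Fraction(1)
        for row, p in enumerate(pivots):
            x[p] = -R[row][f]
        basis.append(x)
    return basis


def matvec(A, x):
    return [sum(Fraction(a) * xi for a, xi in zip(row, x)) for row in A]


A = [[1, 2, 3],
     [2, 4, 6]]  # second row is twice the first, so rank(A) = 1
basis = null_space_basis(A)
print([[str(v) for v in x] for x in basis])  # → [['-2', '1', '0'], ['-3', '0', '1']]
assert all(matvec(A, x) == [0, 0] for x in basis)
```

Each basis vector corresponds to one free variable, so the number of basis vectors is the nullity \( n - \operatorname{rank}(A) \); here \( 3 - 1 = 2 \).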

Understanding the Orthogonal Complement

The orthogonal complement of a subspace W consists of all vectors that are orthogonal to every vector in W. Unlike the null space, which is defined by a linear transformation (the vectors it sends to zero), the orthogonal complement is defined by an inner product: it collects the directions perpendicular to W, which is exactly what projections and least squares solutions rely on. In a finite-dimensional inner product space V, every subspace W gives the direct-sum decomposition \( V = W \oplus W^\perp \), so each vector splits uniquely into a component in W and a component in \( W^\perp \). This decomposition is a basic tool in advanced applications such as spectral theory and functional analysis, where in a Hilbert space it holds for closed subspaces.
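A minimal sketch of the decomposition \( \mathbf{v} = \mathbf{w} + \mathbf{w}_\perp \) in \( \mathbb{R}^n \), assuming the standard dot product: the spanning vectors of W are orthogonalized with Gram-Schmidt (without normalization, so `Fraction` arithmetic stays exact), \( \mathbf{v} \) is projected onto W, and the remainder is the component in \( W^\perp \). The function names and the example subspace are hypothetical, chosen only for illustration.

```python
from fractions import Fraction


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def orthogonal_basis(vectors):
    """Gram-Schmidt without normalization; vectors that depend on earlier ones are dropped."""
    basis = []
    for v in vectors:
        w = [Fraction(x) for x in v]
        for u in basis:
            coef = dot(w, u) / dot(u, u)  # component of w along u
            w = [a - coef * b for a, b in zip(w, u)]
        if any(x != 0 for x in w):
            basis.append(w)
    return basis


def decompose(v, spanning_set):
    """Split v into (w, w_perp) with w in W = span(spanning_set) and w_perp in W-perp."""
    v = [Fraction(x) for x in v]
    w = [Fraction(0)] * len(v)
    for u in orthogonal_basis(spanning_set):
        coef = dot(v, u) / dot(u, u)  # projection onto each orthogonal basis vector
        w = [a + coef * b for a, b in zip(w, u)]
    w_perp = [a - b for a, b in zip(v, w)]
    return w, w_perp


spanning_set = [[1, 1, 0], [0, 1, 1]]  # W is a plane through the origin in R^3
w, w_perp = decompose([1, 2, 3], spanning_set)
print([str(x) for x in w], [str(x) for x in w_perp])
# → ['1/3', '8/3', '7/3'] ['2/3', '-2/3', '2/3']
assert all(dot(w_perp, s) == 0 for s in spanning_set)
```

Here \( W^\perp \) is the line spanned by \( (1, -1, 1) \), and `w_perp` is \( \tfrac{2}{3} \) of that vector; since both pieces are unique, this is the direct sum \( \mathbb{R}^3 = W \oplus W^\perp \) at work.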

Mathematical Formulation of Null Space

The null space of a matrix \( A \in \mathbb{R}^{m \times n} \) is defined as the set of all vectors \( \mathbf{x} \in \mathbb{R}^n \) such that \( A\mathbf{x} = \mathbf{0} \); it is a subspace of \( \mathbb{R}^n \). In contrast, the orthogonal complement of a subspace \( V \subseteq \mathbb{R}^n \) consists of all vectors orthogonal to every vector in \( V \), symbolically \( V^\perp = \{\mathbf{y} \in \mathbb{R}^n : \mathbf{y}^\top \mathbf{v} = 0, \forall \mathbf{v} \in V \} \). The null space is precisely the orthogonal complement of the row space of \( A \): each row of \( A \) defines a linear functional on \( \mathbb{R}^n \), and the null space is the intersection of their kernels, i.e., the set of vectors orthogonal to every row and therefore to every vector in the row space.
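The row-space claim can be checked directly. Write \( \mathbf{r}_1^\top, \dots, \mathbf{r}_m^\top \) for the rows of \( A \): the entries of \( A\mathbf{x} \) are the dot products \( \mathbf{r}_i^\top \mathbf{x} \), and by linearity of the dot product, being orthogonal to each row is the same as being orthogonal to every linear combination of the rows.

```latex
\begin{aligned}
A\mathbf{x} = \mathbf{0}
&\iff \mathbf{r}_i^\top \mathbf{x} = 0 \quad (i = 1, \dots, m) \\
&\iff \Big(\textstyle\sum_{i=1}^{m} c_i \mathbf{r}_i\Big)^{\top} \mathbf{x} = 0 \quad \text{for all } c_1, \dots, c_m \in \mathbb{R} \\
&\iff \mathbf{x} \in \operatorname{Row}(A)^\perp,
\qquad\text{so}\qquad \mathcal{N}(A) = \operatorname{Row}(A)^\perp .
\end{aligned}
```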

Mathematical Formulation of Orthogonal Complement

The orthogonal complement of a subspace \( V \subseteq \mathbb{R}^n \) is defined as \( V^\perp = \{ \mathbf{y} \in \mathbb{R}^n \mid \mathbf{y} \cdot \mathbf{v} = 0 \text{ for all } \mathbf{v} \in V \} \), the set of all vectors orthogonal to every vector in \( V \). The null space of a matrix \( A \), denoted \( \mathcal{N}(A) \), is the set \( \{\mathbf{x} \in \mathbb{R}^n \mid A\mathbf{x} = \mathbf{0}\} \); it is the special case \( V = \operatorname{Row}(A) \), since \( \mathcal{N}(A) = \operatorname{Row}(A)^\perp \). Every subspace satisfies \( \dim(V) + \dim(V^\perp) = n \); applied to \( V = \operatorname{Row}(A) \), this is the rank-nullity theorem, \( \operatorname{rank}(A) + \dim \mathcal{N}(A) = n \).
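As a small worked check of \( \dim(V) + \dim(V^\perp) = n \) (the subspace is chosen only for illustration), take the line \( V = \operatorname{span}\{(1,1,0)\} \) in \( \mathbb{R}^3 \). A vector is orthogonal to \( V \) exactly when it is orthogonal to the spanning vector, which is one linear constraint:

```latex
V^\perp = \{\mathbf{y} \in \mathbb{R}^3 : y_1 + y_2 = 0\}
        = \operatorname{span}\{(1,-1,0),\ (0,0,1)\},
\qquad
\dim(V) + \dim(V^\perp) = 1 + 2 = 3 = n .
```

Equivalently, \( V^\perp \) is the null space of the \( 1 \times 3 \) matrix \( \begin{bmatrix} 1 & 1 & 0 \end{bmatrix} \), whose single row spans \( V \).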

Key Differences Between Null Space and Orthogonal Complement

The null space consists of all vectors mapped to the zero vector by a linear transformation, i.e., the solutions of a homogeneous system, while the orthogonal complement consists of all vectors orthogonal to a given subspace of an inner product space. The null space is attached to a matrix or linear operator and needs no inner product; the orthogonal complement is attached to a subspace and is only defined once an inner product is fixed. Dimensionally, the null space of an \( m \times n \) matrix \( A \) has dimension \( n - \operatorname{rank}(A) \) (the nullity), whereas the orthogonal complement of a subspace \( W \) of an \( n \)-dimensional space has dimension \( n - \dim(W) \), so that \( W \) and \( W^\perp \) together account for the whole ambient space.
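A minimal sketch of the dimension bookkeeping, assuming real matrices and the standard dot product: the rank is counted as the number of pivots found by exact Gaussian elimination, and the nullity and the dimension of the left null space \( \mathcal{N}(A^\top) = \operatorname{Col}(A)^\perp \) then follow from the complement relation in \( \mathbb{R}^n \) and \( \mathbb{R}^m \). The function names and the example matrix are hypothetical.

```python
from fractions import Fraction


def rank(A):
    """Rank of A = number of pivots found by exact Gaussian elimination."""
    R = [[Fraction(x) for x in row] for row in A]
    m, n = len(R), len(R[0])
    r = 0  # number of pivots found so far
    for c in range(n):
        p = next((i for i in range(r, m) if R[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column
        R[r], R[p] = R[p], R[r]
        for i in range(r + 1, m):  # eliminate entries below the pivot
            f = R[i][c] / R[r][c]
            R[i] = [a - f * b for a, b in zip(R[i], R[r])]
        r += 1
        if r == m:
            break
    return r


def transpose(A):
    return [list(col) for col in zip(*A)]


def dimension_report(A):
    m, n = len(A), len(A[0])
    rk = rank(A)
    return {
        "rank": rk,              # dim Row(A) = dim Col(A)
        "nullity": n - rk,       # dim N(A) = dim Row(A)-perp, inside R^n
        "left_nullity": m - rk,  # dim N(A^T) = dim Col(A)-perp, inside R^m
    }


A = [[1, 2, 0, 1],
     [0, 0, 1, 1],
     [1, 2, 1, 2]]  # third row = first row + second row
assert rank(A) == rank(transpose(A))  # row rank equals column rank
print(dimension_report(A))  # → {'rank': 2, 'nullity': 2, 'left_nullity': 1}
```

Note that \( \mathcal{N}(A) \) lives in \( \mathbb{R}^4 \) while \( \mathcal{N}(A^\top) \) lives in \( \mathbb{R}^3 \) for this matrix.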

Geometric Interpretation of Null Space and Orthogonal Complement

Geometrically, the null space of a matrix consists of the input directions that the transformation flattens to the origin: any two inputs that differ by a null-space vector produce the same output, so this subspace is exactly where the linear mapping loses information. The orthogonal complement of a subspace consists of all vectors that have zero inner product with every vector of that subspace, i.e., the directions perpendicular to it; for a plane through the origin in \( \mathbb{R}^3 \), it is the normal line. The two pictures meet at the row space: the directions a matrix collapses are exactly the directions perpendicular to its rows.
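A standard illustration, chosen here for concreteness, is the orthogonal projection of \( \mathbb{R}^3 \) onto the \( xy \)-plane: the \( z \)-axis is flattened to the origin, and it is exactly the set of directions perpendicular to the row space.

```latex
P = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix},
\qquad
P\begin{bmatrix} x \\ y \\ z \end{bmatrix} = \begin{bmatrix} x \\ y \\ 0 \end{bmatrix},
\qquad
\mathcal{N}(P) = \operatorname{span}\{\mathbf{e}_3\}
= \big(\operatorname{span}\{\mathbf{e}_1, \mathbf{e}_2\}\big)^\perp
= \operatorname{Row}(P)^\perp .
```

Any two inputs that differ only in their \( z \)-coordinate map to the same output, which is the information this map loses.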

Applications in Linear Algebra and Beyond

The null space of a matrix consists of all vectors mapped to the zero vector; it describes the full solution set of a homogeneous linear system, and through the rank-nullity theorem its dimension determines the matrix rank. The orthogonal complement, the set of vectors orthogonal to a given subspace, underlies orthogonal projections and least squares solutions, and it is used to extend an orthonormal basis of a subspace to one of the whole space. Both concepts extend beyond linear algebra into signal processing, data compression, and machine learning, where understanding vector subspaces facilitates dimensionality reduction and feature extraction.
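One place the orthogonal complement does concrete work is least squares: the best-fit solution \( \hat{\mathbf{x}} \) of \( A\mathbf{x} \approx \mathbf{b} \) leaves a residual \( \mathbf{b} - A\hat{\mathbf{x}} \) that lies in \( \operatorname{Col}(A)^\perp = \mathcal{N}(A^\top) \). The minimal sketch below solves the normal equations \( A^\top A \hat{\mathbf{x}} = A^\top \mathbf{b} \) exactly for a matrix with two linearly independent columns (a simplifying assumption so that Cramer's rule applies) and checks that orthogonality. The function names and data points are made up for illustration; production code would typically use a QR- or SVD-based solver rather than forming \( A^\top A \).

```python
from fractions import Fraction


def transpose(M):
    return [list(col) for col in zip(*M)]


def matmul(M, N):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*N)] for row in M]


def matvec(M, x):
    return [sum(a * b for a, b in zip(row, x)) for row in M]


def least_squares_2col(A, b):
    """Solve the normal equations A^T A x = A^T b for a matrix with two
    linearly independent columns, using Cramer's rule on the 2x2 system."""
    A = [[Fraction(v) for v in row] for row in A]
    b = [Fraction(v) for v in b]
    At = transpose(A)
    (g11, g12), (g21, g22) = matmul(At, A)  # Gram matrix A^T A
    h1, h2 = matvec(At, b)                  # right-hand side A^T b
    det = g11 * g22 - g12 * g21             # nonzero when the columns are independent
    return [(h1 * g22 - g12 * h2) / det, (g11 * h2 - g21 * h1) / det]


A = [[1, 0], [1, 1], [1, 2]]  # hypothetical data: fit y = c0 + c1 * t at t = 0, 1, 2
b = [1, 2, 2]
x_hat = least_squares_2col(A, b)
residual = [bi - yi for bi, yi in zip(b, matvec(A, x_hat))]
print([str(c) for c in x_hat])         # → ['7/6', '1/2']
# A^T r = 0: the residual is orthogonal to every column, so it lies in N(A^T).
print(matvec(transpose(A), residual))  # → [Fraction(0, 1), Fraction(0, 1)]
```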

Common Misconceptions and Clarifications

The null space of a matrix consists of all vectors that the matrix maps to the zero vector, whereas the orthogonal complement is the set of vectors orthogonal to a given subspace; for matrices, that subspace is usually the row space or the column space. A common misconception is that the null space and the orthogonal complement are the same object. They are defined differently: the null space comes from a matrix transformation, while the orthogonal complement comes from a subspace together with an inner product. They coincide only in specific pairings: the Fundamental Theorem of Linear Algebra states that \( \mathcal{N}(A) = \operatorname{Row}(A)^\perp \) and \( \mathcal{N}(A^\top) = \operatorname{Col}(A)^\perp \), but the null space of \( A \) is generally not the orthogonal complement of its column space.
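A \( 2 \times 2 \) example, chosen for illustration, shows both halves of the distinction: the null space of \( A \) matches the orthogonal complement of its row space, but not that of its column space.

```latex
\begin{aligned}
A &= \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}, \qquad A\mathbf{x} = \begin{bmatrix} x_2 \\ 0 \end{bmatrix}
  \;\Longrightarrow\; \mathcal{N}(A) = \operatorname{span}\{\mathbf{e}_1\}, \\
\operatorname{Row}(A) &= \operatorname{span}\{\mathbf{e}_2\}
  \;\Longrightarrow\; \operatorname{Row}(A)^\perp = \operatorname{span}\{\mathbf{e}_1\} = \mathcal{N}(A), \\
\operatorname{Col}(A) &= \operatorname{span}\{\mathbf{e}_1\}
  \;\Longrightarrow\; \operatorname{Col}(A)^\perp = \operatorname{span}\{\mathbf{e}_2\} \neq \mathcal{N}(A).
\end{aligned}
```

The complement of the column space is instead the null space of the transpose: \( A^\top\mathbf{y} = (0, y_1) \), so \( \mathcal{N}(A^\top) = \operatorname{span}\{\mathbf{e}_2\} = \operatorname{Col}(A)^\perp \).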

Summary: Choosing Between Null Space and Orthogonal Complement

The null space of a matrix consists of all vectors that the matrix maps to zero, representing solutions to homogeneous linear systems, while the orthogonal complement comprises vectors orthogonal to a given subspace, characterizing constraints and projections. Selecting between null space and orthogonal complement depends on the problem context: null space is essential for solving equations and understanding kernel properties, whereas the orthogonal complement is critical in optimization, projections, and subspace decompositions. Understanding these distinctions enhances linear algebra applications in data analysis, signal processing, and machine learning feature extraction.

Null space Infographic

libterm.com
