T5 is a powerful text-to-text transformer model designed to handle a wide range of natural language processing tasks by converting all problems into a text generation format. Its versatile architecture enables efficient fine-tuning on various datasets, enhancing performance in translation, summarization, and question-answering tasks. Discover how T5 can revolutionize your approach to language models by exploring the full article.

Table of Comparison

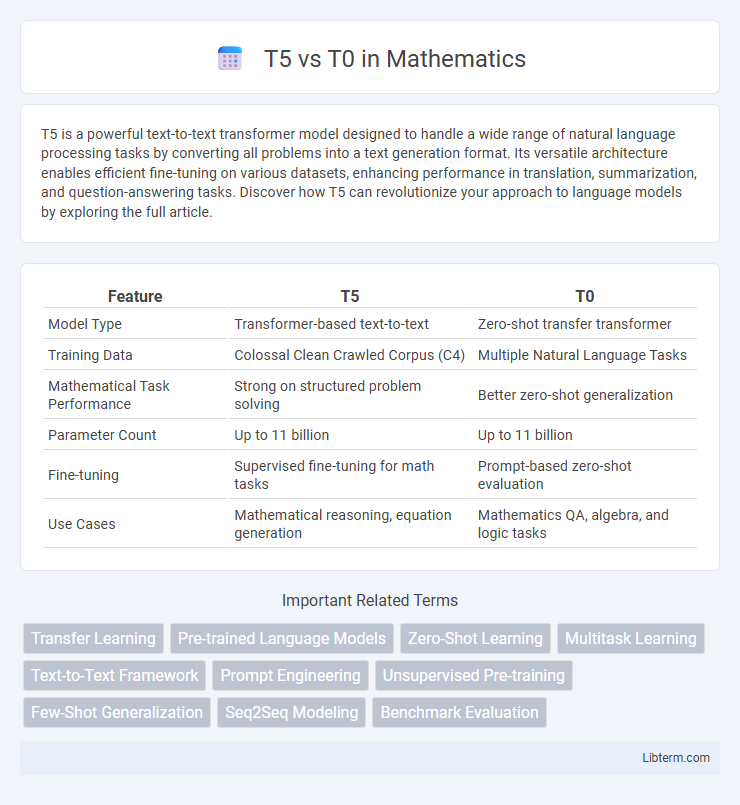

| Feature | T5 | T0 |

|---|---|---|

| Model Type | Transformer-based text-to-text | Zero-shot transfer transformer |

| Training Data | Colossal Clean Crawled Corpus (C4) | Multiple Natural Language Tasks |

| Mathematical Task Performance | Strong on structured problem solving | Better zero-shot generalization |

| Parameter Count | Up to 11 billion | Up to 11 billion |

| Fine-tuning | Supervised fine-tuning for math tasks | Prompt-based zero-shot evaluation |

| Use Cases | Mathematical reasoning, equation generation | Mathematics QA, algebra, and logic tasks |

Introduction to T5 and T0

T5 (Text-to-Text Transfer Transformer) is a versatile language model developed by Google that frames all NLP tasks as a text-to-text problem, improving transfer learning and achieving state-of-the-art results across diverse benchmarks. T0, based on T5, is fine-tuned using a multitask mixture of datasets combined with task-specific natural language prompts, enhancing zero-shot generalization and adaptability to unseen tasks. Both models leverage transformer architectures, but T0's prompt-based fine-tuning enables more effective task-oriented understanding compared to the original T5.

Background and Development

T5 (Text-to-Text Transfer Transformer) was developed by Google Research in 2019, designed to convert all NLP tasks into a unified text-to-text format, enabling efficient transfer learning across diverse benchmarks. T0, introduced by BigScience in 2021, builds on T5's framework but is fine-tuned using a large mixture of natural language prompts across various tasks, enhancing zero-shot generalization without task-specific fine-tuning. The development of T0 emphasizes leveraging prompt-based supervision and multi-task learning to improve adaptability and performance in unseen tasks.

Model Architectures Compared

T5 (Text-To-Text Transfer Transformer) employs an encoder-decoder architecture optimized for a wide range of NLP tasks by converting all tasks into text-to-text format, leveraging a large Transformer with up to 11 billion parameters in its largest variant. T0, building upon T5's architecture, incorporates prompt-based fine-tuning on a mixture of tasks to enhance zero-shot generalization, using the same encoder-decoder model but trained with multitask prompted data for better task adaptability. The key architectural difference lies in T0's adaptation process focusing on improved task conditioning via prompts rather than altering the underlying Transformer structure.

Training Objectives and Strategies

T5 employs a span-corruption objective, masking contiguous text spans for reconstruction, which enables robust unsupervised pretraining across diverse NLP tasks. T0 adapts T5's architecture but emphasizes multitask prompted training on a wide range of datasets, aligning model behavior to zero-shot generalization with natural language prompts. This strategy in T0 shifts from purely denoising objectives toward instruction-driven learning, improving performance on unseen tasks by leveraging prompt-based supervision during training.

Key Differences in Design

T5 utilizes a unified text-to-text framework transforming all NLP tasks into a text generation problem, enabling consistent model architecture and training objectives across diverse tasks. T0 builds on T5's foundation by incorporating multitask prompted training with a diverse set of datasets and natural language prompts, enhancing zero-shot generalization and adaptability. Unlike T5, T0 emphasizes instruction tuning to improve performance on unseen tasks without explicit task-specific fine-tuning.

Performance Benchmarks

T5 demonstrates strong performance across multiple natural language understanding benchmarks, including SuperGLUE and SQuAD, leveraging its encoder-decoder architecture for versatile text-to-text tasks. T0, built on T5 architecture but fine-tuned with a diverse mixture of tasks using prompt-based learning, surpasses T5 in zero-shot generalization capabilities and few-shot learning on unseen datasets. Benchmark comparisons reveal T0's superior adaptability in zero-shot settings, while T5 remains competitive in tasks with substantial fine-tuning data.

Applications and Use Cases

T5 excels in diverse natural language processing tasks such as text summarization, translation, and question answering by leveraging its encoder-decoder architecture for generating high-quality outputs. T0, designed for zero-shot learning, effectively performs a wide range of tasks without task-specific fine-tuning, making it ideal for scenarios requiring adaptability to new tasks like classification and prompt-based tasks. Both models demonstrate strong performance in applications involving few-shot and zero-shot learning, but T0's training on prompted datasets allows for broader generalization across unseen applications.

Strengths and Limitations

T5 excels in versatile text-to-text transformation tasks due to its large-scale pretraining on diverse datasets, enabling strong performance across summarization, translation, and question answering. T0, fine-tuned on prompt-based multitask learning, offers improved zero-shot generalization by leveraging natural language instructions, making it effective for tasks without explicit training examples. However, T5 may struggle with zero-shot tasks lacking fine-tuning, while T0 can be limited by its reliance on well-designed prompts and potentially lower performance on tasks outside its training distribution.

Community Adoption and Support

T5, developed by Google, benefits from a larger and more established community with extensive documentation and numerous open-source implementations, facilitating widespread adoption and continuous improvement. T0, introduced by Hugging Face based on instruction tuning techniques, has gained significant traction through its focus on zero-shot and few-shot learning capabilities, attracting researchers interested in generalization across tasks. Both models receive active support on platforms like GitHub and Hugging Face Hub, but T5's earlier release provides it with a more mature ecosystem and richer community-driven resources.

Future Prospects and Trends

T5 and T0 models continue to evolve with increased scalability and efficiency, showing significant promise for future natural language understanding applications. T5's flexible text-to-text framework is expected to expand across diverse domains, leveraging larger multilingual datasets and more refined pre-training strategies. The T0 model, optimized for zero-shot learning, is anticipated to advance transfer learning capabilities, enabling robust performance on unseen tasks, driving trends towards generalized AI systems.

T5 Infographic

libterm.com

libterm.com