HTTP streaming delivers data by keeping a single HTTP response open and sending new content to the client in small chunks as soon as it becomes available, instead of waiting for the client to ask for it. This article compares HTTP streaming with short polling, where the client repeatedly requests updates at fixed intervals, and explains the trade-offs in latency, server load, bandwidth, and complexity that determine which approach fits your application.

Table of Comparison

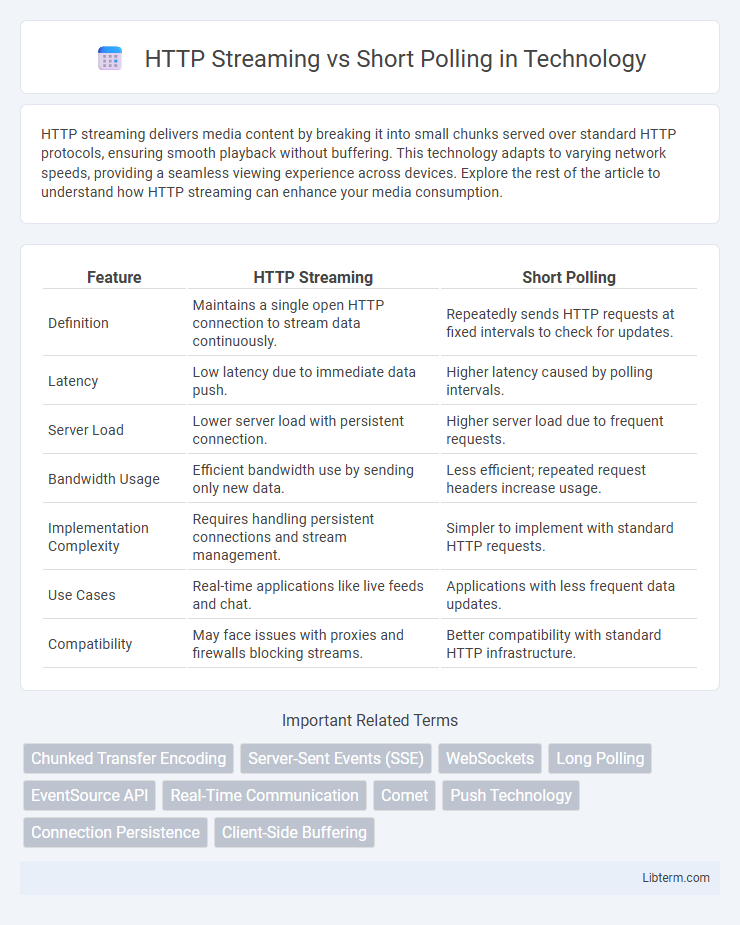

| Feature | HTTP Streaming | Short Polling |
|---|---|---|
| Definition | Keeps a single HTTP connection open and streams data over it continuously. | Sends HTTP requests at fixed intervals to check for updates. |
| Latency | Low: the server pushes data as soon as it is available. | Higher: new data waits until the next poll. |
| Server Load | Few requests to process, but every client holds an open connection. | Many short requests, each processed and answered in full. |
| Bandwidth Usage | Efficient: headers are sent once, then only new data follows. | Less efficient: every request repeats the headers, even when nothing has changed. |
| Implementation Complexity | Requires managing persistent connections and streams. | Simple to implement with standard HTTP requests. |
| Use Cases | Real-time applications such as live feeds and chat. | Applications with infrequent data updates. |
| Compatibility | Some proxies and firewalls buffer or cut off long-lived responses. | Works with standard HTTP infrastructure. |

Introduction to HTTP Streaming and Short Polling

HTTP Streaming maintains a persistent connection between the client and server, allowing data to flow continuously in real time without reopening connections. Short Polling has the client send repeated HTTP requests at regular intervals to check for new data, which adds latency and increases server load. For real-time applications, HTTP Streaming is usually more efficient: it avoids the overhead of repeated requests and delivers each update as soon as it occurs, instead of at the next scheduled poll.

How HTTP Streaming Works

HTTP Streaming keeps a single HTTP connection open between the client and server, so data flows continuously without repeated requests. The server sends data as a series of small chunks or events (in HTTP/1.1, typically using chunked transfer encoding), which enables real-time updates with minimal latency. This reduces overhead compared to short polling, where the client repeatedly sends requests at intervals to check for new data.
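To make the mechanism concrete, here is a minimal sketch using only Python's standard library (`http.server` and `http.client`). The handler keeps one HTTP/1.1 response open and writes three updates as separate chunks with a short pause between them; the client reads each line as its chunk arrives. The `/updates` path, the three-message payload, and the 0.1-second pause are illustrative assumptions, not part of any real API.

```python
import http.client
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class StreamHandler(BaseHTTPRequestHandler):
    # Chunked transfer encoding is an HTTP/1.1 feature.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Transfer-Encoding", "chunked")
        self.end_headers()
        for i in range(3):
            payload = f"update {i}\n".encode()
            # One chunk = hex length, CRLF, payload, CRLF; sent as soon as it is ready.
            self.wfile.write(f"{len(payload):X}\r\n".encode() + payload + b"\r\n")
            self.wfile.flush()
            time.sleep(0.1)  # stand-in for waiting on the next real event
        self.wfile.write(b"0\r\n\r\n")  # a zero-length chunk ends the response

    def log_message(self, format, *args):
        pass  # silence per-request logging


def read_stream(port: int) -> list[str]:
    """Client side: send one request, then read lines as chunks arrive."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    conn.request("GET", "/updates")
    resp = conn.getresponse()
    lines = []
    while True:
        line = resp.readline()  # http.client strips the chunk framing for us
        if not line:
            break
        lines.append(line.decode().rstrip("\n"))
    conn.close()
    return lines


def run_demo() -> list[str]:
    # Port 0 asks the OS for any free port.
    server = ThreadingHTTPServer(("127.0.0.1", 0), StreamHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return read_stream(server.server_address[1])
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(run_demo())
```

Calling `run_demo()` starts the server on a free local port, streams the three updates over one request, and shuts the server down. A production service would more likely use an async framework, but the pattern on the wire (one request, many chunks) is the same.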

Understanding Short Polling Mechanism

Short polling has the client send HTTP requests to the server at fixed intervals to check for new data; the server answers each request immediately, even when nothing has changed. This adds latency and increases server load. By contrast, HTTP streaming keeps a connection open so the server can push data to the client in real time, avoiding the overhead of frequent requests. Short polling remains a reasonable choice for applications with infrequent data updates or environments where persistent connections are hard to maintain.
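The client side of short polling reduces to a loop: send a request, record anything new, wait a fixed interval, repeat. In this sketch, `fetch` is a hypothetical callable standing in for one complete HTTP request (for example, one built on `urllib.request.urlopen` against your own endpoint) that returns `None` when there is nothing new; `sleep` is injectable so the loop can be exercised without real waiting.

```python
import time
from typing import Callable, List, Optional


def short_poll(
    fetch: Callable[[], Optional[str]],
    interval_s: float,
    max_polls: int,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Ask for updates every interval_s seconds, max_polls times.

    fetch() is a hypothetical stand-in for one complete HTTP request/response;
    it returns None when the server has nothing new.
    """
    received: List[str] = []
    for attempt in range(max_polls):
        update = fetch()  # a full request is sent even if nothing changed
        if update is not None:
            received.append(update)
        if attempt < max_polls - 1:
            # Fixed wait: anything that arrives now is seen only at the next poll.
            sleep(interval_s)
    return received
```

With a fake `fetch` that returns `None`, `"msg-1"`, `None`, `"msg-2"` on successive calls, four polls at a 2-second interval collect the two messages, and half of the requests carried no new data.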

Key Differences Between HTTP Streaming and Short Polling

HTTP Streaming maintains a continuous open connection where the server pushes data to the client in real-time, ideal for live updates with minimal latency. Short Polling repeatedly sends HTTP requests at regular intervals, retrieving data updates only at specific polling times, which can lead to increased latency and higher resource consumption. HTTP Streaming is more efficient for frequent data transfer, while Short Polling suits scenarios with less frequent updates or limited server capabilities.
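The latency difference can be quantified with a simple model. Assume polls happen at t = 0, T, 2T, ... and ignore network transit time; an update created at time t is seen at the next poll, so it waits ceil(t / T) * T - t seconds. For updates arriving uniformly at random, that wait averages T / 2, while a streaming server can forward the update immediately. The function names below are illustrative.

```python
import math
from statistics import mean
from typing import Iterable


def polling_delay(event_time_s: float, interval_s: float) -> float:
    """Seconds an update waits before a poll sees it, assuming polls at t = 0, T, 2T, ..."""
    next_poll = math.ceil(event_time_s / interval_s) * interval_s
    return next_poll - event_time_s


def mean_polling_delay(event_times: Iterable[float], interval_s: float) -> float:
    """Average extra wait over a set of update times (streaming adds none in this model)."""
    return mean(polling_delay(t, interval_s) for t in event_times)


if __name__ == "__main__":
    # Updates spread evenly over 10 s and polled every 5 s: the mean wait is close to 5 / 2.
    events = [i / 100 for i in range(1000)]
    print(mean_polling_delay(events, interval_s=5.0))
```

Halving the polling interval halves the average delay but doubles the number of requests, which is the basic trade-off short polling cannot escape.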

Performance Comparison: Streaming vs Short Polling

HTTP Streaming maintains a persistent connection, so data flows continuously with lower latency and less per-request processing than short polling, which issues a new request (and often opens a new connection) for every check. Streaming minimizes network requests, whereas short polling introduces delays tied to the polling interval and retransmits full HTTP headers with every request. For real-time applications, HTTP Streaming therefore usually offers better responsiveness and scalability than the more resource-intensive short polling method.
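The header overhead can be estimated with back-of-the-envelope arithmetic. The sketch below assumes about 1,200 bytes of request plus response headers per poll and about 6 bytes of chunk framing per streamed message; both figures are hypothetical, and the estimate ignores HTTP/2 header compression (HPACK), which shrinks repeated headers considerably.

```python
def polling_header_bytes(duration_s: int, interval_s: int, header_bytes: int) -> int:
    """Header overhead for one client that polls every interval_s seconds."""
    polls = duration_s // interval_s
    return polls * header_bytes  # every poll resends request and response headers


def streaming_header_bytes(messages: int, header_bytes: int, framing_bytes: int) -> int:
    """Header overhead for one long-lived streamed response."""
    # Headers are sent once; each message then adds only its chunk framing.
    return header_bytes + messages * framing_bytes


if __name__ == "__main__":
    HEADER_BYTES = 1200  # assumed: ~800 B request headers + ~400 B response headers
    FRAMING_BYTES = 6  # assumed: chunk-size line plus CRLFs for a small chunk
    print(polling_header_bytes(3600, 5, HEADER_BYTES))  # one hour, polling every 5 s: 864000
    print(streaming_header_bytes(60, HEADER_BYTES, FRAMING_BYTES))  # one hour, 60 updates: 1560
```

Under these assumptions, one polling client spends roughly 864 KB per hour on headers alone, while the streaming client spends about 1.5 KB for the same hour, regardless of how many polls returned nothing.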

Scalability Implications

HTTP Streaming maintains a persistent connection, enabling real-time data transfer without the overhead of repeated HTTP requests, which helps it scale under high concurrency. Short Polling relies on frequent client requests to check for updates, so server load and network traffic grow with both the number of clients and the polling frequency, creating bottlenecks as the client base grows. Because every streaming client still holds an open connection that consumes server memory and sockets, efficient resource management and load balancing are critical to sustaining HTTP Streaming performance at scale.
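A rough capacity estimate shows how differently the two approaches load a server. Short polling with N clients and interval T generates N / T requests per second, and by Little's law about (N / T) multiplied by the request duration are in flight at once. Streaming generates almost no request traffic but keeps N connections open. The 300-second reconnect interval in the example is an assumption standing in for proxy timeouts and network drops; the other numbers are likewise illustrative.

```python
from dataclasses import dataclass


@dataclass
class LoadEstimate:
    requests_per_second: float
    concurrent_connections: float


def short_polling_load(clients: int, interval_s: float, request_duration_s: float) -> LoadEstimate:
    rps = clients / interval_s
    # Little's law: average requests in flight = arrival rate x time each one takes.
    return LoadEstimate(rps, rps * request_duration_s)


def streaming_load(clients: int, reconnect_interval_s: float) -> LoadEstimate:
    # Every client holds one open connection; new requests come only from (re)connects.
    return LoadEstimate(clients / reconnect_interval_s, float(clients))


if __name__ == "__main__":
    print(short_polling_load(10_000, interval_s=2.0, request_duration_s=0.05))
    print(streaming_load(10_000, reconnect_interval_s=300.0))
```

With 10,000 clients polling every 2 s at 50 ms per request, that is 5,000 requests per second but only about 250 in flight. The streaming case needs only about 33 new requests per second but 10,000 open connections, which is why per-connection memory and connection limits dominate capacity planning for streaming.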

Use Cases for HTTP Streaming

HTTP Streaming is ideal for real-time applications such as live sports updates, financial tickers, and online gaming where continuous data flow and low latency are crucial. It enables servers to push data instantly to clients without repeated connection overhead, making it efficient for use cases demanding immediate event-driven responses. Unlike short polling, HTTP Streaming reduces network traffic and server load by maintaining a persistent connection rather than frequent request cycles.
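For live feeds consumed by browsers, one common wire format for HTTP streaming is Server-Sent Events (media type `text/event-stream`), in which each message is a few `field: value` lines followed by a blank line. The helper below is a minimal sketch that formats a single event; the `score` event name and its payload are hypothetical.

```python
import json
from typing import Any, Optional


def format_sse(data: Any, event: Optional[str] = None, event_id: Optional[str] = None) -> str:
    """Encode one Server-Sent Events message as text."""
    lines = []
    if event_id is not None:
        # Lets a reconnecting client resume via the Last-Event-ID header.
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")  # named event type the client can listen for
    # Each line of the payload needs its own "data:" prefix.
    for part in json.dumps(data).splitlines():
        lines.append(f"data: {part}")
    return "\n".join(lines) + "\n\n"  # a blank line terminates the message
```

For example, `format_sse({"home": 2, "away": 1}, event="score", event_id="42")` returns `'id: 42\nevent: score\ndata: {"home": 2, "away": 1}\n\n'`; a server would write each such string to the open response and flush it immediately.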

Use Cases for Short Polling

Short polling is ideal for applications with low to moderate update frequencies, such as checking email inboxes or retrieving user notifications, where real-time delivery is not critical. It also suits environments with limited server resources or unreliable networks, because clients simply request updates periodically instead of maintaining persistent connections. Mobile apps and legacy systems often use short polling to balance bandwidth consumption and server load while providing timely, though not instantaneous, data refreshes.
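On the server, a short-polling endpoint for something like notifications answers immediately with whatever is new since the client's last request, returning an empty list rather than waiting. The sketch below assumes each notification has an increasing integer ID that the client sends back as a cursor; `NotificationStore` and its methods are hypothetical names, and the in-memory list stands in for a database query.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class NotificationStore:
    """Hypothetical in-memory store; a real service would query a database."""

    items: List[Tuple[int, str]] = field(default_factory=list)
    next_id: int = 1

    def add(self, text: str) -> int:
        item_id = self.next_id
        self.items.append((item_id, text))
        self.next_id += 1
        return item_id

    def poll(self, since_id: int) -> Tuple[List[str], int]:
        """Handle one short-poll request: return new items and the updated cursor.

        The answer is immediate; an empty list simply means "nothing new yet".
        """
        new_items = [(i, text) for i, text in self.items if i > since_id]
        cursor = new_items[-1][0] if new_items else since_id
        return [text for _, text in new_items], cursor
```

The client stores the returned cursor and sends it with its next poll, so each response carries only notifications it has not already seen.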

Security Considerations

Both approaches should run over TLS (HTTPS) to protect data in transit. Short polling authenticates every request, so credentials or tokens are transmitted repeatedly, and its constant stream of requests makes polling endpoints more exposed to denial-of-service (DoS) floods; robust authentication and per-client rate limiting are the main defenses. HTTP streaming authenticates once when the connection opens, so long-lived sessions must still be re-validated when tokens expire or are revoked. Because each open stream ties up server resources, secure streaming also requires vigilant resource management, such as connection limits and idle timeouts, to prevent resource-exhaustion attacks.
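Rate limiting for a polling endpoint is often implemented as a token bucket per client: each client may burst up to a fixed number of requests, and its allowance refills at a steady rate. This is a minimal in-memory sketch, assuming requests are keyed by some client identifier (an API token or user ID, for instance); the class names and parameters are illustrative, and the clock is injectable for testing.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the caller would typically answer 429 Too Many Requests


class PollRateLimiter:
    """One bucket per client ID (a hypothetical key, e.g. an API token or user ID)."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_id: str) -> bool:
        if client_id not in self.buckets:
            self.buckets[client_id] = TokenBucket(self.capacity, self.rate, self.clock)
        return self.buckets[client_id].allow()
```

With `capacity=2` and `rate=0.5`, a client can make two back-to-back polls and then roughly one every 2 seconds. A deployment with several servers would need the bucket state in shared storage rather than a local dictionary.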

Choosing the Right Approach for Your Application

HTTP Streaming maintains an open connection to deliver real-time data continuously, making it ideal for applications requiring instant updates, such as live sports scores or chat systems. Short polling repeatedly sends HTTP requests at fixed intervals, better suited for less time-sensitive data or when server resources are limited, like periodic status checks in dashboard apps. Evaluate your application's latency sensitivity, server load, and complexity to select between HTTP Streaming's persistent connection and short polling's intermittent requests for optimal performance and resource management.

HTTP Streaming Infographic

libterm.com
