The nuclear norm is a matrix norm defined as the sum of the singular values of a matrix, often used in convex optimization problems to promote low-rank solutions. It is particularly valuable in machine learning and signal processing for tasks like matrix completion and compressed sensing. This article compares it with the spectral norm, which is the largest singular value of a matrix.

Table of Comparison

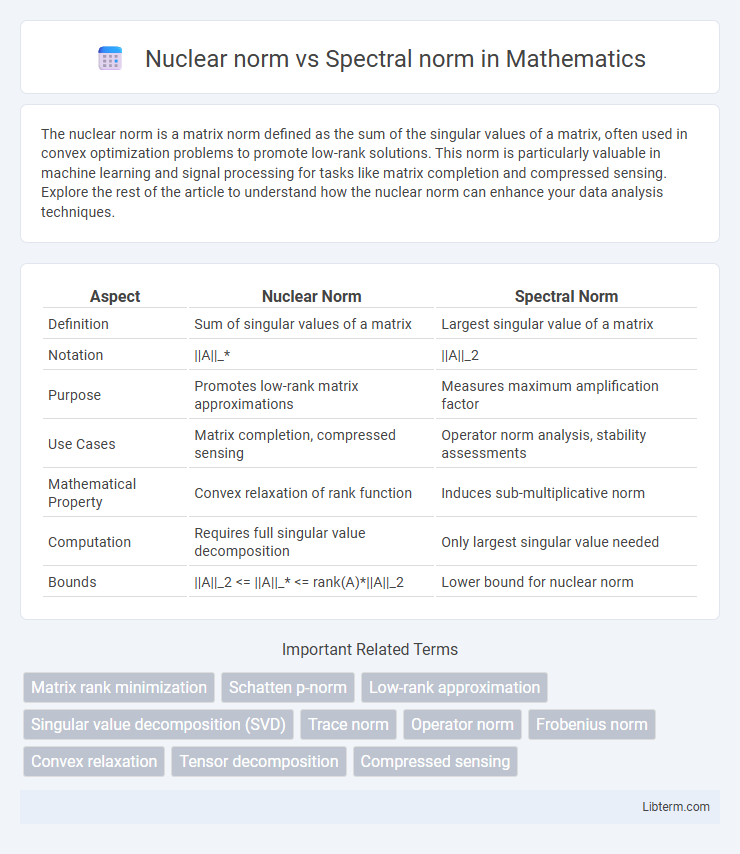

| Aspect | Nuclear Norm | Spectral Norm |
|---|---|---|
| Definition | Sum of the singular values of a matrix | Largest singular value of a matrix |
| Notation | $\lVert A \rVert_*$ | $\lVert A \rVert_2$ |
| Purpose | Promotes low-rank matrix approximations | Measures the maximum amplification factor |
| Use Cases | Matrix completion, compressed sensing | Operator norm analysis, stability assessments |
| Mathematical Property | Convex relaxation of the rank function | Operator norm induced by the vector 2-norm; sub-multiplicative |
| Computation | Requires all singular values, typically via an SVD | Only the largest singular value is needed |
| Bounds | $\lVert A \rVert_2 \le \lVert A \rVert_* \le \operatorname{rank}(A)\,\lVert A \rVert_2$ | Lower bound for the nuclear norm |

Introduction to Matrix Norms

Matrix norms quantify the size of a matrix and are essential tools in numerical analysis and optimization. The nuclear norm, defined as the sum of the singular values, promotes low-rank matrix approximations, which makes it central to matrix completion and compressed sensing. The spectral norm is the largest singular value. It measures how much the matrix can stretch a vector and is often used to bound operator behavior in stability and error analysis.

Defining the Nuclear Norm

The nuclear norm, also known as the trace norm, is defined as the sum of the singular values of a matrix and serves as a convex proxy for rank in optimization. It is widely used in matrix completion and low-rank approximation problems because penalizing it drives small singular values to zero, that is, it promotes sparsity in the singular values. Unlike the spectral norm, which measures only the largest singular value, the nuclear norm captures the combined magnitude of all singular values. On the set of matrices with spectral norm at most 1, it is the tightest convex lower bound on the rank function.
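To make the "sparsity in singular values" point concrete, here is a minimal sketch of singular value soft-thresholding, the proximal operator of the nuclear norm. NumPy, the helper name `singular_value_threshold`, the threshold `tau=0.5`, and the synthetic test matrix are all assumptions made for illustration; none of them come from the article.

```python
import numpy as np

def singular_value_threshold(A, tau):
    """Shrink every singular value of A by tau and clip at zero.

    This is the proximal operator of tau * (nuclear norm); the name is
    hypothetical and chosen only for this example.
    """
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)  # small singular values become exactly zero
    return (U * s_shrunk) @ Vt           # rebuild the matrix from the shrunk spectrum

rng = np.random.default_rng(0)
# Illustrative data: a rank-2 matrix plus a small amount of noise
low_rank = rng.standard_normal((8, 2)) @ rng.standard_normal((2, 6))
noisy = low_rank + 0.01 * rng.standard_normal((8, 6))

denoised = singular_value_threshold(noisy, tau=0.5)

print("rank before thresholding:", np.linalg.matrix_rank(noisy))
print("rank after thresholding: ", np.linalg.matrix_rank(denoised))
print("nuclear norm before:", np.linalg.norm(noisy, "nuc"))
print("nuclear norm after: ", np.linalg.norm(denoised, "nuc"))
```

Shrinking the whole spectrum by a fixed amount removes the small, noise-level singular values entirely, which is how a nuclear norm penalty ends up lowering rank.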

Understanding the Spectral Norm

The spectral norm of a matrix is defined as its largest singular value, representing the maximum stretching factor the matrix applies to any unit vector. It plays a crucial role in applications like stability analysis and operator theory, providing a measure of the matrix's maximum amplification capability. Understanding the spectral norm is essential for assessing matrix behavior in optimization problems and numerical linear algebra, contrasting with the nuclear norm which sums all singular values to promote low-rank structures.
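The "maximum stretching factor" description can be checked numerically. The sketch below assumes NumPy and a random 5×4 matrix chosen only for illustration: it compares the largest singular value with the stretch applied to many random unit vectors and to the top right singular vector.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.standard_normal((5, 4))  # illustrative matrix, not from the article

U, s, Vt = np.linalg.svd(A)
sigma_max = s[0]   # spectral norm: the largest singular value
v_top = Vt[0]      # unit vector along which the maximum stretch is attained

# Stretch factors ||A x|| for 1000 random unit vectors x
X = rng.standard_normal((4, 1000))
X /= np.linalg.norm(X, axis=0)          # normalize each column to unit length
stretch = np.linalg.norm(A @ X, axis=0)

print("largest singular value:          ", sigma_max)
print("max stretch over random vectors: ", stretch.max())
print("stretch along top singular vector:", np.linalg.norm(A @ v_top))
```

No random direction is stretched by more than the largest singular value, and the top right singular vector attains it.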

Mathematical Formulations

The nuclear norm of a matrix $A$, denoted $\lVert A \rVert_*$, is the sum of its singular values. It is the convex envelope of the rank function on the unit ball of the spectral norm, which is why minimizing it promotes low-rank solutions. The spectral norm, $\lVert A \rVert_2$, is the largest singular value and measures the maximum stretching factor of the matrix as a linear operator. Both norms follow from the singular value decomposition (SVD): if $\sigma_1 \ge \sigma_2 \ge \dots \ge \sigma_r$ are the nonzero singular values of $A$, then $\lVert A \rVert_* = \sum_{i=1}^{r} \sigma_i$ and $\lVert A \rVert_2 = \sigma_1$.
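As a quick numerical check of these definitions, the sketch below computes both norms from the singular values and compares them with NumPy's built-in `np.linalg.norm`. The use of NumPy and the small example matrix, whose singular values are 4 and 3, are assumptions made for illustration.

```python
import numpy as np

# Illustrative 3x2 matrix with singular values 4 and 3
A = np.array([[3.0, 0.0],
              [0.0, 4.0],
              [0.0, 0.0]])

s = np.linalg.svd(A, compute_uv=False)  # singular values in descending order
nuclear = s.sum()                       # nuclear norm: sum of singular values
spectral = s[0]                         # spectral norm: largest singular value
rank = np.linalg.matrix_rank(A)

print("singular values:", s)
print("nuclear norm:   ", nuclear, "| built-in:", np.linalg.norm(A, "nuc"))
print("spectral norm:  ", spectral, "| built-in:", np.linalg.norm(A, 2))

# Sandwich bound: spectral <= nuclear <= rank * spectral
print("bound holds:", spectral <= nuclear <= rank * spectral)
```

The final line checks the bound that ties the two norms together: the nuclear norm is at least the spectral norm and at most the rank times the spectral norm.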

Key Differences: Nuclear vs Spectral Norm

The nuclear norm, also known as the trace norm, is the sum of the singular values of a matrix, often used as a convex surrogate for rank minimization in matrix completion problems. In contrast, the spectral norm corresponds to the largest singular value, reflecting the maximum stretching factor of the matrix in any direction. While the nuclear norm promotes low-rank solutions by aggregating all singular values, the spectral norm measures the operator norm and is sensitive only to the dominant singular direction.

Applications in Machine Learning

The nuclear norm and the spectral norm both serve as regularizers in machine learning, but for different goals. Penalizing the nuclear norm promotes low-rank solutions, which makes it effective for matrix completion, collaborative filtering, dimensionality reduction, and robust principal component analysis. Constraining the spectral norm bounds the largest singular value of each weight matrix, which helps stabilize the training of deep neural networks and can improve generalization.
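One simple way to constrain a weight matrix is to rescale it so that its largest singular value equals 1, which is the basic idea behind spectral normalization. The sketch below assumes NumPy; the function name `spectrally_normalize` and the random weights are hypothetical, and real training code usually estimates the largest singular value iteratively instead of recomputing it exactly.

```python
import numpy as np

def spectrally_normalize(W, eps=1e-12):
    """Rescale W so its spectral norm is 1 (hypothetical helper for illustration)."""
    sigma = np.linalg.norm(W, 2)  # exact largest singular value
    return W / max(sigma, eps)    # eps guards against division by zero

rng = np.random.default_rng(2)
W = 3.0 * rng.standard_normal((64, 32))  # illustrative layer weights
W_sn = spectrally_normalize(W)

# After normalization the linear map x -> W_sn @ x is 1-Lipschitz:
x = rng.standard_normal(32)
y = rng.standard_normal(32)
gap_out = np.linalg.norm(W_sn @ x - W_sn @ y)
gap_in = np.linalg.norm(x - y)

print("spectral norm before:", np.linalg.norm(W, 2))
print("spectral norm after: ", np.linalg.norm(W_sn, 2))
print("output gap <= input gap:", gap_out <= gap_in)
```

Because the normalized layer cannot amplify any input difference, stacking such layers keeps the overall sensitivity of the network bounded.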

Computational Complexity Comparison

The nuclear norm requires all singular values. Computing them with a dense singular value decomposition (SVD) costs O(mn · min(m, n)) for an m×n matrix, that is, O(mn^2) when m ≥ n, which is expensive for large-scale problems. The spectral norm needs only the largest singular value, which can be approximated with iterative methods such as power iteration. Each iteration costs O(mn) for a dense matrix, so k iterations cost O(mnk), and the number of iterations needed depends on the gap between the two largest singular values. Consequently, spectral norm calculations are often faster and more scalable than nuclear norm computations in practice.
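The power iteration mentioned above can be sketched as follows. This is a minimal version assuming NumPy, a fixed iteration count, and a random test matrix; the function name and its parameters are hypothetical, and production code would normally add a convergence check.

```python
import numpy as np

def spectral_norm_power_iteration(A, num_iters=200, seed=0):
    """Estimate the largest singular value of A by power iteration on A^T A."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(A.shape[1])
    v /= np.linalg.norm(v)
    for _ in range(num_iters):
        w = A.T @ (A @ v)          # two matrix-vector products, O(mn) each
        norm_w = np.linalg.norm(w)
        if norm_w == 0.0:          # A maps v to zero; this only happens for degenerate input
            return 0.0
        v = w / norm_w             # renormalize to keep the iterate at unit length
    return np.linalg.norm(A @ v)   # stretch along the estimated top direction

rng = np.random.default_rng(3)
A = rng.standard_normal((200, 50))  # illustrative matrix

estimate = spectral_norm_power_iteration(A)
exact = np.linalg.norm(A, 2)        # reference value from a full SVD

print("power iteration estimate:", estimate)
print("exact spectral norm:     ", exact)
```

The estimate is the stretch along a unit vector, so it can never exceed the true spectral norm; it approaches it from below as the iterate aligns with the top singular direction.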

Advantages and Limitations

Minimizing the nuclear norm minimizes the sum of the singular values, which promotes low-rank matrix approximations and gives a tight convex relaxation of rank constraints in problems such as matrix completion. The spectral norm focuses on the largest singular value. It is well suited to controlling operator norms and to stability arguments in perturbation theory, but it says nothing about rank and is therefore a poor tool for rank minimization. The nuclear norm excels at inducing sparsity in the singular values but is more expensive to compute, whereas the spectral norm is cheaper to compute but ignores the structure carried by the rest of the singular value spectrum.

Practical Use Cases

The nuclear norm is widely used in matrix completion problems such as collaborative filtering in recommendation systems because it promotes low-rank solutions. The spectral norm is used in stability analysis of control systems and as a regularizer in machine learning models, where constraining the largest singular value improves robustness. Both norms are convex, but only the nuclear norm acts as a convex relaxation of rank. The choice depends on whether the application needs a low-rank solution (nuclear norm) or a bound on the maximum singular value (spectral norm).
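A small matrix completion example shows how nuclear norm regularization is used in practice. The sketch below follows a Soft-Impute-style iteration: fill the missing entries with the current estimate, then soft-threshold the singular values. NumPy, the helper names, the penalty `lam=0.1`, the 60% observation rate, and the synthetic rank-3 data are all assumptions made for illustration.

```python
import numpy as np

def svt(A, tau):
    """Soft-threshold the singular values of A by tau."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return (U * np.maximum(s - tau, 0.0)) @ Vt

def soft_impute(M, mask, lam=0.1, num_iters=200):
    """Approximately minimize 0.5*||mask*(M - X)||_F^2 + lam*||X||_* over X."""
    X = np.zeros_like(M)
    for _ in range(num_iters):
        filled = np.where(mask, M, X)  # observed entries from M, the rest from X
        X = svt(filled, lam)           # shrink the spectrum of the filled matrix
    return X

def objective(X, M, mask, lam):
    """Data-fit on observed entries plus the nuclear norm penalty."""
    resid = np.where(mask, M - X, 0.0)
    return 0.5 * np.sum(resid ** 2) + lam * np.linalg.norm(X, "nuc")

rng = np.random.default_rng(0)
truth = rng.standard_normal((20, 3)) @ rng.standard_normal((3, 15))  # rank-3 "ratings"
mask = rng.random(truth.shape) < 0.6   # True where an entry is observed
M = np.where(mask, truth, 0.0)         # unobserved entries are unknown (stored as 0)

X_hat = soft_impute(M, mask, lam=0.1)
rel_err = np.linalg.norm((X_hat - truth)[~mask]) / np.linalg.norm(truth[~mask])

print("objective at X = 0:   ", objective(np.zeros_like(M), M, mask, 0.1))
print("objective at estimate:", objective(X_hat, M, mask, 0.1))
print("relative error on unobserved entries:", rel_err)
```

Each iteration cannot increase the objective, so the estimate is always at least as good as the all-zeros starting point. How well the unobserved entries are recovered depends on the rank, the fraction of observed entries, and the choice of `lam`.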

Conclusion and Future Perspectives

The nuclear norm and spectral norm serve distinct purposes in matrix analysis, with the nuclear norm promoting low-rank approximations valuable in machine learning and signal processing, while the spectral norm measures the largest singular value, crucial for stability and robustness assessments. Emerging research explores hybrid norms and adaptive techniques to leverage advantages of both, potentially enhancing optimization frameworks and regularization methods. Future perspectives emphasize integrating these norms into scalable algorithms and deep learning models to improve performance in high-dimensional data applications.

libterm.com