Eigenvalues are fundamental to understanding linear transformations and matrix behavior, revealing key properties such as the stability of a system and its natural (resonance) frequencies. They play a central role in physics, engineering, and machine learning by simplifying complex computations and exposing the dynamics of a system. This article compares eigenvalues with the spectral radius, the largest eigenvalue magnitude, and shows where each is used in practice.

Table of Comparison

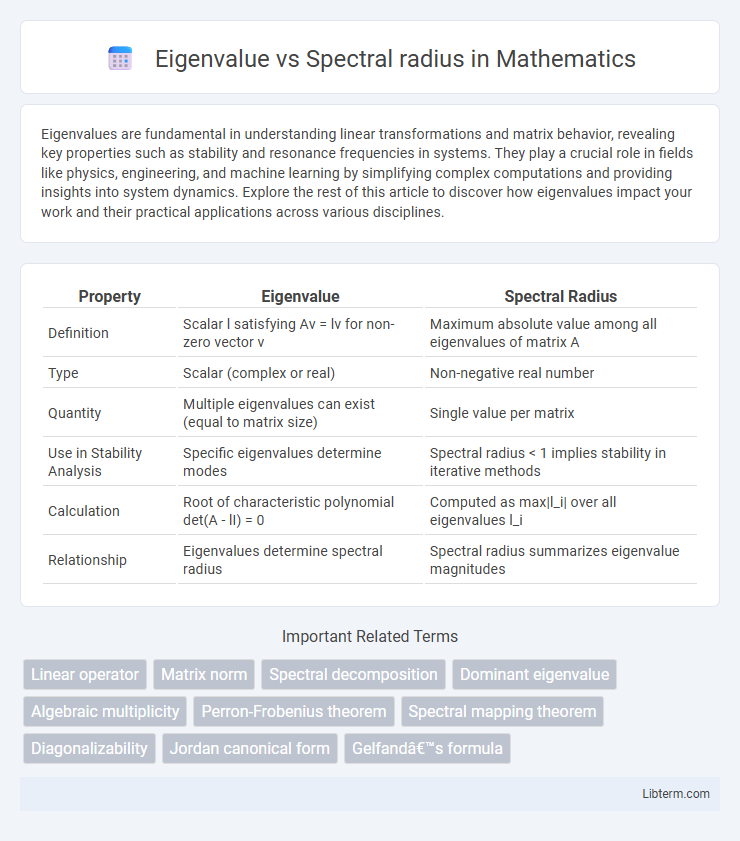

| Property | Eigenvalue | Spectral Radius |
|---|---|---|
| Definition | Scalar λ satisfying Av = λv for some non-zero vector v | Maximum absolute value among all eigenvalues of matrix A |
| Type | Scalar (real or complex) | Non-negative real number |
| Quantity | An n × n matrix has exactly n eigenvalues over the complex numbers, counted with algebraic multiplicity | Single value per matrix |
| Use in Stability Analysis | Individual eigenvalues determine a system's modes; continuous-time stability requires every eigenvalue to have a negative real part | ρ(G) < 1 is necessary and sufficient for the iteration x ← Gx + c to converge from every starting vector |
| Calculation | Roots of the characteristic polynomial det(A − λI) = 0 | ρ(A) = max \|λᵢ\| over all eigenvalues λᵢ |
| Relationship | Eigenvalues determine the spectral radius | Spectral radius summarizes eigenvalue magnitudes |

Introduction to Eigenvalues and Spectral Radius

Eigenvalues are the scalars associated with a square matrix that indicate how much each corresponding eigenvector is scaled by the linear transformation: stretched, shrunk, or reversed in direction (complex eigenvalues additionally encode rotation). The spectral radius is the largest absolute value among all eigenvalues of a matrix and serves as a key measure in stability analysis. The relationship between the two is essential in systems theory, numerical analysis, and network dynamics.
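To make the scaling interpretation concrete, the following minimal Python sketch checks \(A\mathbf{v} = \lambda \mathbf{v}\) directly on a small symmetric matrix chosen for illustration. It uses plain lists rather than a linear-algebra library, and the helper names `mat_vec` and `is_eigenpair` are hypothetical.

```python
def mat_vec(A, v):
    """Multiply a square matrix (given as a list of rows) by a vector."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in A]


def is_eigenpair(A, v, lam, tol=1e-12):
    """Return True if v is non-zero and A v equals lam * v within tol."""
    if all(abs(x) <= tol for x in v):
        return False  # the zero vector is never an eigenvector
    Av = mat_vec(A, v)
    return all(abs(Av_i - lam * v_i) <= tol for Av_i, v_i in zip(Av, v))


A = [[2.0, 1.0],
     [1.0, 2.0]]

print(mat_vec(A, [1.0, 1.0]))             # [3.0, 3.0], i.e. 3 * v
print(is_eigenpair(A, [1.0, 1.0], 3.0))   # True: v is stretched by a factor of 3
print(is_eigenpair(A, [1.0, -1.0], 1.0))  # True: v is left unchanged (factor 1)
print(is_eigenpair(A, [1.0, 0.0], 2.0))   # False: A v = [2, 1] is not a multiple of v
```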

Defining Eigenvalues in Linear Algebra

In linear algebra, eigenvalues are scalars that characterize the behavior of a linear transformation represented by a matrix; they are the solutions of the characteristic equation \(\det(A - \lambda I) = 0\). The spectral radius of a matrix is the largest absolute value among its eigenvalues, providing insight into the matrix's stability and convergence properties. Eigenvalues are also central to diagonalization, computing matrix powers, and analyzing the long-term dynamics of linear systems.
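For a 2 × 2 matrix the characteristic equation is a quadratic, \(\lambda^2 - \operatorname{tr}(A)\,\lambda + \det(A) = 0\), so the eigenvalues can be written in closed form. The sketch below (plain Python; `eigenvalues_2x2` is a hypothetical helper) applies the quadratic formula. It is meant for intuition, not as a general-purpose eigenvalue solver.

```python
import cmath


def eigenvalues_2x2(A):
    """Eigenvalues of a 2x2 matrix [[a, b], [c, d]] from its characteristic polynomial.

    det(A - lam*I) = (a - lam)(d - lam) - b*c = lam**2 - trace*lam + det,
    so the two roots follow from the quadratic formula. cmath.sqrt is used so
    that a negative discriminant yields a complex-conjugate pair.
    """
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    disc = cmath.sqrt(trace * trace - 4 * det)
    return (trace + disc) / 2, (trace - disc) / 2


print(eigenvalues_2x2([[2, 1], [1, 2]]))   # eigenvalues 3 and 1 (as complex numbers)
print(eigenvalues_2x2([[0, -1], [1, 0]]))  # eigenvalues i and -i: a 90-degree rotation
```

The second call shows that a real matrix can have complex eigenvalues, which is why the square root comes from `cmath` rather than `math`.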

Understanding the Spectral Radius Concept

The spectral radius of a matrix is the largest absolute value among its eigenvalues and describes the dominant long-term behavior of the linear transformation. It plays a crucial role in stability analysis, especially in iterative methods and discrete-time dynamical systems, where convergence requires the spectral radius to be less than one. Because it depends only on eigenvalue magnitudes, not on their signs or complex phases, it is closely tied to matrix norms: \(\rho(A) \le \|A\|\) for every induced matrix norm.

Mathematical Differences: Eigenvalue vs Spectral Radius

Eigenvalues are scalar values associated with a square matrix that satisfy the equation \(A \mathbf{v} = \lambda \mathbf{v}\), where \(\lambda\) represents an eigenvalue and \(\mathbf{v}\) is a corresponding eigenvector. The spectral radius of a matrix is the largest absolute value among all of its eigenvalues, denoted as \(\rho(A) = \max \{|\lambda_i|\}\). While eigenvalues can be complex numbers with varying magnitudes and phases, the spectral radius condenses this information into a single nonnegative scalar reflecting the dominant eigenvalue magnitude.
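The sketch below, assuming 2 × 2 matrices and reusing the closed-form quadratic for illustration (`spectral_radius_2x2` is a hypothetical name), shows how the spectral radius discards phase and sign information: only the moduli \(|\lambda_i|\) survive the `max`.

```python
import cmath


def spectral_radius_2x2(A):
    """rho(A) = max_i |lambda_i| for a 2x2 matrix, using its characteristic polynomial."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    disc = cmath.sqrt(trace * trace - 4 * det)
    eigenvalues = [(trace + disc) / 2, (trace - disc) / 2]
    # abs() of a complex number is its modulus, so the phase is discarded here
    return max(abs(lam) for lam in eigenvalues)


# Eigenvalues 0.5 + 0.5i and 0.5 - 0.5i: different phases, same modulus sqrt(0.5)
print(spectral_radius_2x2([[0.5, -0.5], [0.5, 0.5]]))  # about 0.7071
# Eigenvalues 0.5 and -2: the largest eigenvalue is 0.5, but rho(A) = 2
print(spectral_radius_2x2([[0.5, 0.0], [0.0, -2.0]]))  # 2.0
```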

Calculation Methods for Eigenvalues and Spectral Radius

For small matrices, eigenvalues can be found by hand as the roots of the characteristic polynomial \(\det(A - \lambda I) = 0\). Numerical software avoids forming this polynomial, whose roots can be highly sensitive to rounding, and instead works directly on the matrix, using methods such as the QR algorithm for the full spectrum or power iteration for the dominant eigenvalue. The spectral radius can be computed by first determining all eigenvalues, or estimated through Gelfand's formula, \(\rho(A) = \lim_{k \to \infty} \|A^k\|^{1/k}\), which holds for any matrix norm. Iterative methods such as power iteration converge to the dominant eigenvalue's magnitude without a full spectral decomposition, provided a single eigenvalue has strictly the largest modulus.
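Both approaches can be sketched in a few lines of plain Python. The power-iteration function assumes a single eigenvalue of strictly largest modulus, the standard condition for the method to converge, and the Gelfand estimate uses the induced ∞-norm, although any matrix norm has the same limit. The function names and the test matrix are illustrative assumptions.

```python
def mat_vec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def mat_mul(A, B):
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def inf_norm(A):
    """Induced infinity-norm: the maximum absolute row sum."""
    return max(sum(abs(a) for a in row) for row in A)


def power_iteration(A, iters=200):
    """Estimate rho(A) by repeatedly applying A and rescaling.

    Assumes one eigenvalue has strictly the largest modulus and the
    starting vector has a non-zero component along its eigenvector.
    """
    v = [1.0] * len(A)
    growth = 0.0
    for _ in range(iters):
        w = mat_vec(A, v)
        growth = max(abs(x) for x in w)  # ||A v||_inf, since ||v||_inf == 1
        v = [x / growth for x in w]      # rescale so the largest |entry| is 1
    return growth


def gelfand_estimate(A, k):
    """||A^k||^(1/k) in the infinity-norm; tends to rho(A) as k grows."""
    P = A
    for _ in range(k - 1):
        P = mat_mul(P, A)
    return inf_norm(P) ** (1.0 / k)


A = [[2.0, 1.0], [1.0, 3.0]]   # eigenvalues (5 +/- sqrt(5)) / 2, so rho(A) is about 3.618
print(power_iteration(A))       # close to 3.618
print(gelfand_estimate(A, 50))  # slightly above 3.618; the gap shrinks as k grows
```

Since \(\rho(A^k) = \rho(A)^k \le \|A^k\|\), the Gelfand estimate always approaches \(\rho(A)\) from above, while power iteration converges at a rate set by \(|\lambda_2| / |\lambda_1|\), here about 0.38.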

Applications in Mathematics and Engineering

Eigenvalues provide critical insights into the stability and behavior of linear systems in both mathematics and engineering, and they are routinely used to solve linear differential equations and analyze dynamic systems. The spectral radius serves as a key indicator of convergence: a stationary iterative method such as Jacobi or Gauss-Seidel converges for every starting vector if and only if the spectral radius of its iteration matrix is below one, and asymptotically the error shrinks by about that factor per step. Power iteration behaves differently: its convergence rate depends on the ratio \(|\lambda_2| / |\lambda_1|\) of the two largest eigenvalue magnitudes. Applications include vibration analysis in mechanical engineering, control system design in electrical engineering, and optimization problems in applied mathematics.
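As an illustration of a stationary iterative method, the sketch below runs Jacobi iteration on a small diagonally dominant system chosen for this example (the matrix, right-hand side, and function names are hypothetical). Its iteration matrix \(G = -D^{-1}(L + U)\) has eigenvalues \(\pm\sqrt{0.1}\), so \(\rho(G) \approx 0.316\); because \(G^2 = 0.1\,I\) for this particular matrix, the error shrinks by exactly \(\rho(G)^2 = 0.1\) every two steps, up to rounding.

```python
def jacobi(A, b, iters):
    """Jacobi iteration for A x = b from x_0 = 0; returns every iterate.

    Each new component uses only values from the previous iterate:
    x_i <- (b_i - sum_{j != i} A[i][j] * x_j) / A[i][i].
    """
    n = len(A)
    x = [0.0] * n
    history = [x]
    for _ in range(iters):
        # the comprehension reads the old x throughout, as Jacobi requires
        x = [(b[i] - sum(A[i][j] * x[j] for j in range(n) if j != i)) / A[i][i]
             for i in range(n)]
        history.append(x)
    return history


def max_error(x, exact):
    return max(abs(xi - ei) for xi, ei in zip(x, exact))


A = [[4.0, 1.0], [2.0, 5.0]]
b = [1.0, 2.0]
exact = [1 / 6, 1 / 3]  # solves A x = b exactly
# Iteration matrix G = -D^{-1}(L + U) = [[0, -1/4], [-2/5, 0]]: eigenvalues +/- sqrt(0.1)
rho_G = 0.1 ** 0.5

errors = [max_error(x, exact) for x in jacobi(A, b, 20)]
for k in range(0, 19, 2):
    # G @ G = 0.1 * I here, so each two-step ratio should be close to rho_G**2
    print(k, errors[k + 2] / errors[k], rho_G ** 2)
```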

Spectral Radius and Matrix Stability

The spectral radius of a matrix, defined as the largest absolute value among its eigenvalues, plays a critical role in assessing stability, especially for discrete-time dynamical systems and iterative methods. For a discrete-time system \(x_{k+1} = A x_k\), \(\rho(A) < 1\) holds if and only if the powers \(A^k\) converge to zero, so every trajectory decays to zero over time. For continuous-time systems \(\dot{x} = A x\), the relevant criterion is instead that every eigenvalue has a negative real part. Because \(\rho(A) \le \|A\|\) for any induced norm, finding a norm with \(\|A\| < 1\) is a sufficient test for \(\rho(A) < 1\) that does not require computing any eigenvalues.
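A minimal sketch of the discrete-time criterion, using two matrices chosen for illustration: each is a 90-degree rotation scaled by r, so its eigenvalues are \(\pm r i\) and \(\rho = r\). Starting from the same state, the trajectory of \(x_{k+1} = A x_k\) shrinks geometrically when r = 0.9 and grows when r = 1.1. The helper names are hypothetical.

```python
def mat_vec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def trajectory_norms(A, x0, steps):
    """max_i |x_k[i]| for k = 0 .. steps-1 of the system x_{k+1} = A x_k."""
    x, norms = x0, []
    for _ in range(steps):
        norms.append(max(abs(xi) for xi in x))
        x = mat_vec(A, x)
    return norms


# 90-degree rotations scaled by r: eigenvalues +/- r*i, so rho = r
stable = [[0.0, -0.9], [0.9, 0.0]]     # rho = 0.9 < 1
unstable = [[0.0, -1.1], [1.1, 0.0]]   # rho = 1.1 > 1

print(trajectory_norms(stable, [1.0, 0.0], 50)[-1])    # 0.9**49, roughly 0.0057
print(trajectory_norms(unstable, [1.0, 0.0], 50)[-1])  # 1.1**49, roughly 107
```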

Real-World Examples and Use Cases

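Eigenvalues and the spectral radius are central to stability analysis in engineering. In structural and mechanical engineering, the eigenvalues of the generalized problem \(K\phi = \omega^2 M\phi\) (stiffness matrix \(K\), mass matrix \(M\)) give the squared natural frequencies of a structure, which designers keep away from expected excitation frequencies to avoid resonance. In economics, the spectral radius of the input-output (Leontief) coefficient matrix determines whether the economy is productive: if \(\rho(A) < 1\), every non-negative final demand can be met with non-negative total output. In power systems, small-signal stability studies examine the eigenvalues of the linearized grid model to check that oscillatory modes are adequately damped, and some network models relate the spectral radius of the grid's adjacency matrix to how readily failures spread.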
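The input-output case can be sketched with a hypothetical two-sector Leontief model; the coefficients and demand below are made up for illustration. If \(A\) holds the technical coefficients and \(d\) the final demand, total output must satisfy \(x = Ax + d\). When \(\rho(A) < 1\), the Neumann series \(d + Ad + A^2 d + \cdots\) converges to \(x = (I - A)^{-1} d\), and the loop below simply sums that series.

```python
def mat_vec(A, v):
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def leontief_output(A, d, iters=100):
    """Total output x with x = A x + d, from partial sums of d + A d + A^2 d + ...

    Each pass computes x <- d + A x; starting from x = 0, after n passes x equals
    the sum of the first n terms. This converges only when rho(A) < 1.
    """
    x = [0.0] * len(d)
    for _ in range(iters):
        x = [d_i + ax_i for d_i, ax_i in zip(d, mat_vec(A, x))]
    return x


# Hypothetical coefficients: A[i][j] = input from sector i per unit output of sector j.
# Its eigenvalues are 0.5 and -0.2, so rho(A) = 0.5 < 1.
A = [[0.2, 0.3], [0.4, 0.1]]
d = [10.0, 20.0]  # hypothetical final demand

print(leontief_output(A, d))  # approximately [25.0, 33.333...]
```

A direct solve gives the same answer: \((I - A)^{-1} = \frac{1}{0.6}\begin{pmatrix} 0.9 & 0.3 \\ 0.4 & 0.8 \end{pmatrix}\), so \(x = (25, 33.\overline{3})\).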

Common Misconceptions and Pitfalls

An eigenvalue of a matrix is any scalar \(\lambda\) for which there exists a non-zero vector \(\mathbf{v}\) with \(A \mathbf{v} = \lambda \mathbf{v}\), whereas the spectral radius is the largest absolute value among all eigenvalues of the matrix. A common misconception is that the spectral radius equals the largest eigenvalue; it equals the largest eigenvalue magnitude, which may come from a negative or complex eigenvalue. Another pitfall is treating the spectral radius as if it were a matrix norm: it is only a lower bound, \(\rho(A) \le \|A\|\) for every induced norm, with equality in the 2-norm for normal (for example, symmetric) matrices. For non-normal matrices the norm can be much larger, so \(\|A^k\|\) may grow for a while before decaying even when \(\rho(A) < 1\); ignoring this leads to errors in stability analysis and in convergence predictions for iterative methods.
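The gap between spectral radius and norm is easy to see on a small non-normal matrix chosen for illustration (helper names are hypothetical). Its only eigenvalue is 0.9, so \(\rho(A) = 0.9\) and \(A^k \to 0\), yet \(\|A^k\|_\infty\) first grows from 1.9 to above 4 before decaying. Checking \(\|A\| < 1\) is therefore inconclusive here, and the transient amplification is invisible if one looks only at \(\rho(A)\).

```python
def mat_mul(A, B):
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def inf_norm(A):
    """Induced infinity-norm: the maximum absolute row sum."""
    return max(sum(abs(a) for a in row) for row in A)


# Upper triangular, so both eigenvalues equal the diagonal entry 0.9: rho(A) = 0.9 < 1.
# A is not normal, and its infinity-norm is 1.9 > 1.
A = [[0.9, 1.0], [0.0, 0.9]]

norms = []        # norms[k - 1] holds ||A^k||_inf for k = 1 .. 100
P = A
for _ in range(100):
    norms.append(inf_norm(P))
    P = mat_mul(P, A)

peak = max(norms)
print(norms[0])                      # about 1.9 = ||A||_inf
print(norms.index(peak) + 1, peak)   # peak at k = 9, about 4.26
print(norms[-1])                     # about 0.003: A^k still decays to zero
```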

Summary: Choosing Between Eigenvalue and Spectral Radius

When choosing between individual eigenvalues and the spectral radius, use the spectral radius to assess the convergence of iterative methods and the stability of discrete-time systems, since both depend only on the largest eigenvalue magnitude. Individual eigenvalues carry more detail (modes, oscillation frequencies, damping) and are needed for continuous-time stability, which depends on the signs of their real parts rather than on their magnitudes. In numerical analysis the spectral radius is often the deciding quantity; in control theory, the right choice depends on whether the system is modeled in discrete or continuous time.

Eigenvalue Infographic

libterm.com