Load balancing distributes network traffic across multiple servers to make good use of resources, reduce response time, and prevent any one server from being overloaded. Effective load balancing improves system reliability and scalability, which in turn improves your application's performance and user experience.

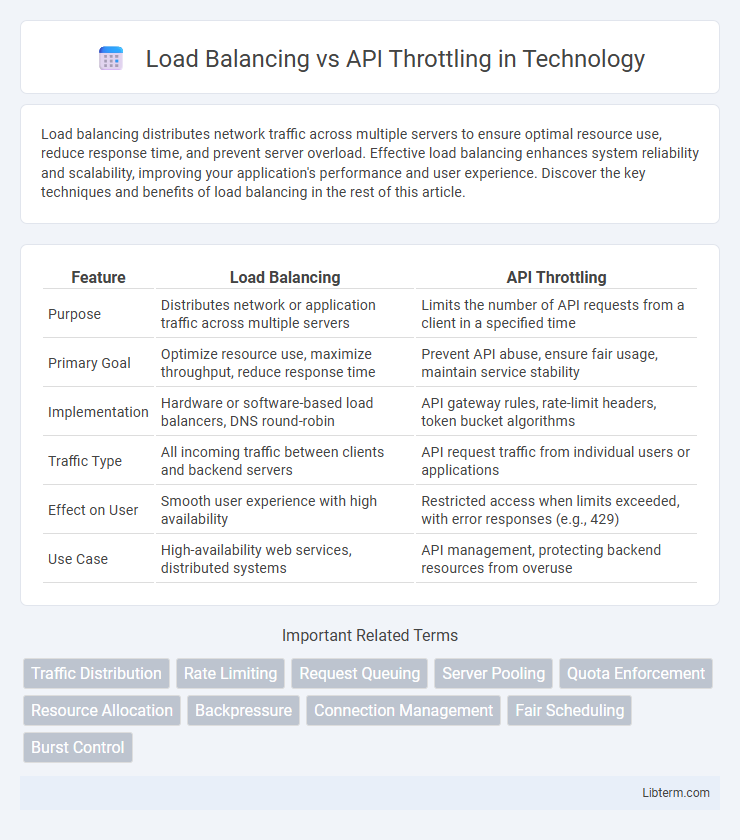

Table of Comparison

| Feature | Load Balancing | API Throttling |
|---|---|---|
| Purpose | Distributes network or application traffic across multiple servers | Limits the number of API requests from a client in a specified time |
| Primary Goal | Optimize resource use, maximize throughput, reduce response time | Prevent API abuse, ensure fair usage, maintain service stability |
| Implementation | Hardware or software-based load balancers, DNS round-robin | API gateway rules, rate-limit headers, token bucket algorithms |
| Traffic Type | All incoming traffic between clients and backend servers | API request traffic from individual users or applications |
| Effect on User | Smooth user experience with high availability | Restricted access when limits are exceeded, with error responses (e.g., 429) |
| Use Case | High-availability web services, distributed systems | API management, protecting backend resources from overuse |

Introduction to Load Balancing and API Throttling

Load balancing distributes incoming network traffic across multiple servers to ensure reliability and optimal resource utilization, preventing any single server from becoming overwhelmed. API throttling controls the rate of API requests by limiting the number of calls a client can make within a specified time frame, protecting backend services from excessive load and ensuring fair usage. Both techniques enhance system performance and availability but target different aspects of traffic management in distributed systems.

What is Load Balancing?

Load balancing is the process of distributing incoming network traffic across multiple servers to ensure no single server becomes overwhelmed, enhancing system reliability and performance. It optimizes resource use, maximizes throughput, and reduces latency by evenly spreading requests. Common load balancing techniques include round-robin, least connections, and IP hash methods.
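The three techniques named above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular load balancer is implemented; the class and function names (`RoundRobin`, `LeastConnections`, `ip_hash`) and the server labels are assumptions made up for the example.

```python
import hashlib
import itertools


class RoundRobin:
    """Hand out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the server that currently has the fewest open connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self):
        # Ties are broken by insertion order of the dict.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when a connection to `server` closes.
        self.active[server] -= 1


def ip_hash(servers, client_ip):
    """Map a client IP to the same server every time (for a fixed server list)."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

Round-robin ignores how busy each server is, least connections adapts to uneven request durations, and IP hash keeps a given client on one server, which can help when session state lives on the backend.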

What is API Throttling?

API throttling controls the number of incoming API requests by limiting how many calls a client can make within a specified time frame, preventing server overload and ensuring fair resource allocation. Unlike load balancing, which distributes traffic across multiple servers to optimize performance and availability, API throttling regulates request rates to maintain system stability and protect backend services from abuse or excessive demand. Effective API throttling strategies include setting rate limits, managing quotas, and signaling clients to back off (for example, with an HTTP 429 response) so that traffic peaks are handled smoothly.
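One common way to enforce a rate limit is the token bucket algorithm mentioned in the comparison table. The sketch below is a minimal single-process version; the `TokenBucket` name, its parameters, and the injectable `clock` argument are assumptions for illustration, not the API of any specific gateway.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock          # injectable so the bucket can be tested
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self, cost=1.0):
        now = self.clock()
        # Add the tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False                # caller would typically respond with 429
```

In a real service there would usually be one bucket per client or API key, and the state would need to be shared if several gateway instances enforce the same limit.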

Key Differences Between Load Balancing and API Throttling

Load balancing evenly distributes incoming network traffic across multiple servers to optimize resource use, minimize response time, and prevent server overload, while API throttling controls the number of API requests a user can make within a specified timeframe to maintain service stability and prevent abuse. Load balancing focuses on traffic distribution for scalability and availability, whereas API throttling enforces usage limits for security and performance management. Understanding these distinctions is crucial for designing robust and efficient network architectures and API management strategies.

Use Cases for Load Balancing

Load balancing efficiently distributes incoming network traffic across multiple servers to ensure high availability and reliability for web applications, making it ideal for handling large-scale user requests and preventing server overload. It excels in scenarios like e-commerce platforms during peak shopping events, streaming services managing millions of concurrent users, and cloud applications requiring seamless scaling. By optimizing resource utilization and minimizing latency, load balancing supports continuous uptime and improved user experience.

Use Cases for API Throttling

API throttling is essential for controlling the rate of requests from clients to prevent server overload and ensure fair resource distribution during peak traffic. Common use cases include protecting backend systems from abuse, managing subscription plans with different rate limits, and enforcing quality-of-service policies in multi-tenant environments. Unlike load balancing, which distributes traffic across servers for reliability and scalability, API throttling focuses on limiting request volumes to maintain API performance and prevent service degradation.
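Subscription plans with different rate limits can be illustrated with a fixed-window counter. Everything specific here is hypothetical: the plan names, the limits in `PLAN_LIMITS`, the 60-second window, and the `FixedWindowLimiter` class are assumptions chosen to keep the sketch small.

```python
import math
import time
from collections import defaultdict

# Hypothetical plans: maximum requests allowed per window.
PLAN_LIMITS = {"free": 2, "pro": 5}
WINDOW_SECONDS = 60


class FixedWindowLimiter:
    def __init__(self, clock=time.time):
        self.clock = clock
        # (client_id, window index) -> requests seen in that window.
        # Old windows are never purged here; a real limiter would expire them.
        self.counts = defaultdict(int)

    def check(self, client_id, plan):
        """Return (status_code, headers) for one incoming request."""
        now = self.clock()
        window = int(now // WINDOW_SECONDS)
        key = (client_id, window)
        if self.counts[key] >= PLAN_LIMITS[plan]:
            # Tell the client how long until the next window starts.
            retry_after = math.ceil((window + 1) * WINDOW_SECONDS - now)
            return 429, {"Retry-After": str(retry_after)}
        self.counts[key] += 1
        return 200, {}
```

Fixed windows are simple but allow up to twice the limit across a window boundary; a token bucket or sliding window smooths that out at the cost of slightly more state.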

Benefits of Load Balancing in Modern Architectures

Load balancing enhances system reliability and scalability by distributing incoming network traffic evenly across multiple servers, preventing overload and ensuring high availability. It optimizes resource utilization, reduces latency, and improves response times, which is crucial for handling increasing user demands in cloud-native and microservices architectures. By enabling seamless failover and fault tolerance, load balancing supports continuous service delivery and resilient infrastructure in modern application environments.
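Failover can be illustrated by a round-robin picker that skips servers marked unhealthy. This is a sketch under assumptions: the `HealthAwareRoundRobin` class and its `mark_down`/`mark_up` methods are invented for the example, and the health checks that would call them are left out.

```python
class HealthAwareRoundRobin:
    """Round-robin over servers, skipping any that are marked down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0

    def mark_down(self, server):
        # Would be called by a health check after repeated failures.
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        # Try each server at most once per call, starting where we left off.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")
```

Traffic keeps flowing as long as at least one server is healthy, and a recovered server rejoins the rotation as soon as it is marked up again.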

Advantages of Implementing API Throttling

API throttling enhances system stability by controlling the rate of incoming requests, preventing server overload and keeping performance consistent. It protects backend services from abuse and ensures fair resource allocation among users, which keeps latency predictable for well-behaved clients and lowers the risk of downtime. By capping excessive traffic, it also limits the impact of abusive or runaway clients and helps maintain service-level agreements (SLAs) for critical applications.

Choosing Between Load Balancing and API Throttling

Load balancing and API throttling solve different problems, so the decision is usually about which to apply where rather than which to pick over the other. Load balancing distributes incoming network requests across multiple servers to optimize resource use and prevent overload, which improves availability and scalability. API throttling limits the number of requests a user or client can make to an API within a specific timeframe, which protects against abuse, ensures fair usage, and prevents server crashes caused by excessive requests. Evaluating system architecture, traffic patterns, and user behavior helps determine the right approach or combination for optimizing API performance and reliability.

Best Practices for Combining Load Balancing and API Throttling

Effective load balancing distributes incoming API requests across multiple servers, preventing any single server from becoming a bottleneck and enhancing system availability. API throttling controls request rates to safeguard backend services against overload and maintain consistent performance levels. Combining load balancing with API throttling involves configuring load balancers to route traffic evenly while applying throttling rules at either the API gateway or service level, ensuring optimized resource utilization and protection against traffic spikes.
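The combination described above can be sketched as a small gateway that throttles each client first and only then picks a backend. The `Gateway` class, its parameters, and the server names are hypothetical, and the sketch assumes a single gateway process with in-memory state.

```python
import itertools
import time


class Gateway:
    """Per-client token-bucket throttling in front of round-robin routing."""

    def __init__(self, servers, rate, burst, clock=time.monotonic):
        self._servers = itertools.cycle(servers)
        self.rate = rate            # tokens refilled per second, per client
        self.burst = burst          # maximum tokens a client can hold
        self.clock = clock
        self._buckets = {}          # client_id -> (tokens, last_refill_time)

    def handle(self, client_id):
        """Return (status_code, chosen_server_or_None) for one request."""
        now = self.clock()
        tokens, last = self._buckets.get(client_id, (float(self.burst), now))
        tokens = min(self.burst, tokens + (now - last) * self.rate)
        if tokens < 1:
            # Rejected requests never reach a backend.
            self._buckets[client_id] = (tokens, now)
            return 429, None
        self._buckets[client_id] = (tokens - 1, now)
        return 200, next(self._servers)
```

Throttling before routing means rejected requests cost the backends nothing. Applying limits at the service level instead is also possible, but then each service instance sees only its share of a client's traffic unless the counters are shared.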

Load Balancing Infographic

libterm.com
