Centralized AI systems consolidate data and processing power in a single location, such as a data center or cloud platform. This enables large-scale analytics, coordinated decision-making, and efficient resource management. Keeping models and data in one place also centralizes security and access control and simplifies maintenance and updates. This article compares centralized AI with edge AI and explains when each approach fits an organization's operations.

Comparison Table

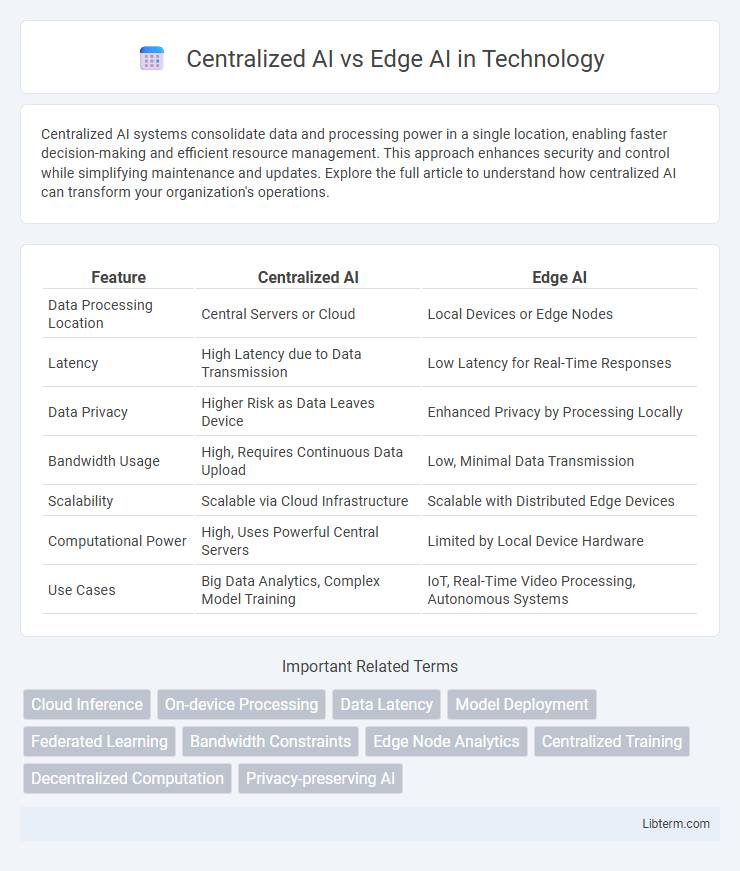

| Feature | Centralized AI | Edge AI |
|---|---|---|
| Data Processing Location | Central Servers or Cloud | Local Devices or Edge Nodes |
| Latency | Higher, due to Network Round Trips | Low, Suited to Real-Time Responses |
| Data Privacy | Higher Risk, as Data Leaves the Device | Enhanced Privacy by Processing Locally |
| Bandwidth Usage | High, Requires Continuous Data Upload | Low, Minimal Data Transmission |
| Scalability | Scalable via Cloud Infrastructure | Scalable by Adding Distributed Edge Devices |
| Computational Power | High, Uses Powerful Central Servers | Limited by Local Device Hardware |
| Use Cases | Big Data Analytics, Complex Model Training | IoT, Real-Time Video Processing, Autonomous Systems |

Introduction to Centralized AI and Edge AI

Centralized AI processes data in a central location, typically a cloud server, leveraging powerful computing resources to perform complex machine learning tasks and analytics. Edge AI, by contrast, executes AI algorithms locally on devices such as sensors, smartphones, or IoT gadgets, enabling real-time data analysis with reduced latency and enhanced privacy. This distinction impacts data transmission, processing speed, and system scalability, driving different applications and use cases across industries.

Core Differences Between Centralized and Edge AI

Centralized AI processes data on powerful cloud servers, where abundant computational resources support complex model training and large-scale data analysis. Edge AI operates directly on local devices, minimizing latency and enhancing data privacy by processing information close to where it is generated. The core differences between Centralized AI and Edge AI lie in latency, data privacy, computational power, and reliance on network connectivity.

Data Processing: Cloud vs Local Devices

Centralized AI processes vast amounts of data on cloud servers, offering powerful computational resources and scalability for complex analytics and model training. Edge AI handles data locally on devices, reducing latency and bandwidth usage while enhancing privacy by minimizing data transmission to the cloud. This localized processing enables real-time decision-making critical for applications like autonomous vehicles and IoT devices.
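To make the bandwidth difference concrete, the sketch below compares upstream traffic for a camera that either sends every frame to the cloud or runs inference on the device and reports only detections. The `StreamConfig` fields and all numbers are hypothetical assumptions chosen for illustration, not measurements.

```python
from dataclasses import dataclass


@dataclass
class StreamConfig:
    # All values are illustrative assumptions, not measured figures.
    frames_per_second: float   # how often the sensor produces a frame
    frame_bytes: int           # size of one encoded frame
    events_per_second: float   # how often the on-device model reports a detection
    event_bytes: int           # size of one detection message (label, box, timestamp)


def centralized_upload_bytes_per_s(cfg: StreamConfig) -> float:
    """Bytes per second sent upstream when every frame is processed in the cloud."""
    return cfg.frames_per_second * cfg.frame_bytes


def edge_upload_bytes_per_s(cfg: StreamConfig) -> float:
    """Bytes per second sent upstream when inference runs on the device
    and only detections are reported."""
    return cfg.events_per_second * cfg.event_bytes


if __name__ == "__main__":
    cfg = StreamConfig(frames_per_second=30, frame_bytes=50_000,
                       events_per_second=0.5, event_bytes=200)
    print(f"centralized: {centralized_upload_bytes_per_s(cfg):,.0f} B/s")
    print(f"edge:        {edge_upload_bytes_per_s(cfg):,.0f} B/s")
```

With these assumed values (30 frames per second at 50 KB each, versus one 200-byte event every two seconds), the centralized path uploads 1,500,000 bytes per second while the edge path uploads 100.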

Latency and Real-Time Decision Making

Centralized AI relies on powerful remote servers for data processing, which can introduce latency due to data transmission delays, impacting the speed of real-time decision making. Edge AI processes data locally on devices or nearby edge servers, significantly reducing latency and enabling immediate real-time responses crucial for applications like autonomous vehicles and industrial automation. Lower latency in Edge AI ensures faster analysis and quicker action, enhancing performance in time-sensitive scenarios compared to centralized approaches.
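A simple latency budget shows why the network hop matters. The sketch below models centralized latency as upload time plus round-trip time plus server inference, and edge latency as on-device inference only. It ignores queuing, download time, and preprocessing, and every input value, including the 50 ms deadline, is an assumption.

```python
def centralized_latency_ms(payload_bytes: int, uplink_mbps: float,
                           rtt_ms: float, server_infer_ms: float) -> float:
    """Upload time + network round trip + inference on the central server."""
    # bits / (bits per second) gives seconds; the extra * 1000 converts to ms
    transfer_ms = payload_bytes * 8 * 1000 / (uplink_mbps * 1_000_000)
    return transfer_ms + rtt_ms + server_infer_ms


def edge_latency_ms(device_infer_ms: float) -> float:
    """No network hop: latency is just inference on the local device."""
    return device_infer_ms


def meets_deadline(latency_ms: float, deadline_ms: float) -> bool:
    return latency_ms <= deadline_ms


if __name__ == "__main__":
    deadline = 50.0  # assumed real-time budget, e.g. one control-loop step
    cloud = centralized_latency_ms(payload_bytes=50_000, uplink_mbps=10,
                                   rtt_ms=60, server_infer_ms=5)
    edge = edge_latency_ms(device_infer_ms=25)
    print(f"centralized {cloud:.0f} ms, meets deadline: {meets_deadline(cloud, deadline)}")
    print(f"edge        {edge:.0f} ms, meets deadline: {meets_deadline(edge, deadline)}")
```

Here the 50,000-byte upload alone takes 40 ms on a 10 Mbps link, so the centralized path totals 105 ms and misses the budget, while a 25 ms on-device model meets it. A faster network or a slower device model can reverse the outcome.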

Security and Privacy Considerations

Centralized AI processes data on cloud servers, where aggregating sensitive information in one place raises concerns about data breaches and unauthorized access. Edge AI performs computations locally on devices, which enhances privacy by minimizing data transmission and reduces how much data is exposed in transit or held in a single central store, although every deployed device becomes a potential attack surface that must itself be secured. Security frameworks for Edge AI use encryption and secure enclaves to protect data on the device, while centralized AI relies heavily on robust cloud security protocols and compliance standards to safeguard user information.
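The privacy benefit of local processing comes largely from data minimization: raw readings stay on the device and only a derived summary is sent. The sketch below illustrates this with Python's standard library, adding an HMAC-SHA256 pseudonym so the backend can group reports by device without receiving its real identifier. The secret, field names, and report format are hypothetical. HMAC is not encryption, so the report would still need an encrypted transport such as TLS.

```python
import hashlib
import hmac
import json
import statistics

# Hypothetical per-deployment secret for illustration only; a real device would
# load it from secure storage (e.g. a hardware-backed keystore), never hard-code it.
DEVICE_SECRET = b"example-secret-do-not-use"


def pseudonymize(device_id: str, secret: bytes = DEVICE_SECRET) -> str:
    """HMAC-SHA256 of the device ID: the backend can group reports from the
    same device without receiving the real identifier."""
    return hmac.new(secret, device_id.encode("utf-8"), hashlib.sha256).hexdigest()


def build_report(device_id: str, raw_readings: list[float]) -> str:
    """Summarize raw readings on the device; only this JSON leaves it."""
    summary = {
        "device": pseudonymize(device_id),
        "count": len(raw_readings),
        "mean": round(statistics.fmean(raw_readings), 3),
        "max": max(raw_readings),
    }
    return json.dumps(summary)


if __name__ == "__main__":
    readings = [21.4, 21.9, 22.3, 23.8]  # raw sensor values stay local
    print(build_report("sensor-42", readings))
```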

Scalability and Infrastructure Requirements

Centralized AI relies on powerful cloud-based servers that offer high scalability by pooling vast computational resources, but demands robust network infrastructure and low-latency connectivity. Edge AI processes data locally on devices, reducing dependency on network bandwidth and providing real-time analysis, though scaling requires deploying consistent hardware across numerous edge nodes. Infrastructure for centralized AI emphasizes data center capacity and internet reliability, whereas edge AI prioritizes distributed hardware efficiency and localized data processing capabilities.
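The two scaling models can be compared with back-of-the-envelope capacity arithmetic. In the sketch below, centralized capacity grows by adding pooled servers as total request volume grows, while edge capacity grows by deploying another node for each local group of devices. The request rate, per-server capacity, and devices-per-node figures are all assumptions.

```python
import math


def central_servers_needed(num_devices: int, requests_per_device_s: float,
                           server_capacity_rps: float) -> int:
    """Servers needed when every device sends every request to the data center."""
    total_rps = num_devices * requests_per_device_s
    return math.ceil(total_rps / server_capacity_rps)


def edge_nodes_needed(num_devices: int, devices_per_node: int) -> int:
    """Edge nodes needed when each node serves a fixed local group of devices."""
    return math.ceil(num_devices / devices_per_node)


if __name__ == "__main__":
    # Assumed: 2 requests/s per device, 500 requests/s per server, 50 devices per node.
    for n in (1_000, 10_000, 100_000):
        print(n, central_servers_needed(n, 2, 500), edge_nodes_needed(n, 50))
```

For 10,000 devices the sketch asks for 40 pooled central servers but 200 edge nodes, each of which has to be provisioned, kept on consistent hardware, and maintained in the field.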

Energy Efficiency and Resource Consumption

Centralized AI relies on large data centers whose intensive computation and cooling requirements consume significant energy and resources. Edge AI processes data locally on devices such as smartphones or IoT sensors, reducing both the energy spent transmitting data and the latency of each response. Because it also lowers bandwidth demand, this localized approach can reduce overall energy use and carbon footprint for real-time applications, although the net saving depends on the size of the model and the efficiency of the device hardware.
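The energy trade-off can be estimated with energy = power × time for each step. The sketch below charges the centralized path for uploading the raw input plus an assumed per-request server cost (standing in for compute and cooling), and the edge path for on-device inference plus uploading a small result. Every power, size, and timing value is a hypothetical input, not a measured figure.

```python
def transmit_energy_j(payload_bytes: int, uplink_mbps: float, radio_power_w: float) -> float:
    """Energy = radio power x time spent transmitting the payload."""
    seconds = payload_bytes * 8 / (uplink_mbps * 1_000_000)
    return radio_power_w * seconds


def centralized_energy_j(payload_bytes: int, uplink_mbps: float,
                         radio_power_w: float, server_energy_j: float) -> float:
    """Upload the raw input, then pay an assumed per-request data-center cost."""
    return transmit_energy_j(payload_bytes, uplink_mbps, radio_power_w) + server_energy_j


def edge_energy_j(result_bytes: int, uplink_mbps: float, radio_power_w: float,
                  infer_ms: float, device_power_w: float) -> float:
    """Run inference locally, then upload only the small result."""
    compute_j = device_power_w * infer_ms / 1000
    return compute_j + transmit_energy_j(result_bytes, uplink_mbps, radio_power_w)


if __name__ == "__main__":
    central = centralized_energy_j(payload_bytes=500_000, uplink_mbps=10,
                                   radio_power_w=1.5, server_energy_j=0.2)
    edge = edge_energy_j(result_bytes=200, uplink_mbps=10, radio_power_w=1.5,
                         infer_ms=50, device_power_w=2.0)
    print(f"centralized ~{central:.3f} J, edge ~{edge:.3f} J per inference")
```

With these assumed inputs the edge path comes out lower, mainly because it avoids uploading the raw data. A heavier on-device model, a less efficient device, or a much smaller input can reverse the result.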

Use Cases: When to Choose Centralized AI

Centralized AI is ideal for use cases that require intensive data processing and complex machine learning models, such as large-scale data analytics, natural language processing, and cloud-based AI services. It works best where data privacy is not a primary concern, because data from multiple sources can be pooled in one place to improve model accuracy. It also suits enterprises that need centralized management, frequent updates, and scalable infrastructure for deploying AI across a global network.

Use Cases: When to Choose Edge AI

Edge AI excels in real-time, low-latency applications such as autonomous vehicles, smart cameras, and industrial automation, where immediate decisions are critical. It is well suited to environments with limited or unreliable internet connectivity, and it improves data privacy by processing information locally instead of sending sensitive data to centralized cloud servers. Devices that need energy efficiency and reduced bandwidth consumption also benefit significantly from Edge AI deployments.
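The criteria in this section and the previous one can be condensed into a rough rule of thumb. The sketch below is a hypothetical, deliberately simplified helper: the `Workload` fields and the rule ordering are assumptions, and a real decision would also weigh cost, regulation, and hardware availability. It returns "hybrid" when a workload wants edge deployment but also needs a model too large for the device.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    latency_budget_ms: float    # how quickly a decision is needed
    network_rtt_ms: float       # typical round trip to the central servers
    connectivity_reliable: bool
    data_is_sensitive: bool
    needs_large_model: bool     # e.g. training, or a model too big for device hardware


def choose_deployment(w: Workload) -> str:
    """Toy rule set reflecting the trade-offs above; not a complete policy."""
    # The cloud is only viable if the link is dependable and the round trip
    # alone leaves room inside the latency budget.
    cloud_viable = w.connectivity_reliable and w.network_rtt_ms < w.latency_budget_ms
    prefers_edge = not cloud_viable or w.data_is_sensitive
    if prefers_edge and w.needs_large_model:
        return "hybrid"  # conflicting needs: split work between device and cloud
    if prefers_edge:
        return "edge"
    return "centralized"


if __name__ == "__main__":
    driving = Workload(latency_budget_ms=20, network_rtt_ms=60,
                       connectivity_reliable=False, data_is_sensitive=False,
                       needs_large_model=False)
    analytics = Workload(latency_budget_ms=5_000, network_rtt_ms=60,
                         connectivity_reliable=True, data_is_sensitive=False,
                         needs_large_model=True)
    print(choose_deployment(driving), choose_deployment(analytics))
```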

Future Trends in AI Deployment Architectures

Future trends in AI deployment architectures emphasize a hybrid approach that integrates centralized AI's high computational power with edge AI's real-time processing and low latency capabilities. Advances in 5G and edge computing hardware enable more intelligent, distributed systems that optimize data privacy and bandwidth usage while maintaining robust AI performance. Emerging frameworks are focusing on seamless orchestration between cloud-based centralized models and decentralized edge devices to support scalable, efficient AI solutions in industries like autonomous vehicles, smart cities, and IoT ecosystems.
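One common way to combine the two, sometimes called a model cascade, is to let a small edge model answer when it is confident and forward harder cases to a larger central model. The sketch below assumes both models are plain callables returning a label and a confidence, and that a network failure surfaces as Python's built-in `ConnectionError`. The 0.8 threshold and the stub models are hypothetical.

```python
from typing import Any, Callable, Tuple

# (label, confidence in [0, 1])
Prediction = Tuple[str, float]


def hybrid_predict(x: Any,
                   local_model: Callable[[Any], Prediction],
                   cloud_model: Callable[[Any], Prediction],
                   threshold: float = 0.8) -> Tuple[str, str]:
    """Answer on the device when the small model is confident; otherwise
    escalate to the larger central model. Returns (label, where_it_ran)."""
    label, confidence = local_model(x)
    if confidence >= threshold:
        return label, "edge"
    try:
        cloud_label, _ = cloud_model(x)
        return cloud_label, "cloud"
    except ConnectionError:
        # Offline or unreachable: keep working with the local answer.
        return label, "edge-fallback"


if __name__ == "__main__":
    def small_model(frame: Any) -> Prediction:  # stand-in for an on-device model
        return ("pedestrian", 0.62)

    def large_model(frame: Any) -> Prediction:  # stand-in for a cloud-hosted model
        return ("cyclist", 0.97)

    print(hybrid_predict("frame-001", small_model, large_model))
```

The design keeps routine decisions local, which preserves low latency and limits data transmission, while still drawing on centralized compute for uncertain cases and degrading gracefully when the network is unavailable.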

Centralized AI Infographic

libterm.com
