HTTP streaming delivers content over the internet by sending it in small chunks over a single long-lived HTTP response, so the client can start processing data without waiting for the entire response to arrive. The same mechanism that enables seamless media playback also lets a server push real-time updates to a browser. This article compares HTTP streaming with long polling, the other common HTTP-based technique for near real-time delivery.

Table of Comparison

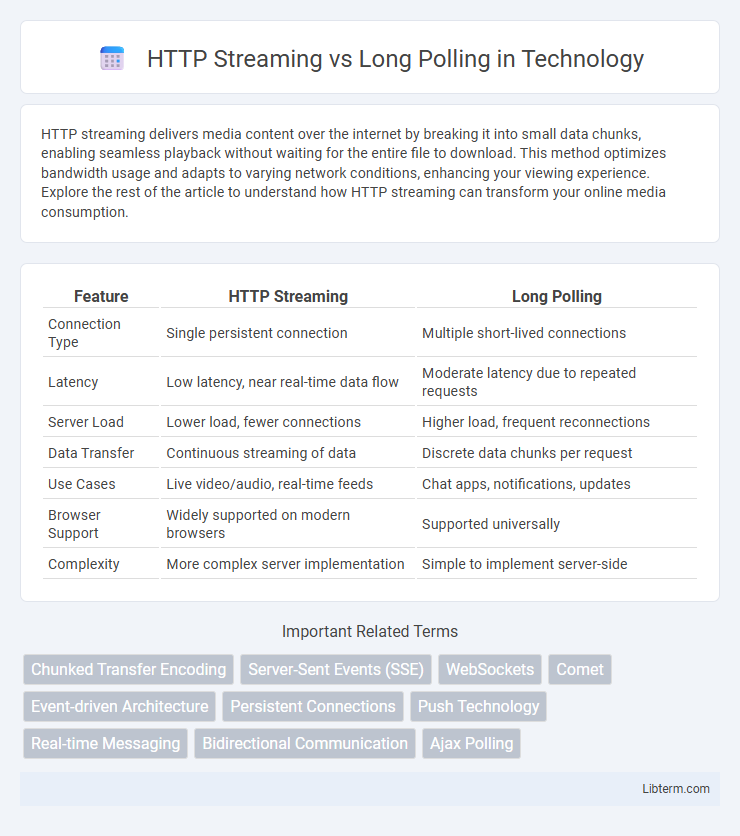

| Feature | HTTP Streaming | Long Polling |
|---|---|---|
| Connection Type | Single persistent connection | Multiple short-lived connections |
| Latency | Low latency, near real-time data flow | Moderate latency due to repeated requests |
| Server Load | Lower load, fewer connections | Higher load, frequent reconnections |
| Data Transfer | Continuous streaming of data | Discrete data chunks per request |
| Use Cases | Live video/audio, real-time feeds | Chat apps, notifications, updates |
| Browser Support | Widely supported on modern browsers | Supported universally |
| Complexity | More complex server implementation | Simple to implement server-side |

Introduction to Real-Time Web Communication

HTTP Streaming maintains an open HTTP connection that continuously sends data from server to client, enabling real-time updates without repeated requests. Long Polling mimics real-time communication by having the client repeatedly send requests and the server hold responses until new data is available. Both techniques address latency issues in real-time web applications but differ in their connection management and resource usage.

Defining HTTP Streaming

HTTP streaming is a web communication technique that allows a server to continuously send data to a client over a single HTTP connection without closing it. This method enables real-time updates by maintaining an open connection, allowing servers to push data efficiently as it becomes available. Compared to long polling, HTTP streaming reduces latency and overhead by avoiding repeated request-response cycles, offering a more seamless and scalable data delivery solution.

Understanding Long Polling

Long Polling is a web communication technique where the client sends a request to the server and the server holds the connection open until new data is available or a timeout occurs, enabling near real-time updates without constant polling. Unlike HTTP Streaming, which keeps the connection continuously open to push data, Long Polling re-establishes the connection after each response, making it compatible with standard HTTP infrastructure. This approach reduces latency and server overhead compared to traditional polling while supporting reliable event-driven communication in web applications.

How HTTP Streaming Works

HTTP Streaming maintains an open HTTP connection between the client and server, allowing continuous data flow without needing to reestablish the connection for each message. The server sends a stream of events or chunks of data incrementally, which the client processes in real-time as they arrive. This method reduces latency and overhead compared to frequent requests, enabling efficient real-time updates for applications like live feeds and chat services.
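One common way to carry such a stream over HTTP/1.1 is chunked transfer encoding, where each piece of data is prefixed with its length in hexadecimal. The article does not name a specific wire format, so treat the following as a minimal sketch under that assumption. The names `encode_chunk` and `ChunkDecoder` are illustrative, not part of any library, and the decoder ignores chunk trailers for brevity.

```python
from typing import List


def encode_chunk(payload: bytes) -> bytes:
    """Frame one payload as an HTTP/1.1 chunk: hex length, CRLF, data, CRLF."""
    return f"{len(payload):X}\r\n".encode("ascii") + payload + b"\r\n"


# A zero-length chunk marks the end of the stream (no trailers assumed).
LAST_CHUNK = b"0\r\n\r\n"


class ChunkDecoder:
    """Incrementally decodes chunked data as bytes arrive from the connection."""

    def __init__(self) -> None:
        self._buffer = b""
        self.finished = False

    def feed(self, data: bytes) -> List[bytes]:
        """Add newly received bytes and return every chunk that is now complete."""
        self._buffer += data
        complete: List[bytes] = []
        while not self.finished:
            header_end = self._buffer.find(b"\r\n")
            if header_end == -1:
                break  # the size line has not fully arrived yet
            # Drop any chunk extension after ';' and parse the hex size.
            size = int(self._buffer[:header_end].split(b";")[0], 16)
            body_start = header_end + 2
            body_end = body_start + size
            if len(self._buffer) < body_end + 2:
                break  # the chunk body and its trailing CRLF are incomplete
            if size == 0:
                self.finished = True  # terminating chunk
            else:
                complete.append(self._buffer[body_start:body_end])
            self._buffer = self._buffer[body_end + 2:]
        return complete


# Simulate a server sending two events, then closing the stream.
wire = encode_chunk(b"event 1\n") + encode_chunk(b"event 2\n") + LAST_CHUNK

decoder = ChunkDecoder()
received: List[bytes] = []
# Deliver the bytes in 5-byte pieces to mimic network fragmentation.
for i in range(0, len(wire), 5):
    received.extend(decoder.feed(wire[i:i + 5]))
```

The client hands each completed chunk to the application as soon as it is decoded, which is what lets updates appear while the response is still open.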

How Long Polling Works

Long Polling operates by the client sending an HTTP request to the server, which holds the request open until new data is available or a timeout occurs. Once the server responds, whether with data or with an empty timeout response, the client immediately sends a new request so that one is almost always pending on the server, effectively simulating real-time communication. This technique reduces latency compared to traditional polling by minimizing the frequency of empty responses while ensuring timely updates.
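The request, hold, respond, and re-request cycle can be sketched without any networking. The example below is an in-process simulation, not a real HTTP server: `LongPollServer`, `handle_poll`, and `poll_until` are hypothetical names, and a thread-safe queue stands in for whatever source of updates a real application would use.

```python
import queue
import threading
import time
from typing import List, Optional, Tuple


class LongPollServer:
    """In-process stand-in for a long-polling endpoint (hypothetical)."""

    def __init__(self) -> None:
        self._messages: "queue.Queue[str]" = queue.Queue()

    def publish(self, message: str) -> None:
        """Make a new update available to a waiting poll."""
        self._messages.put(message)

    def handle_poll(self, timeout: float) -> Optional[str]:
        """Hold the 'request' until a message arrives or the timeout expires."""
        try:
            return self._messages.get(timeout=timeout)
        except queue.Empty:
            return None  # analogous to an empty response after a timeout


def poll_until(server: LongPollServer, expected: int,
               timeout: float) -> Tuple[List[str], int]:
    """Client loop: re-issue a poll after every response until done."""
    received: List[str] = []
    polls = 0
    while len(received) < expected:
        polls += 1
        message = server.handle_poll(timeout)
        if message is not None:
            received.append(message)
        # Data or timeout, the client loops and polls again right away.
    return received, polls


server = LongPollServer()


def publisher() -> None:
    """Produce three updates, spaced out so that each poll has to wait."""
    for i in range(3):
        time.sleep(0.05)
        server.publish(f"update {i}")


threading.Thread(target=publisher, daemon=True).start()
received, polls = poll_until(server, expected=3, timeout=0.5)
```

Each delivered update costs one complete poll, and a poll that times out costs one more without delivering anything. That per-message cost is what the comparison sections below describe as overhead.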

Key Differences Between HTTP Streaming and Long Polling

HTTP Streaming maintains an open connection where the server continuously sends data chunks without ending the response, optimizing real-time data flow and reducing latency. Long Polling involves the client sending a request that the server holds open until new data is available; the server then completes the response and the client must send a new request, which increases overhead through repeated request-response cycles. HTTP Streaming is more efficient for continuous data streams like live video or social feeds, whereas Long Polling suits scenarios with less frequent updates, balancing server resource usage and responsiveness.

Performance Comparison: HTTP Streaming vs Long Polling

HTTP Streaming maintains a persistent connection that continuously sends data from server to client, reducing latency and overhead compared to Long Polling, which must issue a new request for every update. Because the request and response headers are sent only once per stream, the continuous data flow improves response time and server resource utilization, making it more scalable for real-time applications. Long Polling incurs higher CPU and network usage because every update carries a full set of HTTP headers and, where connections are not reused, a new TCP or TLS handshake, which slows performance under heavy load.
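A rough back-of-the-envelope calculation illustrates the header overhead. The sketch below makes several assumptions: the header sizes are illustrative figures, not measurements; every long poll returns exactly one message with no timeouts; connections are reused, so handshake costs are ignored; and the stream uses HTTP/1.1 chunked framing.

```python
def long_polling_bytes(messages: int, payload: int,
                       request_headers: int, response_headers: int) -> int:
    """Each message costs one full request and one full response."""
    return messages * (request_headers + response_headers + payload)


def streaming_bytes(messages: int, payload: int,
                    request_headers: int, response_headers: int) -> int:
    """Headers are paid once; each message adds only chunk framing."""
    # Chunk framing: hex size digits, then CRLF after the size and after the data.
    framing = len(f"{payload:X}") + 4
    terminator = 5  # the final "0\r\n\r\n" chunk
    return (request_headers + response_headers
            + messages * (payload + framing) + terminator)


# Assumed workload: 1000 messages of 50 bytes each,
# with 400-byte request headers and 200-byte response headers.
polling_total = long_polling_bytes(1000, 50, 400, 200)
streaming_total = streaming_bytes(1000, 50, 400, 200)
print(polling_total, streaming_total)  # prints "650000 56605"
```

Under these assumptions long polling transfers roughly eleven times as many bytes, almost all of them headers. The gap narrows as payloads grow or updates become rare.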

Scalability and Resource Usage

HTTP Streaming maintains a persistent connection that continuously sends data, resulting in lower latency and reduced overhead compared to long polling, which completes and reissues a request for every update. This model improves scalability by reducing the number of requests the server must parse and route, especially with many concurrent users, although each connected client still holds one open connection. Long polling can increase CPU and memory usage because of the frequent request setup and teardown, making it less efficient for large-scale applications.

Use Cases and Best Applications

HTTP Streaming is ideal for real-time data delivery in scenarios like live sports updates, financial tickers, or social media feeds where continuous data flow enhances user experience. Long Polling suits use cases such as chat applications, notification systems, or collaborative tools where server push is needed but real-time streaming is impractical, for example when intermediaries such as proxies buffer or time out long-lived responses. Choosing between the two depends on network constraints, latency tolerance, and whether the application needs a persistent connection or only intermittent data fetches.

Choosing Between HTTP Streaming and Long Polling

Choosing between HTTP Streaming and Long Polling depends on application requirements and network efficiency. HTTP Streaming maintains an open connection for continuous data flow, reducing latency and server load during real-time updates, while Long Polling repeatedly requests data by opening and closing connections, increasing overhead. For low-latency, high-frequency data transmission in environments supporting persistent connections, HTTP Streaming is optimal; Long Polling suits scenarios with intermittent updates or limited connection durations.

HTTP Streaming Infographic

libterm.com
